Linearity Breeds Contempt

—Peter Lax

A few weeks ago I went into my office and found a book waiting for me. It was one of the most pleasant surprises I’ve had at work for a long while, a biography of Peter Lax written by Reuben Hersh. Hersh is an emeritus professor of mathematics at the University of New Mexico (my alma mater), and a student of Lax at NYU. The book was a gift from my friend, Tim Trucano, who knew of my high regard and depth of appreciation for the work of Lax. I believe that Lax is one of the most important mathematicians of the 20th Century, and he embodies a spirit that is all too lacking from current mathematical work. It is Lax’s steadfast commitment to, and execution of, great pure math as a vehicle for producing great applied math that the book explicitly reports and implicitly advertises. Lax never saw a divide in math between the two, only complete compatibility between them.

The publisher is the American Mathematical Society (AMS) and the book is a wonderfully technical and personal account of the fascinating and influential life of Peter Lax. Hersh’s account goes far beyond the obvious public and professional impact of Lax into his personal life and family although these are colored greatly by the greatest events of the 20th Century. Lax also has a deep connection to three themes in my own life: scientific computing, hyperbolic conservation laws and Los Alamos. He was a contributing member of the Manhattan Project despite being a corporal in the US Army and only 18 years old! Los Alamos and John von Neumann in particular had an immense influence on his life’s work with the fingerprints of that influence all over his greatest professional achievements.

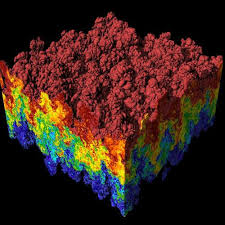

In 1945 scientific computing was just being born having provided an early example in a simulation of the plutonium bomb the previous year. Von Neumann was a visionary in scientific computing having already created the first shock capturing method and realizing the necessity of tackling the solution of shock waves through numerical investigations. The first real computers were a twinkle in Von Neumann’s eye. Lax was exposed to these ideas and along with his mentors at New York University (NYU), Courant and Friedrichs, soon set out making his own contributions to the field. It is easily defensible to credit Lax as being one of the primary creators of the field, Computational Fluid Dynamics (CFD) along with Von Neumann and Frank Harlow. All of these men had a direct association with Los Alamos and access to computers, resources and applications that drove the creation of this area of study.

Lax’s work started with his thesis work at NYU, and continued with a year on staff at Los Alamos from 1949-1951. It is remarkable that upon leaving Los Alamos to take a professorship at NYU his vision of the future technical work in the area of shock waves and CFD had already achieved remarkable clarity of purpose and direction. He spent the next 20 years filling in all the details and laying the foundation for CFD for hyperbolic conservation laws across the world. He returned to Los Alamos every summer for a while and encouraged his students to do the same. He always felt that the applied environment should provide inspiration for mathematics and the problems studied by Los Alamos were weighty and important. Moreover he was a firm believer in the cause of the defense of the Country and its ideals. Surely this was a product of being driven from his native Hungary by the Nazis and their allies.

Lax also comes from a Hungarian heritage that provided some of the greatest minds of the 20th Century, with Von Neumann and Teller being standouts. Their immense intellectual gifts were driven westward to America by the incalculable hatred and violence of the Nazis and their allies in World War 2. Ultimately, the United States benefited by providing these refugees sanctuary against the forces of hate and intolerance. This, among other things, led to the Nazis’ defeat and should provide an ample lesson regarding the values of tolerance and openness as a contrast.

The book closes with an overview of Lax’s major areas of technical achievement in a series of short essays. Lax received the Abel Prize for Mathematics in 2005 because of the depth and breadth of his work in these areas. While hyperbolic conservation laws and CFD were foremost in his resume, he produced great mathematics in a number of other areas. In addition, he provided continuous service to NYU and the United States in broader scientific leadership positions.

Before laying out these topics the book makes a special effort to describe Lax’s devotion to the creation of mathematics that is both pure and applied. In other words beautiful mathematics that stands toe to toe with any other pure math, but also has application to problems in the real world. He has an unwavering commitment to the idea that applied math should be good pure math too. The two are not in any way incompatible. Today too many mathematicians are keen to dismiss applied math as being a lesser topic and beneath pure math as a discipline.

This attitude is harmful to all of mathematics and the root of many deep problems in the field today. Mathematicians far and wide would be well-served to look to Lax as a shining example of how they should think, solve problems, be of service and contribute to a better World.

…who may regard using finite differences as the last resort of a scoundrel that the theory of difference equations is a rather sophisticated affair, more sophisticated than the corresponding theory of partial differential equations.

—Peter Lax

me ten years ago, I’d have thought aliens delivered the technology to humans.

In a sense the modern trajectory of supercomputing is quintessentially American: bigger and faster is better by fiat. Excess and waste are virtues rather than flaws. Except the modern supercomputer is not better, and not just because it doesn’t hold a candle to the old Crays. These computers just suck in so many ways; they are soulless and devoid of character. Moreover they are already a massive pain in the ass to use, and plans are afoot to make them even worse. The unrelenting priority of speed over utility is crushing. Terrible is the only path to speed, and terrible is coming with a tremendous cost too. When a colleague recently quipped that she would like to see us get a computer we actually wanted to use, I’m convinced that she had the older generation of Crays firmly in mind.

We have to go back to the mid-1990s and the combination of computing and geopolitical issues that existed then. The path taken by the classic Cray supercomputers appeared to be running out of steam insofar as improving performance. The attack of the killer micros was defined as the path to continued growth in performance. Overall hardware functionality was effectively abandoned in favor of pure performance. That pure performance was only achieved on benchmark problems that had little in common with actual applications. Performance on real applications took a nosedive, a nosedive that the benchmark conveniently covered up. We still haven’t woken up to the reality.

are also prone to failures where ideas simply don’t pan out. Without the failure you don’t have the breakthroughs, hence the fatal nature of risk aversion. Integrated over decades of timid low-risk behavior we have the makings of a crisis. Our low-risk behavior has already created a vast, immeasurable gulf between what we can do today and what we should be doing today.