The conventional view serves to protect us from the painful job of thinking.

― John Kenneth Galbraith

I chose the name “The Regularized Singularity” because the idea is so important to the conduct of significant computational simulations. For real-world computations, the nonlinearity of the models makes the formation of a singularity almost a foregone conclusion. To remain well behaved and physical, the singularity must be regularized, which means the singular behavior is moderated into something computable. This is almost always accomplished by applying a dissipative mechanism, which effectively imposes the second law of thermodynamics on the solution.


A useful, if not vital, tool is something called “hyperviscosity”. Taken broadly, hyperviscosity covers a wide spectrum of mathematical forms arising in numerical calculations, and I’ll elaborate on a number of the useful forms and options. Basically, a hyperviscosity is a viscous operator with a higher differential order than regular viscosity. As most people know (but I’ll remind them), regular viscosity is a second-order differential operator, directly proportional to a physical value of viscosity. Such viscosities are usually weakly nonlinear functions of the solution, depending on intensive variables (like temperature and pressure) rather than on the structure of the solution. Hyperviscosity falls into two broad categories, the linear form and the nonlinear form.

Unlike most people, I view numerical dissipation as a good thing and an absolute necessity. This doesn’t mean that it should be wielded cavalierly or brutally, because it can be, and that gives computations a bad name. Conventional wisdom dictates that dissipation should always be minimized, but this is wrong-headed. One of the key aspects of important physical systems is the finite amount of dissipation produced dynamically. The asymptotically correct solution with a small viscosity does not have zero dissipation; it has a non-zero amount of dissipation arising from the proper large-scale dynamics. This knowledge is useful in guiding the construction of good numerical viscosities that enable us to efficiently compute solutions to important physical systems.
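A classic worked example makes the point about finite dissipation concrete. The sketch below assumes the viscous Burgers equation $u_t + u u_x = \nu u_{xx}$, whose steady shock (Taylor) profile is $u(x) = -U \tanh(U x / 2\nu)$, and integrates the dissipation rate $\nu \int u_x^2 \, dx$ numerically as $\nu$ shrinks. The helper name and grid parameters are illustrative assumptions, not anything from the original post.

```python
import numpy as np

def burgers_shock_dissipation(nu, U=1.0, L=1.0, n=400001):
    """Dissipation rate nu * integral(u_x**2 dx) of the steady viscous Burgers
    shock u(x) = -U tanh(U x / (2 nu)) on [-L, L] (hypothetical helper)."""
    x = np.linspace(-L, L, n)
    dx = x[1] - x[0]
    u = -U * np.tanh(U * x / (2.0 * nu))
    u_x = np.gradient(u, dx)            # centered differences in the interior
    return nu * np.sum(u_x**2) * dx     # rectangle rule; the integrand decays at +-L

for nu in (1e-1, 1e-2, 1e-3):
    print(f"nu = {nu:.0e}: dissipation = {burgers_shock_dissipation(nu):.4f}")
```

Each value should come out close to $(\Delta u)^3/12 = 2U^3/3 \approx 0.6667$ for $U = 1$, independent of $\nu$: the shock thins as $\nu \to 0$, but the dissipation it produces does not vanish.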

One of the really big ideas to grapple with is the utter futility of using computers to simply crush problems into submission. For most problems of any practical significance this will not happen, ever. In terms of the physics of the problems, this is often the coward’s way out. In my view, if nature were going to submit to our mastery via computational power, it would have already happened. The next generation of computing won’t do the trick either. Progress depends on actually thinking about modeling; the more likely outcome of the brute-force approach is the diversion of resources away from the sort of thinking that allows progress to be made. Most systems do not depend on the intricate details of the problem anyway. The small-scale dynamics are universal and driven by the large scales. The trick to modeling these systems is to unveil the essence and core of the large-scale dynamics leading to what we observe.


Given that we aren’t going to crush our problems out of existence with raw computing power, hyperviscosity ends up being a handy tool to get more out of the computing we have. Viscosity depends upon having enough computational resolution to effectively dissipate energy from the computed system. If the computational mesh isn’t fine enough, the viscosity can’t stably remove the energy and the calculation blows up. This imposes a very stringent limit on what can be computed at achievable resolution.
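To put a rough number on how stringent this limit is, here is a back-of-the-envelope sketch assuming the standard Kolmogorov (K41) estimate: a direct simulation must resolve scales down to $\eta \sim L\,\mathrm{Re}^{-3/4}$, i.e. about $\mathrm{Re}^{3/4}$ points per direction and $\mathrm{Re}^{9/4}$ in three dimensions. Order-one constants are dropped, and the helper name is hypothetical.

```python
def dns_grid_estimate(reynolds):
    """K41 estimate: L/eta ~ Re**(3/4) points per direction, so ~Re**(9/4)
    points in 3D. Order-one constants are ignored (hypothetical helper)."""
    per_direction = reynolds ** 0.75
    return per_direction, per_direction ** 3

for re in (1e4, 1e6, 1e8):
    n1, n3 = dns_grid_estimate(re)
    print(f"Re = {re:.0e}: ~{n1:.1e} points per direction, ~{n3:.1e} points in 3D")
```

Every factor of 100 in Reynolds number costs roughly a factor of 30,000 in grid points, before counting the extra time steps.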

The first form of viscosity to consider is the standard linear form, which in its simplest form is a second-order differential operator, $\nu \partial_{xx} u$. If we apply a Fourier transform to the operator, we can see how simple viscosity works: substituting the Fourier description of the function, $u = \hat{u} e^{ikx}$, into the operator gives $\nu \partial_{xx} u = -\nu k^2 \hat{u} e^{ikx}$. The viscosity grows in magnitude with the square of the wavenumber, $k^2$. Only when the product of the viscosity and the wavenumber squared, $\nu k^2$, becomes large will the operator remove energy from the system effectively.
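The same Fourier argument can be checked on a grid. This sketch assumes a periodic mesh and the standard three-point centered difference for $u_{xx}$; it applies $\nu u_{xx}$ to a single mode $e^{ikx}$ and reports the multiplier, which is the discrete analogue of $-\nu k^2$, namely $-\nu\,(4/\Delta x^2)\sin^2(k\Delta x/2)$. Function names are illustrative.

```python
import numpy as np

def laplacian(u, dx):
    """Second-order centered difference approximation of u_xx (periodic grid)."""
    return (np.roll(u, -1) - 2.0 * u + np.roll(u, 1)) / dx**2

def viscous_symbol(k, nu=1.0, n=64):
    """Apply nu * u_xx to the Fourier mode exp(i k x) and return the multiplier
    it produces (the discrete analogue of -nu * k**2)."""
    dx = 2.0 * np.pi / n
    x = dx * np.arange(n)
    u = np.exp(1j * k * x)
    return (nu * laplacian(u, dx) / u).real.mean()

for k in (1, 4, 16, 32):
    print(f"k = {k:2d}: discrete {viscous_symbol(k):9.2f}   continuous {-k**2:6d}")
```

Well-resolved modes see nearly the full $-\nu k^2$; near the grid scale the discrete operator damps less than the continuous one, which is part of why coarse meshes struggle to remove energy.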

Linear dissipative operators only come from even-order derivatives. Moving to a fourth-order bi-Laplacian operator, it is easy to see how the hyperviscosity works: $-\nu \partial_{xxxx} u = -\nu k^4 \hat{u} e^{ikx}$. The dissipation now kicks in faster with the wavenumber ($k^4$), allowing the simulation to be stabilized at comparatively coarser resolution than the corresponding simulation stabilized only by a second-order viscous operator. As a result, the simulation can attack more dynamic and energetic flows with the hyperviscosity. One detail is that the sign in front of the operator alternates with each step up the ladder, so that the operator always damps: a sixth-order operator, $\nu \partial_{xxxxxx} u = -\nu k^6 \hat{u} e^{ikx}$, returns to a positive sign and attacks the spectrum of the solution even faster, and so on.
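A short sketch of the sign ladder and of why higher order helps. Assuming the operator is written as $(-1)^{m+1}\nu\,\partial_x^{2m}u$, its Fourier symbol is $-\nu k^{2m}$ for every $m$. If each order's coefficient is normalized (an assumption made here for comparison) so that the grid-scale mode $k_{max} = \pi/\Delta x$ decays by $1/e$ in the same time, the surviving amplitude at wavenumber $k$ is $\exp(-(k/k_{max})^{2m})$.

```python
import numpy as np

def operator_symbol(k, m, nu=1.0):
    """Fourier symbol of (-1)**(m+1) * nu * d^(2m)/dx^(2m) acting on exp(i k x).
    The alternating prefactor makes the symbol -nu * k**(2m) for every m."""
    sign = (-1) ** (m + 1)
    return (sign * nu * (1j * k) ** (2 * m)).real

def damping_factor(k_ratio, m):
    """Amplitude exp(-(k/k_max)**(2m)) left after the time in which the grid-scale
    mode k_max = pi/dx has decayed by 1/e (coefficient normalized for each order)."""
    return np.exp(-np.asarray(k_ratio, dtype=float) ** (2 * m))

ratios = np.array([0.25, 0.5, 1.0])
for m in (1, 2, 3):
    print(f"order {2*m}: prefactor sign {(-1) ** (m + 1):+d}, "
          f"damping at k/k_max = {ratios.tolist()}: {np.round(damping_factor(ratios, m), 4)}")
```

All three orders hit the grid scale equally hard, but at half the grid wavenumber the sixth-order operator leaves about 98% of the amplitude versus about 78% for ordinary viscosity.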

Taking the linear approach to hyperviscosity is simple, but it has a number of drawbacks from a practical point of view. First, the linear hyperviscosity operator becomes quite broad in its extent as its order increases. The method is also still predicated on a relatively well-resolved numerical solution and does not react well to discontinuous solutions. As such, linear hyperviscosity is not entirely robust for general flows. It is better used as an additional dissipation mechanism alongside more industrial-strength methods, and for studies of a distinctly research flavor. Fortunately, a class of methods removes most of these difficulties: nonlinear hyperviscosity. Nonlinear is almost always better, or so it seems; not easier, but better.
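The breadth problem is easy to quantify. As a sketch, assuming the standard centered differences built by repeatedly applying the three-point Laplacian $[1, -2, 1]$, the stencil for a $2m$-th derivative spans $2m + 1$ points; the helper name is illustrative.

```python
import numpy as np

def centered_stencil(m):
    """Coefficients (times dx**(2m)) of the standard centered difference for
    d^(2m)/dx^(2m), built by repeatedly convolving the [1, -2, 1] Laplacian."""
    stencil = np.array([1])
    for _ in range(m):
        stencil = np.convolve(stencil, [1, -2, 1])
    return stencil

for m in (1, 2, 3, 4):
    s = centered_stencil(m)
    print(f"order {2*m}: width {s.size} points, coefficients {s.tolist()}")
```

The alternating, growing coefficients are also why these operators ring near discontinuities: a jump anywhere within the stencil excites every point of it.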

Linearity breeds contempt

– Peter Lax

The first nonlinear viscosity came from Prandtl’s mixing length theory, which still forms the foundation of most practical turbulence modeling today. For numerical work, the original shock viscosity derived by Richtmyer is the simplest hyperviscosity possible, $\partial_x \left( C \ell^2 \left| \partial_x u \right| \partial_x u \right)$. Here $\ell$ is a relevant length scale for the viscosity; in purely numerical work, $\ell = \Delta x$, the mesh spacing. It provides what linear hyperviscosity cannot, stability and robustness, taking flows that would otherwise be computed with pervasive instability and making them stable and practically useful. It provides the fundamental foundation for shock capturing and the ability to compute discontinuous flows on grids. In many respects the entire CFD field is grounded upon this method. The notable aspect of the method is the dependence of the dissipation on the product of the coefficient, $C \ell^2$, and the absolute value of the gradient of the solution.
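A minimal 1D sketch of a Richtmyer-type term, assuming the form $\partial_x ( C \Delta x^2 |\partial_x u| \partial_x u )$ (that is, $\ell = \Delta x$), a periodic grid, and interface-centered differences; the function names are hypothetical. It checks two properties: the term can only remove energy, since summation by parts gives a rate of $-\sum_j \nu_{j+1/2}(u_{j+1}-u_j)^2/\Delta x \le 0$, and on smooth data the coefficient shrinks like $\Delta x^2$.

```python
import numpy as np

def nonlinear_viscosity_term(u, dx, C=1.0):
    """Discrete d/dx( C dx^2 |u_x| u_x ) on a periodic 1D grid (ell = dx).
    Gradients and the viscosity coefficient live at interfaces j+1/2."""
    grad = (np.roll(u, -1) - u) / dx       # u_x at j+1/2
    nu = C * dx**2 * np.abs(grad)          # nonnegative, solution-dependent viscosity
    flux = nu * grad                       # viscous flux at j+1/2
    return (flux - np.roll(flux, 1)) / dx  # (F_{j+1/2} - F_{j-1/2}) / dx at cell j

def energy_rate(u, dx, C=1.0):
    """d/dt of 0.5*sum(u**2)*dx due to the viscous term alone."""
    return np.sum(u * nonlinear_viscosity_term(u, dx, C)) * dx

def max_coefficient(n, C=1.0):
    """Largest viscosity coefficient for u = sin(x) sampled on n periodic points."""
    dx = 2.0 * np.pi / n
    u = np.sin(dx * np.arange(n))
    return np.max(C * dx**2 * np.abs((np.roll(u, -1) - u) / dx))

rng = np.random.default_rng(0)
print("energy rate on rough data:", energy_rate(rng.standard_normal(128), 0.1))
print("coefficient ratio, n=64 vs n=128:", max_coefficient(64) / max_coefficient(128))
```

The ratio comes out close to 4, so on resolved data the viscosity fades quadratically with mesh refinement while still acting wherever gradients are steep.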

Looking at the functional form of the artificial viscosity, one sees that it is very much like Prandtl’s mixing length model of turbulence. The simplest model used for large eddy simulation (LES) is the Smagorinsky model, developed by Joseph Smagorinsky and used in the first three-dimensional model of the global circulation. This model is significant as the first LES, and it is a precursor of the modern codes used to predict climate change. The LES subgrid model is really nothing more than Richtmyer’s (and Von Neumann’s) artificial viscosity, used to stabilize the calculation against the instability that invariably creeps in with enough simulation time. The suggestion to do this was made by Jules Charney upon seeing early weather simulations. That the first useful numerical method for capturing shock waves and the first model for computing turbulence are one and the same is rarely commented upon. I believe this connection is important and profound. Equally valid arguments can be made that the form of nonlinear dissipation is fated by the dimensional form of the governing equations and the resulting dimensional analysis.
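The kinship can be checked directly. This sketch uses the standard Smagorinsky form $\nu_t = (C_s\Delta)^2 |S|$ with $|S| = \sqrt{2 S_{ij} S_{ij}}$ on a 2D grid; $C_s = 0.17$ is an assumed, commonly quoted value, $\Delta = \sqrt{\Delta x \Delta y}$ is an assumed filter width, and the function name is illustrative. For a purely one-dimensional velocity field (not divergence-free, used only to compare functional forms), the model collapses to the Richtmyer form $C \ell^2 |u_x|$ with $C = \sqrt{2} C_s^2$ and $\ell = \Delta x$.

```python
import numpy as np

def smagorinsky_viscosity(u, v, dx, dy, Cs=0.17):
    """Smagorinsky eddy viscosity nu_t = (Cs*Delta)**2 * |S| on a 2D grid,
    with |S| = sqrt(2 S_ij S_ij). Arrays are indexed [y, x]; Cs is assumed."""
    du_dy, du_dx = np.gradient(u, dy, dx)
    dv_dy, dv_dx = np.gradient(v, dy, dx)
    s11, s22 = du_dx, dv_dy
    s12 = 0.5 * (du_dy + dv_dx)
    s_mag = np.sqrt(2.0 * (s11**2 + s22**2 + 2.0 * s12**2))
    delta = np.sqrt(dx * dy)          # filter width tied to the mesh
    return (Cs * delta) ** 2 * s_mag

# A one-dimensional field: u = sin(x) varies only along x, v = 0
n = 32
dx = dy = 2.0 * np.pi / n
x = dx * np.arange(n)
X, Y = np.meshgrid(x, x)
u = np.sin(X)
v = np.zeros_like(u)
nu_t = smagorinsky_viscosity(u, v, dx, dy)

# Richtmyer-type coefficient C * ell**2 * |u_x| with C = sqrt(2) * Cs**2, ell = dx
u_x = np.gradient(u, dy, dx)[1]
richtmyer_form = np.sqrt(2.0) * 0.17**2 * dx**2 * np.abs(u_x)
print("Smagorinsky matches the Richtmyer form:", np.allclose(nu_t, richtmyer_form))
```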

Before I derive a general form for the nonlinear hyperviscosity, I should discuss another shortcoming of linear hyperviscosity. In its simplest form, classical linear viscosity is a positive-definite operator. Applied in a numerical solution, it keeps positive quantities positive. This is actually a form of strong nonlinear stability. The solutions will satisfy discrete forms of the second law of thermodynamics and provide so-called “entropy solutions”. In other words, the solutions are guaranteed to be physically relevant.

This isn’t generally considered important for viscosity, but in the context of more complex systems of equations it may be. The key reason for bringing it up is that, generally speaking, linear hyperviscosity will not have this property, but we can build nonlinear hyperviscosity that preserves it. At some level this probably explains the utility of nonlinear hyperviscosity for shock capturing. In nonlinear hyperviscosity we have immense freedom in designing the viscosity as long as we keep it positive. We then have a positive viscosity multiplying a positive-definite operator, which provides the deep form of stability we want along with a guarantee of physically relevant solutions.
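The loss of positivity with linear hyperviscosity is easy to see numerically. This sketch assumes explicit forward-Euler time stepping on a periodic grid with the standard centered stencils, and takes one step of linear second-order and linear fourth-order viscosity on a nonnegative box profile, each at a stable step size; the function names are illustrative.

```python
import numpy as np

def step_second_order(u, r):
    """One explicit step of u_t = nu u_xx; r = nu*dt/dx**2 (monotone for r <= 1/2)."""
    return u + r * (np.roll(u, -1) - 2.0 * u + np.roll(u, 1))

def step_fourth_order(u, r):
    """One explicit step of u_t = -nu u_xxxx; r = nu*dt/dx**4 (stable for r <= 1/8)."""
    d4 = (np.roll(u, -2) - 4.0 * np.roll(u, -1) + 6.0 * u
          - 4.0 * np.roll(u, 1) + np.roll(u, 2))
    return u - r * d4

u0 = np.zeros(64)
u0[24:40] = 1.0                       # nonnegative box profile with two jumps
print("second-order minimum:", step_second_order(u0, 0.4).min())
print("fourth-order minimum:", step_fourth_order(u0, 0.1).min())
```

For $r \le 1/2$ the second-order update is a convex combination of neighboring values, so the minimum stays at 0. The fourth-order update produces a value of $-0.1$ two cells outside each jump, even though it is run at a stable step size.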


With the basic principles in hand, we can go wild and derive forms for the hyperviscosity that are well suited to whatever we are doing. If we have a method with high-order accuracy, we can derive a hyperviscosity to stabilize the method that will not intrude on its accuracy. For example, say we have a fourth-order accurate method; then we want a viscosity with at least a fifth-order operator, such as $-\partial_{xx}\left( C \ell^4 \left| \partial_x u \right| \partial_{xx} u \right)$. If one wanted better high-frequency damping, a different form would work, like $-\partial_{xx}\left( C \ell^5 \left| \partial_{xx} u \right| \partial_{xx} u \right)$. To finish the generalization of the idea, consider an eighth-order method; now a ninth- or tenth-order viscosity would work, for example, $-\partial_{xxxx}\left( C \ell^8 \left| \partial_x u \right| \partial_{xxxx} u \right)$. The point is that one can exercise immense flexibility in deriving a useful method.
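As a sketch of the recipe (a nonnegative coefficient multiplying a positive-definite operator), here is one possible fifth-order form, assumed for illustration: $-\partial_{xx}( C \Delta x^4 |\partial_x u| \partial_{xx} u )$ on a periodic grid with centered differences. The function names are hypothetical. Because the discrete second difference is symmetric on a periodic grid, the energy rate is $-\sum_j \nu_j (\delta^2 u_j)^2 \Delta x \le 0$, and on smooth data it should scale like $\Delta x^4$.

```python
import numpy as np

def d2(u, dx):
    """Centered second difference on a periodic grid (a symmetric operator)."""
    return (np.roll(u, -1) - 2.0 * u + np.roll(u, 1)) / dx**2

def fifth_order_hyperviscosity(u, dx, C=1.0):
    """Hypothetical form -d2( C dx**4 |u_x| d2(u) ): a nonnegative coefficient
    multiplying the positive-definite operator d2(nu * d2(.))."""
    u_x = (np.roll(u, -1) - np.roll(u, 1)) / (2.0 * dx)
    nu = C * dx**4 * np.abs(u_x)
    return -d2(nu * d2(u, dx), dx)

def hyper_energy_rate(u, dx, C=1.0):
    """Rate of change of 0.5*sum(u**2)*dx due to the hyperviscous term alone."""
    return np.sum(u * fifth_order_hyperviscosity(u, dx, C)) * dx

def smooth_rate(n):
    """Energy rate for u = sin(x) sampled on n periodic points."""
    dx = 2.0 * np.pi / n
    return hyper_energy_rate(np.sin(dx * np.arange(n)), dx)

rng = np.random.default_rng(1)
print("energy rate on rough data:", hyper_energy_rate(rng.standard_normal(128), 0.1))
print("smooth-data rate ratio, n=64 vs n=128:", smooth_rate(64) / smooth_rate(128))
```

The ratio lands near 16, consistent with the $\Delta x^4$ scaling that keeps the term from intruding on a fourth-order method.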

I’ll finish with a brief observation about how to apply these ideas to systems of conservation laws, $\partial_t U + \partial_x F(U) = 0$. This system of equations will have characteristic speeds, $\lambda$, determined by the eigen-analysis of the flux Jacobian, $A = \partial F / \partial U$. A reasonable way to think about hyperviscosity would be to write the nonlinear version as $\partial_x \left( C \ell^{n+1} \left| \partial_x^{n} \lambda \right| \partial_x U \right)$, where $n$ is the number of derivatives to take. A second approach that would work with Godunov-type methods would compute the absolute value of the jump in the characteristic speeds at the cell interfaces where the Riemann problem is solved, and use it to set the magnitude of the viscous coefficient. This jump is of the order of the approximation error, and it would multiply the cell-centered jump in the variables, $F^{hv}_{j+1/2} = -C \left| \lambda^{R}_{j+1/2} - \lambda^{L}_{j+1/2} \right| \left( U_{j+1} - U_j \right)$. This would guarantee proper entropy production through the hyperviscous flux, which would augment the flux computed via the Riemann solver. The hyperviscosity would not impact the formal accuracy of the method.
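A minimal sketch of the interface-jump idea, with several assumptions labeled up front: the 1D shallow-water equations stand in for a general system (speeds $u \pm \sqrt{gh}$), the left/right interface states come from an unlimited central-slope linear reconstruction, the coefficient takes the maximum jump over the waves, and $F^{hv}_{j+1/2} = -C\,\max_k |\lambda_k(U^R) - \lambda_k(U^L)|\,(U_{j+1} - U_j)$. None of these names or choices come from the original post; this is not the author's implementation.

```python
import numpy as np

G = 9.81  # gravitational acceleration; shallow water is an assumed stand-in system

def characteristic_speeds(U):
    """Eigenvalues u - sqrt(g h), u + sqrt(g h) of the shallow-water flux Jacobian
    for states U = [h, h u] stored as rows (shape (n, 2))."""
    h, hu = U[:, 0], U[:, 1]
    u = hu / h
    c = np.sqrt(G * h)
    return np.stack([u - c, u + c], axis=1)

def interface_states(U):
    """Unlimited piecewise-linear reconstruction with central slopes (periodic grid).
    Returns left/right states at each interface j+1/2."""
    slope = 0.5 * (np.roll(U, -1, axis=0) - np.roll(U, 1, axis=0))
    U_L = U + 0.5 * slope                       # extrapolated from cell j
    U_R = np.roll(U - 0.5 * slope, -1, axis=0)  # extrapolated from cell j+1
    return U_L, U_R

def hyperviscous_flux(U, C=1.0):
    """F_hv at j+1/2 = -C * max_k |lambda_k(U_R) - lambda_k(U_L)| * (U_{j+1} - U_j),
    meant to be added to whatever flux the Riemann solver returns."""
    U_L, U_R = interface_states(U)
    dlam = np.abs(characteristic_speeds(U_R) - characteristic_speeds(U_L))
    coef = C * dlam.max(axis=1)                 # one scalar coefficient per interface
    dU = np.roll(U, -1, axis=0) - U             # cell-centered jump U_{j+1} - U_j
    return -coef[:, None] * dU

n = 64
x = 2.0 * np.pi / n * np.arange(n)
smooth = np.stack([1.0 + 0.1 * np.sin(x), np.zeros(n)], axis=1)
dam_break = np.stack([np.where(x < np.pi, 2.0, 1.0), np.zeros(n)], axis=1)
print("max |F_hv|, smooth:   ", np.abs(hyperviscous_flux(smooth)).max())
print("max |F_hv|, dam break:", np.abs(hyperviscous_flux(dam_break)).max())
```

On the smooth profile the speed jump is tiny, so the added flux is negligible; at the dam-break discontinuity it is of order one, which is where dissipation is needed.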

We can not solve our problems with the same level of thinking that created them

― Albert Einstein

I spent the last two posts railing against the way science works today and its rather dismal reflection in my professional life. I’m taking a week off from that. It wasn’t that last week was any better; it was actually worse. The rot in the world of science is deep, but that rot is simply part of the larger World to which science belongs. Events last week were even more appalling and pregnant with concerns. Maybe if I turn away and focus on something positive, things will seem better, or simply more tolerable. Soon I have a trip to Washington, into the proverbial belly of the beast; it should be entertaining at the very least.

Till next Friday, keep all your singularities regularized.

Think before you speak. Read before you think.

― Fran Lebowitz

Von Neumann, John, and Robert D. Richtmyer. “A method for the numerical calculation of hydrodynamic shocks.” Journal of Applied Physics 21.3 (1950): 232-237.

Borue, Vadim, and Steven A. Orszag. “Local energy flux and subgrid-scale statistics in three-dimensional turbulence.” Journal of Fluid Mechanics 366 (1998): 1-31.

Cook, Andrew W., and William H. Cabot. “Hyperviscosity for shock-turbulence interactions.” Journal of Computational Physics 203.2 (2005): 379-385.

Smagorinsky, Joseph. “General circulation experiments with the primitive equations: I. The basic experiment.” Monthly Weather Review 91.3 (1963): 99-164.

Sometimes the blog is just an open version of a personal journal. I feel torn between wanting to write about some thoroughly nerdy topic that holds my intellectual interest (like hyperviscosity, for example) and ending up ranting about some aspect of my professional life (like last week). I genuinely felt that the rant from last week would be followed this week by a technical post because things would be better. Was I ever wrong! This week is even more appalling! I’m getting to see the rollout of the new national program reaching for Exascale computers. As deeply problematic as the current NNSA program might be, it is a paragon of technical virtue compared with the broader DOE program. It’s as if we already had a President Trump in the White House to lead our Nation over the brink toward chaos, stupidity and making everything an episode in the World’s scariest reality show. Electing Trump would just make the stupidity obvious; make no mistake, we are already stupid.


I think there are parallels that are worth discussing in depth. Something big is happening, and right now it looks like a great unraveling. People are choosing sides, and the outcome will determine the future course of our World. On one side we have the forces of conservatism, which want to preserve the status quo by applying fear to control the populace. This breeds control, lack of initiative, deprivation and a herd mentality. The prime directive for the forces on the right is the maintenance of the existing structures of power in society. The forces of liberalization and progress are arrayed on the other side, wanting freedom, personal meaning, individuality, diversity, and new societal structures. These forces are colliding on many fronts, and the collision is starting to turn violent. The outcome still hangs in the balance.

The Internet is a great liberalizing force, but it also provides a huge amplifier for ignorance, propaganda and the instigation of violence. It is simply a technology, and it is not intrinsically good or bad. On the one hand, the Internet allows people to connect in ways that were unimaginable mere years ago, and it allows access to incredible levels of information. On the other hand, the same thing creates immense fear in society because new social structures are emerging. Some of these structures are criminal or terrorist, some are dissidents against the establishment, and some are viewed as immoral. For the general public, the availability of information becomes an overload. This works in favor of the establishment, which benefits from propaganda and ignorance. The result is a distinct tension between knowledge and ignorance, freedom and tyranny, hinging upon fear and security. I can’t see who is winning, but the signs are not good.


The core of the issue is an unhealthy relationship to risk, fear and failure. Our management is focused upon controlling risk, fearing bad things, and outright avoiding failure. The result is an implemented culture of caution and compliance, manifesting itself as a gulf of leadership. Management becomes about budgets and money while completely losing sight of purpose and direction. The focus on leading ourselves in new directions gets lost. The ability to take risks is destroyed by fear, above all the fear of failure. People are so wrapped up in trying to avoid ever fucking up that all the energy behind doing progressive things is completely sapped. We are so tied to compliance that plans must be followed even when they make no sense at all.

ry small degree. Our managers are human and limited in their capacity for complexity and in the time available to provide focus. If all of the focus is applied to management, nothing is left for leadership. The impact is clear: the system is full of management assurance, compliance and surety, yet virtually absent of vision and inspiration. We are bereft of aspirational perspectives with clear goals looking forward. The management focus breeds an incremental approach that grounds future vision entirely on what is possible today. All of this is brewing in a sea of risk aversion and intolerance for failure.


that I work for. It has resulted in the wholesale divestiture of quality, because quality no longer matters to success. It is creating a thoroughly awful and untenable situation where truth and reality are completely detached from how we operate. Every time something of low quality is allowed to be characterized as high quality, we undermine our culture. The capability to make real progress is undermined because progress is extremely difficult and prone to failure and setbacks. It is much easier to simply move along incrementally, doing what we are already doing. We know that will work, and frankly those managing us don’t know the difference anyway. Doing what we are already doing is simply the status quo and orthogonal to progress.

micromanagement. Each step in micromanagement imposes another tax on the time and energy of everyone impacted by the system and diminishes the good that can be done. In essence, we are draining our system of the energy needed to create positive outcomes. The management system is unremittingly negative in its focus, trying to stop stuff from happening rather than enabling it. It is ultimately a losing battle, and it is gutting our ability to produce great things.


management work shouldn’t be done in the abstract. Almost all of the management activities are good ideas, and “good”. They are bad in the sense of what they displace from the efforts we have the time and energy to engage in. We all have limits in terms of what we can reasonably achieve. If we spend our energy on good but low-value activities, we do not have the energy to focus on difficult, high-value activities. A lot of these management activities are good, easy, and time-consuming, and they directly displace lots of hard, high-value work. The core of our problem is the inability to focus sufficient energy on hard things. Without focus, the hard things simply don’t get done. This is where we are today: consumed by easy, low-value things, and lacking the energy and focus to do anything truly great.

s a general ambiguity regarding the purpose of our Labs, the goals of our science and the importance of the work. None of this is clear. It is the generic implication of the lack of leadership within the specific context of our Labs, or of federally supported science. It is probably a direct result of a broader and deeper vacuum of leadership nationally, infecting all areas of endeavor. We have no visionary or aspirational goals as a society either.

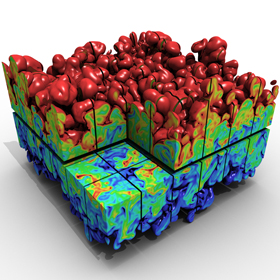


I’ll write the equation in TeX and show all of you a picture; you can make out a little of my other ink too, a lithium-7 atom and a Rayleigh-Taylor instability (I also have my favorite dog’s paw on my right shoulder and the famous von Kármán vortex street on my forearm). The equation is how I think about the second law of thermodynamics in operation, through the application of a vanishing viscosity principle that ties the solution of equations to a concept of physical admissibility. In other words, I believe in entropy and its power to guide us to solutions that matter in the physical world.