The best dividends on the labor invested have invariably come from seeking more knowledge rather than more power.

– The Wright brothers

Some messages are so important that they need to be repeated over and over. This is one of those times. Computing is mostly not about computers. A computer is a tool: powerful, important and unrelentingly useful, but a tool. Computing is a fundamentally human activity that uses a powerful tool to augment the human capacity for calculation and monotonous work. Today we see attitudes that express more interest in the computers themselves than in how they are used. The computers are essential tools that enable a certain level of utility, but the holistic human activity is at the core of what they do. This holistic approach is exactly the spirit that has been utterly lost in the current high performance computing push. In a deep way the program lacks the humanity in its composition that is absolutely necessary for progress.

Most clearly, computers are not an end in themselves; they are useful only insofar as they help solve problems for mankind. The benefits for humanity derive from taking human thinking and augmenting it. Computing is our imagination and inspiration, automated so as to enable solutions through primarily approximate means. The true benefits of computing come from the fields of physics, engineering, medicine, biology, chemistry and mathematics. Subjects closer to the practice of computing do not necessarily push benefits forward to society at large. It is this break in the social contract that current high performance computing has entirely ignored. The societal end product is a mere afterthought, little more than a marketing ploy for a seemingly unremitting focus on computer hardware.

Mathematics is the door and key to the sciences.

— Roger Bacon

This approach is destined to fail, or at the very least not to reap the benefits the investment should yield. It is completely and utterly inconsistent with the venerated history of scientific computing. The key to the success and impact of scientific computing has been its ability to augment its foundational fields, supplementing innate human intellect in an area where human ability is somewhat limited. While it supplies raw computational power, the impact of the field depends entirely on natural human talent as expressed in the base science and mathematics. One place of natural connection is the mathematical expression of the knowledge in basic science. Among the greatest sins of modern scientific computing is the diminished role of mathematics in the march toward progress.

Computing should never be an excuse not to think, yet the truth is that computing has become exactly that: an excuse to stop thinking and simply get “answers” automatically. The importance of the connection to mathematics cannot be overstated. It is the complete and total foundation of computing. This is where the current programs become completely untethered from logic, common sense and the basic recipe for success. The mathematics program is virtually absent from the drive toward greater scientific computing. For example, I work in an organization that is devoted to applied mathematics, yet virtually no mathematics actually takes place. Our applied mathematics programs have turned into software programs. Somehow the decision was made 20-30 years ago that software “weaponized” mathematics, and in the process the software became the entire enterprise, and the mathematics itself became lost, an afterthought of the process. Without the actual mathematical foundation for computing, important efficiencies, powerful insights and structural understanding are sacrificed.

Software has become the major product and end point of almost all research efforts in mathematics, to the point of displacing actual math. The product of the work needs to be expressed in software, and the construction and maintenance of software packages has become the major enterprise being conducted. In the process the centrality of mathematical exploration and discovery has been submerged. Software is a difficult, valuable and important endeavor in itself, but it is distinct from mathematics. In many cases the software itself has become the raison d’être for math programs. With the emphasis on software that instantiates mathematical ideas, the production of new mathematics and the assault on open mathematical problems have stalled. Mathematics has lost its centrality to the enterprise. This is horrible because there is so much yet to do.

Worse yet, mathematical software is horribly expensive to maintain and loses its modernity at a frightful pace. We hear calls to preserve the code base because it was so expensive. A preserved code base loses its value more surely than a car depreciates. The software is only as good as the intellect of the people maintaining it. In the process we lose intellectual ownership of the code. This is beyond the horrible accumulation of technical debt in the software, which erodes its value like mold or dry rot. Yet none of these problems is the worst of the myriad issues around this emphasis; the worst is the opportunity cost of turning our mathematicians into software engineers and removing their attention from some of our most pressing issues.

A single discovery of a new concept, principle, algorithm or technique can render one of these software packages completely obsolete. We seem to be in an era where we believe that more computer power is all that is needed to bring reality to heel. Yet such discoveries can allow results and efficiencies that were completely unthinkable to be achieved. Discoveries make the impossible possible, and we are denying ourselves the possibility of these results through our inept management of mathematics’ proper role in scientific computing. What might be some of the important topics in need of refined and focused mathematical thinking?

The work of Peter Lax and others has brought great mathematical understanding, discipline and order to the world of shock physics. Amazingly, this has all happened in one dimension plus time. In two or three dimensions, where the real world happens, we know far less. As a result our knowledge of and mastery over the equations of (compressible) fluid dynamics is limited and incomplete. Bringing order and understanding to the real world of fluids could have a massive impact on our ability to solve realistic problems. Today we largely exist on the faith that our limited one-dimensional knowledge gives us the key to multi-dimensional real-world problems. A program to expand our knowledge and fill these gaps would be a boon to analytical and numerical methods, seeding a new renaissance for scientific computing, physics and engineering.

One of the keys to understanding the power of computing is the comprehension that the ability to compute rests on a deep understanding built from analytical, physical and domain-specific knowledge. A problem intimately related to the multi-dimensional issues with compressible fluids is the topic of one of the Clay prizes. This is a million dollar prize for proving the existence of solutions to the Navier-Stokes equations. There is a deep problem with the way this problem is posed that may make its solution both impossible and practically useless. The equations posed in the problem statement are fundamentally wrong. They are wrong physically, not mathematically, although this wrongness has mathematical consequences. In a very deep practical way fluids are never truly incompressible; incompressibility is an approximation, not a fact. The approximation gives the equations an intrinsically elliptic character (because incompressibility implies infinite sound speeds and a lack of thermodynamic character).

Physically, the infinite sound speeds remove causality from the equations, and the removal of thermodynamics takes them further outside the realm of reality. This also creates immense mathematical difficulties that make these equations almost intractable. So this problem, touted as the route for mathematics to contribute to understanding turbulence, may be a waste of time for that endeavor as well. Again, we need a concerted effort to put this part of the mathematical physics world into better order. The benefits to computation from some order would be virtually boundless.
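
One way to see the elliptic character and the infinite sound speed is to write the equations down. The block below is a sketch under stated assumptions, not the prize's problem statement: it assumes constant density $\rho$, constant kinematic viscosity $\nu$, and no body force, with velocity $\mathbf{u}$, pressure $p$, and sound speed $c$ (all notation introduced here for illustration). Taking the divergence of the momentum equation and using $\nabla\cdot\mathbf{u}=0$ leaves a Poisson equation for the pressure, so a disturbance anywhere is felt everywhere instantly.

```latex
\begin{aligned}
\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\mathbf{u}
  &= -\frac{1}{\rho}\nabla p + \nu\,\nabla^{2}\mathbf{u},
  \qquad \nabla\cdot\mathbf{u} = 0, \\[4pt]
\nabla^{2} p
  &= -\rho\,\nabla\cdot\big[(\mathbf{u}\cdot\nabla)\mathbf{u}\big], \\[4pt]
c^{2} &= \left(\frac{\partial p}{\partial \rho}\right)_{s} \;\to\; \infty
  \quad \text{as } \rho \text{ becomes independent of } p .
\end{aligned}
```

The second line has no time derivative: the pressure is set by the instantaneous velocity field over the whole domain, which is the loss of causality described above.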

This gets at one of the greatest remaining unsolved problems in physics: turbulence. The ability to solve problems depends critically upon models and the mathematics that makes such models tractable or not. The existence theory problems for the incompressible Navier-Stokes equations are essential for turbulence. For a century it has largely been assumed that the Navier-Stokes equations describe turbulent flow, with an acute focus on incompressibility. More modern understanding should have highlighted that the very mechanism we depend upon for creating the sort of singularities that turbulence observations imply has been removed by the choice of incompressibility. The irony is absolutely tragic. Turbulence brings an almost endless amount of difficulty to its study, whether experimental, theoretical or computational. In every case the depth of the necessary contributions by mathematics is vast. It seems somewhat likely that we have compounded the difficulty of turbulence by choosing a model with terrible properties. If so, it is likely that the problem remains unsolved not because of its difficulty, but because of our blindness to the model’s shortcomings and the almost religious faith with which many have attacked turbulence using it.

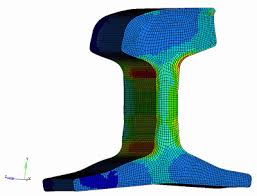

Before I close I’ll touch on a few more areas where progress could either bring great order to a disordered but important area, or potentially unleash new approaches to problem solving. An area in need of fresh ideas, connections and better understanding is mechanics. This is a classical field with a rich and storied past, but it suffers from a dire lack of connection between classical mathematical rigor and the modern numerical world. Perhaps nowhere is this more evident than in the prevalent use of hypo-elastic models where hyper-elasticity would be far better. The hypo-elastic legacy comes from the simplicity of its numerical solution, which is the basis of methods and codes used around the world. It also applies only to very small incremental deformations. For the applications being studied, it is invalid. In spite of this famous shortcoming, hypo-elasticity reigns supreme, and hyper-elasticity sits in an almost purely academic role. Progress is needed here, and mathematical rigor is part of the solution.

A couple of areas of classical numerical methods are in dire need of breakthroughs, with the current technology simply being accepted as good enough. A key one is the solution of sparse linear systems of equations. The current methods are relatively fragile, and it’s been 30-40 years since we had a big improvement. Furthermore, these successes are somewhat hollowed by the lack of a robust solution path. Right now the gold standard of scaling comes from multigrid, invented in the mid-1970s to mid-1980s. Robust solvers use some sort of banded method with quadratic scaling, or preconditioned Krylov methods (which are less reliable). This area needs new ideas and a fresh perspective in the worst way. The second classical area of investigation that has stalled is high-order methods. I’ve written about this a lot. Needless to say, we need a combination of new ideas and a somewhat more honest and pragmatic assessment of what is needed in practical terms. We have to thread the needle of accuracy, efficiency and robustness in both cases. Again, without mathematics holding us to the level of rigor it demands, progress seems unlikely.

Lastly, we have broad swaths of application and innovation waiting to be discovered. We need to work to make optimization something that yields real results on a regular basis. The problem in making this work is similar to the problem with high-order methods; we need to combine the best technology with an unerring focus on the practical and pragmatic. Optimization today only applies to problems that are far too idealized. Other methodologies are lying in wait to have great impact, among them the generalization of statistical methods. There is an immense need for better and more robust statistical methods in a variety of fields (turbulence being a prime example). We need to unleash the forces of innovation to reshape how we apply statistics.

When you change the way you look at things, the things you look at change.

The depth of the problem for mathematics does seem to be somewhat self-imposed. In a drive for mathematical rigor and professional virtue in applied mathematics, the field has lost a great deal of connection to physics and engineering. If one looks to the past for guidance, the obvious truth is that the ties between physics, engineering and mathematics have been quite fruitful. There needs to be a healthy dynamic of push and pull between these areas of emphasis. The worlds of physics and engineering need to seek mathematical rigor as a part of solidifying advances. Mathematics needs to seek inspiration from physics and engineering. Sometimes we need the pragmatic success of the ad hoc “seat of the pants” approach to provide the impetus for mathematical investigation. Finding out that something works tends to be a powerful driver to understanding why it works. For example, the field of compressed sensing arose from a practical and pragmatic regularization method that worked without theoretical support. Far too much emphasis is placed on software and far too little on mathematical discovery and deep understanding. We need a lot more discovery and understanding today, perhaps nowhere more than in scientific computing!

Mathematics is as much an aspect of culture as it is a collection of algorithms.

— Carl Boyer

Note: Sometimes my post is simply a way of working on narrative elements for a talk. I have a talk on Exascale computing and (applied) mathematics next Monday at the University of New Mexico. This post is serving to help collect my thoughts in advance.

and terrifying shit show of the 2016 American Presidential election. It all seems to be coming together in a massive orgy of angst, lack of honesty and fundamental integrity across the full spectrum of life. As an active adult within this society I feel the forces tugging away at me, and I want to recoil from the carnage I see. A lot of days it seems safer to simply stay at home and hunker down and let this storm pass. It seems to be present at every level of life involving what is open and obvious to what is private and hidden.

the same thing is that progress depends on finding important, valuable problems and driving solutions. A second piece of integrity is hard work, persistence, and focus on the important valuable problems linked to great National or World concerns. Lastly, a powerful aspect of integrity is commitment to self. This includes a focus on self-improvement, and commitment to a full and well-rounded life. Every single bit of this is in rather precarious and constant tension, which is fine. What isn’t fine is the intrusion of outright bullshit into the mix undermining integrity at every turn.

inimal value that get marketed as breakthroughs. The lack of integrity at the level of leadership simply takes this bullshit and passes it along. Eventually the bullshit gets to people who are incapable of recognizing the difference. Ultimately, the result of the acceptance of bullshit produces a lowering of standards, and undermines the reality of progress. Bullshit is the death of integrity in the professional world.

within a vibrant and growing bullshit economy. Taken in this broad context, the dominance of bullshit in my professional life and the potential election of Donald Trump are closely connected.

ated by fear mongering bullshit. Even worse it has paved the way for greater purveyors of bullshit like Trump. We may well see bullshit providing the vehicle to elect an unqualified con man as the President.

e beauty of it is the made up shit can conform to whatever narrative you desire, and completely fit whatever your message is. In such a world real problems can simply be ignored when the path to progress is problematic for those in power.

In the early days of computational science we were just happy to get things to work for simple physics in one spatial dimension. Over time, our grasp on more difficult coupled multi-dimensional physics became ever more bold and expansive. The quickest route to this goal was the use of operator splitting, where the simple operators (single physics, one-dimensional) were composed into complex operators. Most of our complex multiphysics codes operate in this operator-split manner. Research into doing better almost always entails doing away with this composition of operators, or operator splitting, and doing everything fully coupled. It is assumed that this is always superior. Reality is more difficult than this proposition, and most of the time the fully coupled or unsplit approach is actually worse, with lower accuracy and greater expense and little identifiable benefit. So the question is: should we keep trying to do this?

solution involves a precise dynamic balance. This is when you have the situation of equal and opposite terms in the equations that produce solutions in near equilibrium. This produces critical points where solutions make complete turns in outcome based on the very detailed nature of the solution. These situations also produce substantial changes in the effective time scales of the solution. When very fast phenomena combine in this balanced form, the result is a slow time scale. It is most acute in the form of the steady-state solution, where such balances are the full essence of the physical solution. This is where operator splitting is problematic and should be avoided.
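
The steady-state difficulty can be seen in a toy problem. The sketch below is only an illustration under stated assumptions, not a method taken from any production code: it uses a hypothetical scalar model, du/dt = source - decay * u, whose exact steady state is the balance u = source / decay. The function names and parameter values are invented for the example. A sequential (Lie) split step solves each term exactly on its own, yet its steady state is wrong by an amount proportional to the time step, while a coupled backward Euler step on the full equation keeps the balance.

```python
import math


def lie_split_step(u, dt, source, decay):
    """One sequential (Lie) splitting step for du/dt = source - decay * u."""
    u = u + source * dt            # sub-step 1: source term alone, solved exactly
    u = u * math.exp(-decay * dt)  # sub-step 2: decay term alone, solved exactly
    return u


def coupled_step(u, dt, source, decay):
    """One backward Euler step on the full, unsplit equation."""
    return (u + source * dt) / (1.0 + decay * dt)


def run_to_steady_state(step, dt, source=1.0, decay=10.0, n_steps=2000):
    """Iterate a stepping function from u = 0 until it settles."""
    u = 0.0
    for _ in range(n_steps):
        u = step(u, dt, source, decay)
    return u


if __name__ == "__main__":
    exact = 1.0 / 10.0  # source / decay for the default (illustrative) parameters
    for dt in (0.1, 0.05, 0.025):
        split_error = abs(run_to_steady_state(lie_split_step, dt) - exact)
        coupled_error = abs(run_to_steady_state(coupled_step, dt) - exact)
        print(f"dt={dt}: split error={split_error:.3e}, coupled error={coupled_error:.3e}")
```

Halving the time step roughly halves the split error, so the split scheme only recovers the balance in the limit of small steps, while the coupled error stays at round-off level for every step size. Away from such balances the split steps remain cheap and exact for each piece, which is the trade-off the text describes.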

ods is their disadvantage for the fundamental approximations. When operators are discretized separately, quite efficient and optimized approaches can be applied. For example, when solving a hyperbolic equation it can be very effective and efficient to produce an extremely high-order approximation to the equations. For the fully coupled (unsplit) case such approximations are quite expensive, difficult and complex to produce. If the solution you are really interested in is first-order accurate, the benefit of the fully coupled case is mostly lost. The distinct exception is the small part of the solution domain where the dynamic balance is present and the benefits of coupling are undeniable.

method should be adaptive and locally tailored to the nature of the solution. One size fits all is almost never the right answer (to anything). Unfortunately this whole line of attack is not favored by anyone these days. We seem to be stuck in the worst of both worlds, where codes used for solving real problems are operator split and research is focused on coupling without regard for the demands of reality. We need to break out of this stagnation! This is ironic because stagnation is one of the things that coupled methods excel at!

Today’s title is a conclusion that comes from my recent assessments and experiences at work. It has completely thrown me off stride as I struggle to come to terms with the evidence in front of me. The obvious and reasonable conclusions from the consideration of recent experiential evidence directly conflict with most of my most deeply held values. As a result I find myself in a deep quandary about how to proceed with work. Somehow my performance is perceived to be better when I don’t care much about my work. One reasonable conclusion is that when I have little concern about outcomes of the work, I don’t show my displeasure when those outcomes are poor.

nal integrity is important to pay attention to. Hard work, personal excellence and a devotion to progress have been the path to my success professionally. The only thing that the current environment seems to favor is hard work (and even that’s questionable). The issues causing tension are related to technical and scientific quality, or work that denotes any commitment to technical excellence. It’s everything I’ve written about recently: success with high performance computing, progress in computational science, and integrity in peer review. Attention to any and all of these topics is a source of tension that seems to be completely unwelcome. We seem to be managed to mostly pay attention to nothing but the very narrow and well-defined boundaries of work. Any thinking or work “outside the box” seems to invite ire, punishment and unhappiness. Basically, the evidence seems to indicate that my performance is perceived to be much better if I “stay in the box”. In other words, I am managed to be predictable and well defined in my actions, and not to provide any surprises.

nal integrity is important to pay attention to. Hard work, personal excellence and a devotion to progress have been the path to my success professionally. The only thing that the current environment seems to favor is hard work (and even that’s questionable). The issues causing tension are related to technical and scientific quality, or work that denotes any commitment to technical excellence. It’s everything I’ve written about recently: success with high performance computing, progress in computational science, and integrity in peer review. Attention to any and all of these topics is a source of tension that seems to be completely unwelcome. We seem to be managed to pay attention to nothing but the very narrow and well-defined boundaries of work. Any thinking or work “outside the box” seems to invite ire, punishment and unhappiness. Basically, the evidence seems to indicate that my performance is perceived to be much better if I “stay in the box”. In other words, I am managed to be predictable and well defined in my actions, and to provide no surprises.

The only way I can “stay in the box” is to turn my back on the same values that brought me success. Most of my professional success is based on “out of the box” thinking, working to provide real progress on important issues. Recently it’s been pretty clear that this isn’t appreciated any more. To stop thinking out of the box I need to stop giving a shit. Every time I care more deeply about work and do something extra, not only is it not appreciated; it gets me into trouble. “Just do the minimum” seems to be the real directive, and “extra effort is not welcome” seems to be the modern mantra. Do exactly what you’re told to do, no more and no less. This is the path to success.

done the experiment and the evidence was absolutely clear. When I don’t care, don’t give a shit and have different priorities than work, I get awesome performance reviews. When I do give a shit, it creates problems. A big part of the problem is the whole “in the box” and “out of the box” issue. We are managed to provide predictable results and avoid surprises. It is all part of the low-risk mindset that permeates the current world, and the workplace as well. Honesty and progress are a source of tension (i.e., risk); as such they make waves, and if you make waves you create problems. Management doesn’t like tension, waves or anything that isn’t completely predictable. Don’t cause trouble or make problems, just do stuff that makes us look good. The best way to provide this sort of outcome is to just come to work and do what you’re expected to do, no more, no less. Don’t be creative, and let someone else tell you what is important. In other words, don’t give a shit, or better yet, don’t give a fuck either.

The news is full of stories and outrage at Hillary Clinton’s e-mail scandal. I don’t feel that anyone has remotely the right perspective on how this happened, and why it makes perfect sense in the current system. It epitomizes a system that is prone to complete breakdown because of the deep neglect of information systems, both unclassified and classified, within the federal system. We just don’t pay IT professionals enough to get good service. The issue also gets to the heart of the overall treatment of classified information by the United States, which is completely out of control. The tendency to classify things is running amok, far beyond anything that is in the actual best interests of society. To compound things further, it highlights the utter and complete disparity in how laws and rules apply to the rich and powerful. All of this explains what happened, and why; yet it doesn’t make what she did right or justified. Instead it points out why this sort of thing is both inevitable and much more widespread (e.g., John Deutch, Condoleezza Rice, Colin Powell, and what is surely a much longer list of people who did the same thing Clinton did).

Last week I had to take some new training at work. It was utter torture. The DoE managed to find the worst person possible to train me, then managed to further drain him of all personality and treat him with sedatives. I already take a massive amount of training, most of which is utterly and completely useless. The training is largely compliance based, and generically a waste of time. Still, even by these already appalling standards, the new training was horrible. It is the Hillary-induced E-mail classification training, after which I now have the authority to mark my classified E-mails as an “E-mail derivative classifier”. We are constantly taking reactive action via training that only undermines the viability and productivity of my workplace. Like most of my training, this current training is completely useless, and only serves the “cover your ass” purpose that most training serves. Taken as a whole, our environment is corrosive and undermines any and all motivation to give a single fuck about work.

professionals work on old hardware with software restrictions that serve outlandish and obscene security regulations that in many cases are actually counter-productive. So, if Hillary were interested in getting anything done, she would be quite compelled to leave the federal network for greener, more productive pastures.

ything is not my or anyone’s actual productivity, but rather the protection of information, or at least the appearance of protection. Our approach to everything is administrative compliance with directives. Actual performance on anything is completely secondary to the appearance of performance. The result of this pathetic approach to providing the taxpayer with benefit for money expended is a dysfunctional system that provides little in return. It is primed for mistakes and outright systematic failures. Nothing stresses the system more than a high-ranking person hell-bent on doing their job. The sort of people who ascend to high positions, like Hillary Clinton, find the sort of compliance demanded by the system awful (because it is), and have the power to ignore it.

Of course I’ve seen this abuse of power live and in the flesh. Take the former Los Alamos Lab Director, Admiral Pete Nanos, who famously shut the Lab down and denounced the staff as “Butthead Cowboys!” He blurted out classified information in an unclassified meeting in front of hundreds if not thousands of people. If he had taken his training and been compliant, he would have known better. Instead of being issued a security infraction, as any of the butthead cowboys in attendance would have been, he got a pass. The powers that be simply declassified the material and let him slide by. Why? Power comes with privileges. When you’re in a position of power you find the rules are different. This is a maxim repeated over and over in our world. Some of this looks like white privilege, or rich white privilege, where you can get away with smoking pot or raping unconscious girls with no penalty, or a lightened one. If you’re not white or not rich you pay a much stiffer penalty, including prison time.

complete and utter bullshit. People who have highlighted huge systematic abuses of power involving murder and vast violation of constitutional law are thrown to the proverbial wolves. There is no protection; it is viewed as treason, and these people are treated as harshly as possible (Snowden, Assange, and Manning come to mind). As I’ve noted above, people in positions of authority can violate the law with utter impunity. At the same time classification is completely out of control. More and more is being classified with less and less control. Such classification often only serves to hide information and serve the needs of the status quo power structure.

I’ll just say up front that my contention is that there is precious little excellence to be found today in many fields. Modeling & simulation is no different. I will also contend that excellence is relatively easy to obtain, or at the very least that a key change in mindset will move us in that direction. This change in mindset is relatively small, but essential. It comes down to whether we are satisfied with the current state, or whether enough restlessness exists for progress to be sought. Too often there seems to be an innate satisfaction with too much of the “ecosystem” for modeling & simulation, and not enough agitation for progress. We should continually seek the opportunity and need for progress in the full spectrum of work. Our obsession with planning and micromanagement of research ends up choking the success from everything it touches by short-circuiting the entire natural process of progress, discovery and serendipity.

In my view the desire for continual progress is the essence of excellence. When I look at the broad field of modeling & simulation, the need for progress seems pervasive and deep. When I hear our leaders talk, such needs are muted and progress seems to depend only on a few simple areas of focus. Such a focus is always warranted if there is an opportunity to be taken advantage of. Instead we seem to be in an age where the technological opportunity being sought, computer hardware, is arrayed against progress. In the process of trying to force progress where it is less available, the true engines of progress are being shut down. This represents mismanagement of epic proportions and needs to be met with calls for sanity and intelligence in our future.

The way toward excellence, innovation and improvement is to figure out how to break what you have. Always push your code to its breaking point; always know what reasonable (or even unreasonable) problems you can’t successfully solve. Lack of success can be defined in multiple ways, including complete failure of a code, lack of convergence, lack of quality, or lack of accuracy. Generally people test their code where it works, and if they are good code developers they continue to test the code all the time to make sure it still works. If you want to get better you push at the places where the code doesn’t work, or doesn’t work well. You make the problems where it didn’t work part of the ones that do work. This is the simple and straightforward way to progress, and it is stunning how few efforts follow this simple and obvious path. It is the golden path that we deny ourselves today.
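
To make the idea of hunting for the breaking point concrete, here is a minimal sketch. Everything in it is an assumption chosen for illustration and comes from no particular code: the "code" under test is a forward Euler integrator for the decay problem y' = -lam * y, and the names `forward_euler_decay`, `classify` and `find_breaking_point`, as well as the tolerance, are hypothetical. The sweep raises the stiffness `lam` until the result stops being acceptable, and it reports how the code failed.

```python
import math


def forward_euler_decay(lam, dt, t_end=1.0):
    """Integrate y' = -lam * y with y(0) = 1 by forward Euler; return y(t_end)."""
    steps = round(t_end / dt)
    y = 1.0
    for _ in range(steps):
        y += dt * (-lam * y)
    return y


def classify(lam, dt, tol=0.05):
    """Label one run as 'ok', 'inaccurate' or 'failure' (illustrative criteria)."""
    exact = math.exp(-lam * 1.0)  # exact solution at t_end = 1
    approx = forward_euler_decay(lam, dt)
    # The true solution decays from 1, so any growth is an outright failure.
    if not math.isfinite(approx) or abs(approx) > 1.0:
        return "failure"
    if abs(approx - exact) > tol:
        return "inaccurate"
    return "ok"


def find_breaking_point(dt, lams):
    """Sweep increasingly hard problems; return the first one that is not 'ok'."""
    for lam in lams:
        status = classify(lam, dt)
        if status != "ok":
            return lam, status
    return None, "ok"


if __name__ == "__main__":
    lam, status = find_breaking_point(0.1, [1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 64.0])
    print(f"first problem the code cannot solve: lam={lam} ({status})")
```

In this sketch the problem that breaks the code becomes the next target: a smaller step (or, more usefully, an implicit or adaptive method) makes it pass, and it then joins the set of problems tested routinely.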

n the success of modeling & simulation to date. We are too satisfied that the state of the art is fine and good enough. We lack a general sense that improvements and progress are always possible. Instead of a continual striving to improve, the approach of focused and planned breakthroughs has beset the field. We have a management approach that provides narrowly oriented improvements while ignoring important swaths of the technical basis for modeling & simulation excellence. The result of this ignorance is an increasingly stagnant status quo that embraces “good enough” implicitly through a lack of support for “better”.

Modeling & simulation rose to utility in support of real things. It owes much of its prominence to the support of national defense during the Cold War. Everything from fighter planes to nuclear weapons to bullets and bombs utilized modeling & simulation to strive toward the best possible weapon. Similarly, modeling & simulation moved into the world of manufacturing, aiding in the design and analysis of cars, planes and consumer products across the spectrum of the economy. The problem is that we have lost sight of the necessity of these real world products as the engine of improvement in modeling & simulation. Instead we have allowed computer hardware to become an end unto itself rather than simply a tool. Even in computing, hardware has little centrality to the field. In computing today, the “app” is king and holds the keys to the market; hardware is simply a necessary detail.

To address the proverbial “elephant in the room”, the national exascale program is neither a good goal, nor bold in any way. It is the actual antithesis of what we need for excellence. The entire program will only power the continued decline in achievement in the field. It is a big project that is being managed the same way bridges are built. Nothing of any excellence will come of it. It is not inspirational or aspirational either. It is stale. It is following the same path that we have been on for the past 20 years: improvement in modeling & simulation by hardware. There are tremendous places where we might harness modeling & simulation to help produce and even enable great outcomes. None of these greater societal goods is in the frame with exascale. It is a program lacking a soul.
