Von Neumann told Shannon to call his measure entropy, since “no one knows what entropy is, so in a debate you will always have the advantage.”

― Jeremy Campbell

Too often in discourse about numerical methods, one gets the impression that dissipation is something to be avoided at all costs. Calculations are constantly under attack for being too dissipative. Rarely does one hear about calculations that are not dissipative enough. One reason is that too little dissipation tends to show up as outright instability, whereas too much dissipation is associated with low-order methods. Short of outright instability, too little dissipation yields a wealth of unphysical solutions, oscillations and terrible computational results. These results may be all too common because of people’s standard disposition toward dissipation. The problem is that too few among the computational cognoscenti recognize that too little dissipation is as poisonous to results as too much (maybe more).

Why might I say that it is more problematic than too much dissipation? A big part of the reason is the physical realizability of solutions. A solution with too much dissipation is utterly physical in the sense that it can be found in nature. Solutions with too little dissipation, more often than not, are not found in nature. This is not because those solutions are unstable; rather, they are stable and have some dissipation, but they simply aren’t dissipative enough to match natural law. What many do not recognize is that natural systems actually produce a large amount of dissipation without regard to the size of the mechanisms for explicit dissipative physics. This is both a profound physical truth and the result of acute nonlinear focusing. It is important for numerical methods to recognize this necessity. Furthermore, this fact of nature reflects an uncomfortable coming together of modelling and numerical methods that many simply choose to ignore as an unpleasant reality.

In this house, we obey the laws of thermodynamics!

– Homer Simpson

Entropy stability is an increasingly important concept in the design of robust, accurate and convergent methods for solving systems defined by nonlinear conservation laws (see Tadmor 2016) The schemes are designed to automatically satisfy an entropy inequality that comes from the second law of thermodynamics, . Implicit in the thinking about the satisfaction of the entropy inequality is a view that approaching the limit of $latex d S / d t = 0$ as viscosity becomes negligible (i.e., inviscid) is desirable. This is

a grave error in thinking about the physical laws of direct interest, as the solution of conservation laws does not satisfy this limit when flows are inviscid. Instead the solutions of interest (i.e., weak solutions with discontinuities) in the inviscid limit approach a solution where the entropy production is proportional to variation in the large scale solution cubed,

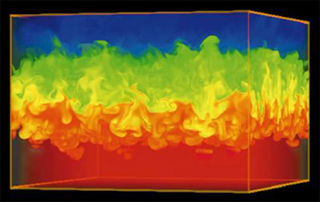

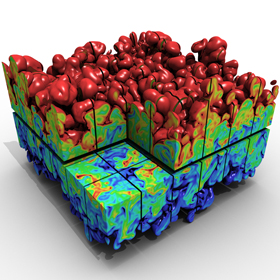

a grave error in thinking about the physical laws of direct interest, as the solution of conservation laws does not satisfy this limit when flows are inviscid. Instead the solutions of interest (i.e., weak solutions with discontinuities) in the inviscid limit approach a solution where the entropy production is proportional to variation in the large scale solution cubed, . This scaling appears over and over in the solution of conservation laws including Burgers’ equation, the equations of compressible flow, MHD, and incompressible turbulence (Margolin & Rider, 2001). The seeming universality of these relations and their implications for numerical methods are discussed below in more detail, but follow the profound implications turbulence modelling are explored in detail for implicit LES modelling (our book edited by Grinstein, Margolin & Rider, 2007). Valid solutions will invariably produce the inequality, but the route to achievement varies greatly.
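The cubic scaling can be checked numerically for Burgers’ equation, where a shock with jump $latex \Delta u = u_L - u_R$ removes the “energy” $latex u^2/2$ at the rate $latex (\Delta u)^3/12$ with no explicit viscosity at all. The sketch below is only an illustration under stated assumptions: a first-order Godunov scheme, a unit domain with fixed boundary states, and the hypothetical helper names `godunov_flux` and `shock_dissipation`. It measures the dissipation as the energy flux entering the domain minus the rate of change of the total energy.

```python
def godunov_flux(ul, ur):
    """Exact Riemann (Godunov) flux for Burgers' equation, f(u) = u^2 / 2."""
    if ul > ur:  # compressive data: a shock moving at the average speed
        s = 0.5 * (ul + ur)
        return 0.5 * ul * ul if s > 0.0 else 0.5 * ur * ur
    # expansive data: a rarefaction fan
    if ul > 0.0:
        return 0.5 * ul * ul
    if ur < 0.0:
        return 0.5 * ur * ur
    return 0.0  # the fan straddles zero speed


def shock_dissipation(n_cells, u_left=1.0, u_right=0.0, cfl=0.5):
    """Measured energy dissipation rate of a single shock on [0, 1].

    Illustrative setup (an assumption, not taken from the text): step data
    with the jump at x = 0.25, boundary states held at u_left and u_right.
    """
    dx = 1.0 / n_cells
    dt = cfl * dx / max(abs(u_left), abs(u_right))
    u = [u_left if (j + 0.5) * dx < 0.25 else u_right for j in range(n_cells)]

    def energy(v):
        return sum(0.5 * x * x for x in v) * dx

    n1, n2 = n_cells // 2, n_cells  # sample the energy after the start-up transient
    e1 = None
    for step in range(1, n2 + 1):
        padded = [u_left] + u + [u_right]  # ghost cells carry the boundary states
        flux = [godunov_flux(padded[j], padded[j + 1]) for j in range(n_cells + 1)]
        u = [u[j] - dt / dx * (flux[j + 1] - flux[j]) for j in range(n_cells)]
        if step == n1:
            e1 = energy(u)
    de_dt = (energy(u) - e1) / ((n2 - n1) * dt)
    energy_flux_in = (u_left ** 3 - u_right ** 3) / 3.0  # flux of u^2/2 is u^3/3
    return energy_flux_in - de_dt


if __name__ == "__main__":
    for n in (200, 400):
        print(n, shock_dissipation(n), 1.0 / 12.0)
```

Under these assumptions both resolutions should return values close to 1/12, which is the point of the exercise: the amount of dissipation is set by the large-scale jump, not by the mesh spacing or the size of any viscosity.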

The satisfaction of the entropy inequality can be achieved in a number of ways, and the one most worth avoiding is oscillations in the solution. Oscillatory solutions from nonlinear conservation laws are as common as they are problematic. In a sense, the proper solution is a strong attractor, and solutions will adjust to produce the necessary amount of dissipation. One vehicle for entropy production is oscillations in the solution field. Such oscillations are unphysical and can result in a host of issues undermining other physical aspects of the solution, such as the positivity of density and pressure. They are to be avoided to whatever degree possible. If explicit action isn’t taken to avoid oscillations, one should expect them to appear.

There ain’t no such thing as a free lunch.

― Pierre Dos Utt

A more proactive approach to dissipation leading to entropy satisfaction is generally desirable. Another path toward entropy satisfaction is offered by numerical methods in control volume form. For second-order numerical methods, analysis of the approximation via the modified equation methodology unveils a nonlinearly dissipative term in the truncation error that provides the necessary form for satisfying the entropy inequality. When integrated, this truncation error replicates inviscid dissipation as a residual term in the “energy” equation. This term comes directly from being in conservation form and disappears when the approximation is in non-conservative form. In large part the outsized success of these second-order methods is related to this character.
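For Burgers’ equation the mechanism can be sketched as follows. The coefficient $latex C$ and the exact grouping of terms are illustrative assumptions, since they depend on the particular second-order scheme; only the structure matters here. Suppose the modified equation of a conservative scheme carries a truncation term in flux form,

$latex u_t + \left(\tfrac{1}{2}u^2\right)_x = -C\,\Delta x^2 \left(u_x^2\right)_x $

Multiplying by $latex u$ and using $latex u\left(u_x^2\right)_x = \left(u\,u_x^2\right)_x - u_x^3$ gives the balance for the “energy” $latex u^2/2$,

$latex \left(\tfrac{1}{2}u^2\right)_t + \left(\tfrac{1}{3}u^3 + C\,\Delta x^2\,u\,u_x^2\right)_x = C\,\Delta x^2\,u_x^3 $

For $latex C > 0$ the residual on the right is negative in compression ($latex u_x < 0$), so it removes energy there. With $latex \Delta u \approx \Delta x\,u_x$ across a cell its size is $latex C\,(\Delta u)^3/\Delta x$, which is cubic in the local variation and has the same scaling as inviscid shock dissipation. In compression the flux-form term coincides with a nonlinear artificial viscosity $latex \left(\nu\,u_x\right)_x$ with $latex \nu = C\,\Delta x^2\,|u_x|$.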

This character may also be added to solutions by an explicit nonlinear (artificial) viscosity or through a Riemann solver. The nonlinear hyperviscosities discussed before on this blog work well. One of the pathological misconceptions in the community is the belief that the specific form of the viscosity matters. This thinking infests direct numerical simulation (DNS), as it perhaps should, but the reality is that the form of dissipation is largely immaterial to establishing physically relevant flows. In other words, inertial range physics does not depend upon the actual form or value of viscosity; its impact is limited to the small scales of the flow. Each approach has distinct benefits as well as shortcomings. The key thing to recognize is the necessity of taking some sort of conscious action to achieve this end. The benefits and pitfalls of different approaches are discussed and recommended actions are suggested.

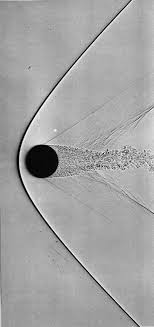

Enforcing the proper sort of entropy production through Riemann solvers is another possibility. A Riemann solver is simply a way of upwinding for a system of equations. For linear interaction modes the upwinding is purely a function of the characteristic motion in the flow, and induces a simple linear dissipative effect. This shows up as a linear even-order truncation error in modified equation analysis where the dissipation coefficient is proportional to the absolute value of the characteristic speed. For nonlinear modes in the flow, the characteristic speed is a function of the solution, which induces a set of entropy considerations. The simplest and most elegant condition is due to Lax, which says that the characteristics dictate that information flows into a shock. In a Lagrangian frame of reference for a right-running shock this would look like $latex C_L > s > C_R$, with $latex C$ being the sound speed on either side of the shock and $latex s$ the shock speed. It has a less clear, but equivalent, form through a nonlinear sound speed, whose differential term involves the fundamental derivative describing the nonlinear response of the sound speed to the solution itself. This same condition can be seen in a differential form and dictates some essential sign conventions in flows. The key is that these conditions have a degree of equivalence. The beauty is that the differential form, while lacking the simplicity of Lax’s condition, establishes a clear connection to artificial viscosity.
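Both ideas in this paragraph can be illustrated in a few lines. The sketch below is a simplification under stated assumptions: it uses scalar linear advection for the upwinding identity and Burgers’ equation as a stand-in for the Lagrangian gas dynamics discussed above, and the function names are hypothetical. It shows that an upwind flux is a central flux plus a dissipation term whose coefficient is the absolute value of the characteristic speed, and that Lax’s condition amounts to characteristics running into the shock from both sides.

```python
def upwind_flux(a, ul, ur):
    """Upwind flux for linear advection u_t + a u_x = 0."""
    return a * ul if a >= 0.0 else a * ur


def central_with_dissipation(a, ul, ur):
    """Central flux minus a dissipation term with coefficient |a| / 2."""
    return 0.5 * a * (ul + ur) - 0.5 * abs(a) * (ur - ul)


def lax_admissible(ul, ur):
    """Lax condition for a Burgers shock: u_L > s > u_R.

    For Burgers' equation the characteristic speed is u itself and the
    Rankine-Hugoniot shock speed is the average of the two states.
    """
    s = 0.5 * (ul + ur)
    return ul > s > ur


if __name__ == "__main__":
    for a in (2.0, -2.0):
        print(a, upwind_flux(a, 1.0, 3.0), central_with_dissipation(a, 1.0, 3.0))
    print(lax_admissible(1.0, 0.0))  # compressive jump
    print(lax_admissible(0.0, 1.0))  # expansive jump
```

The two flux functions agree for either sign of `a`, which is the “dissipation proportional to the absolute value of the characteristic speed” statement in algebraic form. The expansive jump fails the Lax test, so a scheme must spread it into a rarefaction rather than keep it as a discontinuity.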

The key to this entire discussion is realizing that dissipation is a fact of reality. Avoiding it is simply a demonstration of an inability to confront the non-ideal nature of the universe. This is simply contrary to progress and a sign of immaturity. Let’s just deal with reality.

The law that entropy always increases, holds, I think, the supreme position among the laws of Nature. If someone points out to you that your pet theory of the universe is in disagreement with Maxwell’s equations — then so much the worse for Maxwell’s equations. If it is found to be contradicted by observation — well, these experimentalists do bungle things sometimes. But if your theory is found to be against the second law of thermodynamics I can give you no hope; there is nothing for it but to collapse in deepest humiliation.

– Sir Arthur Stanley Eddington

References

Tadmor, E. (2016). Entropy stable schemes. Handbook of Numerical Analysis.

Margolin, L. G., & Rider, W. J. (2002). A rationale for implicit turbulence modelling. International Journal for Numerical Methods in Fluids, 39(9), 821-841.

Grinstein, F. F., Margolin, L. G., & Rider, W. J. (Eds.). (2007). Implicit large eddy simulation: computing turbulent fluid dynamics. Cambridge University Press.

Lax, P. D. (1973). Hyperbolic systems of conservation laws and the mathematical theory of shock waves (Vol. 11). SIAM.

Harten, A., Hyman, J. M., Lax, P. D., & Keyfitz, B. (1976). On finite-difference approximations and entropy conditions for shocks. Communications on Pure and Applied Mathematics, 29(3), 297-322.

Dukowicz, J. K. (1985). A general, non-iterative Riemann solver for Godunov’s method. Journal of Computational Physics, 61(1), 119-137.

When we hear about supercomputing, the media focus and the press releases are always about massive calculations. Bigger is always better, with as many zeros as possible and some sort of exotic name for the rate of computation: mega, tera, peta, exa, zetta,… Up and to the right! The implicit proposition is that the bigger the calculation, the better the science. This is quite simply complete and utter bullshit. These big calculations providing the media footprint for supercomputing and winning prizes are simply stunts, or more generously technology demonstrations, and not actual science. Scientific computation is a much more involved and thoughtful activity involving lots of different calculations, many at a vastly smaller scale. Rarely, if ever, do the massive calculations come as a package including the sorts of evidence science is based upon. Real science has error analysis and uncertainty estimates, and in this sense the massive calculations do a disservice to computational science by skewing the picture of what science using computers should look like.

… masquerading as a scientific conference. It is simply another in a phalanx of echo chambers we seem to form with increasing regularity across every sector of society. I’m sure the cheerleaders for supercomputing will be crowing about the transformative power of these computers and the boon for science they represent. There will be celebrations of enormous calculations and pronouncements about their scientific value. There is a certain lack of political correctness to the truth about all this; it is mostly pure bullshit.

… provided an enormous gain in the power of computers for 50 years and enabled much of the transformative power of computing technology. The key point is that computers and software are just tools; they are incredibly useful tools, but tools nonetheless. Tools allow a human being to extend their own biological capabilities in a myriad of ways. Computers are marvelous at replicating and automating calculations and thought operations at speeds utterly impossible for humans. Everything useful done with these tools is utterly dependent on human beings to devise. My key critique about this approach to computing is the hollowing out of the investigation into devising better ways to use computers and focusing myopically on enhancing the speed of computation.

… devised to unveil difficult-to-see phenomena. We then produce explanations or theories to describe what we see, and allow us to predict what we haven’t seen yet. The degree of agreement between the theory and the observations confirms our degree of understanding. There is always a gap between our theory and our observations, and each is imperfect in its own way. Observations are intrinsically prone to a variety of errors, and theory is always imperfect. The solutions to theoretical models are also imperfect, especially when solved via computation. Understanding these imperfections and the nature of the comparisons between theory and observation is essential to a comprehension of the state of our science.

As I’ve stated before, the scientific method applied to scientific computing is embedded in the practice of verification and validation. Simply stated, a single massive calculation cannot be verified or validated (it could be, but not with current computational techniques and the development of such capability is a worthy research endeavor). The uncertainties in the solution and the model cannot be unveiled in a single calculation, and the comparison with observations cannot be put into a quantitative context. The proponents of our current approach to computing want you to believe that massive calculations have intrinsic scientific value. Why? Because they are so big, they have to be the truth. The problem with this thinking is that any single calculation does not contain steps necessary for determining the quality of the calculation, or putting any model comparison in context.

… These computers are generally the focus of all the attention and cost the most money. The dirty secret is that they are almost completely useless for science and engineering. They are technology demonstrations and little else. They do almost nothing of value for the myriad of programs purporting to use computations to produce results. All of the utility to actual science and engineering comes from the homely cousins of these supercomputers, the capacity computers. These computers are the workhorses of science and engineering because they are set up to do something useful. The capability computers are just show ponies, and perfect exemplars of the modern bullshit-based science economy. I’m not OK with this; I’m here to do science and engineering. Are our so-called leaders OK with the focus of attention (and bulk of funding) being non-scientific, media-based, press release generators?

How would we do a better job with science and high performance computing?

There are certainly cases where exascale computing is enabling for model solutions with small enough error to make models useful. This case is rarely made or justified in any massive calculation, rather being asserted by authority.

The most common practice is to assess the modeling uncertainty via some sort of sampling approach. This requires many calculations because of the high-dimensional nature of the problem. Sampling converges very slowly, with the error in any estimated mean being proportional to the inverse square root of the number of samples and to the spread (standard deviation) of the solution.
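The slow convergence of sampling is easy to demonstrate. The sketch below is an illustration only: `sample_model` is a hypothetical stand-in for one expensive simulation with an uncertain input, chosen here (as an assumption) to return a normally distributed value with mean 1 and standard deviation 2. Repeating the mean estimate many times shows the root-mean-square error tracking the standard deviation divided by the square root of the number of samples.

```python
import math
import random

TRUE_MEAN = 1.0
TRUE_SIGMA = 2.0


def sample_model(rng):
    """Hypothetical stand-in for one simulation run with an uncertain input."""
    return rng.gauss(TRUE_MEAN, TRUE_SIGMA)


def rms_error_of_mean(n_samples, n_trials, rng):
    """RMS error of the sample mean over repeated independent studies."""
    total_sq = 0.0
    for _ in range(n_trials):
        mean = sum(sample_model(rng) for _ in range(n_samples)) / n_samples
        total_sq += (mean - TRUE_MEAN) ** 2
    return math.sqrt(total_sq / n_trials)


rng = random.Random(12345)  # fixed seed so the study is repeatable
err_small = rms_error_of_mean(100, 400, rng)
err_large = rms_error_of_mean(1600, 400, rng)

if __name__ == "__main__":
    print("N = 100 :", err_small, "expected about", TRUE_SIGMA / math.sqrt(100))
    print("N = 1600:", err_large, "expected about", TRUE_SIGMA / math.sqrt(1600))
    print("ratio   :", err_small / err_large)
```

Sixteen times as many samples buys roughly a factor of four in accuracy. When each sample is itself a large simulation, that arithmetic is what drives the need for many moderate calculations rather than one enormous one.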

The uncertainty structure can be approached at a high level, but to truly get to the bottom of the issue requires some technical depth. For example, numerical error has many potential sources: discretization error (space, time, energy, … whatever we approximate in), linear algebra error, nonlinear solver error, round-off error, and limited solution regularity and smoothness. Many classes of problems are not well posed and admit multiple physically valid solutions. In this case the whole concept of convergence under mesh refinement needs overhauling. Recently the concept of measure-valued (statistical) solutions has entered the fray. These are taxing on computer resources in the same manner as sampling approaches to uncertainty. Each of these sources requires specific and focused approaches to their estimation along with requisite fidelity.
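For the discretization-error piece, one widely used approach (offered here as an assumption, not as the procedure the post has in mind) is to run the same problem on three systematically refined meshes and estimate the observed order of convergence. The sketch below uses a deliberately simple stand-in, the trapezoid rule applied to the integral of sin(x) over [0, π], and the names `trapezoid` and `observed_order` are hypothetical.

```python
import math


def trapezoid(f, a, b, n):
    """Composite trapezoid rule with n equal intervals."""
    h = (b - a) / n
    interior = sum(f(a + i * h) for i in range(1, n))
    return h * (0.5 * f(a) + interior + 0.5 * f(b))


def observed_order(coarse, medium, fine, ratio=2.0):
    """Observed convergence rate from three solutions with a fixed refinement ratio."""
    return math.log(abs(coarse - medium) / abs(medium - fine)) / math.log(ratio)


# Three "meshes", each refined by a factor of two.
coarse, medium, fine = (trapezoid(math.sin, 0.0, math.pi, n) for n in (16, 32, 64))

p = observed_order(coarse, medium, fine)
# Richardson extrapolation: estimate of the mesh-converged value.
extrapolated = fine + (fine - medium) / (2.0 ** p - 1.0)
error_estimate = abs(extrapolated - fine)

if __name__ == "__main__":
    print("observed order      :", p)
    print("extrapolated value  :", extrapolated)
    print("error on finest mesh:", error_estimate)
```

Three calculations are the minimum needed to produce a rate and an error estimate, which is the practical reason a single calculation, however large, cannot supply this kind of evidence on its own. For non-smooth or ill-posed problems the observed rate will not match the formal order, and the estimate must be interpreted with care.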

The bottom line is that science and engineering are about evidence. To do things correctly you need to operate on an evidentiary basis. More often than not, high performance computing avoids this key scientific approach. Instead we see the basic decision-making operating via assumption. The assumption is that a bigger, more expensive calculation is always better and always serves the scientific interest. This view is as common as it is naïve. There are many cases, perhaps most, where science is best served by many smaller calculations. This hinges upon the overall structure of uncertainty in the simulations and whether it is dominated by approximation error, modeling form or lack of knowledge, and even the observational quality available. These matters are subtle and complex, and we all know that today neither subtle nor complex sells.

… this appalling trend, but the same dynamic is acutely felt there too. The elements undermining facts and reality in our public life are infesting my work. Many institutions are failing society and contributing to the slow-motion disaster we have seen unfolding. We need to face this issue head-on and rebuild our important institutions and restore our functioning society, democracy and governance.

… broader public sphere, the same thing has happened in the conduct of science. In many ways the undermining of expertise in science is even worse and more corrosive. Increasingly, there is no tolerance or space for the intrusion of expertise into the conduct of scientific or engineering work. The way this intolerance manifests itself is subtle and poisonous. Expertise is tolerated and welcomed as long as it is confirmatory and positive. Expertise is not allowed to offer strong criticism or the slightest rebuke, regardless of how shoddy the work is. If an expert does offer anything that seems critical or negative, they can expect to be dismissed and never invited back to provide feedback again. Rather than being welcomed for their service and attention, they are derided as troublemakers and malcontents. We see in every corner of the scientific and technical world a steady intrusion of mediocrity and outright bullshit into our discourse as a result.

… to review technical work for a large important project. The expected outcome was a “rubber stamp” that said the work was excellent, and offered no serious objections. Basically the management wanted me to sign off on the work as being awesome. Instead, I found a number of profound weaknesses in the work, and pointed these out along with some suggested corrective actions. These observations were dismissed and never addressed by the team conducting the work. It became perfectly clear that no such critical feedback was welcome and I wouldn’t be invited back. Worse yet, I was punished for my trouble. I was sent a very clear and unequivocal message, “don’t ever be critical of our work.”

… increasingly meaningless nature of any review, and the hollowing out of expertise’s seal of approval. In the process experts and expertise become covered in the bullshit they peddle and become diminished in the end.

… utterly incompetent bullshit artist president. Donald Trump was completely unfit to hold office, but he is a consummate con man and bullshit artist. In a sense he is the emblem of the age and the perfect exemplar of our addiction to bullshit over substance.

… make, progress is sacrificed. We bury immediate conflict for long-term decline and plant the seeds for far deeper, more widespread and more damaging conflict. Such horrible conflict may be unfolding right in front of us in the nature of the political process. By finding our problems and being critical we identify where progress can be made, where work can be done to make the world better. By bullshitting our way through things, the problems persist and fester and progress is sacrificed.

… implicitly aid and abet the forces in society undermining progress toward a better future. The result of this acceptance of bullshit can be seen in the reduced production of innovation and breakthrough work, but most acutely in the decay of these institutions.

In the past quarter century the role of software in science has changed hugely in importance. I work in a computer research organization that employs many applied mathematicians. One would think that we have a little maelstrom of mathematical thought. In fact very little actual mathematics takes place, with most of them writing software as their prime activity. A great deal of emphasis is placed on software as something to be preserved or invested in. This dynamic places a great deal of other work, like mathematics (or modeling or algorithmic-methods investigation), on the back burner. The proper question to think about is whether the emphasis on software, along with collateral decreases in focus on mathematics or physical modeling, is a benefit to the conduct of science.

The simplest answer to the question at hand is that code is a set of instructions a computer can understand, a recipe provided by humans for conducting some calculation. These instructions could integrate a function or a differential equation, sort data, filter an image, or do millions of other things. In every case the instructions are devised by humans to do something, and carried out by a computer with greater automation and speed than humans can possibly manage. Without the guidance of humans, the computer is utterly useless, but with human guidance it is a transformative tool. We see modern society completely reshaped by the computer. Too often the focus of humans is on the tool and not on what gives it power: skillful human instructions devised by creative intellects. Dangerously, science is falling into this trap, and the misunderstanding of the true dynamic may have disastrous consequences for the state of progress. We must keep in mind the nature of computing and man’s key role in its utility.

Nothing is remotely wrong with creating working software to demonstrate a mathematical concept. Often mathematics is empowered by the tangible demonstration of the utility of the ideas expressed in code. The problem occurs when the code becomes the central activity and mathematics is subdued in priority. Increasingly, the essential aspects of mathematics are absent from the demands of the research, replaced by software. This software is viewed as an investment that must be transferred along to new generations of computers. The issue is that the porting of libraries of mathematical code has become the raison d’être for research. This porting has swallowed innovation in mathematical ideas whole, and the balance in research is desperately lacking.

impoverishing our future. In addition, we are failing to take advantage of the skills, talents and imagination of the current generation of scientists. We are creating a deficit of possibility that will harm our future in ways we can scarcely imagine. The guilt lies in the failure of our leaders to have sufficient faith in the power of human thought and innovation to continue to march forward into the future in the manner we have in the