Science is not about making predictions or performing experiments. Science is about explaining.

― Bill Gaede

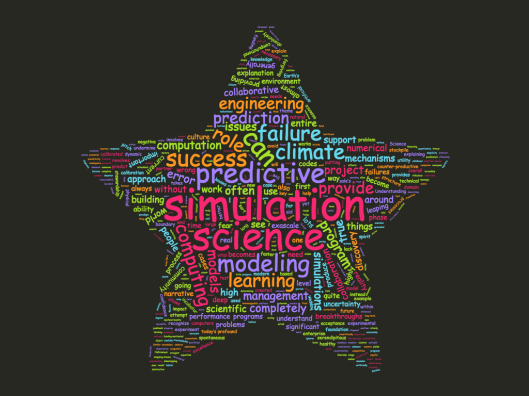

In the modern dogmatic view of high performance computing, the dominant theme of utility revolves around being predictive. This narrative is both appropriate and important, but it often fails to recognize the essential prerequisite for predictive science: the need to understand and explain. In scientific computing, the ability to predict with confidence is always preceded by the use of simulations to aid understanding and assist in explanation. A powerful use of models is explaining the mechanisms that lead to what is observed. In some cases simulations allow exquisite testing of models of reality, and when a model matches reality we infer that we understand the mechanisms at work in the World. In other cases we have observations of reality that cannot be explained, and with simulations we can test our models or experiment with mechanisms that might explain what we see. In both cases the traditional science and engineering community gains confidence through the process of simulation-based understanding.

Leadership and learning are indispensable to each other.

― John F. Kennedy

Too often in today’s world we see a desire to leap over this step and move directly to prediction. This is a foolish thing to attempt, and like fools, this is exactly where we are leaping! Understanding is vital to the utility of simulation because it builds the foundation upon which prediction is laid. This has important technical and cultural imprints that should never be overlooked. Building understanding is deep and effective in providing a healthy culture of modeling and simulation excellence. Most essentially, by satisfying shared curiosity, it builds deep bonds with the domain science and engineering community. The experimental and test community is absolutely vital to a healthy approach and needs a collaborative spirit to thrive. When prediction becomes the mantra without first building understanding, simulations often get put into an adversarial position. For example, we see simulation touted as a replacement for experiment and observation. Instead of a collaborator, simulation becomes an outright threat. This can lead to completely and utterly counter-productive competition where collaboration would serve everyone far better in almost every case.

Understanding as the object of modeling and simulation also works keenly to provide the culture of technical depth necessary for prediction. I see simulation leaping into the predictive fray without the understanding stage as arrogant and naïve. This is ultimately highly counter-productive. Rather than building on the deep trust that the explanatory process provides, any failure on the part of simulation becomes proof of the negative. In the artificially competitive environment we too often produce, the result is destructive rather than constructive. Prediction without first establishing understanding is an act of hubris, and it plants the seeds of distrust. In essence, sidestepping the understanding phase of simulation use makes failures absolutely fatal to success instead of stepping-stones to excellence. This is because the understanding phase is far more forgiving. Understanding is learning, and it can be engaged in with a playful abandon that yields real progress and breakthroughs. It works through a joint investigation of things no one knows, and any missteps are easily and quickly forgiven. This allows competence and knowledge to be built through the acceptance of failure. Without allowing these failures, success in the long run cannot happen.

Raise your quality standards as high as you can live with, avoid wasting your time on routine problems, and always try to work as closely as possible at the boundary of your abilities. Do this, because it is the only way of discovering how that boundary should be moved forward.

― Edsger W. Dijkstra

The essence of the discussion revolves around the sort of incubator that can be created by a collaborative learning environment focused on understanding. When the focus is understanding something, the dynamic is forgiving and open. No one knows the answer, and people are eager to accept failure as long as it is an honest attempt. More importantly, when success comes it has the flavor of discovery and serendipity. The discovery takes on the role of an epic win by heroic forces. After the collaboration has worked to provide new understanding and guided the true advance of knowledge, simulation sits in a place where it can be a trusted partner in the scientific or engineering enterprise. Too often in today’s world we disallow this organic mode of capability development in favor of an artificial, project-based approach.

Our current stockpile stewardship program is a perfect example of how we have systematically screwed all this up. Over time we have created a project management structure with lots of planning, lots of milestones, and lots of fear of failure, and we have managed to completely undermine the natural flow of collaborative science. The accounting structure and funding have grown into a noose that is destroying our ability to build sustainable success. We divide the simulation work from the experimental or application work in ways that completely undermine any collaborative opportunity. Collaborations become forced and fraught with negative context instead of natural and spontaneous. In fact, anything spontaneous or serendipitous is completely antithetical to the entire management approach. Worse yet, the newer programs have all the issues hurting stockpile stewardship and have added a lot of additional program management formality. The biggest inhibitors of success are the artificial barriers to multi-disciplinary simulation-experimental collaboration and the pervasive fear of failure permeating the entire management construct. By leaping over the understanding and learning phase of modeling and simulation, we are short-circuiting the very mechanisms behind the most glorious successes. We are addicted to managing programs so that they never fail, which ironically sows the seeds of abject failure.

The problem with the current project milieu is the predetermination of what success looks like. This is then encoded into the project plans and enforced via our prevalent compliance culture. In the process we almost completely destroy the potential for serendipitous discovery. Good discovery science is driven by rough, loosely defined goals and an acceptance of outcomes that are unknown beforehand but, generally speaking, provide immense value at the end of the project. Today we have instituted project management that attempts to guide our science toward scheduled breakthroughs and avoid any chance of failure. The bottom line is that breakthroughs are grounded on numerous failures and course corrections that power enhanced understanding and a true learning environment. Our current risk aversion and fear of failure are paving the road to a less prosperous and less knowledgeable future.

A specific area where this dynamic plays out with maximal dysfunction is climate science. Climate modeling codes are not predictive and tend to be highly calibrated to the mesh used. The overall modeling paradigm involves a vast number of submodels representing a plethora of physical processes important within the Earth’s climate. In a very real sense, the numerical solution of the equations describing the climate will forever be under-resolved, with significant numerical error. Earth’s climate also involves very intricate and detailed balances between physical processes. The numerical error is generally quite a bit larger than the balance effects determining the climate, so the overall model must be calibrated to be useful.
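To make "calibrated to the mesh used" concrete, here is a toy sketch, emphatically not a climate model: a forward-Euler solution of the decay equation du/dt = -k u, with the rate k tuned so that the answer on a given mesh matches an "observation" exactly. The problem, the names `euler_decay` and `calibrate_k`, and all parameter values are hypothetical. The only point is that the calibrated parameter absorbs numerical error, so it shifts with the mesh and stops matching when the mesh changes.

```python
import math

T = 1.0        # final time of the hypothetical toy problem
K_TRUE = 1.0   # "true" decay rate of the physical system

def euler_decay(k: float, n_steps: int) -> float:
    """Forward-Euler solution of du/dt = -k*u, u(0) = 1, at t = T."""
    dt = T / n_steps
    u = 1.0
    for _ in range(n_steps):
        u -= dt * k * u
    return u

def calibrate_k(observed: float, n_steps: int) -> float:
    """Choose k so the Euler answer on this mesh reproduces the observation.

    Solves (1 - k*dt)**n_steps == observed for k (closed form for this toy model).
    """
    dt = T / n_steps
    return (1.0 - observed ** (1.0 / n_steps)) / dt

observed = math.exp(-K_TRUE * T)  # the "measurement" the model is tuned to

for n in (4, 16, 64):
    print(f"N={n:3d}  calibrated k={calibrate_k(observed, n):.4f}  (true k={K_TRUE})")

# Reuse the coarse-mesh calibration on a finer mesh: the agreement is lost.
k_coarse = calibrate_k(observed, 4)
print(f"coarse-calibrated k on N=64: u={euler_decay(k_coarse, 64):.4f}, "
      f"observed={observed:.4f}")
```

Each mesh gets its own "best" rate, all below the true value, so the calibrated model is right on the mesh it was tuned on and wrong as soon as the resolution changes.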

You couldn’t predict what was going to happen for one simple reason: people.

― Sara Sheridan

In the modern modeling and simulation world, this calibration then becomes the basis of very large uncertainties. The combination of numerical error and modeling error means that the true simulation uncertainty is relatively massive, even though the calibration ensures that the simulation stays quite close to the behavior of the true climate. The models can then be used to study the impact of various factors on climate and to deepen our understanding of climate science. This entire enterprise is highly model-driven, and the level of uncertainty is quite large. When we transition to predictive climate science, the issues become profound. We live in a world where people believe that computing should provide quantitative assistance for vexing problems. The magnitude of uncertainty from all sources should give people significant pause and push back against putting simulations in the wrong role. Nor should it prevent simulation from serving as a key tool in understanding this incredibly complex problem.

The premier program for high performance computing simply takes all of these issues and amplifies them to an almost ridiculous degree. The entire narrative around the need for exascale computing is predicated on the computers providing predictive calculations. This runs counter to the true role of computation as a modeling, learning, explanation, and understanding partner with scientific and engineering domain expertise. Beyond this intrinsic flaw, the secondary element of the program’s spiel is the simplicity of moving existing codes to new, faster computers for better science. Nothing could be further from the truth on either account. Most codes are woefully inadequate for predictive science, first and foremost because of their models. All the things that the exascale program ignores are the very things necessary for predictivity. At the end of the day this program is likely to produce only more accurately solved wrong models and do little for predictive science. To exacerbate these issues, the exascale program generally does not support the understanding role of simulation in science.
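A similarly hypothetical sketch of "more accurately solved wrong models": reality is assumed to decay at a total rate of 1.3, while the model includes only a rate of 1.0. Refining the forward-Euler time step drives the numerical error toward zero, but the error against reality approaches the model-form error, which no amount of computing removes. In this toy case the coarsest answer is even closer to reality, because the two errors partly cancel. All names and values here are illustrative assumptions.

```python
import math

T = 1.0               # final time of the hypothetical toy problem
RATE_MODEL = 1.0      # decay rate the (incomplete) model includes
RATE_MISSING = 0.3    # hypothetical physical process the model leaves out

truth = math.exp(-(RATE_MODEL + RATE_MISSING) * T)  # stand-in for reality
model_exact = math.exp(-RATE_MODEL * T)             # exact solution of the model

def euler_decay(k, n_steps):
    """Forward-Euler solution of du/dt = -k*u, u(0) = 1, at t = T."""
    dt = T / n_steps
    u = 1.0
    for _ in range(n_steps):
        u -= dt * k * u
    return u

def error_table(resolutions):
    """For each step count, return (N, numerical error, error versus reality)."""
    rows = []
    for n in resolutions:
        u = euler_decay(RATE_MODEL, n)
        rows.append((n, abs(u - model_exact), abs(u - truth)))
    return rows

for n, num_err, total_err in error_table([10, 100, 1000, 10000]):
    print(f"N={n:6d}  numerical error={num_err:.2e}  error vs reality={total_err:.4f}")
print(f"model-form error floor: {abs(model_exact - truth):.4f}")
```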

The long-term impact of this lack of support for understanding is profound. It will seriously hamper the ability of simulation to elevate itself to a predictive role in science and engineering. The use of computation to help with understanding difficult problems paves the way for a mature predictive future. Removing the understanding is akin to putting someone into an adult role in life without going through a complete childhood. This is a recipe for disaster. The understanding portion of computational collaboration with engineering and science is the incubator for prediction. It allows modeling and simulation to be quite unsuccessful at prediction and still succeed. The success arises through learning things scientifically by trial and error. These trials, errors, and responses over time provide a foundation for predictive computation. In a very real way this spirit should always be present in computation. When it is absent, computational efficacy will stagnate.

In summary, we have yet another case of the marketing of science overwhelming the true narrative. In the search for funding to support computing, the sales pitch has been arranged around prediction as a product. Increasingly, we are told that a faster computer is all we really need. The implied message in this sales pitch is that there is no need to support and pursue the other aspects of modeling and simulation required for predictive success. These issues are plaguing our scientific computing programs, and the long-term success of high performance computing is going to be sacrificed to this funding-motivated approach. To this we can add the failure to recognize understanding, explanation, and learning as key products of computation for science and engineering.

Any fool can know. The point is to understand.

― Albert Einstein

more important who does a calculation than how the work is done, although these two items are linked. This was as true 25 years ago with ASCI as it is today. Progress has not happened, in large part because we allowed it to stall, failing to address the core issues while focusing on press releases and funding profiles. We see the truth squashed because it doesn’t match the rhetoric. Now we see a lack of funding for, and emphasis on, calculation credibility in the Nation’s premier program for HPC. We continue to trumpet the fiction that the bigger the calculation and computer, the more valuable a calculation is a priori.

Even today, with vast amounts of computer power, the job of modeling reality is subtle and nuanced. The modeler who conspires to represent reality on the computer still makes the lion’s share of the decisions necessary for high-fidelity representations of reality. All of the items associated with HPC affect a relatively small part of the overall burden of analysis credibility. The analyst decides how to model problems in detail, including the selection of sub-models, meshes, boundary conditions, and the details included and neglected. Computer power and mesh resolution usually end up being an afterthought and a minor detail. The true overall modeling uncertainty is dominated by everything in the analyst’s power. In other words, the pacing uncertainty in modeling & simulation is not HPC; it is all the decisions made by the analysts. Even with the focus on “mesh resolution,” the uncertainty associated with the discrete integration of the governing equations is rarely measured or estimated. We are focusing on a small part of the overall modeling & simulation capability to the exclusion of the big stuff that drives utility. The current HPC belief system holds that massive computations are predictive and credible solely by virtue of overwhelming computational power. In essence, it uses proof by massive computation as the foundation of belief. The problem is that science and engineering do not work this way at all. Belief comes from evidence, and the evidence that matters is measurements and observations of the real World (i.e., validation). Models of reality can be steered and coaxed into agreement via calibration in ways that are anathema to prediction. Part of assuring that this isn’t happening is verification. We ultimately want to make sure that the calculations are getting the right answers for the right reasons. Deviations from correctness should be understood at a deep level. Part of putting everything in proper context is uncertainty quantification (UQ). UQ is part of V&V. Unfortunately, UQ has replaced V&V in much of the computational science community, and the UQ that is estimated is genuinely incomplete. Now, in HPC, most of UQ has been replaced by misguided overconfidence.
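Estimating the numerical uncertainty that is "rarely measured or estimated" need not be expensive. One standard approach, chosen here for illustration rather than prescribed by the text, is solution verification on three systematically refined meshes: compute the observed order of accuracy, a Richardson-extrapolated value, and Roache’s grid convergence index (GCI) with the commonly used safety factor of 1.25. The `simulate` function is a hypothetical stand-in for a real code, and the formulas assume the solutions are in the asymptotic range and converge monotonically.

```python
import math

def simulate(n_steps: int) -> float:
    """Hypothetical stand-in for a simulation code: forward-Euler solution of
    du/dt = -u, u(0) = 1, evaluated at t = 1 using n_steps uniform steps."""
    dt = 1.0 / n_steps
    u = 1.0
    for _ in range(n_steps):
        u -= dt * u
    return u

def solution_verification(f_coarse, f_medium, f_fine, r=2.0, safety=1.25):
    """Estimate discretization error from three meshes refined by a constant ratio r.

    Returns the observed order of accuracy p, the Richardson-extrapolated value,
    and the fine-mesh grid convergence index (a relative uncertainty band).
    Assumes monotone convergence in the asymptotic range.
    """
    p = math.log((f_medium - f_coarse) / (f_fine - f_medium)) / math.log(r)
    f_extrap = f_fine + (f_fine - f_medium) / (r**p - 1.0)
    gci_fine = safety * abs((f_medium - f_fine) / f_fine) / (r**p - 1.0)
    return p, f_extrap, gci_fine

# Mesh sequence: 20, 40, 80 steps, i.e. refinement ratio r = 2.
coarse, medium, fine = (simulate(n) for n in (20, 40, 80))
p, f_extrap, gci = solution_verification(coarse, medium, fine)
print(f"observed order of accuracy p = {p:.2f}")
print(f"Richardson estimate = {f_extrap:.6f}")
print(f"fine-mesh GCI = {100 * gci:.2f}% of the fine-mesh value")
```

Nothing in the estimate uses the exact answer, which is what makes the procedure usable on real problems; the resulting band is one numerical contribution that a UQ study focused only on model parameters would leave out.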

At the core of the problem with bullshit as a technical medium is a general lack of trust and an inability to accept outright failure as an outcome. This combination forms the basis for bullshit and alternative facts becoming accepted within society writ large. When people are sure they will be punished for the truth, you get lies, and finely packaged lies are bullshit. If you want the truth, you need to accept it, and today the truth can get you skewered. The same principle holds for the acceptance of failure. Failures are viewed as scandals and not accepted. The flip side of this coin is the truth that failures are the fuel for progress. We need to fail to learn; if we are not failing, we are not learning. By hiding, or bullshitting our way through to avoid being labeled failures, we avoid learning and also corrode our foundational principles. We are locked in a tight downward spiral, and all our institutions are under siege. Our political, scientific, and intellectual elites are not respected because truth is not valued. False success and feeling good are acceptable as an alternative to reality. In this environment bullshit reigns supreme, and being useful isn’t enough to be important.

huge program ($250 million/year), and the talent present at the meeting was truly astounding, a veritable who’s who of computational science in the United States. This project is the crown jewel of the national strategy to retain (or recapture) pre-eminence in high performance computing. Such a meeting has all the makings of a banquet of inspiration and intellectually thought-provoking discussion, along with incredible energy. Simply meeting all of these great scientists, many of whom also happen to be wonderful friends, only added to the potential. While friends abounded and acquaintances were made or rekindled, this was the high point of the week. The wealth of inspiration and intellectual discourse possible was quenched by bureaucratic imperatives, leaving the meeting a barren and lifeless launch of a soulless project.

degree of project management formality being applied, which is appropriate for benign construction projects and completely inappropriate for HPC success. The demands of the management formality were delivered to the audience much like the wasteful prep work for standardized testing in our public schools. It will almost certainly have the same mediocrity-inducing impact as that testing regime: the illusion of progress and success where none actually exists. The misapplication of this management formality is likely to deliver a merciful deathblow to this wounded mutant of a program. At some point in the next couple of years we will see the project euthanized in a mercy killing.

is exactly the same, except that the computers are 1000 times more powerful than the computers that would unlock the secrets of the universe a quarter of a century ago. Aside from Exascale replacing Petascale in computing power, the vision of 25 years ago is identical to today’s vision. The problem, then as now, is the incompleteness of the vision and the fatal flaws in how it is executed. If one adds a management approach that seems devised by Chinese spies to undermine the program’s productivity and morale, the outcome of ECP seems assured: failure. This wouldn’t be the glorious failure of putting your best foot forward seeking great things, but failure born of incompetence and an almost malicious disregard for the talent at its disposal.

succeed at something so massive and difficult while the voices of those paid to work on the project are silenced? At the same time, we are failing to develop an entire generation of scientists in the holistic set of activities needed for successful HPC. The balance of technical activities needed for a healthy, useful HPC capability is simply unsupported and almost actively discouraged. We are effectively hollowing out an entire generation of applied mathematicians, computational engineers and physicists, pushing them to focus more on software engineering than on their primary disciplines. Today someone working in applied mathematics is more likely to focus on object-oriented constructs in C++ than on functional analysis. Moreover, the software is acting as a straitjacket for the mathematics, slowly suffocating actual mathematical investigation. We see important applied mathematical work avoided because it is incompatible with existing software interfaces and assumptions. One of the key aspects of ECP is the drive for everything to be expressed as software products, as if software were our raison d'être. We've lost the balance that treats software as a necessary element in checking the utility of mathematics. We now have software in ascendancy and mathematics as a mere afterthought. Seeing this unfold with the arrayed talents on display in Knoxville last week felt absolutely and utterly tragic. Key scientific questions on which the vitality of scientific computing hinges are left hanging without attention, and progress on them is almost actively discouraged.

the methods used to solve these models. Much of the enabling efficiency of solution is found in innovative algorithms. The key to this discussion is the subtext that these three most important elements of the HPC ecosystem are unsupported and given minimal priority by ECP. The focus on hardware arises from two sources: the easier path to funding and the fandom of hardware among the HPC cognoscenti.

y has been a leading light of progress globally. A combination of our political climate and innate limits in the American mindset seems to be conspiring to undo this engine of progress. Looking at the ECP program as a microcosm of the American experience is instructive. The overt control of all activities is suggestive of the pervasive lack of trust in our society. This lack of trust is paired with a deep fear of scandal and ever more demands for control. Working almost in unison with these twin engines of destruction is a lack of respect for human capital in general, which is made all the more tragic when one realizes the magnitude of the talent being wasted. Instead of trusting and having faith in the arrayed talent of the individuals being funded by the program, we are going to undermine all their efforts with doubt, fear and marginalization. The active role of bullshit in defining success allows the disregard for talent to go unnoticed (think of bullshit and alternative facts as brothers).

potential of the human resource available to them. Proper and able management of the people working on the project would harness and channel their efforts productively. Better yet, it would inspire and enable these talented individuals to innovate and discover new things that might power a brighter future for all of us. Instead, we see fear and limitations governing people's actions. Instead, we see an ever-tightening leash placed around people's necks, suffocating their ability to perform at their best. This is the core of the unfolding research tragedy that is doubtless playing out across a myriad of programs far beyond the small-scale tragedy unfolding in HPC.

If one wants to understand fear and how it can destroy competence and achievement, take a look at (American) football. How many times have you seen a team undone during the two-minute drill? A team that has been dominating its opponent defensively suddenly becomes porous when it switches to the prevent defense, a strategy born out of fear. The team stops doing what works because it seems risky and takes a safety-first approach instead. It happens over and over, hence the Madden quip that the only thing the prevent defense prevents is victory. It is a perfect metaphor for how fear plays out in society.

Over 80 years ago we had a leader, FDR, who cautioned us against fear, saying, “the only thing we have to fear is fear itself.” Today we have leaders who embrace fear as a prime motivator in almost every public policy decision. The cynical use of fear to gain power is seen across the globe. Fear is also a powerful way to pry money loose from governments. Terrorism is a powerful political tool both for those committing the terrorist acts and for the military-police-industrial complexes seeking to retain their control over society. We see the rise of vast police states across the Western world fueled by irrational fears of terrorism.

Fear also keeps people from taking risks. Many people decide not to travel because of fears associated with terrorism, among other things. Fear plays a more subtle role at work. If failure becomes unacceptable, fear will keep people from taking on difficult work and push them toward easier, low-risk work. This ultimately undermines our ability to achieve great things. If one does not attempt great things, great things simply will not happen. We are all poorer for it. Fear is ultimately the victory of small-minded, limited thinking over the hope and abundance of a better future. Instead of attacking the future with gusto and optimism, fear pushes us to cling to the past and turn our backs on progress.

ommunication. Good communication is based on trust. Fear is the absence of trust. People are afraid of ideas, and afraid to share their ideas or information with others. As Google amply demonstrates, knowledge is power. Fear keeps people from sharing information and leads to an overall diminishment of power. Information held closely produces control, but control of a smaller pie. Free information makes the pie bigger and creates abundance, but people are afraid of this. For example, a lot of information is viewed as dangerous and held closely, leading to things like classification. This is necessary, but also prone to horrible abuse.

Without leadership rejecting fear, too many people simply give in to it. Today leaders do not reject fear; they embrace it, use it for their own purposes, and amplify their power with it. It is easy to do because fear engages people's animal core and is prone to cynical manipulation. This fear paralyzes us and makes us weak. Fear is expensive and slow. Fear is starving the efforts society could be making to build a better future. Progress and the hope of a better future rest squarely on our courage and bravery in the face of fear, and on our rejection of it as the organizing principle for our civilization.
