To test a perfect theory with imperfect instruments did not impress the Greek philosophers as a valid way to gain knowledge.

― Isaac Asimov

Note: I got super annoyed with the ability of WordPress to parse LaTeX, so there is a lot of math in here that I gave up on typesetting. Apologies!

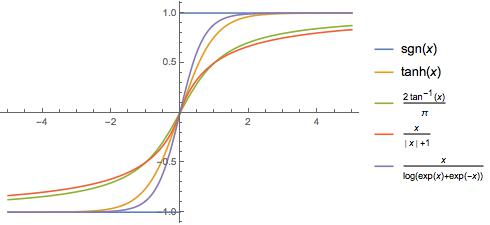

Last week I introduced a set of alternatives to the discontinuous intrinsic functions that provide logical capability in programming. In the process I outlined some of the issues that arise from the discontinuous aspects of computer code operation. These alternatives are useful for common regression testing in codes and for the convergence of nonlinear solvers. The question remains of how useful these alternatives are, and my intent this week is to address parts of that question. Do these advantageous functions provide their benefit without undermining more fundamental properties of numerical methods, like stability and convergence? Or do we need to modify the implementation of these smoothed functions in some structured manner to assure proper behavior?

The answer to both questions is an unqualified yes: the functions are useful, and they need some careful modification to assure correct behavior. The smoothed functions may be used, but the details do matter.

To this end we will introduce several analysis techniques to show these issues concretely. One thing to get out of the way immediately is that this analysis does not change some basic aspects of the functions. For all of the functions, the original function is recovered in an asymptotic limit of the regularization; the softened sign, for example, becomes the original sign function. Our goal is to understand the behavior of these functions within the context of a numerical method away from this limit, where we have obviously deviated substantially from the classical functions. We fundamentally want to assure that the basic approximation properties of methods are not altered in some fatal manner by their use. A big part of the tool kit will be the systematic use of Taylor series approximations to make certain that the consistency of the numerical method and its order of accuracy are retained when switching from the classical functions to their smoothed versions. Consistency simply means that the approximations are valid approximations to the original differential equation (meaning the error is ordered).

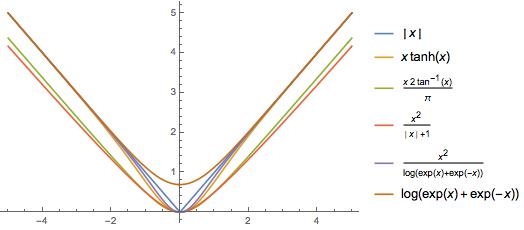

There was one important detail that I misplaced during last week’s post. If one takes the definition of the sign function we can see an alternative that wasn’t explored. We have $\mbox{sign}(x) = \frac{\left|x\right|}{x} = \frac{x}{\left|x\right|}$. Thus we can easily rearrange this expression to give two very different regularized absolute value expressions, $\left|x\right| = x \, \mbox{sign}(x)$ and $\left|x\right| = \frac{x}{\mbox{sign}(x)}$. When we move to the softened sign function the behavior of the absolute value changes in substantive ways. In particular, in the cases where the softened absolute value was everywhere less than the classical absolute value, it is greater under the second interpretation, and vice versa. As a result functions like

now can produce an absolute value,

that doesn’t have issues with entropy conditions as the dissipation will be more than the minimum, not less. Next we will examine whether these different views have significance in truncation error.
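The two rearrangements can be compared numerically. The following is only a minimal sketch: it assumes the softened sign is `tanh(n*x)` with a sharpness parameter `n`, and the names `softsign`, `softabs_mult` and `softabs_div` are made up for this illustration.

```python
import math

def softsign(x, n=10.0):
    """Smoothed sign. tanh(n*x) is an assumed form; it sharpens as n grows."""
    return math.tanh(n * x)

def softabs_mult(x, n=10.0):
    """|x| ~ x * softsign(x). Since |tanh| <= 1 this never exceeds |x|."""
    return x * softsign(x, n)

def softabs_div(x, n=10.0):
    """|x| ~ x / softsign(x). Since |tanh| <= 1 this never falls below |x|."""
    s = softsign(x, n)
    if s == 0.0:
        # The softened sign passes through zero at x = 0, so the division
        # needs a guard; the limit of x / tanh(n*x) as x -> 0 is 1/n.
        return 1.0 / n
    return x / s

for x in (-1.0, -0.1, -0.01, 0.0, 0.01, 0.1, 1.0):
    print(f"x={x:6.2f}  mult={softabs_mult(x):.6f}  "
          f"abs={abs(x):.6f}  div={softabs_div(x):.6f}")
```

The multiplicative form sits below the classical absolute value (less dissipation) and the division form sits above it (more dissipation), at the price of a guard at zero.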

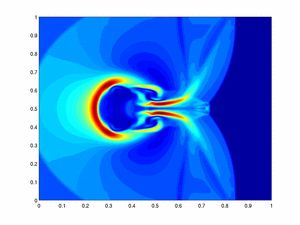

Our starting point will be the replacement of the sign function or absolute value in upwind approximations to differential equations. For entropy satisfaction and generally stable approximation, the upwind approximation is quite fundamental as the foundation of robust numerical methods for fluid dynamics. We can start with the basic upwind approximation with the classical function, the absolute value in this case. We will base the analysis on the semi-discrete version of the scheme using a flux difference, $\frac{1}{h} \left[ f(j+1/2) - f(j-1/2) \right] $. The basic upwind approximation is

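$ f(j+1/2) = \frac{1}{2} \left[ f(j) + f(j+1) \right] - \frac{1}{2} \left|a\right| \left[ u(j+1) - u(j) \right]$ where $a = \frac{\partial f}{\partial u}$ is the characteristic velocity for the flux and $\left|a\right|$ provides the upwind dissipation. For locally constant $a$, the Taylor series analysis gives the dissipation in this scheme as $ \frac{1}{h} \left[ f(j+1/2) - f(j-1/2) \right] = f_x - \frac{h}{2} \left|a\right| u_{xx} + \mathcal{O}(h^2)$. We find that a set of very interesting conclusions can be drawn almost immediately.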
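The leading dissipation term can be checked numerically. This is a sketch under stated assumptions: the flux is linear, $f = a u$, with constant $a$; the numerical flux is the usual central average minus $\frac{1}{2}\left|a\right|$ times the jump; and the function names are invented for the illustration. The `absfun` argument is there so a smoothed absolute value could be swapped in.

```python
import math

def upwind_flux(u_left, u_right, a, absfun=abs):
    """f(j+1/2) for f = a*u: central average minus |a|/2 times the jump."""
    return 0.5 * a * (u_left + u_right) - 0.5 * absfun(a) * (u_right - u_left)

def flux_difference(u, x, h, a, absfun=abs):
    """(1/h) [f(j+1/2) - f(j-1/2)] evaluated at the point x."""
    f_plus = upwind_flux(u(x), u(x + h), a, absfun)
    f_minus = upwind_flux(u(x - h), u(x), a, absfun)
    return (f_plus - f_minus) / h

def u(s):
    return math.sin(s)

def u_x(s):
    return math.cos(s)

def u_xx(s):
    return -math.sin(s)

a, x = 1.5, 0.3
for h in (0.1, 0.05, 0.025):
    error = flux_difference(u, x, h, a) - a * u_x(x)
    predicted = -0.5 * h * abs(a) * u_xx(x)  # leading term from the Taylor series
    print(f"h={h:<6} error={error:.6e}  predicted={predicted:.6e}")
```

Halving `h` should roughly halve the error, which is the first-order behavior expected of upwinding.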

All of the smoothed functions introduced are workable as alternatives although some versions seem to be intrinsically better. In other words all produce a valid consistent first-order approximation. The functions based on analytical functions like or

are valid approximations, but the amount of dissipation is always less than the classical function leading to potential entropy violation. They approach the classical absolute value as one would expect and the deviations similarly diminish. Functions such as

or

result in no change in the leading order truncation error, although the deviations similarly always produce less dissipation than classical upwind. We do find that for both functions we need to modify the form of the regularization to get good behavior to

and

. For the classical softmax function based on logarithms and exponentials the result is the same, but it always produces more dissipation than upwinding rather than less,

. This may make this functional basis better for replacing the absolute value for the purpose of upwinding. The downside to this form of the absolute value is the regularized sign function’s passage through hard zero, which makes division problematic.
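To illustrate the claim that the logarithm-and-exponential form never falls below the classical absolute value, here is a small sketch. The scaling by a sharpness parameter `n` and the names `softmax2` and `softabs_exp` are assumptions for the illustration, not the definitions used in the analysis above.

```python
import math

def softmax2(a, b, n=10.0):
    """Smooth maximum (1/n) * log(exp(n*a) + exp(n*b)).

    The larger argument is factored out only to avoid overflow in exp;
    the value is identical to the direct formula.
    """
    m = max(a, b)
    return m + math.log(math.exp(n * (a - m)) + math.exp(n * (b - m))) / n

def softabs_exp(x, n=10.0):
    """Smooth |x| built as softmax(x, -x); it is always >= |x|."""
    return softmax2(x, -x, n)

for x in (-1.0, -0.1, 0.0, 0.1, 1.0):
    excess = softabs_exp(x) - abs(x)
    print(f"x={x:5.2f}  softabs={softabs_exp(x):.6f}  excess={excess:.3e}")
```

The excess over $\left|x\right|$ is largest at $x = 0$, where it equals $\log(2)/n$, and it decays exponentially away from zero.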

Let’s look at the functions useful for producing a more entropy satisfactory result for upwinding. We find that these functions work differently than the original ones. For example the hyperbolic tangent does not as quickly become equivalent to the upwind scheme as . There is a lingering departure from linearity with

proportional to the mesh spacing and

. As a result the quadratic form of the softened sign is best because of the

regularization. Perhaps this is a more widely applicable conclusion, as we will see when we develop the smoothed functions further with limiters.

Where utility ends and decoration begins is perfection.

― Jack Gardner

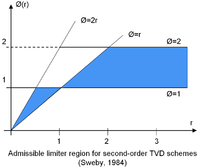

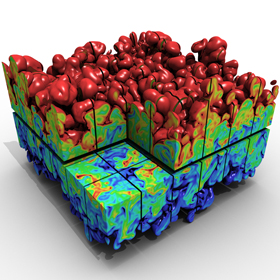

Now we can transition to a more complex and subtle subject, limiters. Briefly put, limiters are nonlinear functions applied to differencing schemes to produce non-oscillatory (or monotone) solutions with higher-order accuracy. Generally in this context high-order is anything above first order. Godunov’s theorem confines linear non-oscillatory methods to first-order accuracy, where upwind differencing is canonical. As a result the basic theory applies to second-order methods, where a linear basis is added to the piecewise constant basis the upwind method is based on. The result is the term “slope limiter,” where the linear slope is modified by a nonlinear function. Peter Sweby produced a diagram to describe what successful limiters look like parametrically. The parameter is the non-dimensional ratio of discrete gradients, $ r = \frac{u(j+1) - u(j)}{u(j) - u(j-1)}$. The smoothed functions described here modify the adherence to this diagram. The classical diagram has a region where second-order accuracy can be expected. It is bounded by the function and twice the magnitude of this function.

We can now visualize the impact of the smoothed functions on this diagram. They produce systematic changes in the diagram that lead to deviations from the ideal behavior. Realize that the ideal diagram is always recovered in the limit as the functions recover their classical form. What we see is that the classical curves are converged upon from above or below, and the smoothing produces wiggles in the overall functional evaluation. My illustrations all show the functions with the regularization chosen to be unrealistically small to exaggerate the impact of the smooth functions. A bigger and more important question is whether the functions impact the order of approximation.

To finish up this discussion I’m going to analyze the truncation error of the methods. Our starting point is the classical scheme’s error, which provides a viewpoint on the nature of the nonlinearity associated with limiters. What is clear about a successful limiter is its ability to produce a valid approximation to a gradient with an ordered error of at least order $h$. The minmod limiter produces a truncation error of $ \frac{1}{h} \mbox{minmod}(u(j)-u(j-1), u(j+1) - u(j)) = u_x - \frac{h}{2} \left| \frac{u_{xx}}{u_x} \right| u_x + \mathcal{O}(h^2)$. The results with different recipes for the smoothed sign function and its extension to softabs, softmin and softmax are surprising, to say the least.
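The classical minmod error term can be checked with a few lines of code. This sketch writes minmod with sign, abs and min, and applies it to the backward and forward difference quotients. The `soft_minmod` variant is purely illustrative: it assumes `tanh(n*x)` for the sign and `x*tanh(n*x)` for the absolute value, and is not one of the specific recipes summarized below.

```python
import math

def sign(x):
    return (x > 0) - (x < 0)

def minmod(a, b):
    """Classical minmod: the smaller-magnitude slope, or zero if signs differ."""
    return 0.5 * (sign(a) + sign(b)) * min(abs(a), abs(b))

def soft_minmod(a, b, n):
    """Same formula with smoothed sign, abs and min (assumed tanh-based forms)."""
    def ssign(x):
        return math.tanh(n * x)
    def sabs(x):
        return x * math.tanh(n * x)
    def smin(p, q):
        # min(p, q) = (p + q)/2 - |p - q|/2, with the smoothed abs swapped in
        return 0.5 * (p + q) - 0.5 * sabs(p - q)
    return 0.5 * (ssign(a) + ssign(b)) * smin(sabs(a), sabs(b))

def limited_slope(u, x, h, limiter=minmod):
    """Limiter applied to the backward and forward difference quotients."""
    backward = (u(x) - u(x - h)) / h
    forward = (u(x + h) - u(x)) / h
    return limiter(backward, forward)

x = 0.3
ux, uxx = math.cos(x), -math.sin(x)  # exact derivatives of u = sin
for h in (0.1, 0.05, 0.025):
    error = limited_slope(math.sin, x, h) - ux
    predicted = -0.5 * h * abs(uxx / ux) * ux
    print(f"h={h:<6} error={error:.6e}  predicted={predicted:.6e}")
```

Passing `limiter=lambda a, b: soft_minmod(a, b, n)` to `limited_slope` allows the same comparison for the smoothed variant at a chosen `n`.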

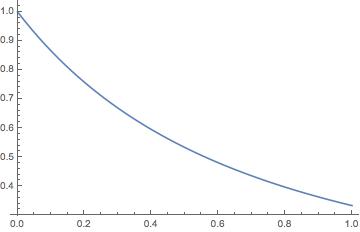

Here is a structured summary of the options as applied to a minmod limiter, :

and

. The gradient approximation is

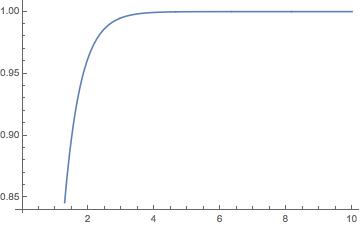

. The constant in front of the gradient approaches one very quickly as

grows.

and

. The gradient approximation is

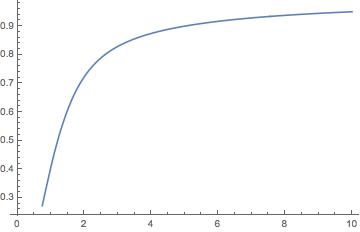

. The constant in front of the gradient approaches one very slowly as

grows. This smoothing is unworkable for limiters.

and

putting a mesh dependence in the sign function results in inconsistent gradient approximations. The gradient approximation is

. The leading constant goes slowly to one as

.

and

. The gradient approximation is

.

and

. Putting a mesh dependence in the sign function results in inconsistent gradient approximations. The gradient approximation is

. This makes the approximation utterly useless in this context.

and

. The gradient approximation is $ u_x \approx u_x - \left[ u_x \sqrt{n^2 + \left( \frac{u_{xx}}{u_x} \right)^2} \right] h + \mathcal{O}(h^2)$.

Being useful to others is not the same thing as being equal.

― N.K. Jemisin

Sweby, Peter K. “High resolution schemes using flux limiters for hyperbolic conservation laws.” SIAM Journal on Numerical Analysis 21, no. 5 (1984): 995-1011.

will touch upon at the very end of this post, Riemann solvers-numerical flux functions can also benefit from this, but some technicalities must be proactively dealt with.

will touch upon at the very end of this post, Riemann solvers-numerical flux functions can also benefit from this, but some technicalities must be proactively dealt with. (

(

some optimization solutions (

some optimization solutions ( approximations. This issue can easily be explained by looking at the smooth sign function compared with the standard form. Since the dissipation in the Riemann solver is proportional to the characteristic velocity, we can see that the smoothed sign function is everywhere less than the standard function resulting in less dissipation. This is a stability issue analogous to concerns around limiters where these smoothed functions are slightly more permissive. Using the exponential version of “softabs” where the value is always greater than the standard absolute value can modulate this permissive nature.

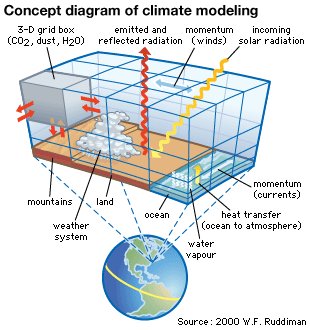

approximations. This issue can easily be explained by looking at the smooth sign function compared with the standard form. Since the dissipation in the Riemann solver is proportional to the characteristic velocity, we can see that the smoothed sign function is everywhere less than the standard function resulting in less dissipation. This is a stability issue analogous to concerns around limiters where these smoothed functions are slightly more permissive. Using the exponential version of “softabs” where the value is always greater than the standard absolute value can modulate this permissive nature. computing. Worse yet, computing can not be a replacement for reality, but rather is simply a tool for dealing with it better. In the final analysis the real world still needs to be in the center of the frame. Computing needs to be viewed in the proper context and this perspective should guide our actions in its proper use.

computing. Worse yet, computing can not be a replacement for reality, but rather is simply a tool for dealing with it better. In the final analysis the real world still needs to be in the center of the frame. Computing needs to be viewed in the proper context and this perspective should guide our actions in its proper use. many ways computing is driving enormous change societally and creating very real stress in the real world. These stresses are stoking fears and lots of irrational desire to control dangers and risks. All of this control is expensive, and drives an economy of fear. Fear is very expensive. Trust, confidence and surety are cheap and fast. One totally irrational way to control fear is ignore it, allowing reality to be replaced. For people who don’t deal with reality well, the online world can be a boon. Still the relief from a painful reality ultimately needs to translate to something tangible physically. We see this in an over-reliance on modeling and simulation in technical fields. We falsely believe that experiments and observations can be replaced. The needs of the human endeavor of communication can be done away with through electronic means. In the end reality must be respected, and people must be engaged in conversation. Computing only augments, but never replaces the real world, or real people, or real experience. This perspective is a key realization in making the best use of technology.

many ways computing is driving enormous change societally and creating very real stress in the real world. These stresses are stoking fears and lots of irrational desire to control dangers and risks. All of this control is expensive, and drives an economy of fear. Fear is very expensive. Trust, confidence and surety are cheap and fast. One totally irrational way to control fear is ignore it, allowing reality to be replaced. For people who don’t deal with reality well, the online world can be a boon. Still the relief from a painful reality ultimately needs to translate to something tangible physically. We see this in an over-reliance on modeling and simulation in technical fields. We falsely believe that experiments and observations can be replaced. The needs of the human endeavor of communication can be done away with through electronic means. In the end reality must be respected, and people must be engaged in conversation. Computing only augments, but never replaces the real world, or real people, or real experience. This perspective is a key realization in making the best use of technology.

on computer power coupled to an unchanging model as the recipe for progress. Focus and attention to improving modeling is almost completely absent in the modeling and simulation world. This ignores one of the greatest truths in computing that no amount of computer power can rescue an incorrect model. These truths do little to alter the approach although we can be sure that we will ultimately pay for the lack of attention to these basics. Reality cannot be ignored forever; it will make itself felt in the end. We could make it more important now to our great benefit, but eventually our lack of consideration will demand more attention.

on computer power coupled to an unchanging model as the recipe for progress. Focus and attention to improving modeling is almost completely absent in the modeling and simulation world. This ignores one of the greatest truths in computing that no amount of computer power can rescue an incorrect model. These truths do little to alter the approach although we can be sure that we will ultimately pay for the lack of attention to these basics. Reality cannot be ignored forever; it will make itself felt in the end. We could make it more important now to our great benefit, but eventually our lack of consideration will demand more attention. independently. The proper decomposition of error allows the improvement of modeling in a principled manner.

independently. The proper decomposition of error allows the improvement of modeling in a principled manner. ng the model we believe we are using, the validation is powerful evidence. One must recognize that the degree of understanding is always relative to the precision of the questions being asked. The more precise the question being asked is, the more precise the model needs to be. This useful tension can help to drive science forward. Specifically the improving precision of observations can spur model improvement, and the improving precision of modeling can drive observation improvements, or at least the necessity of improvement. In this creative tension the accuracy of solution of models and computer power plays but a small role.

ng the model we believe we are using, the validation is powerful evidence. One must recognize that the degree of understanding is always relative to the precision of the questions being asked. The more precise the question being asked is, the more precise the model needs to be. This useful tension can help to drive science forward. Specifically the improving precision of observations can spur model improvement, and the improving precision of modeling can drive observation improvements, or at least the necessity of improvement. In this creative tension the accuracy of solution of models and computer power plays but a small role. Sometimes you read something that hits you hard. Yesterday was one of those moments while reading Seth Godin’s daily blog post (

Sometimes you read something that hits you hard. Yesterday was one of those moments while reading Seth Godin’s daily blog post ( I rewrote Godin’s quote to reflect how work is changing me (at the bottom of the post). It really says something needs to give. I worry about how many of us feel the same thing. Right now the workplace is making me a shittier version of myself. I feel that self-improvement is a constant struggle against my baser instincts. I’m thankful for a writer like Seth Godin who can push me to into a vital and much needed self-reflective “what the fuck” !

I rewrote Godin’s quote to reflect how work is changing me (at the bottom of the post). It really says something needs to give. I worry about how many of us feel the same thing. Right now the workplace is making me a shittier version of myself. I feel that self-improvement is a constant struggle against my baser instincts. I’m thankful for a writer like Seth Godin who can push me to into a vital and much needed self-reflective “what the fuck” ! are seeing cultural, economic, and political changes of epic proportions across the human world. With the Internet forming a backbone of immense interconnection, and globalization, the transformations to our society are stressing people resulting in fearful reactions. These are combining with genuine threats to humanity in the form of weapons of mass destruction, environmental damage, mass extinctions and climate change to form the basis of existential danger. We are not living on the cusp of history; we are living through the tidal wave of change. There are massive opportunities available, but the path is never clear or safe. As the news every day testifies, the present mostly kind of sucks. While I’d like to focus on the possibilities of making things better, the scales are tipped toward the negative backlash to all this change. The forces trying to stop the change in its tracks are strong and appear to be growing stronger.

are seeing cultural, economic, and political changes of epic proportions across the human world. With the Internet forming a backbone of immense interconnection, and globalization, the transformations to our society are stressing people resulting in fearful reactions. These are combining with genuine threats to humanity in the form of weapons of mass destruction, environmental damage, mass extinctions and climate change to form the basis of existential danger. We are not living on the cusp of history; we are living through the tidal wave of change. There are massive opportunities available, but the path is never clear or safe. As the news every day testifies, the present mostly kind of sucks. While I’d like to focus on the possibilities of making things better, the scales are tipped toward the negative backlash to all this change. The forces trying to stop the change in its tracks are strong and appear to be growing stronger. Many of our institutions are under continual assault by the realities of today. The changes we are experiencing are incompatible with many of our institutional structures such as the places I work. Increasingly this assault is met with fear. The evidence of the overwhelming fear is all around us. It finds its clearest articulation within the political world where fear-based policies abound with the rise of Nationalist anti-Globalization candidates everywhere. We see the rise of racism, religious tensions and protectionist attitudes all over the World. The religious tensions arise from an increased tendency to embrace traditional values as a hedge against change and the avalanche of social change accompanying technology, globalization and openness. Many embrace restrictions and prejudice as a solution to changes that make them fundamentally uncomfortable. This produces a backlash of racist, sexist, homophobic hatred that counters everything about modernity. 
In the workplace this mostly translates to a genuinely awful situation of virtual paralysis and creeping bureaucratic over-reach resulting in a workplace that is basically going no where fast. For someone like me who prizes true progress above all else, the workplace has become a continually disappointing experience.

Many of our institutions are under continual assault by the realities of today. The changes we are experiencing are incompatible with many of our institutional structures such as the places I work. Increasingly this assault is met with fear. The evidence of the overwhelming fear is all around us. It finds its clearest articulation within the political world where fear-based policies abound with the rise of Nationalist anti-Globalization candidates everywhere. We see the rise of racism, religious tensions and protectionist attitudes all over the World. The religious tensions arise from an increased tendency to embrace traditional values as a hedge against change and the avalanche of social change accompanying technology, globalization and openness. Many embrace restrictions and prejudice as a solution to changes that make them fundamentally uncomfortable. This produces a backlash of racist, sexist, homophobic hatred that counters everything about modernity. In the workplace this mostly translates to a genuinely awful situation of virtual paralysis and creeping bureaucratic over-reach resulting in a workplace that is basically going no where fast. For someone like me who prizes true progress above all else, the workplace has become a continually disappointing experience. ance. As we embrace online life and social media, we have gotten supremely fixated on superficial appearances and lost the ability to focus on substance. The way things look has become far more important than the actuality of anything. Having a reality show celebrity as the President seems like a rather emphatic exemplar of this trend. Someone who looks like a leader, but lacks most of the basic qualifications is acceptable to many people. People with actual qualifications are viewed as suspicious. The elite are rejected because they don’t relate to the common man. While this is obvious on a global scale through political upheaval, the same trends are impacting work. 
The superficial has become a dominant element in managing because the system demands lots of superficial input while losing any taste for anything of enduring depth. Basically, the system as a whole is mirroring society at large.

ance. As we embrace online life and social media, we have gotten supremely fixated on superficial appearances and lost the ability to focus on substance. The way things look has become far more important than the actuality of anything. Having a reality show celebrity as the President seems like a rather emphatic exemplar of this trend. Someone who looks like a leader, but lacks most of the basic qualifications is acceptable to many people. People with actual qualifications are viewed as suspicious. The elite are rejected because they don’t relate to the common man. While this is obvious on a global scale through political upheaval, the same trends are impacting work. The superficial has become a dominant element in managing because the system demands lots of superficial input while losing any taste for anything of enduring depth. Basically, the system as a whole is mirroring society at large. The prime institutional directive is survival and survival means no fuck ups, ever. We don’t have to do anything as long as no fuck ups happen. We are ruled completely by fear. There is no balance at all between fear-based motivations and the needs for innovation and progress. As a result our core operational principle for is compliance above all else. Productivity, innovation, progress and quality all fall by the wayside to empower compliance. Time and time again decisions are made to prize compliance over productivity, innovation, progress, quality, or efficiency. Basically the fear of fuck ups will engender a management action to remove that possibility. No risk is ever allowed. Without risk there can be no reward. Today no reward is sufficient to blunt the destructive power of fear.

The prime institutional directive is survival and survival means no fuck ups, ever. We don’t have to do anything as long as no fuck ups happen. We are ruled completely by fear. There is no balance at all between fear-based motivations and the needs for innovation and progress. As a result our core operational principle for is compliance above all else. Productivity, innovation, progress and quality all fall by the wayside to empower compliance. Time and time again decisions are made to prize compliance over productivity, innovation, progress, quality, or efficiency. Basically the fear of fuck ups will engender a management action to remove that possibility. No risk is ever allowed. Without risk there can be no reward. Today no reward is sufficient to blunt the destructive power of fear. rection. It is the twin force for professional drift and institutional destruction. Working at an under-led institution is like sleepwalking. Every day you go to work basically making great progress at accomplishing absolutely nothing of substance. Everything is make-work and nothing is really substantive you have lots to do because of management oversight and the no fuck up rules. You make up results and produce lots of spin to market the illusion of success, but there is damn little actual success or progress. The utter and complete lack of leadership and vision is understandable if you recognize the prime motivation of fear. To show leadership and vision requires risk, and risk cannot take place without failure and failure courts scandal. Risk requires trust and trust is one of the things in shortest supply today. Without the trust that allows a fuck up without dire consequences, risks are not taken. Management is now set up to completely control and remove the possibility of failure from the system.

rection. It is the twin force for professional drift and institutional destruction. Working at an under-led institution is like sleepwalking. Every day you go to work basically making great progress at accomplishing absolutely nothing of substance. Everything is make-work and nothing is really substantive you have lots to do because of management oversight and the no fuck up rules. You make up results and produce lots of spin to market the illusion of success, but there is damn little actual success or progress. The utter and complete lack of leadership and vision is understandable if you recognize the prime motivation of fear. To show leadership and vision requires risk, and risk cannot take place without failure and failure courts scandal. Risk requires trust and trust is one of the things in shortest supply today. Without the trust that allows a fuck up without dire consequences, risks are not taken. Management is now set up to completely control and remove the possibility of failure from the system. rewards and achievement without risk is incompatible with experience. Everyday I go to work with the very explicit mandate to do what I’m told. The clear message every day is never ever fuck up. Any fuck ups are punished. The real key is don’t fuck up, don’t point out fuckups and help produce lots of “alternative results” or “fake breakthroughs” to help sell our success. We all have lots of training to do so that we make sure that everyone thinks we are serious about all this shit. The one thing that is absolutely crystal clear is that getting our management stuff correct is far more important than every doing any real work. As long as this climate of fear and oversight is in place, the achievements and breakthroughs that made our institutions famous (or great) will be a thing of the past. Our institutions are all about survival and not about achievement. 
This trend is replicated across society as a whole; progress is something to be feared because it unleashes unknown forces potentially scaring everyone. The fear resulting in being scared undermines trust and without trust the whole cycle re-enforces itself.

rewards and achievement without risk is incompatible with experience. Everyday I go to work with the very explicit mandate to do what I’m told. The clear message every day is never ever fuck up. Any fuck ups are punished. The real key is don’t fuck up, don’t point out fuckups and help produce lots of “alternative results” or “fake breakthroughs” to help sell our success. We all have lots of training to do so that we make sure that everyone thinks we are serious about all this shit. The one thing that is absolutely crystal clear is that getting our management stuff correct is far more important than every doing any real work. As long as this climate of fear and oversight is in place, the achievements and breakthroughs that made our institutions famous (or great) will be a thing of the past. Our institutions are all about survival and not about achievement. This trend is replicated across society as a whole; progress is something to be feared because it unleashes unknown forces potentially scaring everyone. The fear resulting in being scared undermines trust and without trust the whole cycle re-enforces itself. A big piece of the puzzle is the role of money in perceived success. Instead of other measures of success, quality and achievement, money has become the one-size fits all measure of the goodness of everything. Money serves to provide the driving tool for management to execute its control and achieve broad-based compliance. You only work on exactly what you are supposed to be working on. There is no time to think or act on ideas, learn, or produce anything outside the contract you’ve made with you customers. Money acts like a straightjacket for everyone and serves to constrict any freedom of action. The money serves to control and constrain all efforts. A core truth of the modern environment is that all other principles are ruled by money. Duty to money subjugates all other responsibilities. 
No amount of commitment to professional duties, excellence, learning, and your fellow man can withstand the pull of money. If push comes to shove, money wins. The peer review issues I’ve written about are testimony to this problem; excellence is always trumped by money.

A big piece of the puzzle is the role of money in perceived success. Instead of other measures of success, quality and achievement, money has become the one-size fits all measure of the goodness of everything. Money serves to provide the driving tool for management to execute its control and achieve broad-based compliance. You only work on exactly what you are supposed to be working on. There is no time to think or act on ideas, learn, or produce anything outside the contract you’ve made with you customers. Money acts like a straightjacket for everyone and serves to constrict any freedom of action. The money serves to control and constrain all efforts. A core truth of the modern environment is that all other principles are ruled by money. Duty to money subjugates all other responsibilities. No amount of commitment to professional duties, excellence, learning, and your fellow man can withstand the pull of money. If push comes to shove, money wins. The peer review issues I’ve written about are testimony to this problem; excellence is always trumped by money. called leaders who utilize fear as a prime motivation. Every time a leader uses fear to further their agenda, we take a step backward. One the biggest elements in this backwards march is thinking that fear and danger can be managed. Danger can only be pushed back, but never defeated. By controlling it in the explicit manner we attempt today, we only create a darker more fearsome danger in the future that will eventually overwhelm us. Instead we should face our normal fears as a requirement of the risk progress brings. If we want the benefits of modern life, we must accept risk and reject fear. We need actual leaders who encourage us to be bold and brave instead of using fear to control the masses. We need to quit falling for fear-based pitches and hold to our principles. Ultimately our principles need to act as a barrier to fear becoming the prevalent force in our decision-making.

called leaders who utilize fear as a prime motivation. Every time a leader uses fear to further their agenda, we take a step backward. One of the biggest elements in this backward march is thinking that fear and danger can be managed. Danger can only be pushed back, but never defeated. By controlling it in the explicit manner we attempt today, we only create a darker, more fearsome danger in the future that will eventually overwhelm us. Instead we should face our normal fears as a requirement of the risk progress brings. If we want the benefits of modern life, we must accept risk and reject fear. We need actual leaders who encourage us to be bold and brave instead of using fear to control the masses. We need to quit falling for fear-based pitches and hold to our principles. Ultimately our principles need to act as a barrier to fear becoming the prevalent force in our decision-making.

Everyone wants their own work, or the work they pay for, to be high quality. The rub comes when you start to pay for the quality you want. Everyone seems to want high quality for free, and too often believes that low-cost quality is a real thing. Time and time again it becomes crystal clear that high quality is extremely expensive to obtain. Quality is full of tedious, detail-oriented work that is very expensive to conduct. More importantly, when quality is aggressively pursued, it will expose problems that must be addressed and rectified before quality can be reached. This is the sticking point: people often need to stop adding capability or producing results and focus on fixing the problems. Customers and others paying for things tend not to want to pay for fixing problems, even though fixing them is necessary for quality. As a result, it’s quite tempting to not look so hard at quality and simply do more superficial work where quality is largely asserted by fiat or authority.

The entirety of this issue is manifested in the conduct of verification and validation (V&V) in modeling and simulation. V&V is how high quality work is done in modeling and simulation. Like other forms of quality work, doing it well means engaging in details and running problems to ground. Thus V&V is expensive and time consuming. These quality measures take time and effort away from results and, worse yet, produce doubt in the results. As a consequence, the quality mindset and efforts need significant focus and commitment, or they will fall by the wayside. For many customers the results are all that matter; they aren’t willing to pay for more. This becomes particularly true if those doing the work are willing to assert quality without doing the work to actually assure it. In other words, the customer will accept work as high quality based solely on the word of those doing it. If those doing the work are trying to do this on the cheap, we produce low or indeterminate quality work, sold as high quality work, masking the actual costs.

The largest part of the issue is the confluence of two terrible trends: increasingly naïve customers for modeling and simulation, and a decreasing commitment to paying for modeling and simulation quality. Part of this comes from customers who believe in modeling and simulation, which is a good thing. “Quality on the cheap” simulations create a false sense of security because they free up the customers’ financial resources. Basically, we have customers who increasingly have no ability to tell the difference between low and high quality work. The work’s quality is completely dependent upon those doing the work. This is dangerous in the extreme, and especially so when the modeling and simulation work is not emphasizing quality or paying for its expensive acquisition. We have become too comfortable with the tempting quick and dirty quality. The (color) viewgraph norm, once the quality standard for computational work, had faded from use but is making a comeback. A viewgraph norm version of quality is orders of magnitude cheaper than the detailed quantitative work needed to accumulate evidence. Many customers are perfectly happy with the viewgraph norm and naïvely accept results that simply look good and are asserted to be high quality.

Perhaps an even bigger issue is the misguided notion that the pursuit of high quality won’t derail plans. We have gotten into the habit of accepting highly delusional plans for developing capability that do not factor in the cost of quality. We have allowed ourselves to bullshit the customer into believing that quality is simple to achieve. Instead, the pursuit of quality will uncover issues that must be dealt with and will ultimately change schedules. We can take the practice of verification as an object lesson in how this works out. If done properly, verification will uncover numerous and subtle problems in codes, such as bugs, incorrect implementations, faulty boundary conditions, or error accumulation mechanisms. Sometimes the issues uncovered are deeply mysterious and solving them requires great effort. Sometimes the problems exposed require research with uncertain or indeterminate outcomes. Other times the issues overthrow basic presumptions about your capability and force significant corrections to large-scale objectives. We increasingly live in a world that cannot tolerate these realities. The current belief is that we can apply project management to the work and produce high quality results while ignoring all of this.

The trip down to “quality hell” starts with the impact of digging into quality. Most customers are paying for capability rather than quality. When we allow quick and dirty means of assuring quality to be used, the door is open for the illusion of quality. For the most part, the verification and validation done by most scientists and engineers is of the quick, dirty and incomplete variety. We see the eyeball or viewgraph norm used pervasively to compare results in both verification and validation. We see no real attempt to grapple with the uncertainties in calculations or measurements to put comparisons in quantitative context. Usually we see people create graphics that give the illusion of good results, and use authority to dictate that these results indicate mastery and quality. For the most part, the scientific and engineering community simply gives in to the authoritative claims despite a lack of evidence. The deeper issue with quick and dirty verification is the mindset of those conducting it; they are working from the presumption that the code is correct instead of assuming there are problems, and collecting evidence to disprove this.

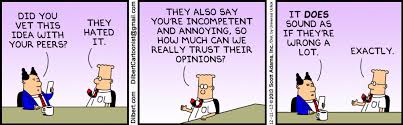

Quantitative work is the remedy for the qualitative, eyeball, viewgraph, and color video metric so often used today. Deep quantitative studies show the sort of evidence that cannot be ignored. If the results are good, the evidence of quality is strong. If a problem is found, the need for remedy is equally strong. In validation or verification, the creation of an error bar goes a long way toward putting any quality discussion in context. The lack of an error bar casts any result adrift and lacking in context. A secondary issue is incomplete work, where full error bars are not pursued, or unfavorable results are not pursued or, worse yet, are suppressed.
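To make the contrast with the eyeball norm concrete, here is a minimal sketch of one common way to attach an error bar to a computed quantity in verification: a three-grid convergence study with Richardson extrapolation. This is my illustration rather than a prescribed procedure. The function names, the constant refinement ratio `r`, and the safety factor of 1.25 are all assumptions, and the sample values are synthetic numbers manufactured to converge at second order.

```python
import math


def observed_order(f_fine, f_medium, f_coarse, r):
    """Observed order of convergence from three grid solutions.

    Assumes a constant refinement ratio r > 1 between grids and
    monotone convergence (successive differences share a sign).
    """
    num = f_coarse - f_medium
    den = f_medium - f_fine
    if den == 0.0 or num / den <= 0.0:
        raise ValueError("solutions are not converging monotonically")
    return math.log(num / den) / math.log(r)


def richardson_estimate(f_fine, f_medium, p, r):
    """Extrapolated estimate of the grid-converged value."""
    return f_fine + (f_fine - f_medium) / (r**p - 1.0)


def error_bar(f_fine, f_medium, p, r, safety=1.25):
    """Estimated numerical error in the fine-grid value.

    The safety factor is an assumed, commonly quoted choice for
    three-grid studies; it is not derived here.
    """
    return safety * abs(f_fine - f_medium) / (r**p - 1.0)


if __name__ == "__main__":
    # Synthetic data: f(h) = 1 + 0.5 * h**2 sampled at h = 0.1, 0.2, 0.4
    fine, medium, coarse = 1.005, 1.02, 1.08
    ratio = 2.0
    p = observed_order(fine, medium, coarse, ratio)
    print("observed order:", p)
    print("extrapolated value:", richardson_estimate(fine, medium, p, ratio))
    print("fine-grid error bar:", error_bar(fine, medium, p, ratio))
```

The point of the sketch is that the observed order and the error bar are numbers that can be checked against the method’s theoretical order, which a pretty plot cannot offer. When the three solutions do not converge monotonically the function raises an error instead of returning a rate, and that failure is itself a piece of evidence worth running to ground.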

“Alternative facts” are driven by this lack of willingness to deal with reality. Why deal with truth and the reality of real problems when we can just define them away with more convenient facts? In today’s world we are seeing a rise of lies, bullshit and delusion all around us. As a result, we are systematically over-promising and under-delivering on our work. We over-promise to get the money, and then under-deliver because of the realities of doing the work: one cannot get maximum capability with maximum quality for discount prices. Increasingly, bullshit (propaganda) fills in the space between what we promise and what we deliver. Paired with this deep dysfunction is a systematic failure of peer review within programs. Peer review has been installed as a backstop against the tendencies outlined above. The problem is that too often peer review does not have free rein. Too often we have conflicts of interest, or control, that send an explicit message that the peer review had better be positive, or else.

We bring in external peer reviews filled with experts who have the mantle of legitimacy. The problem is that these experts are hired or drafted by the organizations being reviewed. Being too honest or frank in a peer review is the quickest route to losing that gig and the professional kudos that goes along with it. One bad or negative review will assure that the reviewer is never invited back. I’ve seen it over and over again. Anyone who provides an honest critique is never seen again. A big part of the issue is that the reviews are viewed as pass-fail tests, and problems uncovered are dealt with punitively. Internal peer reviews are even worse. Again, any negative review is met with disdain. The person having the audacity and stupidity to be critical is punished. This punishment is meted out with the clear message, “only positive reviews are tolerated.” Positive reviews are thus mandated by threat and retribution. We have created the recipe for systemic failure.

Putting the blame on systematic wishful thinking is far too kind. High quality for a discount price is wishful thinking at best. If the drivers for this weren’t naïve customers and dishonest programs, it might be forgivable. The problem is that everyone who is competent knows better. The real key to seeing where we are going is the peer review issue. The squashing of negative peer review exposes the truth. Those doing all this substandard work know the work is poor, and simply want a system that does not expose it. We have created a system with rewards and punishments that allows this. Reward is entirely monetary, and very little positive happens based on quality. We can assert excellence without doing the hard things necessary to achieve it. As long as we allow people to simply declare their excellence without producing evidence of said excellence, quality will languish.