Tiny details imperceptible to us decide everything!

― W.G. Sebald

The standards of practice in verification of computer codes and applied calculations are generally appalling. Most of the time when I encounter work, I’m just happy to see anything at all done to verify a code. Put differently, most of the published literature accepts slipshod practice in verification. In some areas like shock physics, the viewgraph norm still reigns supreme. It actually reigns in a far broader swath of science, but you talk about what you know. The missing element in most of the literature is quantitative analysis of results. Even when the work is better and includes detailed quantitative analysis, it usually lacks a deep connection with numerical analysis results. The typical best practice in verification includes only the comparison of the observed rate of convergence with the theoretical rate. Worse yet, the theoretical result is asymptotic, and codes are rarely used in practice with asymptotically fine meshes. Thus, standard practice is largely superficial and only scratches the surface of the connections with numerical analysis.


The Devil is in the details, but so is salvation.

― Hyman G. Rickover

The generic problem is that verification rarely occurs at all, much less is practiced well; only once it does might we aspire to do it with genuine excellence. Thus, the first step to take is regular, pedestrian application of standard analysis. What masquerades as excellence today is quite threadbare. We verify order of convergence in code verification under circumstances that usually don’t meet the conditions where the theory formally applies. The theoretical order of convergence only applies in the limit where the mesh is asymptotically fine. Today, the finite size of the discretization is not taken directly into account. This can be done, and I’ll show you how below. Beyond this rather great leap of faith, verification does not usually focus on the magnitude of error, numerical stability, or the nature of the problem being solved. All of these are results available through competent numerical analysis, in many cases via utterly classical techniques.

A maxim of verification that is important to emphasize is that the results are a combination of theoretical expectations, the finite resolution and the nature of the problem being solved. All of these factors should be considered in interpreting results.

Before I highlight all of the ways we might make verification a deeper and more valuable investigation, a few other points are worth making about the standards of practice. The first thing to note is the texture within verification, and its two flavors. Code verification is used to investigate the correctness of a code’s implementation. This is accomplished by solving problems with an analytical (exact or nearly-exact) solution. The key is to connect the properties of the method defined by analysis with the observed behavior in the code. The “gold standard” is verifying that the order of convergence observed matches that expected from analysis.
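A minimal sketch of the code verification exercise just described, assuming the linear test problem $u' = \lambda u$, $u(0) = 1$, integrated with forward Euler. The function names (`forward_euler`, `observed_orders`) and the parameter values are illustrative choices, not taken from any particular code. The observed order comes from the ratio of errors, measured against the exact solution, on successively halved steps.

```python
import math


def forward_euler(lam, u0, t_end, n_steps):
    """Integrate u' = lam * u from t = 0 to t_end with n_steps forward Euler steps."""
    h = t_end / n_steps
    u = u0
    for _ in range(n_steps):
        u += h * lam * u
    return u


def observed_orders(lam=-1.0, u0=1.0, t_end=1.0, n_coarse=10, levels=5):
    """Return errors against the exact solution and observed orders under step halving."""
    exact = u0 * math.exp(lam * t_end)
    errors = [
        abs(forward_euler(lam, u0, t_end, n_coarse * 2**k) - exact)
        for k in range(levels)
    ]
    # Observed order between consecutive levels: log2 of the error ratio.
    orders = [math.log2(errors[k] / errors[k + 1]) for k in range(levels - 1)]
    return errors, orders


if __name__ == "__main__":
    errors, orders = observed_orders()
    for k, err in enumerate(errors):
        order = f"{orders[k - 1]:.4f}" if k > 0 else "-"
        print(f"steps = {10 * 2**k:4d}  error = {err:.3e}  observed order = {order}")
```

With these default values the observed order sits slightly above one and drifts toward one as the step shrinks, which is the kind of comparison against theory that the "gold standard" asks for.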

Truth is only relative to those that ignore hard evidence.

― A.E. Samaan

The second flavor of verification is solution (calculation) verification. In solution verification, the objective is to estimate the error in the numerical solution of an applied problem. The error estimate is for the numerical component of the overall error, separated from modeling errors. It is an important component in the overall uncertainty estimate for a calculation. The numerical uncertainty is usually derived from the numerical error estimate. The rate or order of convergence is usually available as an auxiliary output of the process. Properly practiced, the rate of convergence provides context for the overall exercise.
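One common way to carry this out when no exact solution is available is Richardson extrapolation from three solutions on systematically refined meshes. The sketch below is an assumption about how one might do it, not a method prescribed in this post: the name `richardson_estimate` is hypothetical, and the approach presumes monotone convergence with a constant refinement ratio. It returns the error estimate as the primary result and the observed order as the auxiliary one.

```python
import math


def richardson_estimate(f_coarse, f_medium, f_fine, ratio=2.0):
    """Observed order and fine-grid error estimate from three solutions.

    Assumes monotone convergence and a constant refinement ratio between levels.
    """
    d_cm = f_medium - f_coarse
    d_mf = f_fine - f_medium
    if d_cm * d_mf <= 0.0 or abs(d_cm) <= abs(d_mf):
        raise ValueError("no monotone convergence between levels")
    order = math.log(d_cm / d_mf) / math.log(ratio)
    # Signed estimate of (extrapolated solution - fine solution).
    error_fine = d_mf / (ratio**order - 1.0)
    return order, error_fine


if __name__ == "__main__":
    # Stand-in "applied" results: forward Euler for u' = -u at t = 1
    # with 10, 20 and 40 steps, written in closed form as (1 - 1/n)**n.
    solutions = [(1.0 - 1.0 / n) ** n for n in (10, 20, 40)]
    order, error_fine = richardson_estimate(*solutions)
    print(f"observed order = {order:.4f}, estimated fine-grid error = {error_fine:.3e}")
```

The stand-in problem happens to have an exact solution, so the estimate can be checked against the true error; in a real applied calculation that check is not available, which is why the observed order matters as context.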

One of the things to understand is that code verification also contains a complete accounting of the numerical error. This error can be used to compare methods with “identical” orders of accuracy for levels of numerical error, which can be useful in making decisions about code options. By the same token, solution verification provides information about the observed order of accuracy. Because the applied problems are not analytical or smooth enough, they generally can’t be expected to provide the theoretical order of convergence. The rate of convergence is then an auxiliary result of the solution verification exercise, just as the error is an auxiliary result for code verification. It contains useful information on the solution, but it is subservient to the error estimate. Conversely, the error provided in code verification is subservient to the order of accuracy. Nonetheless, the current practice simply scratches the surface of what could be done via verification and its unambiguous ties to numerical analysis.


Little details have special talents in creating big problems!

― Mehmet Murat ildan

If one looks at the fundamental (or equivalence) theorem of numerical analysis, the two aspects of the theorem are stability and consistency, which together imply convergence (https://williamjrider.wordpress.com/2016/05/20/the-lax-equivalence-theorem-its-importance-and-limitations/ ). Verification usually uses a combination of error estimation and convergence testing to imply consistency. Stability is merely assumed. This all highlights the relatively superficial nature of the current practice. The result being tested is completely asymptotic, and the stability is merely assumed and never really strictly tested. Some methods are unconditionally stable, which might also be tested. In all cases, the lack of stress testing of the results of numerical analysis is short-sighted.

One of the most important results in numerical analysis is the stability of the approximation. Failures of stability are one of the most horrific things to encounter in practice. Stability results should be easy and revealing to explore via verification. It also offers the ability to explore what failure of a method looks like, and the sharpness of the estimates of stability. Tests could be devised to examine the stability of a method and confirm this rather fundamental aspect of a numerical method. In addition to confirming this rather fundamental behavior, the character of instability will be made clear if it should arise. Generally, one would expect calculations to diverge under mesh refinement and the instability to manifest itself earlier and earlier as the mesh is refined. I might suggest that stability could be examined via mesh refinement, and observing the conditions where the convergence character changes.

One of the most unpleasant issues with verification is the deviations of the observed rate of convergence from what is expected theoretically. No one seems to have a good answer to how close, is close enough? Sometimes we can observe that we systematically get closer and closer as the mesh is refined. This is quite typical, but systematic

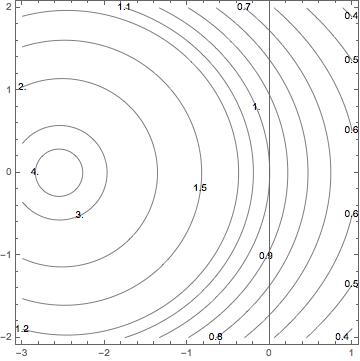

The expected convergence rate for a single time step using forward Euler for a linear ODE

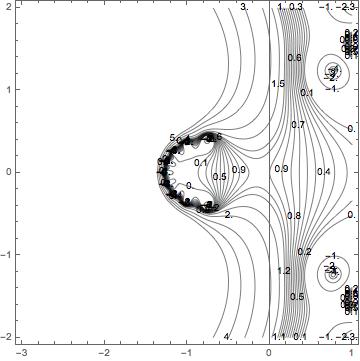

deviations are common. As I will show, the deviations are expected and may be predicted by detailed numerical analysis. The key is to realize that the effects of finite resolution can be included in the analysis. As such for simple problems we can predict the rate of convergence observed and its deviations for the asymptotic rate. Beyond the ability to predict the rate of convergence, this analysis provides a systematic explanation for this oft-seen results.

This can be done very easily using classical methods for numerical analysis (see previous blog post https://williamjrider.wordpress.com/2014/07/15/conducting-von-neumann-stability-analysis/). We can start with the knowledge that detailed numerical analysis uses an analytical solution to the equations as its basis. We can then analyze the deviations from the analytical and their precise character including the finite resolution. As noted in that previous post, the order of accuracy is examined via a series expansion in the limit where the step size or mesh is vanishingly small. We also know that this limit is only approached and never actually reached in any practical calculation.

For the simple problems amenable to these classical analyses, we can derive the exact rate of convergence for a given step size (this result is limited to the ideal problem central to the analysis). The key part of this approach is using the exact solution to the model equation and the numerical symbol to provide an error estimate. Consider the forward Euler method for ODEs applied to the linear model equation $u' = \lambda u$, $u^{n+1} = u^n + h \lambda u^n$; the error after a single step is

$E(h) = \left| e^{h\lambda} - (1 + h\lambda) \right|$.

We can now estimate the error for any step size and analytically estimate the convergence rate we would observe in practice. If we employ the relatively standard practice of mesh halving for verification, we get the estimate of the rate of convergence,

$p(h) = \log\left[ E(h) / E(h/2) \right] / \log 2$, with $E(h/2) = \left| e^{h\lambda} - (1 + h\lambda/2)^2 \right|$.

A key point to remember is that the solution with the halved time step takes twice the number of steps. Using this methodology, we can easily see the impact of finite resolution. For the forward Euler method, we can see that steps larger than zero raise the rate of convergence above the theoretical value of one. This is exactly what we see in practice.
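The estimate above can be evaluated directly. The sketch below assumes the linear model equation $u' = \lambda u$ (consistent with the figure captions) and writes $z = h\lambda$ for the coarse step size times the coefficient; the function name `predicted_rate` and the sampled values of $z$ are illustrative assumptions. The coarse solution takes `n_steps` steps with amplification factor $1 + z$, and the halved solution takes twice as many steps with factor $1 + z/2$.

```python
import math


def predicted_rate(z, n_steps=1):
    """Rate of convergence expected under step halving for forward Euler.

    z = h * lam is the coarse step size times the ODE coefficient, and
    n_steps is the number of coarse steps; the halved run takes 2 * n_steps.
    """
    exact = math.exp(n_steps * z)
    err_coarse = abs(exact - (1.0 + z) ** n_steps)
    err_fine = abs(exact - (1.0 + 0.5 * z) ** (2 * n_steps))
    return math.log2(err_coarse / err_fine)


if __name__ == "__main__":
    for n_steps in (1, 10, 100):
        for z in (-1e-3, -1e-2, -1e-1):
            rate = predicted_rate(z, n_steps)
            print(f"steps = {n_steps:3d}  h*lam = {z:8.1e}  rate = {rate:.4f}")
```

Sweeping `z` over a finer range and plotting `predicted_rate` for 1, 10, and 100 steps is one way to produce curves of the kind the captions describe.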

The expected convergence rate for ten time steps using forward Euler for a linear ODE

When one starts to examine what we expect through analysis, a number of interesting things can be observed. If the coarsest step size is slightly unstable, the method will exhibit very large rates of convergence. Remarkably, we see this all the time. Sometimes verification produces seemingly absurdly high rates of convergence. Rather than indicating that everything is great, such a result indicates that the calculation is highly suspect. The naïve practitioner will often celebrate the absurd result as a triumph when it is actually a symptom of problems requiring greater attention. With the addition of a refined analysis, this sort of result can be seen as pathological.
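This pathology is easy to reproduce by actually running the time stepping. The sketch below assumes $u' = \lambda u$ with $\lambda = -1$, for which forward Euler is stable only when $h \le 2/|\lambda|$; the function names and the particular step sizes are illustrative. A coarse step just past the stability limit, paired with a stable halved step, produces an enormous apparent rate of convergence.

```python
import math


def euler_error(lam, h, n_steps, u0=1.0):
    """Error at t = n_steps * h of forward Euler applied to u' = lam * u."""
    u = u0
    for _ in range(n_steps):
        u += h * lam * u
    return abs(u - u0 * math.exp(lam * h * n_steps))


def halving_rate(lam, h, n_steps):
    """Observed rate from a coarse run and a run with half the step, twice the steps."""
    coarse = euler_error(lam, h, n_steps)
    fine = euler_error(lam, 0.5 * h, 2 * n_steps)
    return math.log2(coarse / fine)


if __name__ == "__main__":
    lam = -1.0  # forward Euler is stable here only for h <= 2 / |lam| = 2
    print("stable coarse step:  ", halving_rate(lam, 0.1, 20))
    print("unstable coarse step:", halving_rate(lam, 2.1, 20))
```

The second number is far larger than any formal order of accuracy could justify, because the coarse solution has grown while the fine solution has decayed; it is a symptom of instability, not of accuracy.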

The expected convergence rate for one hundred time steps using forward Euler for a linear ODE

Recognize immediately that we have obtained a significant result from the analysis of perhaps the simplest numerical method in existence. Think of the untapped capacity for explaining the behavior observed in computational practice. Moreover, this result explains a serious and pernicious problem in verification, the misreading of results. Even where the verification practice is quite good, the deviation of convergence rates from the theoretical rates is pervasive. We can easily see that this is a completely expected behavior that falls utterly in line with expectations. This ought to “bait the hook” for conducting more analysis and connecting it to the verification results.

There is a lot more that could be done here; I’ve merely scratched the surface.

The truth of the story lies in the details.

― Paul Auster

ted in the shadows for years and years as one of Hollywood’s worst kept secrets. Weinstein preyed on women with virtual impunity with his power and prestige acting to keep his actions in the dark. The promise and threat of his power in that industry gave him virtual license to act. The silence of the myriad of insiders who knew about the pattern of abuse allowed the crimes to continue unabated. Only after the abuse came to light broadly and outside the movie industry did the unacceptability arise. When the abuse stayed in the shadows, and its knowledge limited to industry insiders, it continued.

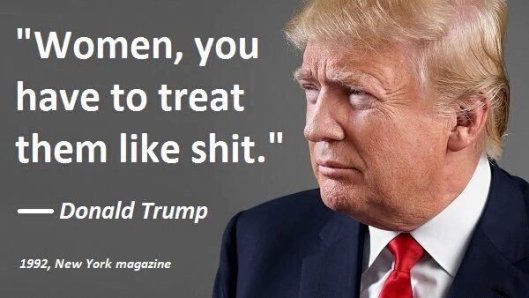


Our current President is a serial abuser of power. Whether it be the legal system, women, business associates or the American people, his entire life is constructed around abuse of power and the privileges of wealth. Many people are his enablers, and nothing enables it more than silence. Like Weinstein, his sexual misconducts are many and well known, yet routinely go unpunished. Others either remain silent or ignore and excuse the abuse as being completely normal.

ower and ability to abuse it. They are an entire collection of champion power abusers. Like all abusers, they maintain their power through the cowering masses below them. When we are silent their power is maintained. They are moving to squash all resistance. My training was pointed at the inside of the institutions and instruments of government where they can use “legal” threats to shut us up. They have waged an all-out assault against the news media. Anything they don’t like is labeled as “fake news” and attacked. The legitimacy of facts has been destroyed, providing the foundation for their power. We are now being threatened in order to cut off the supply of facts to base resistance upon. This training was the act of people wanting to rule like dictators in an authoritarian manner.


the set-up is perfect. They are the wolves and we, the sheep, are primed for slaughter. Recent years have witnessed an explosion in the amount of information deemed classified or sensitive. Much of this information is controlled because it is embarrassing or uncomfortable for those in power. Increasingly, information is simply hidden based on non-existent standards. This is a situation that is primed for abuse of power. People in positions of power can hide anything they don’t like. For example, something bad or embarrassing can be deemed to be proprietary or business-sensitive, and buried from view. Here the threats come in handy to make sure that everyone keeps their mouths shut. Various abuses of power can now run free within the system without risk of exposure. Add a weakened free press and you’ve created the perfect storm.

him. No one even asks the question, and the abuse of power goes unchecked. Worse yet, it becomes the “way things are done”. This takes us full circle to the whole Harvey Weinstein scandal. It is a textbook example of unchecked power, and the “way we do things”.



oo often we make the case that their misdeeds are acceptable because of the power they grant to your causes through their position. This is exactly the bargain Trump makes with the right wing, and Weinstein made with the left.

I’d like to be independent, empowered, and passionate about work, and I definitely used to be. Instead I find that I’m generally disempowered, compliant, and despondent these days. The actions that manage us have this effect, sending the clear message that we are not in control; we are to be controlled, and our destiny is determined by our subservience. With the national environment headed in this direction, institutions like our National Labs cannot serve their important purpose. The situation is getting steadily worse, but as I’ve seen there is always somewhere worse. By the standards of most people I still have a good job with lots of perks and benefits. Most might tell me that I’ve got it good, and I do, but I’ve never been satisfied with such mediocrity. The standard of “it could be worse” is simply an appalling way to live. The truth is that I’m in a velvet cage. This is said with the stark realization that the same forces are dragging all of us down. Just because I’m relatively fortunate doesn’t mean that the situation is tolerable. The quip that things could be worse is simply a way of accepting the intolerable.


beings (people) into a hive where their basic humanity and individuality is lost. Everything is controlled and managed for the good of the collective. Science Fiction is an allegory for society, and the forces of depersonalized control embodied by the Borg have only intensified in our world. Even people working in my chosen profession are under the thrall of a mindless collective. Most of the time it is my maturity and experience as an adult that is called upon. My expertise and knowledge should be my most valuable commodity as a professional, yet they go unused and languishing. They come to play in an almost haphazard catch-what-catch-can manner. Most of the time it happens when I engage with someone external. It is never planned or systematic. My management is much more concerned about me being up on my compliance training than productively employing my talents. The end result is the loss of identity and sense of purpose, so that now I am simply the ninth member of the bottom unit of the collective, 9 of 13.

actually manage the work going on and the people doing the work. They are managing our compliance and control, not the work; the work we do is a mere afterthought that increasingly does not need me; any competent person would do. At one time work felt good and important with a deep sense of personal value and accomplishment. Slowly and surely this sense is being undermined. We have gone on a long slow march away from being empowered and valued as contributing individuals. Today we are simply ever-replicable cogs in a machine that cannot tolerate a hint of individuality or personality.

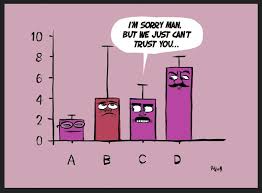


great, and I believe in it. Management should be the art of enabling and working to get the most out of employees. If the system was working properly this would happen. For some reason society has removed its trust for people. Our systems are driven and motivated by fear. The systems are strongly motivated to make sure that people don’t fuck up. A large part of the overhead and lack of empowerment is designed to keep people from making mistakes. A big part of the issue is the punishment meted out for any fuck ups. Our institutions are mercilessly punished for any mistakes. Honest mistakes and failures are met with negative outcomes and a lack of tolerance. The result is a system that tries to defend itself through caution, training and control of people. Our innate potential is insufficient justification for risking the reaction a fuck up might generate. The result is an increasingly meek and subdued workforce unwilling to take risks because failure is such a grim prospect.

The same thing is happening to our work. Fear and risk are dominating our decision-making. Human potential, talent, productivity, and lives of value are sacrificed at the altar of fear. Caution has replaced boldness. Compliance has replaced value. Control has replaced empowerment. In the process work has lost meaning and the ability for an individual to make a difference has disappeared. Resistance is futile, you will be assimilated.
