If you had to identify, in one word, the reason why the human race has not achieved, and never will achieve, its full potential, that word would be “meetings.”

― Dave Barry

Meetings. Meetings. Meetings. Meetings suck. Meetings are awful. Meetings are soul-sucking time wasters. Meetings are a good way to “work” without actually working. Meetings absolutely deserve the bad rap they get. Most people think that meetings should be abolished. One of the most dreaded workplace events is a day that is completely full of meetings. These days invariably feel like complete losses, draining all productive energy from what ought to be a day full of promise. I say this as an unabashed extrovert, knowing that the introvert is going to feel overwhelmed by the prospect.

Meetings are a symptom of bad organization. The fewer meetings the better.

– Peter Drucker

All of this is true, and yet meetings are important, even essential, to a properly functioning workplace. As such, fixing meetings deserves real effort, along with minimizing the unnecessary time spent in them. Meetings are a vital humanizing element in collective, collaborative work. Deep engagement with people is enriching, educational, and necessary for fulfilling work. Making meetings better would produce immense benefits in quality, productivity and satisfaction in work.

Meetings are at the heart of an effective organization, and each meeting is an opportunity to clarify issues, set new directions, sharpen focus, create alignment, and move objectives forward.

― Paul Axtell

If there is one thing that unifies people at work, it is meetings, and how much we despise them. Workplace culture is full of meetings and most of them are genuinely awful. Poorly run meetings are a veritable plague in the workplace. Meetings are also an essential human element in work, and work is a completely human and social endeavor. A large part of the problem is the relative difficulty of running a meeting well, which exceeds the talent and will of most people (managers). It is actually very hard to do this well. We have now gotten to the point where all of us almost reflexively expect a meeting to be awful and plan accordingly. For my own part, I take something to read, or my computer to do actual work, or fall back on the old standby of passing time (i.e., fucking off) on my handy dandy iPhone. I’ve even resorted to the newest meeting pastime of texting another meeting attendee to talk about how shitty the meeting is. All of this can be avoided by taking meetings more seriously and crafting time that is well spent. If this can’t be done, the meeting should be cancelled until it can be.

The least productive people are usually the ones who are most in favor of holding meetings.

― Thomas Sowell

There are a few uniform things that can be done to improve the impact of meetings on the workplace. If a meeting is mandatory, it will almost surely suck. It will almost always suck hard. No meeting should ever be mandatory, ever. When people are forced to attend, those running the meeting have no reason to make it enjoyable, useful or engaging. They are not competing for your time, and this allows your time to be abused. A meeting should always be trying to make you want to be there, and honestly compete for your time. A fundamental notion that makes all meetings better is a strong sense that you know why you are at a meeting, and how you are participating. There is no reason to attend a meeting where you passively absorb information without any active role. If this is the only way to get the information, it highlights deeper problems that are all too common! Everyone should have an active role in the meeting’s life. If someone is not active, they probably don’t need to be there.

Meetings at work present great opportunities to showcase your talent. Do not let them go to waste.

― Abhishek Ratna

There are a lot of types of meetings, and generally speaking all of them are terrible. None of them really have to be awful, but they are. Some of the reasons reflect tremendously deep issues with the modern workplace. It is only a small overreach to say that better meetings would go a huge distance to improve the average workplace and provide untold benefits in terms of productivity and morale. So, to set the stage, let’s talk about the general types of meetings that most of us encounter:

- Conferences, Talks and symposiums

- Informational Meetings

- Organizational Meetings

- Project Meetings

- Reviews

- Phone, Skype, Video Meetings

- Working meetings

- Training Meetings

All of these meetings can stand some serious improvement that would have immense benefits.

Meetings are indispensable when you don’t want to do anything.

–John Kenneth Galbraith

The key common step to a good meeting is planning and attention to the value of people’s time. Part of the planning is a commitment to engagement with the meeting attendees. Do those running the meeting know how to convert the attendees to participants? Part of the meeting is engaging people as social animals and building connections and bonds. The worst thing is a meeting that a person attends solely because they are supposed to be there. Too often our meetings drain energy and make people feel utterly powerless. A person should walk out of a meeting energized and empowered. Instead, meetings are energy- and morale-sucking machines. A large part of the meeting’s benefit should be a feeling of community and bonding with others. Collaborations and connections should arise naturally from a well-run meeting. All of this seems difficult and it is, but anything less does not honor the time of those attending and the great expense their time represents. In the end, the meeting should be a valuable expenditure of time. More than simply valuable, the meeting should produce something better: a stronger human connection and common purpose among all those attending. If the meeting isn’t a better expenditure of people’s time, it probably shouldn’t happen.

A meeting consists of a group of people who have little to say – until after the meeting.

― P.K. Shaw

Conferences, Talks and symposiums. This is a form of meeting that generally works pretty well. The conference has a huge advantage as a form of meeting. Time spent at a conference is almost always time well spent. Even at its worst, a conference should be a banquet of new information and exposure to new ideas. Of course, conferences can be done very poorly and the benefits can be undermined by poor execution and lack of attention to detail.

Conversely, a conference’s benefits can be magnified by careful and professional planning and execution. One way to augment a conference significantly is to find really great keynote speakers to set the tone, provide energy and engage the audience. A thoughtful and thought-provoking talk delivered by an expert who is a great speaker can propel a conference to new heights and send people away with renewed energy. Conferences can also go to greater lengths to make the format and approach welcoming to greater audience participation, especially getting the audience to ask questions and stay awake and aware. It’s too easy to tune out these days with a phone or laptop. Good timekeeping and attention to the schedule are another way of making a conference work to the greatest benefit. This means staying on time and on schedule. It means paying attention to scheduling so that the best talks don’t compete with each other if there are multiple sessions. It means not letting a speaker filibuster through the Q&A period. All of these maxims hold for a talk given during work hours, just on a smaller and more specific scale. There the setting, the time of the talk and the timekeeping all help to make the experience better. Another hugely beneficial aspect of meetings is food and drink. Sharing food or drink at a meeting is a wonderful way for people to bond and seek greater depth of connection. This sort of engagement can help to foster collaboration and greater information exchange. It engages with the innate human social element that meetings should foster (I will note that my workplace has mostly outlawed food and drink, helping to make our meetings suck more uniformly). Too often the aspects of a talk or conference that would make the great expense of people’s time worthwhile are skimped on, undermining and diminishing the value.

Highly engaged teams have highly engaged leaders. Leaders must be about presence not productivity. Make meetings a no phone zone.

― Janna Cachola

Informational Meetings. The informational meeting is one of the worst abuses of people’s time. Lots of these meetings are mandatory, and force people to waste time witnessing evidence of what kind of shit show they are part of. This is very often a one-way exchange where people are expected to just sit and absorb. The information content is often poorly packaged and ham-handed in delivery. The talks usually are humorless and lack any soul. The sins are all compounded by a general lack of audience engagement. Their greatest feature is that they are a really good and completely work-appropriate way to waste time. You are at work and not working at all. You aren’t learning much either; it is almost always some sort of management BS delivered in a politically correct manner. Most of the time the best option is to completely eliminate these meetings. If these meetings are held, those conducting them should put some real effort into making them worthwhile and valuable. They should seek a format that engages the audience and encourages genuine participation.

When you kill time, remember that it has no resurrection.

― A.W. Tozer

Organizational Meetings. The informational meeting’s close relative is the organizational meeting. Often this is an informational meeting in disguise. This sort of meeting is called for an organization of some size to get together and hear the management give them some sort of spiel. These meetings happen at various organizational levels and almost all of them are awful. Time-wasting drivel is the norm. Corporate or organizational policies, work milestones, and cheesy awards abound. Since this meeting is more personal than the pure informational meeting, there is some soul and benefit to it. The biggest sin in these meetings is the faux engagement. Do the managers running these meetings really want questions, and are they really listening to the audience? Will they actually do anything with the feedback? More often than not, the questions and answers are handled professionally, then forgotten. The management generally has no interest in really hearing people’s opinions and doing anything with their views; it is mostly a hollow feel-good maneuver. Honest and genuine engagement is needed, and these days management needs to prove that it’s more than just a show.

People who enjoy meetings should not be in charge of anything.

― Thomas Sowell

Project Meetings. In many places this is the most common meeting type. It also tends to be one of the best meeting types, where everyone is active and participating. The meeting involves people working to common ends and promotes genuine connection between efforts. These can take a variety of forms, such as the stand-up meeting where everyone participates by construction. An important function of the project meeting is active listening. While this form of meeting tends to be good, it still needs planning and effort to keep it positive. If the project meeting is not good, it probably reflects quite fully on the project itself. Some sort of restructuring of the project is a cure. What are the signs that a project meeting is bad? If lots of people are sitting like potted plants and not engaged with the meeting, the project is probably not healthy. The project meeting should be time well spent; if people aren’t engaged, they should be doing something else.

Integrity is telling myself the truth. And honesty is telling the truth to other people.

― Spencer Johnson

Reviews. A review meeting is akin to a project meeting, but has an edge that makes it worse. Reviews often teem with political context and fear. A common form involves a project team, reviewers and stakeholders. The project team presents work to the reviewers, and if things are working well, the reviewers ask lots of questions. The stakeholders sit nervously and watch, rarely participating. The spirit of the review is the thing that determines whether the engagement is positive and productive. The core around which its value revolves is honesty and trust. If honesty and trust are high, those being reviewed are forthcoming and their work is presented in a way where everyone learns and benefits. If the reviewers are confident in their charge and role, they can ask probing questions and provide value to the project and the stakeholders. Under the best of circumstances, the audience of stakeholders can be profitably engaged in deepening the discussion, and themselves learn greater context for the work. Too often, the environment is so charged that honesty is not encouraged, and the project team tends to hide unpleasant things. If reviewers do not trust the reception for a truly probing and critical review, they will pull their punches and the engagement will be needlessly and harmfully moderated. An anxious and uninvolved audience is a sign that neither trust nor honesty is present.

I think there needs to be a meeting to set an agenda for more meetings about meetings.

― Jonah Goldberg

Phone, Skype, Video Meetings. These meetings are convenient and often encouraged as part of a cost-saving strategy. Because of the nature of the medium these meetings are often terrible. Most often they turn into a series of monologues, usually best suited for reporting work. Such meetings are rarely good places to hear about work. This comes from two truths: the people on the phone are often disengaged, listening while attending to other things, and it is difficult to participate in any dynamic discussion; it happens, but it is rare. Most of the content is limited to the spoken word, and lacks body language and visual content. The result is much less information being transmitted, along with a low bandwidth of listening. For the most part these meetings should be done away with. If someone has something really interesting and very timely it might be useful, but only if we are sure the audience is paying real attention. Without dynamic participation one cannot be sure that attention is actually being paid.

Working meetings. These are the best meetings, hands down. They are informal, voluntary and dynamic. The people are there because they want to get something done that requires collaboration. If other types of meetings could incorporate the approach and dynamic of a working meeting, all of them would improve dramatically. Quite often these meetings are deep on communication and low on hierarchical transmission. Everyone in the meeting is usually engaged and active. People are rarely passive. They are there because they want to be there, or they need to be there. In many ways all meetings could benefit mightily from examining working meetings and adopting their characteristics more broadly.

Training Meetings. Meetings used to conduct training are as common as they are bad. These meetings could be improved greatly by adopting the principles of education. A good training is educational. Again, dynamic, engaged meeting attendees are a benefit. If they are viewed as students, good outcomes can be had. Far too often the training is delivered in a hollow mandatory tone that provides little real value for those receiving it. We have a lot of soulless compliance training that simply pollutes the workplace with time wasting. Compliance is often associated with hot-button issues where the organization has no interest in engaging the employees. They are simply forced to do things because those in power say so. A real discussion on this sort of training is likely to be difficult, confrontational and apt to cast doubt. It is easier to passively waste people’s time and get it over with. This attitude is some blend of mediocrity and cowardice that has a corrosive impact on the workplace.

One source of frustration in the workplace is the frequent mismatch between what people must do and what people can do. When what they must do exceeds their capabilities, the result is anxiety. When what they must do falls short of their capabilities, the result is boredom. But when the match is just right, the results can be glorious. This is the essence of flow.

― Daniel H. Pink

Better meetings are a mechanism through which our workplaces have an immense ability to improve. A broad principle is that a meeting needs to have a purpose and desired outcome that is well known and communicated to all participants. The meeting should engage everyone attending, and no one should be a potted plant or have their attention elsewhere. Everyone’s time is valuable and expensive, so the meeting should be structured and executed in a manner fitting its costs. A simple way of testing the waters is to gauge people’s attitudes toward the meeting and whether they are positive or negative. Do they want to go? Are they looking forward to it? Do they know why the meeting is happening? Is there an outcome that they are invested in? If these questions are answered honestly, those calling the meeting will know a lot and they should act accordingly.

The cure for bad meetings is recognition of their badness, and a commitment to making the effort necessary to improve them. Few things have a greater capacity to make the workplace better and more productive, and to improve morale.

When employees feel valued, and are more productive and engaged, they create a culture that can truly be a strategic advantage in today’s competitive market.

― Michael Hyatt

It is time to return to great papers of the past. The past has clear lessons about how progress can be achieved. Here, I will discuss a trio of papers that came at a critical juncture in the history of numerically solving hyperbolic conservation laws. In a sense, these papers were nothing new, but provided a systematic explanation and skillful articulation of the progress at that time. In a deep sense these papers represent applied math at its zenith, providing a structural explanation along with proof to accompany progress made by others. These papers helped mark the transition of modern methods from heuristic ideas to broad adoption and common use. Interestingly, the depth of applied mathematics ended up paving the way for broader adoption in the engineering world. This episode also provides a cautionary lesson about what holds higher order methods back from broader acceptance, and the relatively limited progress since.

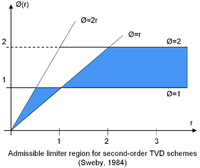

What Sweby did was provide a wonderful narrative description of TVD methods, and a graphical manner to depict them. In the form that Sweby described, TVD methods were a nonlinear combination of classical methods: upwind, Lax-Wendroff and Beam-Warming. The limiter was drawn out of the formulation and parameterized by the ratio of local finite differences. The limiter is a way to take an upwind method and modify it with some part of the selection of second-order methods and satisfy the inequalities needed to be TVD. This technical specification took the following form, $ C_{j-1/2} = \nu \left( 1 + \frac{1}{2}\nu(1-\nu) \phi\left(r_{j-1/2}\right) \right) $ and
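As a rough illustration of the limiter idea described above, here is a minimal Python sketch for linear advection with positive wave speed on a periodic grid. It uses the common textbook flux-limited form (an upwind flux plus a limited second-order correction) rather than the exact notation of Sweby's paper. The function names `minmod`, `limited_step` and `total_variation` are illustrative choices of mine, and `nu` is assumed to be the CFL number with 0 <= nu <= 1. In this form, a limiter value of 0 recovers upwind, 1 recovers Lax-Wendroff, and phi(r) = r recovers Beam-Warming.

```python
def minmod(r):
    """Minmod limiter phi(r) = max(0, min(1, r)); it lies inside the TVD region."""
    return max(0.0, min(1.0, r))


def limited_step(u, nu, phi):
    """Advance u_t + a u_x = 0 (a > 0) by one step on a periodic grid.

    nu is assumed to be the CFL number a*dt/dx, with 0 <= nu <= 1.
    phi is the limiter, a function of the ratio r of consecutive differences.
    """
    n = len(u)
    flux = []
    for j in range(n):
        du_right = u[(j + 1) % n] - u[j]   # u[j+1] - u[j]
        du_left = u[j] - u[j - 1]          # u[j] - u[j-1], periodic via u[-1]
        # Ratio of local differences; the correction vanishes when du_right == 0,
        # so the value chosen for r in that case does not matter.
        r = du_left / du_right if du_right != 0.0 else 0.0
        # Flux at j+1/2 (divided by a): upwind value plus limited correction.
        flux.append(u[j] + 0.5 * (1.0 - nu) * phi(r) * du_right)
    return [u[j] - nu * (flux[j] - flux[j - 1]) for j in range(n)]


def total_variation(u):
    """Discrete total variation on a periodic grid."""
    return sum(abs(u[j] - u[j - 1]) for j in range(len(u)))


# Usage: advect a square wave and compare total variation before and after.
square = [1.0 if 10 <= j < 20 else 0.0 for j in range(40)]
advected = square
for _ in range(30):
    advected = limited_step(advected, 0.5, minmod)
print(total_variation(square), total_variation(advected))
```

Swapping `minmod` for `lambda r: 1.0` gives unlimited Lax-Wendroff, which is a convenient way to see the oscillations that the limiter is there to suppress.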

My own connection to this work is a nice way of rounding out this discussion. When I started looking at modern numerical methods, I started to look at the selection of approaches. FCT was the first thing I hit upon and tried. Compared to the classical methods I was using, it was clearly better, but its lack of theory was deeply unsatisfying. FCT would occasionally do weird things. TVD methods had the theory and this made them far more appealing to my technically immature mind. After the fact, I tried to project FCT methods onto the TVD theory. I wrote a paper documenting this effort. It was my first paper in the field. Unknowingly, I walked into a veritable minefield and complete shit show. All three of my reviewers were very well-known contributors to the field (I know it is supposed to be anonymous, but the shit show that unveiled itself unveiled the reviewers too).

exaggeration to say that getting funding for science has replaced the conduct and value of that science today. This is broadly true, and particularly true in scientific computing where getting something funded has replaced funding what is needed or wise. The truth of the benefit of pursuing computer power above all else is decided upon a priori. The belief was that this sort of program could “make it rain” and produce funding because this sort of marketing had in the past. All results in the program must bow to this maxim, and support its premise. All evidence to the contrary is rejected because it is politically incorrect and threatens the attainment of the cargo, the funding, the money. A large part of this utterly rotten core of modern science is the ascendency of the science manager as the apex of the enterprise. The accomplished scientist and expert is now merely a useful and necessary detail; the manager reigns as the peak of achievement.

In this putrid environment, faster computers seem an obvious benefit to science. They are a benefit and pathway to progress; this is utterly undeniable. Unfortunately, it is an expensive and inefficient path to progress, and an incredibly bad investment in comparison to the alternatives. The numerous problems with the exascale program are subtle, nuanced, highly technical and pathological. As I’ve pointed out before, the modern age is no place for subtlety or nuance; we live in an age of brutish simplicity where bullshit reigns and facts are optional. In such an age, exascale is an exemplar: it is a brutally simple approach tailor-made for the ignorant and witless. If one is willing to cast away the cloak of ignorance and embrace subtlety and nuance, a host of investments can be described that would benefit scientific computing vastly more than the current program. If we followed a better balance of research, computing could contribute far more to science and scale far greater heights than the current path provides.

Today supercomputing is completely at odds with the commercial industry. After decades of first pacing advances in computing hardware, then riding along with increases in computing power, supercomputing has become separate. The separation occurred when Moore’s law died at the chip level (in about 2007). The supercomputing world has become increasingly desperate to continue the free lunch, and tied to an outdated model for delivering results. Basically, supercomputing is still tied to the mainframe model of computing that died in the business World long ago. Supercomputing has failed to embrace modern computing with its pervasive and multiscale nature, moving all the way from mobile to cloud.

Expansive uncertainty quantification – too many uncertainties are ignored rather than considered and addressed. Uncertainty is a big part of V&V, a genuinely hot topic in computational circles, and practiced quite incompletely. Many view uncertainty quantification as only a small set of activities that address a small piece of the uncertainty question. Too much benefit is achieved by simply ignoring a real uncertainty, because the value of zero that is implicitly assumed is not challenged. This is exacerbated significantly by a half-funded and deemphasized V&V effort in scientific computing. Significant progress was made several decades ago, but the signs now point to regression. The result of this often willful ignorance is a lessening of the impact of computing and a limiting of its true benefits.
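
One way to see the “implicit zero” at work is a toy uncertainty budget. The sketch below is an illustration only: the three sources, their values, and the assumption that they are independent and combine in quadrature (root-sum-square) are all hypothetical choices made here, not numbers from any real V&V study.

```python
import math


def combined_uncertainty(components):
    """Root-sum-square of independent standard uncertainties (an assumed model)."""
    return math.sqrt(sum(u * u for u in components.values()))


# Hypothetical uncertainty budget for one simulated quantity (same units throughout).
budget = {
    "numerical_error": 0.03,
    "model_form": 0.04,
    "input_parameters": 0.12,
}

full = combined_uncertainty(budget)

# "Ignoring" a source is the same as asserting that it contributes exactly zero.
ignored = dict(budget, input_parameters=0.0)
reported = combined_uncertainty(ignored)

print(f"all sources considered:   {full:.2f}")
print(f"largest source ignored:   {reported:.2f}")
```

With these made-up numbers, dropping the largest source cuts the reported uncertainty from about 0.13 to about 0.05, which looks like progress but is only the unchallenged zero.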

progress are the computer codes. Old computer codes are still being used, and most of them use operator splitting. Back in the 1990s a big deal was made regarding replacing legacy codes with new codes. The codes developed then are still in use, and no one is replacing them. The methods in these old codes are still being used, and now we are told that the codes need to be preserved. The codes, the models, the methods and the algorithms all come along for the ride. We end up having no practical route to advancing the methods.

Complete code refresh – we have produced, and are now maintaining, a new generation of legacy codes. A code is a repository for vast stores of knowledge in modeling, numerical methods, algorithms, computer science and problem solving. When we fail to replace codes, we fail to replace knowledge. The knowledge comes directly from those who write the code and create the ability to solve useful problems with that code. Much of the methodology for problem solving is complex and problem specific. Ultimately a useful code becomes something that many people are deeply invested in. In addition, the people who originally wrote the code move on, taking their expertise, history and knowledge with them. The code becomes an artifact of this knowledge, but it is also a deeply imperfect reflection of the knowledge. The code usually contains some techniques that are magical and unexplained. These magic bits of code are often essential for success. If they get changed, the code ceases to be useful. The result of this process is a deep loss of the expertise and knowledge that arise from creating a code that can solve real problems. If a legacy code continues to be used, it also acts to block progress on all the things it contains, starting with the model and its fundamental assumptions. As a result, progress stops because even when there are research advances, they have no practical outlet. This is where we are today.

Democratization of expertise – the manner in which codes are applied has a very large impact on solutions. The overall process is often called a workflow, encapsulating activities starting with problem conception and continuing through meshing, modeling choices, code input, code execution, data analysis and visualization. One of the problems that has arisen is the use of codes by non-experts. Increasingly, code users are simply not sophisticated and treat codes like black boxes. Many refer to this as the democratization of the simulation capability, which is generally beneficial. On the other hand, we increasingly see calculations conducted by novices who are generally ignorant of vast swaths of the underlying science. This characteristic is keenly related to a lack of V&V focus and loose standards of acceptance for calculations. Calibration is becoming more prevalent again, and distinctions between calibration and validation are vanishing anew. The creation of broadly available simulation tools must be coupled to first-rate practices and appropriate professional education. In both of these veins the current trends are completely in the wrong direction. V&V practices are in decline and recession. Professional education is systematically getting worse as the educational mission of universities is attacked and diminished, along with the role of elites in society.


Last week I tried to envision a better path forward for scientific computing. Unfortunately, a truly better path flows invariably through a better path for science itself and the Nation as a whole. Ultimately scientific computing, and science more broadly, is dependent on the health of society in the broadest sense. It also depends on leadership and courage, two other attributes we are lacking in almost every respect. Our society is not well; the problems we are confronting are deep and perhaps the most serious crisis since the Civil War. I believe that historians will look back on 2016-2018, and perhaps longer, as the darkest period in American history since the Civil War. We can’t build anything great when the Nation is tearing itself apart. I hope and pray that it will be resolved before we plunge deeper into the abyss in which we find ourselves. We see the forces opposed to knowledge, progress and reason emboldened and running amok. The Nation is presently moving backward and embracing a deeply disturbing and abhorrent philosophy. In such an environment science cannot flourish; it can only survive. We all hope the darkness will lift and we can again move forward toward a better future, one with purpose and meaning where science can be a force for the betterment of society as a whole.

It would really be great to be starting 2018 feeling good about the work I do. Useful work that impacts important things would go a long way toward achieving this. I’ve put some thought into what might constitute work having these properties. This has two parts: what work would be useful and impactful in general, and what would be important to contribute to. A necessary subtext to this conversation is the conclusion that most of the work we are doing in scientific computing today is neither useful nor impactful, and nothing important is at stake. This alone is a rather bold assertion. Simply put, as a Nation and society we are not doing anything aspirational, nothing big. This shows up in the lack of substance in the work we are paid to pursue. More deeply, I believe that if we did something big and aspirational, the utility and impact of our work would simply sort itself out as part of a natural order.

The march of science in the 20th Century was deeply impacted by international events: two World Wars and a Cold (non) War that spurred National interests in supporting science and technology. The twin projects of the atom bomb and the nuclear arms race, along with space exploration, drove the creation of much of the science and technology we have today. These conflicts steeled resolve and purpose, and granted the resources needed for success. They were important enough that efforts were earnest. Risks were taken because risk is necessary for achievement. Today we don’t take risks because nothing important is at stake. We can basically fake results and market progress where little or none exists. Since nothing is really that essential, bullshit reigns supreme.

resistance was not real. Ironically, the Soviets were ultimately defeated by bullshit. The Strategic Defense Initiative, or Star Wars, bankrupted the Soviets. It was complete bullshit and never had a chance to succeed. This was a brutal harbinger of today’s World, where reality is optional and marketing is the coin of the realm. Today American power seems unassailable. This is partially true and partially over-confidence. We are not on our game at all, and far too much of our power is based on bullshit. As a result, we can basically just pretend to try, and not actually execute anything with substance and competence. This is where we are today; we are doing nothing important, and wasting lots of time and money in the process.

The result of the current model is a research establishment that only goes through the motions and does little or nothing. We make lots of noise and produce little substance. Our nation deeply needs a purpose that is greater. There are plenty of worthier National goals. If war-making is needed, Russia and China are still worthy adversaries. For some reason, we have chosen to capitulate to Putin’s Russia simply because they are an ally against the non-viable threat of Islamic fundamentalism. This is a completely insane choice that is only rhetorically useful. If we want peaceful goals, there are challenges aplenty. Climate change and weather are worthy problems to tackle, requiring both scientific understanding and societal transformation to conquer. Creating clean and renewable energy that does not create horrible environmental side-effects remains an unsolved problem. Meeting the international need for food and prosperity for mankind is always there. Scientific exploration, and particularly space, remains an unconquered frontier. Medicine and genetics offer new vistas for scientific exploration. All of these areas could transform the Nation in broad ways socially and economically. All of these could meet broad societal needs. More to the point of my post, all need scientific computing in one form or another to fully succeed. Computing always works best as a useful tool employed to help achieve objectives in the real World. The real-World problems provide constraints and objectives that spur innovation and keep the enterprise honest.

Instead, our scientific computing is being applied as a shallow marketing ploy to shore up a vacuous program. Nothing really important or impactful is at stake. The applications for computing are mostly make-believe and amount to nothing of significance. The marketing will tell you otherwise, but the lack of gravity for the work is clear and poisons the work. The result of this lack of gravity is phony goals and objectives that have the look and feel of impact, but contribute nothing toward an objective reality. This lack of contribution comes from the deeper malaise of purpose as a Nation, and of science’s role as an engine of progress. With little or nothing at stake, the tools used for success suffer; scientific computing is no different. The standards of success simply are not real, and lack teeth. Even stockpile stewardship is drifting into the realm of bullshit. It started as a worthy program, but over time it has been allowed to lose its substance. Political and financial goals have replaced science and fact, and the goals of the program are losing their connection to objective reality.

We would still be chasing faster computers, but the faster computers would not be the primary focus. We would focus on using computing to solve problems that were important. We would focus on making computers that were useful first and foremost. We would want computers that were faster as long as they enabled progress on problem solving. As a result, efforts would be streamlined toward utility. We would not throw vast amounts of effort into making computers faster just to make them faster (this is what is happening today; there is no rhyme or reason to exascale other than, faster is like better, Duh!). Utility means that we would honestly look at what is limiting problem solving and put our efforts into removing those limits. The effects of this dose of reality on our current efforts would be stunning; we would see a wholesale change in our emphasis and focus away from hardware. Computing hardware would take its proper role as an important tool for scientific computing and no longer be the driving force. The fact that hardware is a driving force for scientific computing is one of the clearest indicators of how unhealthy the field is today.

The current computing focus is only on porting old codes to new computers, a process that keeps old models, methods and algorithms in place. This is one of the most corrosive elements in the current mix. The porting of old codes is the utter abdication of intellectual ownership. These old codes are scientific dinosaurs and act to freeze antiquated models, methods and algorithms in place while acting to squash progress. Worse yet, the skillsets necessary for improving the most valuable and important parts of modeling and simulation are allowed to languish. This is worse than simply choosing a less efficient road; this is going backwards. When we need to turn our attention to serious real work, our scientists will not be ready. These choices are dooming an entire generation that could have been making breakthroughs to simply become caretakers. To be proper stewards of our science, we need to write new codes containing new models using new methods and algorithms. Porting codes turns our scientists into mindless monks simply transcribing sacred texts without any depth of understanding. It is a recipe for transforming our science into magic. It is the recipe for defeat and the passage away from the greatness we once had.
