White privilege is an absence of the consequences of racism. An absence of structural discrimination, an absence of your race being viewed as a problem first and foremost.

― Reni Eddo-Lodge

Every Monday I stare at a blank page and write. Sometimes the topic is obvious, with work demands driving my thoughts in a very focused direction. I’d love to be there right now. Work is pissing me off, and providing no such inspiration, only anger. It’s especially unfortunate because I ought to have something to say on differential equations. Last week’s post was interesting, and the feedback has provided wonderful topics to pick up. One comment focused on other constraints and invariants for modeling that equations commonly violate. A wonderful manager I had from Los Alamos argued with me about nonlinearity, and integral laws with respect to hyperbolic PDEs and causality. Both of these topics are worthy of deep exploration, but my head isn’t there. The issue of societal inclusivity and the maintenance of power structures looms in my thinking. The acceptance of individuality and personal differences within our social structures is another theme that resonates. So, I take a pause from technical topics to ponder the ability of our modern world to honor and harness the life work and experience of others, especially those who are different. The gulf between our leaders’ rhetoric and actions is vast, leading to poor results and the maintenance of traditional power.

Why am I concerned?

It does strange things to you to realize that the conservative establishment is forcing you to be a progressive liberal fighter for universal rights.

― Brandon Sanderson

This whole topic might seem odd coming from me. I am a middle-aged white man who is a member of the intellectual elite. I’m not a racial minority, a foreigner or part of the LGBTQ community, although I have a child who is. Of course, my (true) identity is far more complex than that. For example, my personality is an outlier at work. It makes many of my co-workers extremely uncomfortable. I’m an atheist and this makes people of faith uncomfortable. Other aspects of my life remain hidden too; maybe they aren’t germane; maybe they simply would make people uncomfortable; maybe they would result in implicit shunning and rejection. All of this runs against the theme of inclusion and diversity, which society as a whole and our institutions pay lip service to. In the backdrop of this lip service are entrenched behaviors, practices and power that undermine inclusion at every turn. Many forms of diversity have no protection at all, and the power structures attack them without relief. The talk is all about diversity and inclusion; the actions are all about exclusivity. Without genuine leadership and commitment, diversity and inclusion fail. Worse yet, a great deal of our leadership has moved to explicit attacks on diversity as a social ill.

A great democracy has got to be progressive or it will soon cease to be great or a democracy.

― Theodore Roosevelt

Let’s pull this thread a bit with respect to the obvious diversity that we see highlighted societally: race, gender, and sexual identity. These groups are protected legally and are the focus of most of our diversity efforts. The discrimination against these groups of people has been pervasive and chronic. Moreover, there are broad swaths of our society that seek to push back progress and reinstitute outright discrimination. The efforts resisting diversity are active and vigorous. In lieu of the explicit and obvious discrimination we have plenty of implicit institutional discrimination and oppression. The most obvious societal form that implicitly targets minorities is the drug war and the plague of mass incarceration. It is a way of instituting “Jim Crow” laws within a modern society. Any examination of the prisons in the United States shows massive racial inequity. Police enforce laws in a selective fashion, letting whites go while imprisoning blacks and Hispanics for the same offenses. Prison sentences are also harsher toward minorities. Other minority groups have been targeted and selected for similar treatment. In these cases, everyone is claimed to be under the same legal system, but the execution of the law lacks uniformity. At work we have policies and practices, often revolving around hiring, that have the same effect. They work to implicitly exclude people and undermine the actual impact of diversity.

Equality before the law is probably forever unattainable. It is a noble ideal, but it can never be realized, for what men value in this world is not rights but privileges.

― H.L. Mencken

Other practices hurt women in an implicit manner. These practices are relabeled as “traditional values” and are reflected through health care and sexual harassment. The implicit message is that women should be barefoot and pregnant, second-class citizens whose role in society is child rearing and home making. Young women are also there to provide sexual outlets to men, although those that do are sluts. They are not worthy of enjoying the same sexual pleasure that men are free to pursue. The whole of the behavior is focused on propping up men as the center of society, work and power. None of women’s needs or contributions in the realm where men traditionally rule is welcome. Our health care coverage and laws completely reflect this polarity, where women’s needs are controversial and men’s needs are standard. Nothing says this more than the attitudes toward reproductive and sexual health. Men are cared for; women are denied. The United States is a nexus of backwards thought, with labor laws that penalize women in the name of the almighty dollar, using religious freedom as an excuse.

If we cannot end now our differences, at least we can help make the world safe for diversity.

― John F. Kennedy

In the past decades we have seen huge strides for the LGBTQ community. The greatest victory is marriage equality. Discrimination is still something many people in society would love to practice. At an implicit level they do. Everywhere that discrimination can be gotten away with, it happens. Many people remain in the closet and do not feel free to let people know who they really are. Of course, this is a bigger issue for transgender people, for whom hiding is almost impossible. At the same time the level of discrimination is still largely approved by society. Most of the discrimination is the result of people’s innate discomfort with LGBTQ sexuality and with opening themselves to even considering being somewhere on any non-standard spectrum of sexuality. It becomes an issue and a thought they would just as soon submerge. This discomfort extends to other forms of non-standard expressions of sexuality that invariably leak out into people’s everyday lives. This sort of discomfort is greater in the United States, where sexuality is heavily suppressed and viewed as morally inferior. Our sexuality is an important part of our self-identity. For many people it is an aspect of self that must be hidden lest they suffer reprisals.

This is a global issue

Hate and oppression are rising worldwide. This hate is a reaction to many changes in society. Ethnic changes, migrations, displacement and demographics are undermining traditional majorities. Global economics and broad information technology/telecommunication are also stressing traditional structures at every turn. Disparate people and communities can now form through the power of this medium. At the same time disinformation, propaganda and wholesale manipulation are empowering the masses toward both good and evil. Some of these online communities are wonderful, such as LGBTQ people who can form greater bonds and reach out to similar people. The same medium also allows hate to flourish and bond in the same way. The technology isn’t bad or good, and its impacts are similarly divided. It is immensely destabilizing. Traditional culture and society are also rising up to push back. The change makes people uncomfortable. Exclusion and casting the different out is a way for them to push back. They respond with a defense of traditional values and judgements grounded in exclusion of those who don’t fit in. As with most bigotry and exclusion, it is fear based. Fear is something promoted across the globe by the enemies of inclusion and progress. The same fear is being harnessed by the traditional powers to solidify their hold over their hegemony. This fear is particularly acute with the older part of the population, who also tend to be more politically active. These two things form the most active implicit threat to achieving diversity.

In many cases this whole issue is framed in terms of religion. Many traditional religious views are really excuses and justification for exclusion and bigotry. The aspects of the religious traditions focused on love, compassion and inclusion are diminished or ignored. This sort of perversion of religious views is a common practice of authoritarian regimes, which harness the fear-based aspects of faith to enhance their power and sway over the masses. This is true for Christians, Jews, Muslims, Hindus, … virtually every major faith. It is manifesting itself in the movement to authoritarian rule in the United States. The forces of hate are cloaked in faith to immunize themselves from critical views. Hatred, discrimination and bigotry are justified in their faith and freed from much critical feedback. They also complain about being repressed by society even when they are the majority and ruling social order. This is a common response when the forces of inclusion complain about their institutionalized bigotry. In the United States the minority that is truly oppressed is atheists. An atheist can’t generally get elected to office and in many places needs to hide this identity lest they be subject to persecution. It is among the personal identities that I need to hide. At the same time Christians can be utterly open and brazen in self-expression of their faith.

In virtually every case the forces against inclusion are fear-driven. Many people are not comfortable with people who are different because of how it reflects on them. For example, many of the greatest homophobes actually harbor homosexual feelings of their own they are trying to squash. Openly accepted homosexuality is something that they resist because it seems to implicitly encourage them to act on their own feelings. In response to these fears they engage in bigotry. Generally, gender and sexual identities will bring these attitudes out because of the discomfort with possibilities the identities offer people. These sorts of dynamics are present with religious minorities too. Rather than question their faith, gender or sexuality, the different people are driven into their respective closets and out of view.

Getting at Subtle Exclusion

‘Controversial’ as we all know, is often a euphemism for ‘interesting and intelligent’.

― Kevin Smith

I’m writing this essay on diversity as a white middle-aged male who is seemingly a member of the ruling class, so what gives? I represent a different diversity in many respects than the obvious forms we focus on. Discrimination against this kind of diversity happens without consequence. I’m outgoing and extroverted in a career and work environment dominated by introverts who are uncomfortable with emotion and human contact. I’m opinionated, outspoken and brave enough to be controversial in a sea of committed conformists. Both of these traits are punished with advice to blunt my natural tendencies. You are expected to toe the line and conform. The powers that be are not welcoming to any challenge or debate; the mantra of the day is “sit down, shut up, and do as you’re told” and “you’re lucky to be here”. The message is clear: you aren’t here as an equal, you need to be a compliant cog who plays your role. Individual contributions, opinions and talent are not important or welcome unless they fit neatly into the master’s plan. Increasingly in corporate America, you are either part of the ruling class or simply a disempowered serf whose personal value is completely dismissed. In this sense any diversity is discouraged and squashed by our overlords.

If one trait defines the vast number of people today, it is disempowerment. Your personal value and potential are meaningless to our management. Do your job; do what you are told to do; comply with their requirements. Whatever you do, don’t make waves or question the powers. I’ve made waves, and the reaction is to punish people through marginalization and lack of access. Retribution is implicit and subtle. In the end you don’t know how much you were punished through opportunity and information denial. The key is that the powers that be are in control of you, and if you don’t play ball with them, you are an outsider. A way of not getting into trouble is being a compliant, boring and utterly vanilla worker bee. If you toe the party line you simply get the benefit of employment “success”. Eventually, if you play your cards right, you can join the power structure as one of them. In this way diversity of any sort is punished. They see diversity as a threat and work to drive it out.

You could jump so much higher when you had somewhere safe to fall.

― Liane Moriarty

Given that relatively benign diversity is met with implicit resistance, one might reasonably ask how edgier forms of diversity will be welcomed. Granted, one’s personality can have a distinct work-related impact, and behavior certainly needs to be work appropriate, but what are the bounds on how that is managed? My experience is that the workplace goes too far in moderating people’s innate tendencies. My particular workplace has promoted the aspirational view of diversity as bringing your true and best self to work. In all honesty, it is an aspiration that they fail at to a spectacular degree. When relatively common and universal aspects of our humanity are not accepted, how would more controversial aspects be accepted? A fair conclusion is that they wouldn’t be accepted at all. Added to the mix is a broader governance that is getting less accepting of differences rather than more. As a result, people whose lives are outside the conservatively defined norms are prone to hide major aspects of their identity. The accepted majority and accepted identity is white, male, Christian, heterosexual, and monogamous. We still accept political differences, but to varying degrees we expect people to fall into only a few narrow bins.

It is time for parents to teach young people early on that in diversity there is beauty and there is strength.

― Maya Angelou

What happens when someone falls outside these identities? If one is female and/or non-white, there are protections, and discrimination and bias are purely implicit. What about being an atheist? What about being gay, or transsexual? The legal protections begin to be weaker, and major societal elements seek to remove these protections. What if you’re non-monogamous, or committed to some other divergent sexual identity? What if you’re a communist or fascist? Then you are likely to be actively persecuted and discriminated against. Where is the line where this should happen? What life choices are so completely outside the norm of societal acceptance that they should be subject to effective banishment? If you have made one of these life choices, your choice is often hidden and secret. You are in the closet. This is a very real issue, especially when large parts of society want you in the closet or want to put you back there. Moreover, this part of society is emboldened to try to put gays, women and people of color back in their traditional closets, kitchens and ghettos.

We are in a time where progress in expanding diversity and acceptance has stopped, and elements of society are moving backwards. We are making America Great Again by enhancing the power of whites, hiding people of color, closeting gays, and making women subservient. All of this is done in service of those in power, to maintain their power, wealth and control. Any sort of commitment to diversity is viewed as an assault on the power and wealth of the ruling class. As a result, we see a continued concentration of wealth and power in the hands of the few. General social mobility has been diminished so that people keep their place in society. For the rank and file, we see disempowerment and meager rewards for slavish conformity. Step out of line and draw attention to yourself, and you can expect punishment and shunning. The slavishly conformant masses provide the peer pressure and bulk of the enforcement, but all of it serves those in power.

Instead of creating a society and system that gets the best out of people and maximizes human potential, we have pure maintenance of power by the ruling class. We let people act out through their basest fears to squash people and ideas that are uncomfortable. We are not leading people to be better; we are encouraging them to be worse. Rather than act out of love, we are emboldening hate. Rather than accept people and allow them to flourish using their distinct individuality and achieve satisfying lives, we choose conformity and order. The conformity and order that is imposed serves only those in power and limits any aspirational dreams of the masses. The masses are controlled by the fear that naturally arises toward those who are different. Humans are naturally fearful of other humans who are different. Rather than encourage people to accept these differences (i.e., accept and promote diversity), we encourage them to discriminate by promoting fear of the other. This fear is the tool of the rich and powerful to create systems that maintain their grip on society.

We are in need of leadership that blazes a trail to being courageous and better. Without leadership and bravery, the progress we have achieved will turn back and be lost. There is so much more to do to get the best out of people, which cannot happen when our leaders allow and even encourage the worst in people to serve their own selfish needs. We have a choice and it is past time to choose excellence and progress through inclusion and diversity.

Here’s to the crazy ones. The misfits. The rebels. The troublemakers. The round pegs in the square holes. The ones who see things differently. They’re not fond of rules. And they have no respect for the status quo. You can quote them, disagree with them, glorify or vilify them. About the only thing you can’t do is ignore them. Because they change things. They push the human race forward. And while some may see them as the crazy ones, we see genius. Because the people who are crazy enough to think they can change the world, are the ones who do.

― Rob Siltanen

…provide a systematic explanation for what we see. More often than not this explanation has a mathematical character as the mechanism we use. Among our mathematical devices, differential equations are some of our most powerful tools. In the most basic form these equations are rate of change laws for some observable in the world. Most crudely, these rate equations can be empirical vehicles for taking observations into a form useful for prediction, design and optimization. A more basic form is partial differential equations (PDEs) that describe the basic physics in a more expansive form. It is important to consider the consequences of the model forms we use. Several important categories of models are intrinsically unphysical in some aspects, thus highlighting the George Box aphorism that “essentially, all models are wrong”!

…terms. This is a philosophical violation of the second law of thermodynamics, which can be used to establish the arrow of time. In this sense we find that elliptic equations are an asymptotic simplification of more fundamental laws. Another implication of ellipticity of PDEs is infinite speed of information, or more correctly an absence of time. If elliptic equations are found within a set of equations, we can be absolutely sure that some physics has been chosen to be ignored. In many cases the ignored physics is not important and some benefit is achieved through the simplification. On the other hand, we shouldn’t lose sight of what has been done and its potential for mischief. At some point this mischief will become relevant and disqualifying.

With elliptic equations the strength of the signal is unabated in time, but with parabolic equations, the signal diminishes in time. As such the sin of causality violation isn’t quite so profound, but it is a sin nonetheless. As before we get parabolic equations by ignoring physics. Usually this is a valid thing to do based on the time and length scales of interest. We need to remember that at some point this ignorance will damage the ability to model. We are making simplifications that are not always justified. This point is lost quite often. People are allowed to think the elliptic or parabolic equations are fundamental when they are not.
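The causality point can be checked with a few lines of arithmetic. The sketch below assumes the textbook one-dimensional heat equation u_t = kappa * u_xx with a unit point source at the origin; the diffusivity `kappa`, the function name, and the sample values are illustrative and not from the discussion above. Its exact solution is nonzero at any distance for any positive time, however short, which is the infinite propagation speed in question. The signal is simply exponentially small.

```python
import math

def heat_kernel(x, t, kappa=1.0):
    """Exact solution of u_t = kappa * u_xx for a unit point source at x = 0, t = 0."""
    return math.exp(-x * x / (4.0 * kappa * t)) / math.sqrt(4.0 * math.pi * kappa * t)

# Assumed illustrative values: unit diffusivity, a short time, and a point far from the source.
near = heat_kernel(0.0, 0.1)
far = heat_kernel(10.0, 0.1)

print(f"u(0, 0.1)  = {near:.3e}")
print(f"u(10, 0.1) = {far:.3e}")  # tiny but strictly positive: the signal has already arrived
```

A hyperbolic model with wave speed c would instead give exactly zero at any point farther than c * t from the source.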

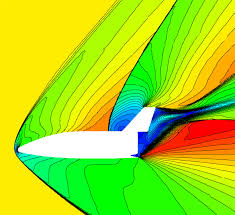

The incompressible Navier-Stokes equations are a second prime example where hyperbolic equations are replaced by a mixed parabolic-elliptic system. A starting point would be the compressible equations, which are purely hyperbolic without viscosity. Of course, the viscosity could be replaced with hyperbolic equations to make the compressible flow totally hyperbolic. These equations are the following,
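One standard way to write the inviscid compressible system is the Euler equations, given here in notation that is assumed rather than recovered from the original post: density rho, velocity u, pressure p, total energy per unit mass E, and kinematic viscosity nu. The incompressible Navier-Stokes equations are shown alongside for contrast, with constant density.

```latex
% Compressible Euler equations (hyperbolic): mass, momentum, energy
\begin{align}
\frac{\partial \rho}{\partial t} + \nabla \cdot (\rho \mathbf{u}) &= 0, \\
\frac{\partial (\rho \mathbf{u})}{\partial t} + \nabla \cdot (\rho \mathbf{u} \otimes \mathbf{u}) + \nabla p &= 0, \\
\frac{\partial (\rho E)}{\partial t} + \nabla \cdot \left[ (\rho E + p) \mathbf{u} \right] &= 0.
\end{align}

% Incompressible Navier-Stokes equations (elliptic constraint, parabolic momentum equation)
\begin{align}
\nabla \cdot \mathbf{u} &= 0, \\
\frac{\partial \mathbf{u}}{\partial t} + \mathbf{u} \cdot \nabla \mathbf{u} &= -\frac{1}{\rho} \nabla p + \nu \nabla^2 \mathbf{u}.
\end{align}

% Divergence of the incompressible momentum equation: an elliptic (Poisson) equation for pressure
\begin{equation}
\nabla^2 p = -\rho \, \nabla \cdot (\mathbf{u} \cdot \nabla \mathbf{u}).
\end{equation}
```

The pressure Poisson equation is where the ellipticity enters: the pressure responds everywhere at once, with no time derivative and no finite sound speed.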

For modeling and numerical work, the selection of the less physical parabolic and elliptic equations provides better conditioning. The conditioning provides a better numerical and analytical basis for the solutions. That the equations are less physical is not commonly appreciated. A broader appreciation may provide impetus for identifying when these differences are significant. Holding models that are unphysical as sacrosanct is always dangerous. It is important to recognize the limitations of models and allow ourselves to question them regularly. Even models that are fully hyperbolic are wrong themselves; this is the very nature of models. By using hyperbolic models, we remove an obviously unphysical aspect of a given model. Models are abstractions of reality, not the operating system of the universe. We must never lose sight of this.

ms, the deterministic attitude runs aground. The natural World and engineered systems rarely behave in a completely deterministic manner. We see varying degrees of non-determinism and chance in how things work. Some of this is the action of humans in a system; some of it is complex initial conditions or structure that deterministic models ignore. This variability, chance, and structure are typically not captured by our modeling, and as such modeling is limited in utility for understanding reality.

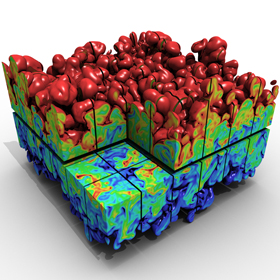

To move forward we should embrace some degree of randomness in the fundamental models we solve. This random response naturally arises from various sources. In our deterministic models, the random response is heavily incorporated in boundary and initial conditions. The initial conditions include things like texture and structure that the standard models homogenize over. Boundary conditions are the means for the model to communicate with the broader world, whose vast complexities are grossly simplified. In reality both the initial and boundary conditions are far more complex than those our models currently use.

Another aspect of the complexity is the dynamics associated with the stochastic phenomena, which current modeling either ignores or lumps whole cloth into the model’s closure. In a real system the stochastic aspects of the model evolve over time, including nonlinear interactions between deterministic and stochastic aspects. When the dynamics are completely confined to deterministic models, these nonlinearities are ignored or lumped into the deterministic mean field. When models lack the proper connection to the correct dynamics, the modeling capability is diminished. The result is greater uncertainty and less explanation of what is happening in nature. From an engineering point of view, the problem is that the ability to explicitly control for the non-deterministic aspect of systems is diminished because its influence on results isn’t directly exposed. If the actual dynamics were exposed, we could work proactively to design better. This is the power of understanding in science; if we understand we can attempt to mitigate and control the phenomena. Without proper modeling we are effectively flying blind.
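A toy calculation shows why folding variability into a mean field loses information. The sketch assumes a hypothetical nonlinear decay law du/dt = -u², chosen only because it has the closed-form solution u(t) = u0 / (1 + u0 t); the uniform spread of initial conditions and all names are illustrative assumptions. Evolving the mean initial condition does not reproduce the mean of the evolved ensemble, and the gap is the kind of nonlinear interaction that a deterministic closure has to absorb.

```python
import random
import statistics

def evolve(u0, t):
    """Exact solution of du/dt = -u**2 with u(0) = u0 > 0."""
    return u0 / (1.0 + u0 * t)

rng = random.Random(0)  # fixed seed so the sketch is repeatable
# Assumed initial-condition variability: uniform between 0.5 and 1.5 (mean 1.0).
initial_states = [rng.uniform(0.5, 1.5) for _ in range(10_000)]

t_final = 2.0
ensemble = [evolve(u0, t_final) for u0 in initial_states]

ensemble_mean = statistics.fmean(ensemble)                      # mean of the evolved states
mean_field = evolve(statistics.fmean(initial_states), t_final)  # evolution of the mean state
spread = statistics.stdev(ensemble)                             # variability the mean field hides

print(f"ensemble mean : {ensemble_mean:.4f}")
print(f"mean field    : {mean_field:.4f}")
print(f"ensemble std  : {spread:.4f}")
```

The mean-field value sits above the ensemble mean for this decay law, and it carries no information at all about the spread.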

The sort of science needed is enormously risky. I am proposing that we have reached the end of utility for models used for hundreds of years. This is a rather bold assertion on the face of it. On the other hand, the models we are using have a legacy going back to when only analytical solutions to models were used, or only very crude numerical tools. Now our modeling is dominated by numerical solutions, and computing from the desktop (or handheld) to supercomputers of unyielding size and complexity. Why should we expect models derived in the 18th and 19th centuries to still be used today? Shouldn’t our modeling advance as much as our solution methods have? Shouldn’t all the aspects of modeling and simulation be advancing? Are they? The answer is a dismal no.

uncertainty and error. Most good results using these models are heavily calibrated and lack any true predictive power. In the absence of experiments, we are generally lost and rarely hit the mark. Instead of seeing any of this as shortcomings in the models, we seek to continue using the same models and focus primarily on computing power as a remedy. This is both foolhardy and intellectually empty if not outright dishonest.

We would be far better off removing the word “predictive” as a focus for science. If we replaced the emphasis on prediction with a focus on explanation and understanding, our science would improve overnight. The sense that our science must predict carries connotations that are unrelentingly counter-productive to the conduct of science. The side-effects of this emphasis on predictivity undermine the scientific method at every turn. The goal of understanding nature and explaining what happens in the natural world is consistent with the conduct of high quality science. In many respects large swaths of the natural world are unpredictable in highly predictable ways. Our weather is a canonical example of this. Moreover, we find the weather to be unpredictable in a bounded manner as time scales become longer. Science that has focused on understanding and explanation has revealed these truths. Attempting prediction under some circumstances is both foolhardy and technically impossible. As such, prediction needs to be entered into carefully and thoughtfully under well-chosen circumstances. We also need the freedom to find out that we are wrong and incapable of prediction. Ultimately, we need to find the limits on prediction and work to improve or accept these limits.
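The phrase “unpredictable in highly predictable ways” can be illustrated with a toy system. The sketch below uses the logistic map with parameter r = 4 as an assumed stand-in for a chaotic system; it is not a weather model, and the starting values are arbitrary. Two trajectories that begin almost identically lose all resemblance after a few dozen steps, yet both stay inside the unit interval: detailed prediction fails while the bounds remain known.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x) and return every state visited."""
    states = [x0]
    for _ in range(steps):
        states.append(r * states[-1] * (1.0 - states[-1]))
    return states

# Assumed illustrative setup: two initial states differing by one part in 10^10.
a = logistic_trajectory(0.2, 100)
b = logistic_trajectory(0.2 + 1e-10, 100)

separation = [abs(x - y) for x, y in zip(a, b)]

print(f"separation after 5 steps   : {separation[5]:.2e}")
print(f"largest separation (0-100) : {max(separation):.2e}")
print(f"trajectory bounds          : [{min(a + b):.3f}, {max(a + b):.3f}]")
```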

chances of hitting funding. A slightly less cynical take would take predictive as the objective for science that is completely aspirational. In the context of our current world, we strive for predictive science as a means of confirming our mastery over a scientific subject. In this context the word predictive implies that we understand the science well enough to foresee outcomes. We should also practice some deep humility in what this means. Predictivity is always a limited statement, and these limitations should always be firmly in mind. First, predictions are limited to some subset of what can be measured and fail for other quantities. The question is whether the predictions are correct for what matters. Secondly, the understanding is always waiting to be disproved by a reality that is more complex than we realize. Good science is acutely aware of these limitations and actively probes the boundary of our understanding.

In the modern world we constantly have new tools to help expand our understanding of science. Among the most important of these new tools is modeling and simulation. Modeling and simulation is simply an extension of the classical scientific approach. Computers allow us to solve our models in science more generally than classical means. This has increased the importance and role of models in science. We can envision more complex models having more general solutions through computation. This power comes with substantial responsibility; computational simulations are highly technical and difficult. They come with a host of potential flaws, errors and uncertainties that cloud results and need focused assessment. Getting the science of computation correct and assessed, so that it can play a significant role in the scientific enterprise, requires a broad multidisciplinary approach with substantial rigor. Playing a broad integrating role in predictive science is verification and validation (V&V). In a nutshell V&V is the scientific method as applied to modeling and simulation. Its outcomes are essential for making any claims regarding how predictive your science is.

We can take a moment to articulate the scientific method and then restate it in a modern context using computational simulation. The scientific method involves making hypotheses about the universe and testing those hypotheses against observations of the natural world. One of the key ways to make observations is through experiments, where the measurements of reality are controlled and focused to elucidate nature more clearly. These hypotheses or theories usually produce models of reality, which take the form of mathematical statements. These models can be used to make predictions about what an observation will be, and agreement with the observation supports the hypothesis. If the observations are in conflict with the model’s predictions, the hypothesis and model need to be discarded or modified. Over time observations become more accurate, often showing the flaws in models. This usually means a model needs to be refined rather than thrown out. This process is the source of progress in science. In a sense it is a competition between what we observe and how well we observe it, and the quality of our models of reality. Predictions are the crucible where this tension can be realized.

Validation is then the structured comparison of the simulated model’s solution with observations. Validation is not something that is completed, but rather it is an assessment of work. At the end of the validation process evidence has been accumulated as to the state of the model. Is the model consistent with the observations? If so, the uncertainties in the modeling and simulation process, along with the uncertainties in the observations, can lead to the conclusion that the model is correct enough to be used. In many cases the model is found to be inadequate for the purpose and needs to be modified or changed completely. This process is simply the hypothesis testing so central to the conduct of science.
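As a minimal sketch of such a comparison, assume the simulation and the observation each come with a standard uncertainty and that the two are independent. The function name, the coverage factor `k = 2`, and the numbers are hypothetical placeholders; real validation metrics are usually richer than a single pass/fail test.

```python
import math

def consistent(simulated, u_simulated, observed, u_observed, k=2.0):
    """Return (is_consistent, discrepancy, combined_uncertainty).

    The model is called consistent with the observation when the discrepancy
    is no larger than k times the combined standard uncertainty, assuming the
    two uncertainties are independent and can be added in quadrature.
    """
    discrepancy = abs(simulated - observed)
    combined = math.sqrt(u_simulated ** 2 + u_observed ** 2)
    return discrepancy <= k * combined, discrepancy, combined

# Hypothetical numbers: the same discrepancy judged with two different uncertainty claims.
honest = consistent(simulated=10.4, u_simulated=0.3, observed=10.0, u_observed=0.2)
understated = consistent(simulated=10.4, u_simulated=0.05, observed=10.0, u_observed=0.05)

print(honest)
print(understated)
```

The verdict depends as much on the uncertainty estimates as on the discrepancy itself, which is why both sides of the comparison need them.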

Let’s take a well thought of and highly accepted model, the incompressible Navier-Stokes equations. This model is thought to largely contain the proper physics of fluid mechanics, most notably turbulence. Perhaps this is true, although our lack of progress in turbulence might indicate that something is amiss. I will state without doubt that the incompressible Navier-Stokes equations are wrong in some clear and unambiguous ways. The deepest problem with the model is incompressibility. Incompressible fluids do not exist, and the form of the mass equation showing divergence free velocity fields implies several deeply unphysical things. All materials in the universe are compressible and support sound waves, and this relation opposes this truth. Incompressible flow is largely divorced from thermodynamics, and materials are thermodynamic. The system of equations violates causality rather severely: sound waves travel at infinite speed. All of this is true, but at the same time this system of equations is undeniably useful. There are large categories of fluid physics that they explain quite remarkably. Nonetheless the equations are also obviously unphysical. Whether or not this unphysical character is consequential should be something people keep in mind.
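One way to keep the consequence in view is to estimate how much compressibility is being ignored. The sketch assumes steady isentropic flow of an ideal gas with ratio of specific heats gamma = 1.4 (roughly air); these assumptions and the function names are not from the text above. The relative density change between stagnation and flow conditions scales like M²/2 at small Mach number M, so the incompressible idealization degrades smoothly as the flow speed approaches the sound speed.

```python
def density_drop_isentropic(mach, gamma=1.4):
    """Relative density change (rho0 - rho) / rho0 for isentropic ideal-gas flow."""
    ratio = (1.0 + 0.5 * (gamma - 1.0) * mach ** 2) ** (-1.0 / (gamma - 1.0))  # rho / rho0
    return 1.0 - ratio

def density_drop_small_mach(mach):
    """Leading-order estimate, valid only for mach << 1."""
    return 0.5 * mach ** 2

for mach in (0.01, 0.1, 0.3, 0.8):
    exact = density_drop_isentropic(mach)
    approx = density_drop_small_mach(mach)
    print(f"M = {mach:4.2f}  density change = {exact:.4%}  (M^2/2 estimate: {approx:.4%})")
```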

In conducting predictive science one of the most important things you can do is make a prediction. While you might start with something where you expect the prediction to be correct (or correct enough), the real learning comes from making predictions that turn out to be wrong. It is wrong predictions that will teach you something. Sometimes the thing you learn is something about your measurement or experiment that needs to be refined. At other times the wrong prediction can be traced back to the model itself. This is your demand and opportunity to improve the model. Is the difference due to something fundamental in the model’s assumptions? Or is it simply something that can be fixed by adjusting the closure of the model? Too often we view failed predictions as problems when instead they are opportunities to improve the state of affairs. I might posit that if you succeed with a prediction, it is a call to improvement; either improve the measurement and experiment, or the model. Experiments should set out to show flaws in the models. If this is done the model needs to be improved. Successful predictions are simply not vehicles for improving scientific knowledge; they tell us we need to do better.

A healthy focus on predictive science with a taste for failure produces a strong driver for lubricating the scientific method and successfully integrating modeling and simulation as a valuable tool. Prediction requires two sides of science to work in concert: the experiment-observation of the natural world, and the modeling of the natural world via mathematical abstraction. The better the observations and experiments, the greater the challenge to models. Conversely, the better the model, the greater the challenge to observations. We need to tee up the tension between how we sense and perceive the natural world, and how we understand that world through modeling. It is important to examine where the ascendancy in science exists. Are the observations too good for the models? Or can no observation challenge the models? This tells us clearly where we should prioritize.

The important word I haven’t mentioned yet is “uncertainty”. We cannot have predictive science without dealing with uncertainty and its sources. In general, we systematically or perhaps even pathologically underestimate how uncertain our knowledge is. We like to believe our experiments and models are more certain than they actually are. This is really easy to do in practice. For many categories of experiments, we ignore sources of uncertainty and simply get away with an estimate of zero for that uncertainty. If we do a single experiment, we never have to explicitly confront that the experiment isn’t completely reproducible. On the modeling side we see the particular experiment as something to be modeled precisely even if the phenomena of interest are highly variable. This is common and a source of willful cognitive dissonance. Rather than confront this rather fundamental uncertainty, we willfully ignore it. We do not run replicate experiments and measure the variation in results. We do not subject the modeling to reasonable variations in the experimental conditions and check the variation in the results. We pretend that the experiment is completely well-posed, and the model is too. In doing this we fail at the scientific method rather profoundly.
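The difference between “zero uncertainty” and “unknown uncertainty” shows up even in a toy calculation. The sketch below invents a set of replicate measurements (the numbers are made up for illustration) and uses Python’s standard `statistics` module. A single experiment does not yield a variability estimate of zero; it yields no estimate at all.

```python
import statistics

# Hypothetical replicate measurements of the same nominal experiment (made-up values).
replicates = [9.8, 10.4, 10.1, 9.6, 10.6]

def replicate_uncertainty(measurements):
    """Sample standard deviation, or None when it cannot be estimated."""
    try:
        return statistics.stdev(measurements)
    except statistics.StatisticsError:
        # Fewer than two measurements: the variability is unknown, not zero.
        return None

single = replicate_uncertainty(replicates[:1])
several = replicate_uncertainty(replicates)

print(f"one experiment   : {single}")
print(f"five experiments : {several:.3f}")
```

The same check applies on the modeling side: rerunning the model over a plausible range of experimental conditions gives a spread that a single run cannot.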

In this light we can see that V&V is simply a structured way of collecting the evidence necessary for the scientific method. Collecting this evidence is difficult and requires assumptions to be challenged. Challenging assumptions is courting failure. Making progress requires failure and the invalidation of models. It requires doing experiments that we fail to be able to predict with existing models. We need to ensure that the model is the problem, and the failure isn’t due to numerical error. To determine these predictive failures requires a good understanding of uncertainty in both experiments and computational modeling. The more genuinely high quality the experimental work is, the more genuinely the validation tests the model. We can collect evidence about the correctness of the model and set clear standards for judging improvements in the models. The same goes for the uncertainty in computations, which needs evidence so that progress can be measured.
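Separating numerical error from model error starts with verification. As a minimal sketch, the code below estimates an observed order of convergence from three systematically refined grids. The test problem (trapezoidal integration of sin on [0, pi]) is an assumed stand-in for a real simulation code, and the function names are illustrative. If the observed order matches the method’s theoretical order, the numerical error is behaving as expected and can be estimated rather than guessed.

```python
import math

def trapezoid(f, a, b, n):
    """Composite trapezoidal rule with n equal intervals."""
    h = (b - a) / n
    interior = sum(f(a + i * h) for i in range(1, n))
    return h * (0.5 * f(a) + interior + 0.5 * f(b))

def observed_order(coarse, medium, fine, refinement_ratio):
    """Observed convergence order from three solutions on successively refined grids."""
    return math.log(abs(coarse - medium) / abs(medium - fine)) / math.log(refinement_ratio)

# Three grids, each refined by a factor of two.
coarse, medium, fine = (trapezoid(math.sin, 0.0, math.pi, n) for n in (16, 32, 64))

order = observed_order(coarse, medium, fine, refinement_ratio=2.0)
# Richardson-style estimate of the error remaining in the fine-grid answer.
error_estimate = abs(medium - fine) / (2.0 ** order - 1.0)

print(f"observed order      : {order:.3f}")  # the trapezoidal rule is formally second order
print(f"estimated error     : {error_estimate:.2e}")
print(f"actual error (known): {abs(fine - 2.0):.2e}")
```

Here the exact answer (2) is known, so the estimate can be checked directly; in a real simulation only the estimate is available, which is why the convergence evidence matters.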

In this light we can see that V&V is simply a structured way of collecting the evidence necessary for the scientific method. Collecting this evidence is difficult and requires assumptions to be challenged. Challenging assumptions is courting failure. Making progress requires failure and the invalidation of models. It requires doing experiments that we fail to predict with existing models. We need to ensure that the model is the problem, and that the failure isn’t due to numerical error. Determining these predictive failures requires a good understanding of uncertainty in both experiments and computational modeling.
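
To make the point about separating model failure from numerical error a little more concrete, here is a minimal sketch of the kind of check I have in mind. Everything in it is an assumption for illustration: the function name `validation_check`, the idea that the numerical and experimental uncertainties are independent and combine in quadrature, and the coverage factor `k = 2`. It is not a prescribed V&V procedure, only one plausible way to ask whether a disagreement is large enough to blame the model.

```python
import math


def validation_check(model_value, experiment_mean, u_numerical, u_experiment, k=2.0):
    """Compare the model-experiment discrepancy to the combined uncertainty.

    Hypothetical helper for illustration only.
    Returns (discrepancy, combined_uncertainty, model_implicated).
    """
    discrepancy = abs(model_value - experiment_mean)
    # Assumption: the two uncertainties are independent, so add them in quadrature.
    combined = math.sqrt(u_numerical ** 2 + u_experiment ** 2)
    # Only blame the model when the disagreement clearly exceeds what
    # numerical error and experimental scatter could explain on their own.
    model_implicated = discrepancy > k * combined
    return discrepancy, combined, model_implicated


# Small numerical error: the disagreement points at the model.
print(validation_check(10.0, 11.0, u_numerical=0.1, u_experiment=0.2))

# Large numerical error: the same disagreement proves nothing about the model.
print(validation_check(10.0, 11.0, u_numerical=1.0, u_experiment=0.2))
```

The second call is the uncomfortable case: without an honest estimate of the numerical uncertainty, the same discrepancy could be read as either a model failure or a success.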

In the process we create conditions where the larger goal of prediction is undermined at every turn. Rather than define success in terms of real progress, we produce artificial measures of success. A key to improving this state of affairs is an honest assessment of all of our uncertainties, both experimental and computational. There are genuine challenges to this honesty. Generally, the more work we do, the more uncertainty we unveil. This is true of experiments and computations. Think about examining replicate uncertainty in complex experiments. In most cases the experiment is done exactly once, and the prospect of reproducing the experiment is completely avoided. As soon as replicate experiments are conducted the uncertainty becomes larger. Before the replicates, this uncertainty was simply taken to be zero, and no one challenged that assertion. Unless we go back and adjust our view of the past state based on current knowledge, we run the very real risk of looking like we are moving backwards. The answer is not to continue this willful ignorance but to offer a mea culpa and admit our former shortcomings. These mea culpas are similarly avoided, backing the forces of progress into an ever-tighter corner.
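
The replicate-experiment point can be illustrated with a small sketch. The function name `replicate_uncertainty` and the measurement values are made up for illustration. The only claim is the statistical one: a single measurement gives no estimate of the replicate spread, which is not the same thing as a spread of zero.

```python
import statistics


def replicate_uncertainty(measurements):
    """Return (mean, sample standard deviation) for a set of replicate measurements.

    Hypothetical helper for illustration only. With a single measurement the
    spread is reported as None (unknown), not as zero.
    """
    mean = statistics.mean(measurements)
    if len(measurements) < 2:
        return mean, None  # one experiment: the replicate uncertainty is unknown
    return mean, statistics.stdev(measurements)


# The experiment done exactly once: we have a number but no idea of its spread.
print(replicate_uncertainty([4.2]))

# After replicates (made-up values): the uncertainty "appears", though it was always there.
print(replicate_uncertainty([4.2, 4.6, 3.9]))
```

Reporting `None` instead of `0.0` for the single experiment is the design choice that matters here: it records "I don't know" explicitly, so that adding replicates later reads as new knowledge and not as a step backwards.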

The core of the issue is relentlessly psychological. People are uncomfortable with uncertainty and want to believe things are certain. They are uncomfortable about random events, and a sense of determinism is comforting. As such, modeling reflects these desires and beliefs. Experiments are similarly biased toward these beliefs. When we allow these beliefs to go unchallenged, the entire basis of scientific progress becomes unhinged. Confronting and challenging these comforting implicit assumptions may be the single most difficult task for predictive science. We are governed by assumptions that limit our actual capacity to predict nature. Admitting flaws in these assumptions and measuring how much we don’t know is essential for creating the environment necessary for progress. The fear of saying “I don’t know” is our biggest challenge. In many respects we are managed to never give that response. We need to admit what we don’t know and challenge ourselves to seek those answers.