tl;dr: Algorithms shape our world today. When a new algorithm is created, it can transform a computational landscape. These changes happen in enormous leaps that take us by surprise. The latest changes in artificial intelligence are the result of such a breakthrough. It is unlikely to be followed by another breakthrough soon, which will slow the apparent pace of change. For this reason, the threats of doom and the promises of vast wealth are overblown. If we want more progress, it is essential to understand how such breakthroughs happen and what their limits are.

“The purpose of computing is insight, not numbers.”

– Richard Hamming

We live in the age of the algorithm. In the past ten years this has leapt to the front of mind with social media and the online world. It has actually been true ever since the computer took hold of society. This began in the 1940s with the first serious computers and numerical mathematics. New and improved algorithms drive the use of the computer forward as much as hardware does. What people do not realize is that the improvements that get noticed are practically quantum leaps. These are the algorithms that get our attention.

“I am worried that algorithms are getting too prominent in the world. It started out that computer scientists were worried nobody was listening to us. Now I’m worried that too many people are listening.”

– Donald Knuth

Now that the internet has become central to our lives, we need to understand this. One reason is to understand how algorithms create value for businesses and drive stock market valuations. Another is to see how these sorts of advances fool people about the pace of change. We should also know how these breakthroughs are made. How likely are we to see further progress? How can we create an environment where advances are possible? And how does the way we fund and manage work actually destroy the ability to keep making progress?

“You can harvest any data that you want, on anybody. You can infer any data that you like, and you can use it to manipulate them in any way that you choose. And you can roll out an algorithm that genuinely makes massive differences to people’s lives, both good and bad, without any checks and balances.”

– Hannah Fry

Two examples from recent years illustrate these points. The first is the Google search algorithm, PageRank. The second is the transformer, which elevated large language models to the forefront of the public’s mind in the last two years. Both algorithms clearly illustrate the pattern of algorithmic improvement: a quantum leap in performance and behavior followed by incremental changes. These incremental changes are basically fine-tuning and optimization. They are welcome, but they do not change the world. The key is recognizing the impact of the quantum leap from an algorithm and putting it into proper perspective.

Google is an archetype

Google transformed search and the internet and ushered algorithms into the public eye. Finding things online used to be torture, as early services tried to produce a “phone book” for the internet. I used AltaVista, but Yahoo was another example. Then Google appeared and we never went back. Once you used Google, the old indexes of the internet were done. It was like walking through a door. You shut the door and never looked back. This algorithm turned the company Google into a verb, a household name, and one of the most powerful forces on Earth. Behind it was an algorithm that blended graph theory and linear algebra into an engine of discovery. Today’s online world and its software are built on the foundation of Google.

“The Google algorithm was a significant development. I’ve had thank-you emails from people whose lives have been saved by information on a medical website or who have found the love of their life on a dating website.”

– Tim Berners-Lee

Google changed the internet, reinvented search, and demonstrated the power of information. All of a sudden information was unveiled and shown to be power. Google turned the internet into a transformative engine for business, and for society as well. The online world we know today owes its existence to Google. We also need to acknowledge that Google today is a shadow of the algorithm of the past. Google has become a predatory monopoly and the epitome of the “enshittification” of the internet, the process by which online services get worse over time. This is because Google pursues profits over performance. Instead of giving us the best results, it sells placement for money. This process is repeated across the internet, undermining the power of the algorithms that created it.

“In comparison, Google is brilliant because it uses an algorithm that ranks Web pages by the number of links to them, with those links themselves valued by the number of links to their page of origin.”
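
The link-based ranking described in the quote can be sketched as a power iteration: each page repeatedly passes its current score along its outgoing links until the scores settle. This is only a minimal illustration, not Google's production system. The function name `pagerank`, the damping factor of 0.85, and the four-page `toy_web` graph are assumptions made up for this example.

```python
def pagerank(links, damping=0.85, tol=1e-10, max_iter=200):
    """Power iteration on a dict mapping each page to the pages it links to."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform score
    for _ in range(max_iter):
        # Score held by pages with no outgoing links is spread over all pages.
        dangling = sum(rank[p] for p in pages if not links.get(p))
        new_rank = {p: (1.0 - damping) / n + damping * dangling / n for p in pages}
        for page, targets in links.items():
            if targets:
                # A page splits its score evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
        change = sum(abs(new_rank[p] - rank[p]) for p in pages)
        rank = new_rank
        if change < tol:
            break
    return rank


# Hypothetical four-page web, invented for illustration.
toy_web = {
    "a": ["b", "c"],
    "b": ["c"],
    "c": ["a"],
    "d": ["c"],
}

ranks = pagerank(toy_web)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(page, round(score, 3))
```

In this toy graph, page `c` collects links from three pages and ends up ranked first, while page `d`, which nothing links to, ends up last. A link from a highly ranked page counts for more than a link from an obscure one, which is the point of the quote.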

The Transformer and LLMs

The next glorious example of algorithmic power also comes from Google (Google Brain): the Transformer. Invented at Google in 2017, this algorithm has changed the world again. With a few tweaks and practical implementations, OpenAI unleashed ChatGPT. This was a large language model (LLM) trained on large swaths of the internet. The LLM could then produce results that were absolutely awe-inspiring, especially in comparison to what came before. Suddenly the LLM could produce almost human-like responses. Granted, this is true if that human was a corporate dolt. Try to get ChatGPT to talk like a real fucking person! (just proved a person wrote this!)

“An algorithm must be seen to be believed.”

– Donald Knuth

These results were great even after OpenAI lobotomized ChatGPT with reinforcement learning that kept it from being politically incorrect. The LLMs won’t curse or say racist or sexist stuff either. In the process the LLM becomes as lame as a conversation with your dullest coworker. The unrestrained ChatGPT was almost human in creativity, but also prone to sexist, racist and hateful speech (like people). It is amazing to consider how much creativity was sacrificed to make it corporately acceptable. It is worth thinking about this and how it reflects on people. Does the wonder of creativity depend upon accepting our flaws?

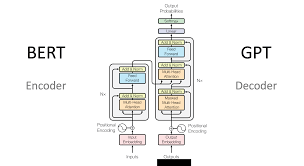

Under the covers, at the core of the foundation models behind ChatGPT, is the transformer. The transformer has a couple of key elements. One is the ability to devour data in huge chunks, a perfect fit for modern GPU chips. This has allowed far more data to be used and transformed NVIDIA into a multi-trillion dollar company almost overnight. This efficiency is only one of the two bits of magic. The real magic is the attention mechanism. This is how the LLM weighs the different parts of its instructions when producing its results. The transformer allows longer, more complex instructions to be given. It also allows multiple instructions to guide its output. The attention mechanism has led to fundamentally different behavior from LLMs. Together these elements demonstrate the power of algorithms.
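
To make the attention mechanism a little more concrete, here is a minimal sketch of scaled dot-product attention, the core operation of the transformer paper listed in the references. It is a toy in plain Python, assuming tiny hand-written vectors and no learned projection matrices, multiple heads, or masking, all of which a real model has. The names `softmax`, `attention`, and `tokens` are illustrative choices, not anyone's actual implementation.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to one."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this query matches each key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # The output is a weighted blend of all the value vectors.
        blended = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(blended)
    return outputs


# Three hypothetical "token" vectors, invented for illustration.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: every token looks at every token, itself included.
mixed = attention(tokens, tokens, tokens)
for row in mixed:
    print([round(x, 3) for x in row])
```

Every output row is a blend of all the input rows, with the blend set by how well the tokens match one another. Because all of the scores can be computed at once rather than one token at a time, the operation maps well onto GPUs, which ties back to the efficiency point above.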

“Science is what we understand well enough to explain to a computer. Art is everything else we do.”

– Donald Knuth

The real key to LLMs is NOT the computing available. A lot of capable computing helps and makes it easier. The real key to the huge leap in performance is the attention mechanism that changed the algorithm. This produced the qualitative change in how LLMs functioned. This produced the sort of results that made the difference. It was not the computers; it was the algorithms!

The world collectively lost their shit when ChatGPT went live. People everywhere freaked the fuck out! As noted above the impact could have easily been more profound without the restraint offered by reinforcement learning. Nonetheless feelings were unleashed that felt like we were on the cusp of exponential change. We are not. The reason why we are not is something key about the change. The real difference with these new LLMs was all predicated on the transformer algorithm’s character. Unless the breakthroughs of the transformer are repeated with new ideas, the exponential growth will not happen. Another change will happen, but it is not likely for a number of years from now.

A look at the history of computational science reveals that such changes happen more slowly. One cannot count on these algorithmic breakthroughs. They happen episodically, with sudden leaps followed by fallow periods of slow growth. The fallow periods are spent on optimization of the breakthrough and incremental change. As 2024 plays out, I have become convinced that LLMs are like this. There will be no exponential growth into the general AI that people fear. The transformer was the breakthrough, and without another breakthrough we are on a plateau of performance. Nonetheless, like Google, ChatGPT was a world-changing algorithm. Until a new algorithm is discovered, we will be on a slow path to change.

“So my favorite online dating website is OkCupid, not least because it was started by a group of mathematicians.”

– Hannah Fry

Computational Science and Quantum Leaps from Algorithms

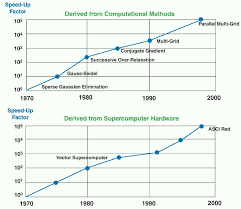

To examine this sort of algorithmic growth in performance, we can look at examples from classical computational science. Linear algebra is an archetype of this sort of growth. Over the span of years from 1947 to 1985, the gains from algorithms matched the performance gains from hardware. This meant that Moore’s law for hardware was amplified by better algorithms. Moore’s law is itself the result of multiple technologies working together to create exponential growth.

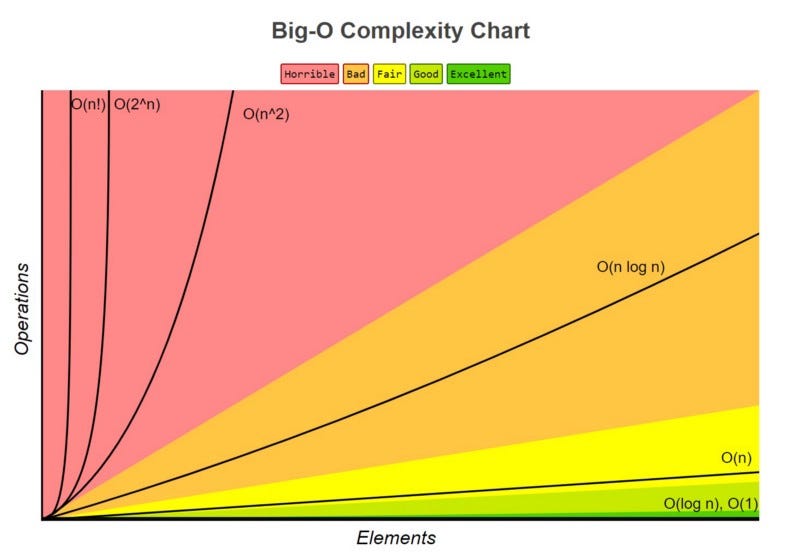

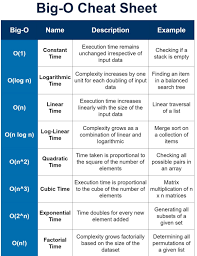

In the 1940s, linear algebra relied on dense matrix algorithms whose cost scaled cubically with problem size. As it turned out, most computational science applications produce sparse, structured matrices. These could be solved more efficiently, with quadratic scaling. This was a huge difference. For a system with 1000 equations, it is the difference between a million and a billion in terms of the work done and storage taken on the computer. Further advances came with Krylov algorithms and ultimately multigrid, where the scaling is linear (1000 in the above example). These are all huge speedups and advances. A key point is that the changes above occurred over the span of 40 years.
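
The arithmetic behind those numbers is easy to check. The sketch below tabulates the three scalings named above for a few problem sizes. It counts only the leading power of the problem size and ignores constant factors, which matter in practice, so treat the outputs as orders of magnitude. The function name `work_estimate` and the sizes in the loop are illustrative assumptions.

```python
def work_estimate(n):
    """Leading-order operation counts for a system of n equations."""
    return {
        "dense, cubic": n**3,
        "sparse, quadratic": n**2,
        "multigrid, linear": n,
    }


for n in (100, 1_000, 10_000):
    costs = work_estimate(n)
    # Ratio of the oldest scaling to the newest one at this problem size.
    speedup = costs["dense, cubic"] // costs["multigrid, linear"]
    print(f"n = {n:>6}: {costs}  cubic/linear ratio = {speedup:,}")
```

The ratio between the cubic and linear algorithms grows as the square of the problem size, so the advantage of the better algorithm keeps widening as problems get bigger. That is why an algorithmic leap can rival decades of hardware improvement.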

These changes are quantum in nature: the performance of the new algorithm leaps by orders of magnitude. The new algorithm allows new problems to be solved and is efficient in ways the former algorithm could not be. This is exactly what happened with the transformer. In between these advances the new algorithm is optimized and gets better, but its fundamental performance does not change. Nothing amazing happens until something is introduced that acts fundamentally differently. This is why there is a giant AI bubble. Unless another algorithmic advance is made, the LLM world will not change dramatically. The power of AI and the fears around it are overblown. People do not understand that this moment is largely algorithmically driven.

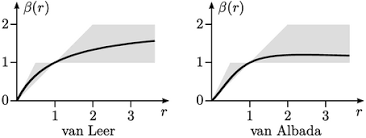

These sorts of leaps in performance are not limited to linear algebra. In optimization, a 1988 study showed a 43,000,000-fold improvement in performance over a 15-year period. Of this improvement, a factor of 1000 was due to computer improvements, but a factor of 43,000 was due to better algorithms. Another example is the profound change in hydrodynamic algorithms based on transport methods. The introduction of “limiters” in the early 1970s allowed second-order methods to be used for the most difficult problems. Before the limiters, second-order methods produced oscillations that led to unphysical results. The difference was transformative. I have recently shown that the leap in performance is about a factor of 50 in three dimensions. Moreover, the results also agree with the basic physical laws in ways the first-order methods cannot reproduce.
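
The optimization figures combine multiplicatively, and it can help to see them as yearly rates. The sketch below uses only the numbers quoted above. The conversion to a per-year factor assumes smooth compounding over the 15 years, which is a simplification of mine and contradicts the argument here that algorithmic gains arrive in jumps, so it is an average and nothing more. The name `annual_factor` is illustrative.

```python
hardware_gain = 1_000     # factor attributed to faster computers
algorithm_gain = 43_000   # factor attributed to better algorithms
years = 15

# The two gains multiply to give the overall improvement.
total_gain = hardware_gain * algorithm_gain


def annual_factor(gain, years):
    """Average per-year factor that compounds to `gain` over `years` years."""
    return gain ** (1.0 / years)


print(f"total gain: {total_gain:,}")
print(f"hardware per year:  {annual_factor(hardware_gain, years):.2f}x")
print(f"algorithm per year: {annual_factor(algorithm_gain, years):.2f}x")
```

Under that smoothing assumption, hardware works out to roughly 1.6 times per year and algorithms to roughly 2 times per year. Averaged over the period, the algorithms outpaced the machines.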

How do algorithms leap ahead?

“This is the real secret of life — to be completely engaged with what you are doing in the here and now. And instead of calling it work, realize it is play.” ― Alan Watts

Where do these algorithmic breakthroughs come from? Some come out of pure inspiration, where someone sees an entirely different way to solve a problem. Others come through the long slog of seeking efficiency, where deep analysis yields observations that are profound and lead to better approaches. Many are pure inspiration that comes from giving people room to operate in a playful space. This playful space is largely absent in the modern business or government world. To play is to fail and to fail is to learn. Today we have everything planned, and everyone should know that breakthroughs cannot be planned. We cannot play; we cannot fail; we cannot learn; breakthroughs are impossible.

“Our brains are built to benefit from play no matter what our age.”

– Theresa A. Kestly

The obstacles to algorithmic advancement are everywhere in today’s environment. A lack of fundamental trust leads to constrained planning and a lack of risk taking. Worse yet, failure, the essential engine of learning and discovery, is not allowed. This sort of attitude is pervasive in the government and corporate system. Basic and applied research both lack funding, and the funding that exists is not free to go after problems.

In the corporate environment, breakthroughs often do not benefit the company where they are discovered. The transformer was discovered by Google (Google Brain), but the LLM breakthrough was made by OpenAI. Its greatest beneficiary is Google’s rival Microsoft. A more natural way to harness the power of innovation is government funding. There, laboratories and universities can produce work that is in the public domain. At the same time, the public domain is harmed by various information-hiding policies and a lack of transparency. We are not organized for success at these things as a society. We have destroyed most of the engines of innovation. Until these engines are restarted, we will live in a fallow time.

“There is no innovation and creativity without failure. Period.”

― Brene Brown

I see this clearly at work. There we argue about whether to keep using 30-, 40- and 50-year-old algorithms rather than invest in the state of the art. People then convince themselves that the old algorithms are good because their customers like the code. The code is considered modern because it is written in C++ instead of Fortran. The results feel good simply because they use the most modern computing hardware. Our “leadership” does not realize that this approach yields a substandard return on investment. If the algorithms were advancing, the results would be vastly improved. Yet there is little or no appetite to develop new algorithms or to invest in the research to find them. This sort of research is considered too failure-prone to fund.

“Good scientists will fight the system rather than learn to work with the system.”

– Richard Hamming

Page, Lawrence. The PageRank citation ranking: Bringing order to the web. Technical Report, 1999.

Vaswani, A. “Attention is all you need.” Advances in Neural Information Processing Systems (2017).

Margolin, Len G., and William J. Rider. “A rationale for implicit turbulence modelling.” International Journal for Numerical Methods in Fluids 39, no. 9 (2002): 821-841.

Boris, Jay P., and David L. Book. “Flux-corrected transport. I. SHASTA, a fluid transport algorithm that works.” Journal of computational physics 11, no. 1 (1973): 38-69.