tl;dr

A faster computer is always a good thing, but it is not the best way to get faster or better results. A better program (or method or algorithm) is as important, if not more so. A new algorithm can be transformational and create new value. The faster computer also depends on a correct program, which isn’t a foregone conclusion. Demonstrating that things are done right is also difficult. Technically, this is called verification. Here, we get at the challenges of doing verification across the computing landscape. These challenges are especially acute as machine learning (AI) grows in importance, because verification is not possible there today.

“The man of science has learned to believe in justification, not by faith, but by verification.” ― Thomas H. Huxley

The Basic Premise of Better Computing

All of us have experienced the joy of a faster computer. We buy a new laptop and it responds far better than the old one. Faster internet is similar and all of a sudden streaming is routine and painless. If your phone has more memory you can more freely shoot pictures and video at the highest resolution. At the same time, the new computer can bring problems we all recognize. Often our software does not move smoothly over to the new computer. Sometimes a beloved program is incompatible with the new computer and a new one must be adopted. This sort of change is difficult whenever it is encountered.

Each of these commonplace things has a parallel in the more technical world of professional computing. There are places where proving the improvement from the new computer is difficult. In some cases, it is so difficult that it is actually an article of faith, not science. It is in these areas where science needs to step up and provide the means of proof. My broad observation is that the view of the faster, bigger computer as a good thing is largely an article of faith. Without the means to prove it, we are left to believe. Belief is neither science nor reliable. By and large, we are not doing the necessary work to make this a fact. That work means recognizing where the gaps result in faith being applied dangerously.

A more critical issue is the recession of algorithmic progress. As it becomes harder to make computers faster the way we did in the past, algorithms are the means to progress. Instead, we have doubled down on computers even as they become a worse path to progress. This is just plain stupid. Algorithmic progress requires different strategies, ones that embrace risky, failure-prone research. Progress in algorithms occurs in leaps and bounds after long fallow periods. It also requires investments in thinking, particularly in mathematics.

“If I had asked people what they wanted, they would have said faster horses.” ― Henry Ford

Verification in Classical Computational Science

“Trust, but verify.” ― Felix Edmundovich Dzerzhinsky

This situation is clearest in traditional computational science. In areas where computers are classically employed to solve science problems, the issues are well known. To a large extent, mathematical foundations are firmly established and employed. The math is a springboard for progress. A long and storied track record of achievement exists to provide examples. For most of the history of computing, scientific computing drove the advances and laid the groundwork for today’s computational wonders. All of it is built on a solid foundation of mathematics and domain science. Today, progress lacks these advantages.

In no area was the advance more powerful than the solution of (partial) differential equations. This was the original killer app. Computers were employed to design nuclear weapons, understand the weather, simulate complex materials, and more. These tasks produced the will to create generations of supercomputers. They also drove the creation of programming languages and operating systems. Eventually, computers leaked out to be used for business purposes. Tasks such as accounting were obvious, along with related business systems. Still, scientific computing was the vanguard. It is useful to examine its foundations. More importantly, it is useful to see where the foundations in other areas are weak. We have a history of success to guide our path ahead.

The impact of computing on society today is huge and powerful. It forms the basis of powerful businesses. The incredible run-up of the stock market is all computing. The promise of artificial intelligence is driving recent advances. Most of this is built on a solid technical foundation. Yet in key areas of progress, the objective truth of improvements is flimsy. This is not good. We are ignoring history. In the long run, we are threatening the sustainability of progress and economic success. We need sustained strategic investment in mathematical foundations and algorithmic research. If not, we put the entire field at extreme risk.

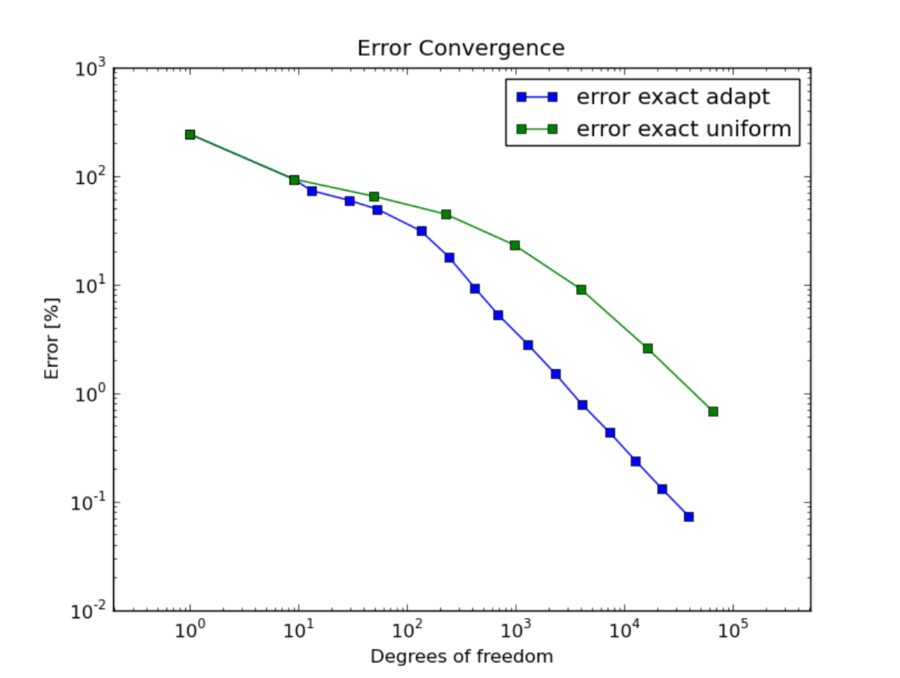

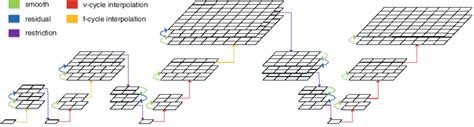

If one goes back to the origins of computational science, its use showed promise first, initially in application to nuclear weapons and then rapidly with weather and climate. Based on this success, the computers were advanced as the technology was refined. As these efforts began to yield progress, mathematics joined in. One of the key bits of theoretical work was establishing conditions for proper numerical solutions of models. Chief among this theory was the equivalence theorem by Peter Lax (along with Robert Richtmyer). This theorem established conditions for the convergence of numerical solutions to the exact solution of models. Convergence means that as more computing is applied, the solution gets closer to exact.
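In symbols, the theorem is often summarized as below. This is a schematic restatement rather than the original formulation, and the notation is assumed here for illustration: $u$ is the exact solution, $u_h$ is the numerical solution on a mesh of spacing $h$, and $C$ and $p$ are a constant and the order of accuracy.

$$
\text{consistency} + \text{stability} \iff \text{convergence},
\qquad
\lim_{h \to 0} \| u_h - u \| = 0
$$

The statement applies to consistent schemes for well-posed linear initial value problems. For a method of order $p$ and a sufficiently smooth solution, one then expects the error to behave like $\| u_h - u \| \approx C h^p$ once $h$ is small enough.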

This is the theoretical justification for more computing. More computing power produces more accuracy. This is a pretty basic assumption made with computing, but it does not come for free. To get convergence, the methods must do things correctly. In the same breath, the theory tells us how to do things better as well. Just as importantly, the theorem gives us guidance on how to check for correctness. This is the foundation for the practice of verification.

In verification, we can do many things. In its simplest form, we get evidence of the correctness of the method. We can get evidence that the method is implemented correctly and provides the accuracy advertised. This is essential for trustworthy, credible computational results. With these guarantees in place, the work done with computational science can be used with confidence. This confidence then allows it to be invested in and trusted. Verification and theory together provide a reliable means to improve methods and measure the impact. For 70 years this has been a guiding light for computational science.
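To make this concrete, here is a minimal sketch of a convergence test, one of the simplest forms of verification. It is an illustration under stated assumptions, not a method taken from this essay: the test problem (u' = -u with u(0) = 1, whose exact solution is exp(-t)), the two time steppers (forward Euler and Heun's method), and all function names are chosen here for the example. The idea is to halve the step size repeatedly, measure the error against the known exact solution, and compare the observed order of accuracy, log2 of the ratio of successive errors, with the order the theory advertises (1 for forward Euler, 2 for Heun).

```python
import math


def integrate(step, f, u0, t_end, n_steps):
    """Advance u' = f(t, u) from t = 0 to t_end using n_steps equal steps."""
    h = t_end / n_steps
    t, u = 0.0, u0
    for _ in range(n_steps):
        u = step(f, t, u, h)
        t += h
    return u


def euler_step(f, t, u, h):
    """Forward Euler: theoretical order of accuracy 1."""
    return u + h * f(t, u)


def heun_step(f, t, u, h):
    """Heun's method (explicit trapezoid): theoretical order of accuracy 2."""
    k1 = f(t, u)
    k2 = f(t + h, u + h * k1)
    return u + 0.5 * h * (k1 + k2)


def observed_orders(step, f, u0, t_end, exact, n_coarsest=20, levels=5):
    """Halve the step size `levels - 1` times and return the observed
    order of accuracy between each pair of successive resolutions."""
    errors = []
    for level in range(levels):
        n_steps = n_coarsest * 2 ** level
        errors.append(abs(integrate(step, f, u0, t_end, n_steps) - exact))
    # With the step halved each time, error ~ C * h**p implies
    # p ~ log(e_coarse / e_fine) / log(2).
    return [
        math.log(errors[i] / errors[i + 1]) / math.log(2.0)
        for i in range(levels - 1)
    ]


def decay(t, u):
    """Right-hand side of the assumed test problem u' = -u."""
    return -u


if __name__ == "__main__":
    exact = math.exp(-1.0)  # exact solution of the test problem at t = 1
    for name, step in (("forward Euler", euler_step), ("Heun", heun_step)):
        orders = observed_orders(step, decay, 1.0, 1.0, exact)
        print(name, [round(p, 3) for p in orders])
```

If the observed order settles near the theoretical one as the step shrinks, that is evidence the method is implemented correctly. An observed order that stalls below the theoretical value usually points to a bug or to a problem outside the theory's assumptions.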

We should be paying attention to its importance moving forward. We are not.

Machine Learning and Artificial Intelligence Are An Issue

“If people do not believe that mathematics is simple, it is only because they do not realize how complicated life is.” ― John von Neumann

More recently, the promise of artificial intelligence (AI) has grabbed the headlines. Actually, the technical foundation for AI is machine learning (ML). The breakthrough of generative AI with Large Language Models (LLMs) has rightly captured the world’s imagination and interest. A combination of algorithm (method) advances and high-end computing powers LLMs. These LLMs are one of the strongest economic driving forces in the world. Their power is founded partly on the fruits of computational science discussed above. This includes computers, software, and algorithms. Unfortunately, this history of success is not receiving sufficient attention.

The current developments and investments in AI/ML are focused on computers (Big Iron, following the exascale program’s emphasis). Secondarily, software is given much attention. Missing from both investment and attention are algorithms and applied math. We seem to have lost the ability to focus on laying the groundwork for algorithm advances. A key driver for algorithmic advances is applied mathematics, where the theory guides practice.

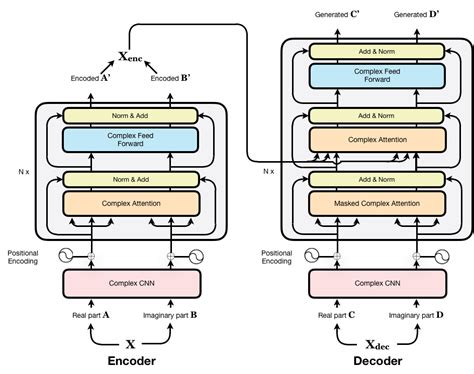

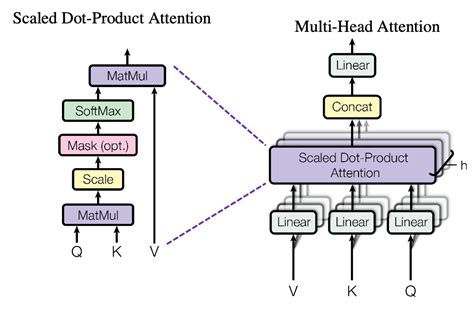

During the formative years of computational science, applied math gave key guidance. Theoretical advances and knowledge are essential to progress. Today that experience seems to be on the verge of being forgotten. The irony is that the LLM breakthrough of the past few years was dominated by an algorithmic innovation: the transformer architecture. Its attention mechanism is responsible for the phase transition in performance. It is what produced the amazing LLM results that grabbed everyone’s notice. Investments in mathematics could provide avenues for the next advance.

What is missing today is much of the mathematical theory driving credibility and trustworthy methods.

One of the essential aspects of computational science is the concept of convergence. Convergence means more computation yields better results. Mathematics provides the theory underpinning this idea. The process to demonstrate this is known as verification. In verification, convergence is used to provide evidence of the correctness and accuracy of algorithms. One of the biggest problems for AI/ML is the lack of theory. This problem is a lack of rigor. Thus the process of verification is not available. Furthermore, the understanding of accuracy for AI/ML is similarly threadbare. Investment and focus to fill these gaps are needed and long overdue.

One of the problems is that this research is extremely difficult and success is not guaranteed. It is likely to be failure-prone and to take some time. Nonetheless, the stakes of not having such a theory are growing. Moreover, success would likely provide pathways for improving algorithms. Many essential ML methods are ill-behaved and perform erratically. Better mathematical theory involving convergence could pave the way for better ML. The theory tells us what works and how to structurally improve techniques. This is what happened in computational science. We should expect the same for AI/ML. Likely, this work would significantly improve trust in systems as well. The combination of trust, efficiency, and accuracy should be enough to inspire investments. That is, if a logical, rational policy were in place.

It is not, either in government or in private enterprise. We will all suffer for this lack of foresight.

“Today’s scientists have substituted mathematics for experiments, and they wander off through equation after equation, and eventually build a structure which has no relation to reality.” ― Nikola Tesla

Algorithms Win and Verification Matters

Anyone who has read my writing knows that I see algorithms as a clear winning path for computing. Verification is the testing and measurement of algorithmic performance. If one is interested in better computing, algorithmic verification is a vehicle for progress. Verification is about producing evidence of correctness and performance. This provides a concrete measurement of algorithmic performance, which can be an engine for progress. In a future without Moore’s law, algorithms are the path to improvement.

“Pure mathematics is in its way the poetry of logical ideas.” ― Albert Einstein

As I’ve written before, algorithmic improvement is currently hampered by a lack of support. Some of this is funding and the rest is risk aversion. Algorithmic research is highly failure-prone and progress is episodic. A great deal of tolerance for risk and failure is necessary for algorithm advances. All of this can benefit from a focused verification effort, which can measure the impact of work and provide immediate feedback. The mathematical expectations underpinning verification can also provide the basis for improvements. This math provides focus and inspiration for the work.

“We can only see a short distance ahead, but we can see plenty there that needs to be done.” ― Alan Turing