TL;DR

Right now, there is a lot of discussion of AI job cuts on the horizon. Computer coders are at the top of the list, along with other white-collar jobs. It is one of the dumbest things I can imagine. The reasons are legion. First, you take the greatest proponents of AI at work and make many of them angry and scared. You create Luddites. Second, it fails to recognize that AI is an exceptional productivity enhancement. It should make these people more valuable, not remove their jobs. Layoffs are simply scarcity at work. It is cruel and greedy. It is short-sighted in the extreme. It is looking at AI as the glass half empty. The last point gets to the bullshit jobs that many people do in full or in part. Rather than a job killer, I think AI is a bullshit job detector. We can use it to get rid of those jobs and find ways to make work more human, creative, and productive. This is a path to abundance and a better future.

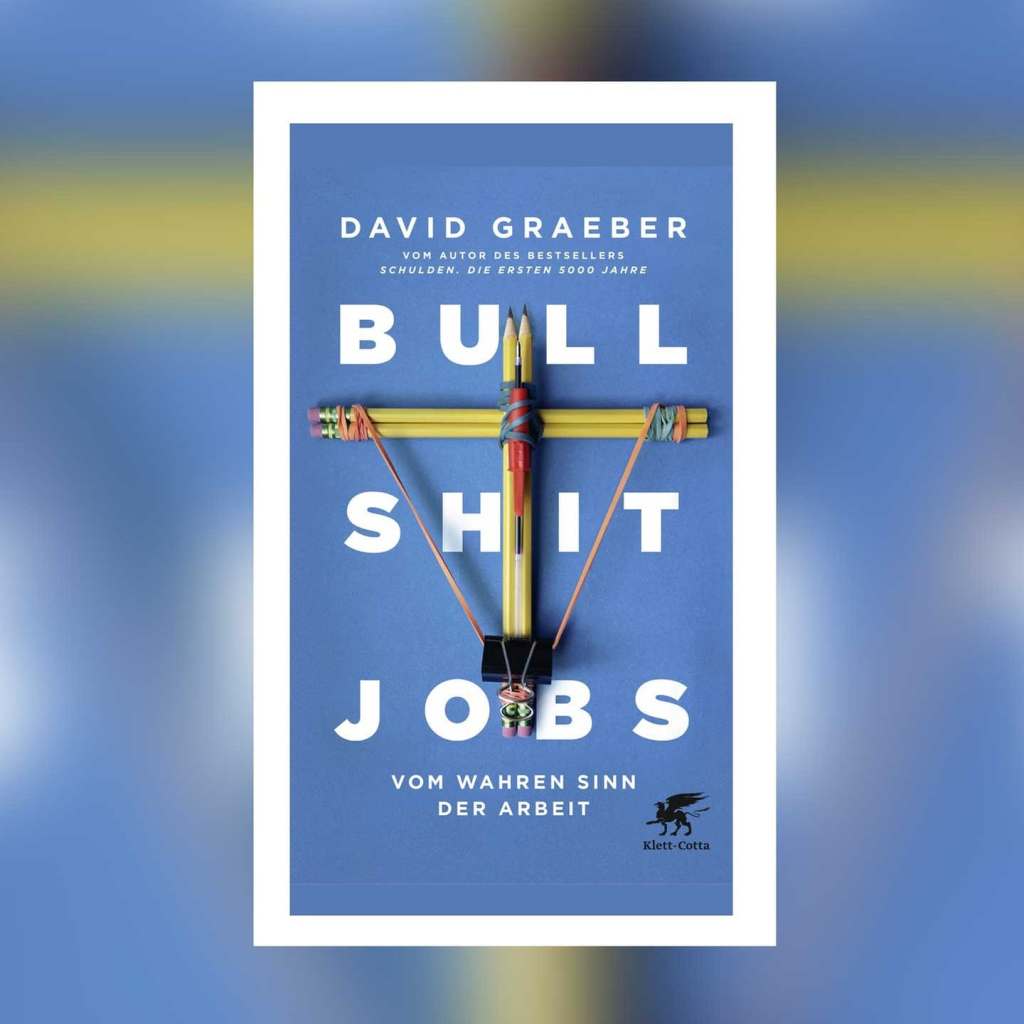

“It’s hard to imagine a surer sign that one is dealing with an irrational economic system than the fact that the prospect of eliminating drudgery is considered to be a problem.” ― David Graeber, Bullshit Jobs: A Theory

AI as a Threat, Instead of as a Gift

Lately, the news has been full of reports that white-collar jobs are going to be replaced by AI. I do a white-collar job. I also work with AI in research. I have first-hand knowledge of how much prowess AI has in my areas of expertise. Hint: its prowess is novice and naive at best. Its ability is quite superficial. As soon as the prompt asks for anything nuanced or deep, AI falls flat on its face. I think I have a hell of a lot to say about this.

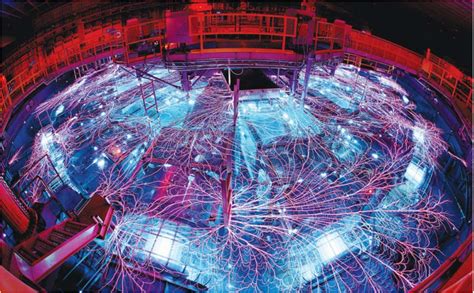

Back to the concern about vast numbers of white-collar workers. Note that computer programmers are at the top of the “hit list.” The concern is that all of this will lead to widespread unemployment of educated and talented people. At the same time, those of us who use AI professionally can see the stupidity of this. Firing all these people would be a huge mistake. All the claims about cutting jobs with AI, and the desire to do it, make the people saying this look like idiots. These idiots have a lot of power and a vested interest in profiting from that AI. They are mostly AI managers who have lots to gain. In all likelihood, they are just as full of shit as my managers are. My own managers are constantly bullshitting their way through reality whenever they are in public. At the Labs, the comments about fusion are at the top of the bullshit parade. They are a great example of stupid shit that sells to nonexperts.

“Shit jobs tend to be blue collar and pay by the hour, whereas bullshit jobs tend to be white collar and salaried.” ― David Graeber, Bullshit Jobs: A Theory

AI Can Do Bullshit Jobs

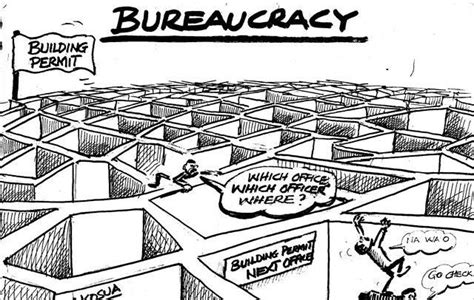

I think this narrative should be more clearly connected to another concept, “Bullshit Jobs.” These are jobs that add little to society and merely make work for lots of people. These jobs also exact an effort tax on every person. They drive up the cost of work and the time it takes. Most of my exorbitant cost at work is driven by people doing bullshit jobs (my cost is more than 3 times my salary).

On top of that, they lower my productivity. What I’ve noticed is that these jobs are mostly related to a lack of trust and lots of checks on stuff. They don’t produce anything, but make sure that I do. My day is full of these things, coming from every corner and touching every activity. I think a hallmark of these jobs is the extent to which AI could do them. I will then take this a step further: if AI can do a job, perhaps that job should not be done at all. These jobs are actually beneath the humanity of the people doing them. We need to devote effort to better jobs for people.

The real question is what we do with all the people who do these bullshit jobs. The AI elite today seem to be saying just fire everyone and reduce payroll. This is an extremely small-minded approach. It is pure greed combined with pessimism and stupidity. The far better approach would be to retool these jobs and people to be creators of value. Unleash creativity and ideas using AI to boost productivity and success. A big part of this is to take more risks and invest in far more failed starts. Allowing more failures will allow more new successes. Among these risks and failed starts are great ideas and breakthroughs that lie fallow today. They lie fallow under the yoke of the lack of trust that fuels the bullshit jobs. If AI is truly a boon to humanity, we should see an explosion of growth, not mass unemployment.

“We have become a civilization based on work—not even “productive work” but work as an end and meaning in itself.” ― David Graeber, Bullshit Jobs: A Theory

Why don’t we hear a narrative of AI-driven abundance? One really has to wonder if our AI masters are really that smart if their sales pitch is “fire people.” I will just come out and say that the idea of firing swaths of coders because of AI is one of the dumbest things ever. The real answer is to write more code and do more things. The real experience of coders is that AI helps, but ultimately the expert person must be “in the loop.” AI is incapable of replacing code developers. The expert developer is absolutely essential to the process; AI just makes them more efficient. We need to embrace the productivity gains and grow the pie. Instead, we are ruled by small-minded greed rather than growth-minded vision.

“A human being unable to have a meaningful impact on the world ceases to exist.” ― David Graeber, Bullshit Jobs: A Theory

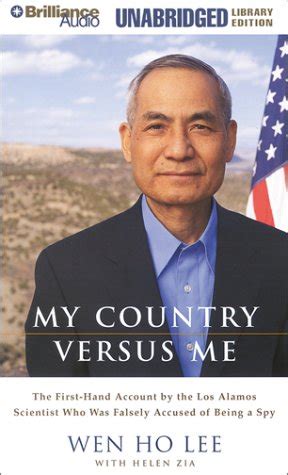

A Painful Lesson

To reiterate, if AI can do your job, there’s a good chance that your job is bullshit. AI is an enhancement for productivity, and it should allow you to be free of much of the bullshit. What companies and organizations should do is illuminate the work and jobs that can be done by AI. If AI can do the job entirely, the job isn’t worth doing. They should use the money this frees up to boost productivity and enhance what is done, not to cut people’s employment. We’ve seen this mentality in attacks on government programs. This is the single greatest failing of Elon Musk and DOGE. They didn’t realize that what they really needed was to unleash people to do more creative and better work. It is not about getting rid of the work; it is about improving the work that is done.

“‘Efficiency’ has come to mean vesting more and more power to managers, supervisors, and presumed ‘efficiency experts,’ so that actual producers have almost zero autonomy.” ― David Graeber, Bullshit Jobs: A Theory

I’ve written about AI as producing bullshit. What if AI is a way of detecting bullshit? The real truth is that when it comes to American science, there’s far too little creativity and far too little freedom to do amazing work. Sometimes amazing work cannot be recognized until it is tried. It looks stupid or insane, worthy of ridicule, until its genius is obvious. Or it may turn out not to have been worth trying, but you don’t know until you try. A lot of bureaucratic bullshit stands in the way of progress. One reason for this is the insane number of bullshit jobs. Their cost is huge, as our outrageous overhead rates show. In addition, they make bullshit work for those of us trying to produce science. They get in the way of productivity in a myriad of ways with required bullshit that has no value.

What we really need to do is eliminate the bullshit and free up the mind and the creativity. We already aren’t spending enough on science, and what is spent is spent very unproductively. We don’t take the risks we need for breakthroughs and don’t allow the right kinds of failure. A variety of forms of bullshit jobs lead the way. Managers obsess over meaningless, repetitive reviews. They micromanage and apply far too much accounting. All of this kills creativity and undermines breakthroughs. Managers should know what we do, but they should learn it through managing, not through contrived reporting mechanisms. They should create a productive environment. There should be much more effort to determine what would be better for our lives and better for productivity.

AI helps with this in some focused ways. It can help to supercharge the abilities of creative and talented scientists. Just get the bullshit out of the way. I’ve found that AI is really good at churning out this bullshit. The best answer is to stop doing any bullshit that AI is capable of producing. It is a telltale sign that the work is worthless.

“Young people in Europe and North America in particular, but increasingly throughout the world, are being psychologically prepared for useless jobs, trained in how to pretend to work, and then by various means shepherded into jobs that almost nobody really believes serve any meaningful purpose.” ― David Graeber, Bullshit Jobs: A Theory

If your job is so mundane, so routine, and so rudimentary that AI can do it, the best option is to delete it. It is a serious question to ask about a job. Most of the bullshit jobs revolve around a lack of trust, which is really a broader social issue. In my life, it has become a science productivity issue. If there are reports and things that AI could just as well produce, the best option is to not produce them at all because no one needs to read them. A very large portion of our reporting is never really read. If no one reads a piece of writing, should it even exist? We have a duty as a society to give people productive, useful work. Every job should have that undeniable spark of humanity.

The other part of this dialog is about what kind of future we want. Do we want a scarce future where technology ravages good jobs, where corporations simply think about maximizing money for the rich and care little about their employees? Do we want a future where technology like AI takes humanity away? Instead, we should want abundance and growth. We should want technology that enhances our humanity and reduces our drudgery. AI should be a tool to unleash our best. Any job should also require the spark of humanity to produce genuine value. AI should raise our standard of living and allow more time for leisure, art, and the pursuit of pleasure. It should directly lead to a better world to live in.

“Yet for some reason, we as a society have collectively decided it’s better to have millions of human beings spending years of their lives pretending to type into spreadsheets or preparing mind maps for PR meetings than freeing them to knit sweaters, play with their dogs, start a garage band, experiment with new recipes, or sit in cafés arguing about politics, and gossiping about their friends’ complex polyamorous love affairs.” ― David Graeber, Bullshit Jobs: A Theory