Simply, in a word, NO, I’m not productive.

Most of us spend too much time on what is urgent and not enough time on what is important.

― Stephen R. Covey

A big part of productivity is doing something worthwhile and meaningful. It means attacking something important and creating innovative solutions. I do a lot of things every day, but very little of it is either worthwhile or meaningful. At the same time I’m doing exactly what I am supposed to be doing! This means that my employer (or masters) is asking me to spend all of my valuable time doing meaningless, time-wasting things as part of my conditions of employment. This includes trips to stupid, meaningless meetings with little or no content of value, compliance training, project planning, project reports, e-mails, jumping through hoops to get a technical paper, and a smorgasbord of other paperwork. Much of this is foisted upon us by our governing agency, coupled with rampant institutional over-compliance or managerially driven ass covering. All of this leaves no time or focus for anything that actually matters, squeezing out all the potential for innovation. Many of these actions directly create an environment where risk and failure are not tolerated, killing innovation before it can even attempt to appear.

Worse yet, the environment designed to provide “accountability” is destroying the very conditions innovative research depends upon. Thus we are completely accountable for producing nothing of value.

Every single time I do what I am supposed to do at work my productivity is reduced. The only thing not required of me at work is actual productivity. All my training, compliance, and other work activities are focused on things that produce nothing of value. At some level we fail to respect our employees and end up wasting our lives by having us invest time in activities devoid of content and value. Basically the entire apparatus of my work is focused on forcing me to do things that produce nothing worthwhile other than providing a wage to support my family. Real productivity and innovation are all on me and increasingly a pro bono activity. The fact that actual productivity isn’t a concern for my employer is really fucked up. The bottom line is that we aren’t funded to do anything valuable, and the expectations on me are all bullshit and no substance. It’s been getting steadily worse with each passing year too. When my employer talks about efficiency, it is all about saving money, not producing anything for the money we spend. Instead of focusing on producing more or better with the funding and unleashing the creative energy of people, we focus on penny pinching and making the workplace more unpleasant and genuinely terrible. None of the changes make for a better, more engaging workplace; they simply keep reducing the empowerment of employees.

One of the big questions to get to is: what is productive in the first place?

There is nothing quite so useless, as doing with great efficiency, something that should not be done at all.

― Peter F. Drucker

Productivity is creating ideas and things, whether it is science or art. Done properly, science is art. I need to be unleashed to provide value for my time. Creativity requires focus and inspiration, making the best of opportunities provided by low-hanging fruit. Science can be inspired by good mission focus producing clear and well-defined problems to solve. None of these wonderful things is the focus of efficiency or productivity initiatives today. Every single thing my employer does puts a leash on me and undermines productivity at every turn. This leash is put into a seemingly positive form of accountability, but it never asks or even allows me to be productive in the slightest.

Poorly designed and motivated projects are not productive. Putting research projects into a project management straitjacket makes everything worse. We make everything myopic and narrow in focus. This kills one of the biggest sources of innovation. Most breakthroughs aren’t totally original, but rather the adaptation of a mature idea from one field into another. It is almost never a completely original thing. Our focused and myopic management destroys the possibility of these innovations. Increasingly our projects and proposals are all written to elicit funding, not produce the best results. We produce a system that focuses on doing things that are low risk with a nearly guaranteed payoff, which results in terrible outcomes where progress is incremental at best. The result is a system where I am almost by definition not productive if I do exactly what I’m supposed to do. The entire apparatus of accountability is absurd and an insult to productive work. It sounds good, but it’s completely destructive, and we keep adding more and more of it.

Why am I wasting my time? I could produce so much with a few hours of actual work. I read article after article that says I should be able to produce incredible results working only four hours a day. The truth is that we are creating systems at work that keep us from doing anything productive at all. I have to go to incredible lengths to get anything close to four hours of actual scientific focus time. We are destroying our ability to work effectively, all in the name of accountability. We destroy work in the process of assuring that work is getting done. It’s ironic, it’s tragic, and it’s totally unnecessary.

If you want something new, you have to stop doing something old

― Peter F. Drucker

The key question is how do we get to the point of not wasting my time with this shit? Accountability sounds good, but underneath its execution is a deep lack of trust. The key to allowing me to be productive is to trust me. We need to realize that our systems at work are structured to deal with a lack of trust. Implicit in all the systems is a feeling that people need to be constantly checked up on. If people aren’t constantly being checked up on, the assumption is that they are fucking off. The result is an almost complete lack of empowerment, and a labyrinth of micromanagement. To be productive we need to be trusted, and we need to be empowered. We need to be chasing big important goals that we are committed to achieving. Once we accept the goals, we need to be unleashed to accomplish them. Along the way we need to solve all sorts of problems, and in the process we can provide innovative solutions that enrich the knowledge of humanity and society at large. This is a tried and true formula for progress that we have lost faith in, and with this lack of faith we have lost trust in our fellow citizens.

The whole system needs to be oriented toward the positive and away from the underlying premise that people cannot be trusted. This goes hand in hand with the cult of the manager today. If one looks at the current organizational philosophy, the manager is king and the apex of importance. Work is to be managed and controlled. The workers are just cogs in the machine, interchangeable and utterly replaceable. To be productive, the work itself needs to be celebrated and enabled. The people doing this work need to be the focus of the organization, and getting wonderful work done should be enabled by its actions. The organization needs to enable and support productive work, and create an environment that fosters the best in people. Today’s organizations are centered on expecting and controlling the worst in people with the assumption that they can’t be trusted. If people are treated like they can’t be trusted, you can’t expect them to be productive. To be better and productive, we need to start with a different premise. To be productive we need to center and focus our work on the producers, not the managers. We need to trust and put faith in each other to solve problems, innovate and create a better future.

To be happy we need something to solve. Happiness is therefore a form of action;

― Mark Manson

decisions for business, money is all that matters. If it puts more money in the pockets of those in power (i.e., the stockholder), it is by current definition a good decision. The flow and availability of money is maximized by a short business cycle, and an utter lack of long-term perspective. In the work that I do, we define the correctness of our work by whether money is allocated for it. This attitude has led us toward some really disturbing outcomes.

s second World standards, and for the poor third World standards. The outcomes for our citizens follow these outcomes in terms of life expectancy. The reason for our terrible health care system is as clear as day: money. More specifically, the medical system is tied to the profit motive rather than responsibility and ethics, resulting in outcomes being directly linked to people’s ability to pay. The moral and ethical dimension of health care in the United States is indefensible and appalling. It is because money is the prime mover for decisions. Worse yet, the substandard medical care for most of our citizens is a drain on society and produces awful results, but provides a vast well of money for the rich and wealthy to leech off of.

Money is a tool. Period. Computers are tools too. When tools become reasons and central organizing principles we are bound to create problems. I’ve written volumes on the issues created by the lack of perspective on computers as tools as opposed to ends unto themselves. Money is similar in character. In my world these two issues are intimately linked, but the problems with money are broader. Money’s role as a tool is as a surrogate for value and worth that can be exchanged for other things of value. Money’s meaning is connected to the real-world things it can be exchanged for. We have increasingly lost this sense and put ourselves in a position where value and money have become independent of each other. This independence is truly a crisis and leads to severe misallocation of resources. At work, the attitude is increasingly “do what you are paid to do,” “the customer is always right,” “we are doing what we get funded to do.” The law, training and all manner of organizational tools enforce all of this. This is shadowed by business, where the ability to make money justifies anything. We trade, destroy and carve up businesses so that stockholders can make money. All sense of morality, justice, and long-term consequence is sacrificed if money can be extracted from the system. Today’s stock market is built to create wealth in this manner, and this is legally enforced. The true purpose of the stock market is to create resources for businesses to invest and grow. This purpose has been completely lost today, and the entire apparatus is in place to generate wealth. This wealth generation is done without regard for the health of the business. Increasingly we have used business as the model for managing everything. To its disservice, science has followed suit and lost the sense of long-term investment by putting business practice into use to manage research. In many respects the core religion in the United States is money and profit, with its unquestioned supremacy as an organizing and managing principle.

needed, we construct programs to get money. Increasingly, the way we are managed pushes a deep level of accountability to the money instead of value and purpose. The workplace messaging is “only work on what you are paid to do.” Everything we do is based on the customer who is writing the checks. The vacuous and shallow end results of this management philosophy are clear. Instead of doing the best thing possible for real-world outcomes, we propose what people want to hear and what is easily funded. Purpose, value and principles are all sacrificed for money. The biggest loss is the inability to deal with difficult issues or get to the heart of anything subtle. The money is increasingly uncoordinated and nothing is tied to large objectives. In the trenches people simply work on the thing they are being paid for and learn not to ask difficult questions or think in the long term. The customer cares nothing about the career development or expertise of those they fund. With money put first, our career development and National scientific research are in free fall whether we look at National Labs or Universities.

business is always lost to the possibility of making more money in the now. By the same token, the short-term thinking is terrible for value to society and leads to many businesses simply being chewed up and spit out. Unfortunately our society has adopted short-term thinking for everything, including science. All activities are measured quarterly (or even monthly) against the funded plans. Organizations are driving everyone to abide by this short-term thinking. No one can use their judgment or knowledge gained to change this for values that transcend money. The result is a complete loss of long-term perspective in decision-making. We have lost the ability to care for the health and growth of careers. The defined financial path has become the only arbiter of right and wrong. All of our judgment is based on money: if it’s funded, it is right; if it isn’t funded, it’s wrong. More and more, the long-term interests aren’t funded, so our future withers right in front of us. The only ones benefiting from the short-term thinking are a small number of the wealthiest people in society. Most people and society itself are left behind, but forced to serve their own demise.

need to receive a significant benefit for putting off short-term profit to take the long-term perspective. We need to overhaul how science is done. The notably long-term investment in research must be recovered and freed from the business ideas that are destroying the ability of science to create value. The idea that business practices today are correct is utterly perverse and damaging.

We need a realization of the long-term effects of current attitudes and policies as a loss to everyone. A piece of this puzzle is a greater degree of responsibility for the future on the part of the rich and powerful. Our leaders need to work for the benefit of everyone, not for their accumulation of more wealth and power. Until this fact becomes more evident to the population as a whole we can expect the wealthy and powerful to continue to favor a system that benefits themselves to the exclusion of everyone else.

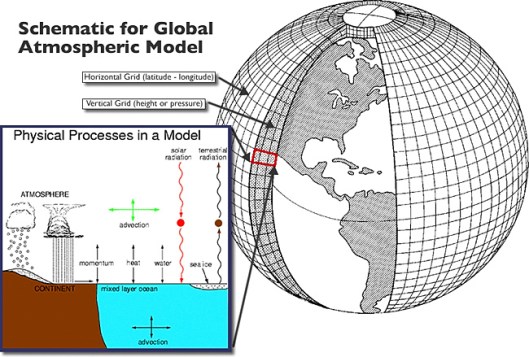

unsatisfactorily understood thing. For the general nonlinear problems dominating the use and utility of high performance computing, the state of affairs is quite incomplete. It has a central role in modeling and simulation, making our gaps in theory, knowledge and practice rather unsettling. Theory is strong for linear problems where solutions are well behaved and smooth (i.e., continuously differentiable, or at least many derivatives exist). Almost every problem of substance driving National investments in computing is nonlinear and rough. Thus, we have theory that largely guides practice by faith rather than rigor. We would be well served by a concerted effort to develop theoretical tools better suited to our reality.

n that the solution approaches the exact solution as the manner of approximation grows closer to a continuum, which is associated with smaller discrete steps/mesh and more computational resources. This theorem provides the basis and ultimate drive for faster, more capable computing. We apply it most of the time where it is invalid. We would be greatly served by having a theory that is freed of these limits. Today we just cobble together a set of theories, heuristics and lessons into best practices and we stumble forward.
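To make the gap between smooth theory and rough practice concrete, here is a minimal sketch that measures the observed order of accuracy of a first-order upwind scheme for linear advection on a periodic unit domain. Everything in it is an assumption chosen for illustration rather than something taken from a production code: the function names (`upwind_advect`, `l1_error`, `observed_order`), the unit wave speed, the CFL number of 0.5, the final time, and the two test profiles (`smooth`, `square`).

```python
import math


def upwind_advect(u0, cfl, nsteps):
    """First-order upwind for u_t + u_x = 0 on a periodic grid (unit wave speed assumed)."""
    u = list(u0)
    for _ in range(nsteps):
        # u[i - 1] wraps around for i == 0, which gives the periodic boundary.
        u = [u[i] - cfl * (u[i] - u[i - 1]) for i in range(len(u))]
    return u


def l1_error(n, profile, cfl=0.5, t_final=0.5):
    """L1 error on n cells, measured against the exactly shifted initial profile."""
    dx = 1.0 / n
    nsteps = round(t_final / (cfl * dx))
    x = [(i + 0.5) * dx for i in range(n)]
    u = upwind_advect([profile(xi) for xi in x], cfl, nsteps)
    shift = nsteps * cfl * dx  # distance travelled at unit speed
    exact = [profile((xi - shift) % 1.0) for xi in x]
    return dx * sum(abs(a - b) for a, b in zip(u, exact))


def observed_order(profile, n=100):
    """Convergence rate estimated from the errors on n and 2n cells."""
    return math.log(l1_error(n, profile) / l1_error(2 * n, profile), 2.0)


def smooth(x):
    return math.sin(2.0 * math.pi * x)


def square(x):
    return 1.0 if 0.25 < x < 0.75 else 0.0


if __name__ == "__main__":
    print("smooth profile, observed order:", observed_order(smooth))
    print("square wave, observed order:", observed_order(square))
```

Under these assumptions the smooth profile should converge at close to the scheme's nominal first order, while the square wave should converge at only about half that rate. Refining the mesh still helps in the rough case, but the rate promised by the smooth-solution theory is no longer what you get.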

polynomials and finite elements. All of these methods depend to some degree on solutions being well behaved and nice. Most of our simulations are neither well behaved nor nice. We assume an idealized nice solution, then approximate using some neighborhood of discrete values. Sometimes this is done using finite differences, or cutting the world into little control volumes (equivalent in simple cases), or creating finite elements and using variational calculus to make approximations. In all cases the underlying presumption is smooth, nice solutions, while most of the useful applications of these approximations violate that presumption. Reality is rarely well behaved or nice, so we have a problem. Our practice has done reasonably well and taken us far, but a better, more targeted and useful theory might truly unleash innovation and far greater utility.

We don’t really know what happens when the theory falls apart, and simply rely upon bootstrapping ourselves forward. We have gotten very far with very limited theory, and are simply moving forward largely on faith. We do have some limited theoretical tools, like conservation principles (the Lax-Wendroff theorem) and entropy solutions (converging toward solutions associated with viscous regularization consistent with the second law of thermodynamics). The thing we miss is a general understanding of what is guiding accuracy and defining error in these cases. We cannot design methods specifically to produce accurate solutions in these circumstances, and we are guided by heuristics and experience rather than rigorous theory. A more rigorous theoretical construct would provide a springboard for productive innovation. Let’s look at a few of the tools available today to put things in focus.
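The role of conservation form can be seen in a small experiment. The sketch below is an illustration under stated assumptions, not anyone's production method: it advances a step profile for the inviscid Burgers equation with two first-order upwind updates that look equivalent for smooth data, one in flux-difference (conservative) form and one in advective (non-conservative) form. The names `advance`, `shock_position` and `run`, the inflow value of 1, the grid size and the CFL number are all hypothetical choices.

```python
def burgers_flux(u):
    return 0.5 * u * u


def advance(u, cfl, nsteps, conservative, u_inflow=1.0):
    """Upwind update for Burgers' equation, assuming u >= 0 and a fixed inflow value.

    Here cfl stands for dt / dx, which equals the CFL number because max(u) = 1.
    """
    u = list(u)
    for _ in range(nsteps):
        prev = [u_inflow] + u[:-1]  # left neighbour of every cell
        if conservative:
            # Flux-difference form of u_t + (u^2 / 2)_x = 0
            u = [ui - cfl * (burgers_flux(ui) - burgers_flux(um))
                 for ui, um in zip(u, prev)]
        else:
            # Advective form of u_t + u * u_x = 0
            u = [ui - cfl * ui * (ui - um) for ui, um in zip(u, prev)]
    return u


def shock_position(u, dx, u_left=1.0):
    """Locate the shock from the total mass, assuming a u_left-to-zero step."""
    return dx * sum(u) / u_left


def run(conservative, n=200, cfl=0.5, nsteps=200):
    """Start with u = 1 for x < 0.25 and u = 0 elsewhere, then advance to t = 0.5."""
    dx = 1.0 / n
    u0 = [1.0 if (i + 0.5) * dx < 0.25 else 0.0 for i in range(n)]
    return shock_position(advance(u0, cfl, nsteps, conservative), dx)


if __name__ == "__main__":
    print("conservative form, shock at x =", run(True))
    print("advective form, shock at x =", run(False))
```

For this jump the exact shock speed is 1/2, so at t = 0.5 the shock belongs at x = 0.5. The conservative update puts it there, while the advective update leaves it frozen at x = 0.25 because every cell holding u = 0 multiplies its own update by zero. Both schemes are stable here, and only one converges to the right answer.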

he approximation. In essence the shock wave (or whatever wave is tracked) becomes an internal boundary condition allowing regular methods to be used everywhere else. This typically involves the direct solution of the Rankine-Hugoniot relations (i.e., the shock jump conditions, algebraic relations holding at a discontinuous wave). The problems with this approach are extreme, including unbounded complexity if all waves are tracked, or with solution geometry in multiple dimensions. This choice has been with us since the dawn of computation, including the very first calculations at Los Alamos that used this technique, but it rapidly becomes untenable.
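For a scalar conservation law the jump condition reduces to a single algebraic relation, which makes it easy to show what a tracking method has to evaluate at each tracked wave. The following is a minimal sketch under that scalar simplification (real shock tracking for gas dynamics solves the full system of jump conditions); the helper name `shock_speed` and the choice of Burgers' flux are assumptions for illustration.

```python
def shock_speed(flux, u_left, u_right):
    """Rankine-Hugoniot speed s = (f(u_L) - f(u_R)) / (u_L - u_R) for a scalar law."""
    jump = u_left - u_right
    if jump == 0.0:
        raise ValueError("no discontinuity: left and right states are equal")
    return (flux(u_left) - flux(u_right)) / jump


def burgers_flux(u):
    return 0.5 * u * u


if __name__ == "__main__":
    # For Burgers' equation the speed is the average of the two states.
    print(shock_speed(burgers_flux, 2.0, 0.0))
```

A tracked front would then be moved by this speed each time step, with ordinary smooth-solution methods applied on either side of it.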

To address the practical aspects of computation, shock capturing methods were developed. Shock capturing implicitly computes the shock wave on a background grid by detecting its presence and adding a physically motivated dissipation to stabilize its evolution. This concept has made virtually all of computational science possible. Even when tracking methods are utilized, the explosion of complexity is tamed by resorting to shock capturing away from the dominant features being tracked. The origin of the concept came from Von Neumann in 1944, but it lacked a critical element for success: dissipation or stabilization. Richtmyer added this critical element with artificial viscosity in 1948 while working at Los Alamos on problems whose complexity was advancing beyond the capacity of shock tracking to deal with. Together Von Neumann’s finite differencing scheme and Richtmyer’s viscosity enabled shock capturing. It was a proof of principle, and its functionality was an essential springboard for others to have faith in computational science.
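The artificial viscosity idea can be sketched in a few lines. This is an illustration only, assuming the quadratic form commonly associated with Von Neumann and Richtmyer on a one-dimensional staggered grid (densities in cells, velocities at nodes). The function name `artificial_viscosity` and the coefficient value `c_q = 2.0` are assumptions made for this example, not values taken from the original work.

```python
def artificial_viscosity(rho, u, c_q=2.0):
    """Quadratic artificial viscosity per cell on a 1D staggered grid.

    rho : list of cell densities (length n)
    u   : list of node velocities (length n + 1)
    c_q : dimensionless coefficient of order one (assumed value)

    The viscous pressure q is switched on only where the cell is being
    compressed (velocity difference across the cell is negative), which
    is how the method "detects" a shock.
    """
    q = []
    for i, rho_i in enumerate(rho):
        du = u[i + 1] - u[i]
        q.append(c_q * rho_i * du * du if du < 0.0 else 0.0)
    return q


# One compressing cell between an undisturbed cell and an expanding cell.
print(artificial_viscosity([1.0, 1.0, 1.0], [1.0, 1.0, 0.0, 0.5]))
```

The resulting q is added to the pressure in the momentum and energy updates, so the dissipation appears only where the flow is compressing and vanishes elsewhere.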

well served by aggressively exploring these connections in an open-minded and innovative fashion.

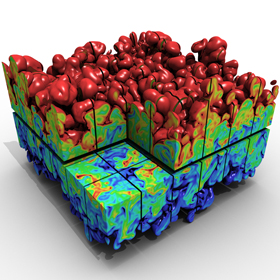

results from the flashy graphics AMR produces to justifiable credible results. A big part of moving forward is putting verification and validation into practice. Both activities are highly dependent on theory that is generally weak or non-existent. Our ability to rigorously apply modeling and simulation to important societal problems is being held back by our theoretical failings.

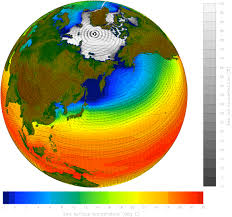

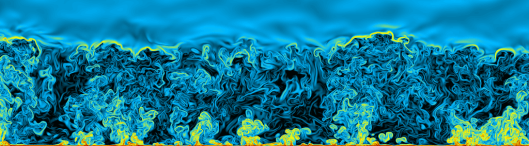

A big issue is a swath of computational science where theory is utterly inadequate, much of it involving chaotic solutions where there is extreme dependence on initial conditions. Turbulence is the classical problem most closely related to this issue. Our current theory and rigorous understanding are vastly inadequate to spur progress. In most cases we are let down by the physics modeling and by the mathematical and numerical theory. In every case we have weak to non-existent rigor, leading to heuristic-filled models and numerical solvers. Extensions of any of this work are severely hampered by the lack of theory (think higher order accuracy, uncertainty quantification, optimization,…). We don’t know how any of this converges; we just act like it does and use it to justify most of our high performance computing investments. All of our efforts would be massively assisted by almost any theoretical progress. Most of the science we care about is chaotic at a very basic level, and lots of interesting things are utterly dependent on understanding this better. The amount of focus on this matter is frightfully low.

of preparation and qualification for the office of President. Since he has taken office, none of Trump’s actions have provided any relief from these concerns. Whether I’ve looked at his executive orders, appointments, policy directions, public statements, conduct or behavior, the conclusion is the same: Trump is unfit to be President. He is corrupt, crude, uneducated, prone to fits of anger, engages in widespread nepotism, and acts utterly un-Presidential. He has nothing to mitigate any of the concerns I felt that fateful Wednesday when it was clear that he had been elected President. At the same time virtually all of his supporters have been unwavering in support for him. The Republican Party seems impervious to the evidence before them about the vast array of problems Trump represents, supporting him, if not enabling his manifest dysfunctions.

at every turn. The Party and its leader, in turn drawing strong support from the common man, are defending the core traditional National identity. This gives both Putin and Trump their political base, from which they can deliver benefits to the wealthy ruling class while giving the common man red meat in the oppression of minorities and non-traditional people. All of this is packaged up with a strongly authoritarian leadership with lots of extra law enforcement and military focus. Both Putin and Trump will promote defending the Homeland from enemies external and internal. Terrorism provides a handy and evil external threat to further drive the Nationalist tendencies.

form of the second law of thermodynamics. What I do is complex and highly technical, full of incredible subtlety. Even when talking with someone from a nearby technical background, the subtlety of approximating physical laws numerically in a manner suitable for computing can be daunting. For someone without a technical background it is positively alien. This character comes into play rather acutely in the design and construction of research programs, where complex, technical and subtle does not sell. This is especially true in today’s world, where expertise and knowledge are regarded as suspicious, dangerous and threatening by so many. In today’s world one of the biggest insults to hurl at someone is to accuse them of being one of the “elite”. Increasingly it is clear that this isn’t just an American issue, but worldwide in its scope. It is a clear and present threat to a better future.

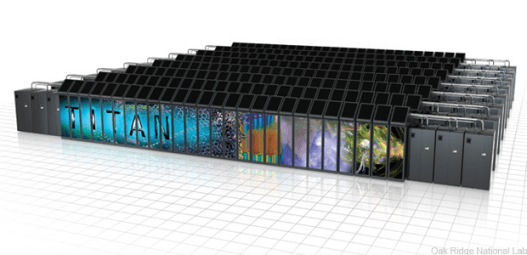

I’ve written often about the sorry state of high performance computing. Our computing programs are blunt and naïve, constructed to squeeze money out of funding agencies and legislatures rather than get the job done. The brutal simplicity of the arguments used to support funding is breathtaking. Rather than construct programs to be effective and efficient, getting the best from every dollar spent, we construct programs to be marketed at the lowest common denominator. For this reason something subtle, complex and technical like numerical approximation gets no play. In today’s world subtlety is utterly objectionable and a complete buzz kill. We don’t care that it’s the right thing to do, or that it is massively greater in return than simply building giant monstrosities of computing. It would take an expert from the numerical elite to explain it, and those people are untrustworthy nerds, so we will simply get the money to waste on the monstrosities instead.

So here I am, an expert and one of the elite, using my knowledge and experience to make recommendations on how to be more effective and efficient. You’ve been warned.

What also needs to be in place is a sense of the value of each activity, and priority placed toward those that have the greatest impact, or the greatest opportunity. Instead of doing this today, we are focused on the thing with least impact, farthest from reality and starving the most valuable parts of the ecosystem. One might argue that the hardware is a subject of opportunity, but the truth is the opposite. The environment for improving the performance of hardware is at a historical nadir; Moore’s law is dead, dead, dead. Our focus on hardware is throwing money at an opportunity that has passed into history.

At the core of the argument is a strategy that favors brute force over subtleties understood mainly by experts (or the elite!). Today the brute force argument always takes the lead over anything that might require some level of explanation. In modeling and simulation the esoteric activities, such as the actual modeling and its numerical solution, are quite subtle and technical in detail compared to the raw computing power that can be understood with ease by the layperson. This is the reason the computing power gets the lead in the program, not because of its efficacy in improving the bottom line. As a result our high performance computing world is dominated by meaningless discussions of computing power defined by a meaningless benchmark. The political dynamic is basically a modern-day “missile gap” like we had during the Cold War. It has exactly as much virtue as the original “missile gap”; it is a pure marketing and political tool with absolutely no technical or strategic validity aside from its ability to free up funding.

model. Together the two activities should help energize high quality work. In reality most programs consider them to be nuisances and box-checking exercises to be finished and ignored as soon as possible. Programs like to say they are doing V&V, but don’t want to emphasize or pay for doing it well. V&V is a mark of quality, but the programs want its approval rather than to attend to its results. Even worse, if the results are poor or indicate problems, they are likely to be ignored or dismissed as being inconvenient. Programs get away with this because the practice of V&V is technical and subtle, and in the modern world highly susceptible to bullshit.

simulation for decades now. Let us be clear: we receive an ever-smaller proportion of the maximum computing power as each year passes. Thirty years ago we would commonly get 10, 20 or even 50 percent of the peak performance of the cutting-edge supercomputers. Today even one percent of the peak performance is exceptional, and most codes doing real application work get significantly less than that. Worse yet, this dismal performance is getting worse with every passing year. This is one element of the autopsy of Moore’s law that we have been avoiding while its corpse rots before us.

poorly understood by non-experts even if they are scientists. The relative merits of one method or algorithm compared to another are difficult to articulate. The merits and the comparison are highly technical and subtle. Since progress is made by creating new methods and algorithms, this means improvements are hard to explain and articulate to non-experts. In some cases both methods and algorithms can produce breakthrough results and huge speed-ups. These cases are easy to explain. More generally a new method or algorithm produces subtle improvements like more robustness, flexibility or accuracy than the older options. Most of these changes are not obvious, but making this progress over time leads to enormous improvements that swamp the progress made by faster computers.

failure just as basic learning is. Without the trust to allow people to gloriously make professional mistakes and fail in the pursuit of knowledge, we cannot develop expertise or progress. All of this lands heavily on the most effective and difficult aspects of scientific computing, the modeling and the numerical solution of the models. Progress on these aspects is both highly rewarding in terms of improvement and very risky, being prone to failure. To compound matters, progress is often highly subjective, itself needing great expertise to explain and be understood. In an environment where the elite are suspect and expertise is not trusted, such work is unsupported. This is exactly what we see: the most important and effective aspects of high performance computing are being starved in favor of brutish and naïve aspects, which sell well. The price we pay for our lack of trust is an enormous waste of time, money and effort.

…the solution of models via numerical approximations. The fact that numerical approximation is the key to unlocking its potential seems largely lost in the modern perspective, and it is engaged in an increasingly naïve manner. For example, much of the dialog around high performance computing is predicated on the notion of convergence. In principle, the more computing power one applies to solving a problem, the better the solution. This is applied axiomatically and relies upon a deep mathematical result in numerical approximation. This heritage and emphasis is not considered in the conversation, to the detriment of its intellectual depth.
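Convergence is something that can be checked rather than assumed. As a minimal sketch of what such a check looks like, the example below estimates the observed order of accuracy from three successively refined calculations without using the exact answer. The model problem (the trapezoid rule applied to the integral of e^x on [0, 1]) and the function names `trapezoid` and `observed_order` are assumptions chosen for illustration; nothing here comes from a particular simulation code.

```python
import math


def trapezoid(f, a, b, n):
    """Composite trapezoid rule with n equal intervals."""
    h = (b - a) / n
    interior = sum(f(a + i * h) for i in range(1, n))
    return h * (0.5 * f(a) + interior + 0.5 * f(b))


def observed_order(coarse, medium, fine, ratio=2.0):
    """Observed convergence rate from three results on grids refined by `ratio`.

    If the error behaves like C * h**p, the differences between successive
    results shrink by a factor of ratio**p, so p can be recovered without
    knowing the exact answer.
    """
    return math.log(abs(coarse - medium) / abs(medium - fine)) / math.log(ratio)


results = [trapezoid(math.exp, 0.0, 1.0, n) for n in (8, 16, 32)]
p = observed_order(*results)
print(p)  # close to 2, the formal order of the trapezoid rule
```

For a smooth problem like this one the observed rate matches the formal order. The interesting cases are the ones where it does not, which is exactly where the theory gives the least guidance.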

systematically ignored by the dialog. The impact of this willful ignorance is felt across the modeling and simulation world: a general lack of progress and emphasis on numerical approximation is evident. We have produced a situation where the most valuable aspect of numerical modeling is not getting focused attention. People are behaving as if the major problems are all solved and not worthy of attention or resources. The nature of the numerical approximation is the second most important and impactful aspect of modeling and simulation work. Virtually all the emphasis today is on the computers themselves, based on the assumption of their utility in producing better answers. The most important aspect is the modeling itself; the nature and fidelity of the models define the power of the whole process. Once a model has been defined, the numerical solution of the model is the second most important aspect. The nature of this numerical solution depends more on the approximation methodology than on the power of the computer.

So why are we so hell bent on investing in a more inefficient manner of progressing? The answer is our mindless addiction to Moore’s law, which has provided improvements in computing power over the last fifty years that have in effect been free for the modeling and simulation community.

Our modeling and simulation programs are addicted to Moore’s law as surely as a crackhead is addicted to crack. Moore’s law has provided a means to progress without planning or intervention for decades; time passes and capability grows almost as if by magic. The problem we have is that Moore’s law is dead, and rather than moving on, the modeling and simulation community is attempting to raise the dead. By this analogy, the exascale program is basically designed to create zombie computers that completely suck to use. They are not built to get results or do science; they are built to get exascale performance on some sort of bullshit benchmark.

approximations is risky and highly prone to failure. You can invest in improving numerical approximations for a very long time without any seeming progress until one gets a quantum leap in performance. The issue in the modern world is the lack of predictability of such improvements. Breakthroughs cannot be predicted and cannot be relied upon to happen on a regular schedule. The breakthrough requires innovative thinking and a lot of trial and error. The ultimate quantum leap in performance is founded on many failures and false starts. If these failures are engaged in a mode where we continually learn and adapt our approach, we eventually solve problems. The problem is that it must be approached as an article of faith, and cannot be planned. Today’s management environment is completely intolerant of such things, and demands continual results. The result is squalid incrementalism and an utter lack of innovative leaps forward.

…payoff is far more extreme than these simple arguments. The archetype of this extreme payoff is the difference between first- and second-order monotone schemes. For general fluid flows, second-order monotone schemes produce results that are almost infinitely more accurate than first-order. The reason for this stunning claim is that the acute differences in the results come from the form of the truncation error expressed via the modified equations (the equations solved more accurately by the numerical methods). For first-order methods there is a large viscous effect that makes all flows laminar. Second-order methods are necessary for simulating high Reynolds number turbulent flows because their dissipation doesn’t interfere directly with the fundamental physics.
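To make the first-order claim concrete, here is a worked sketch for the simplest case, assuming linear advection $u_t + a u_x = 0$ with $a > 0$ solved by first-order upwind differencing. The symbols $C$ (the CFL number $a \Delta t / \Delta x$), $\nu_{\mathrm{num}}$, $L$ and $N$ are introduced here purely for illustration. The modified equation for this scheme is

$$
u_t + a\,u_x = \frac{a\,\Delta x}{2}\,(1 - C)\,u_{xx} + O(\Delta x^2),
$$

so the method behaves like an advection-diffusion equation with a numerical viscosity $\nu_{\mathrm{num}} = \tfrac{1}{2} a\,\Delta x\,(1 - C)$. On a domain of length $L$ with $N = L / \Delta x$ cells, the corresponding effective Reynolds number is

$$
Re_{\mathrm{num}} = \frac{a L}{\nu_{\mathrm{num}}} = \frac{2N}{1 - C}.
$$

With $N = 1000$ and $C = 0.5$ this gives $Re_{\mathrm{num}} = 4000$. In this simple setting the effective Reynolds number is only of the order of the number of cells across the domain, which is the sense in which a first-order method imposes a large viscous effect regardless of the physical viscosity.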

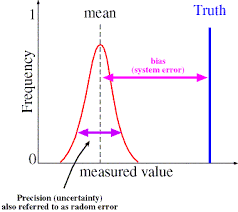

We don’t generally have good tools for numerical error approximation in non-standard (or unresolved) cases. One digestion of one of the key problems is found in Banks, Aslam, Rider where sub-first-order convergence is described and analyzed for solutions of a discontinuous problem for the one-way wave equation. The key result in this paper is the nature of mesh convergence for discontinuous or non-differentiable solutions. In this case we see sub-linear fractional order convergence. The key result is a general relationship between the convergence rate and the formal order of accuracy for the method,  The much less well-appreciated aspect comes with the practice of direct numerical simulation of turbulence (DNS really of anything). One might think that having a DNS would mean that the solution is completely resolved and highly accurate. They are not! Indeed they are not highly convergent even for integral measures. Generally speaking, one gets first-order accuracy or less under mesh refinement. The problem is the highly sensitive nature of the solutions and the scaling of the mesh with the Kolmogorov scale, which is a mean squared measure of the turbulence scale. Clearly there are effects that come from scales that are much smaller than the Kolmogorov scale associated with highly intermittent behavior. To fully resolve such flows would require the scale of turbulence to be described by the maximum norm of the velocity gradient instead of the RMS.
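The sub-linear convergence described for discontinuous solutions of the one-way wave equation is easy to observe numerically. The relation itself survives only as an image in this text; the sketch below assumes the commonly quoted form of the result, a convergence rate of p/(p+1) for a method of formal order p, which predicts a rate of 1/2 for a first-order scheme. The setup (first-order upwind, a periodic unit domain, a square wave, CFL number 0.5) and the function names `upwind_l1_error` and `observed_rates` are assumptions made for this illustration.

```python
import math


def upwind_l1_error(n, cfl=0.5, t_final=0.5):
    """L1 error of first-order upwind for u_t + u_x = 0 on a periodic unit domain."""
    dx = 1.0 / n
    steps = round(t_final / (cfl * dx))
    # Square wave; n is assumed to be a multiple of 4 so the jumps sit on cell edges.
    u0 = [1.0 if 0.25 <= (i + 0.5) * dx < 0.75 else 0.0 for i in range(n)]
    u = list(u0)
    for _ in range(steps):
        # u[i - 1] wraps to u[-1] when i == 0, which gives the periodic boundary.
        u = [u[i] - cfl * (u[i] - u[i - 1]) for i in range(n)]
    # The exact solution is the initial data shifted by a whole number of cells
    # (true for the default cfl and t_final; an assumption of this sketch).
    shift = round(steps * cfl)
    exact = [u0[(i - shift) % n] for i in range(n)]
    return dx * sum(abs(a - b) for a, b in zip(u, exact))


def observed_rates(resolutions):
    """Observed L1 convergence rates between successive resolutions."""
    errors = [upwind_l1_error(n) for n in resolutions]
    rates = []
    for k in range(len(resolutions) - 1):
        refinement = resolutions[k + 1] / resolutions[k]
        rates.append(math.log(errors[k] / errors[k + 1]) / math.log(refinement))
    return rates


rates = observed_rates([100, 200, 400])
print(rates)  # each rate is close to 1/2 rather than the formal order of 1
```

Doubling the mesh only reduces the error by a factor of about the square root of two here, so a formally first-order method delivers roughly half-order convergence once a discontinuity is present.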

When we get to the real foundational aspects of numerical error and limitations, we come to the fundamental theorem of numerical analysis. For PDEs it only applies to linear equations and basically states that consistency and stability together are equivalent to convergence. Everything is tied to this. Consistency means you are solving the equations with a valid and correct approximation; stability means getting a result that doesn’t blow up. What is missing is the theoretical application to more general nonlinear equations, along with deeper relationships to accuracy, consistency and stability. This theorem was derived back in the early 1950s and we probably need something more, but there is no effort or emphasis on this today. We need great effort and immensely talented people to progress. While I’m convinced that we have no limit on talent today, we lack effort and perhaps don’t develop or encourage the talent to develop appropriately.
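The role of stability in that statement can be seen on the linear advection equation. The sketch below, with assumed function names and parameters, applies two consistent schemes to a square wave on a periodic grid: first-order upwind, which is stable for CFL numbers up to one, and forward-time centered-space (FTCS), which is unstable for any fixed CFL number. Both approximate the same equation, but only the stable one stays bounded, so only it has any chance of converging.

```python
def peak_amplitude(scheme, n=50, cfl=0.5, steps=200):
    """Advance u_t + u_x = 0 on a periodic grid and return max |u| at the end.

    scheme is "upwind" (consistent and stable for 0 <= cfl <= 1) or
    "ftcs" (consistent but unstable). All defaults are illustrative choices.
    """
    h = 1.0 / n
    u = [1.0 if 0.25 <= (i + 0.5) * h < 0.75 else 0.0 for i in range(n)]
    for _ in range(steps):
        if scheme == "upwind":
            # One-sided difference taken from the direction the wave comes from
            u = [u[i] - cfl * (u[i] - u[i - 1]) for i in range(n)]
        elif scheme == "ftcs":
            # Centered difference with forward Euler: every Fourier mode is amplified
            u = [u[i] - 0.5 * cfl * (u[(i + 1) % n] - u[i - 1]) for i in range(n)]
        else:
            raise ValueError(f"unknown scheme: {scheme}")
    return max(abs(v) for v in u)


if __name__ == "__main__":
    print("upwind peak:", peak_amplitude("upwind"))
    print("ftcs peak:  ", peak_amplitude("ftcs"))
```

Refining the mesh does not rescue the FTCS result; the growth only gets worse with more time steps, which is why consistency alone says nothing about convergence.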

Beyond the issues with hardware emphasis, today’s focus on software is almost equally harmful to progress. Our programs are working steadfastly on maintaining large volumes of source code full of the ideas of the past. Instead of building on the theory, methods, algorithms and ideas of the past, we are simply worshiping them. This is the construction of a false ideology. We would do far greater homage to the work of the past if we were building on that work. The theory is not done by a long shot. Our current attitudes toward high performance computing are a travesty, and are embodied in a national program that makes the situation worse only to serve the interests of the willfully naive. We are undermining the very foundation upon which the utility of computing is built. We are going to end up wasting a lot of money and getting very little value for it.

On Saturday I participated in the March for Science in downtown Albuquerque, one of many marches across the world. This was advertised as a non-partisan event, but to anyone there it was clearly and completely partisan and biased. Two things united the people at the march: a philosophy of progressivism and liberalism, and opposition to conservatism and Donald Trump. The election of a wealthy paragon of vulgarity and ignorance has done wonders for uniting the left wing of politics. Of course, the left wing in the United States is really a moderate wing, made to seem liberal by the extreme views of the right. The left wing is among the greater proponents of science as an engine of knowledge and progress. The reason for this dichotomy is the right wing’s embrace of ignorance, fear and bigotry as its electoral tools. The right is really the party of money and the rich, with fear, bigotry and ignorance wielded as tools to “inspire” enough of the people to vote against their best (long-term) interests. Part of this embrace is a logical opposition to virtually every principle science holds dear.

The premise that a march for science should be non-partisan is utterly wrong on the face of it; science is and has always been a completely political thing. The reasoning for this is simple and persuasive. Politics is the way human beings settle their affairs, assign priorities and make decisions. Politics is an essential human endeavor. Science is equally human in its composition, being a structured vehicle for societal curiosity leading to the creation of understanding and knowledge. When the political dynamic is arrayed in the manner we see today, science is absolutely and utterly political. We have two opposing views of the future: one consistent with science, favoring knowledge and progress, and the other inconsistent with science, favoring fear and ignorance. In such an environment science is completely partisan and political. To expect things to be different is foolish and naïve.

One of the key things to understand is that science has always been a political thing, although the contrast has been turned up in recent years. The thing driving the political context is the rightward movement of the Republican Party, which has led to its embrace of extreme views including religiosity, ignorance and bigotry. Of course, these extreme views are not really the core of the GOP’s soul; money is. But the cult of ignorance and anti-science is useful in propelling its political interests. The Republican Party has embraced extremism in a virulent form because it pushes its supporters to unthinking devotion and obedience. They will support their party without regard for their own best interests. The Republican voter base hurts its own economic standing in favor of policies that empower its hatreds and bigotry while calming its fear. All forms of fact and truth have become utterly unimportant unless they support their world-view. The upshot is the rule of a political class hell-bent on establishing a ruling class in the United States composed of the wealthy. Most of the people voting for Republican candidates are simply duped by their support of extreme fear, hate and bigotry. The Democratic Party is only marginally better since it has been seduced by the same money, leaving voters with no one to work for them. The rejection of science by the right will ultimately be the undoing of the Nation, as other nations will eventually usurp the United States militarily and economically.

values of progress, love and knowledge are viewed as weakness. In this lens it is no wonder that science is rejected.

id this problem is kill the knowledge before it is produced. We can find example after example of science being silenced because it is likely to produce results that do not match their view of the world. Among the key engines of the ignorance of conservatism is its alliance with extreme religious views. Historically, religion and science have frequently been at odds because faith and truth are often incompatible. This isn’t necessarily true of all religious faith, but rather of that stemming from a fundamentalist approach, which is usually grounded in old and antiquated notions (i.e., classically conservative and opposing anything looking like progress). Fervent religious belief cannot deal with truths that do not align with its dictums. The best way to avoid this problem is to get rid of the truth. When the government is controlled by extremists this translates to reducing and controlling

not support deeper research that forms the foundation allowing us to develop technology. As a result, our ability to be the best at killing people is at risk in the long run. Eventually the foundation of science used to create all our weapons will run out, and we will no longer be the top dogs. The basic research used for weapons work today is largely a relic of the 1960’s and 1970’s. The wholesale diminishment in societal support for research during the 1980’s and onward will start to hurt us more obviously. In addition, we have poisoned the research environment in a fairly bipartisan way, leading to a huge drop in the effectiveness and efficiency of the fewer research dollars spent.