Then the shit hit the fan.

― John Kenneth Galbraith

I’m an unrelenting progressive. This holds true for politics, work and science, where I always see a way for things to get better. I’m very uncomfortable with just sitting back and appreciating how things are. Many whom I encounter see this as a degree of pessimism, since I see the shortcomings in almost everything. I keenly disagree with this assessment. I see my point of view as optimism. It is optimism because I know things can always get better, always improve and constantly achieve a better end state. The people I rub the wrong way are the proponents of the status quo, who see the current state of affairs as just fine. The difference in worldview is really between my deep-reaching desire for a better world and a belief that the world is good enough already. Often the greatest enemy of getting to a better world is a culture that is a key element of the world as it exists. Change comes whether culture wants it or not, and problems arise when the prevailing culture is unfit for these changes. Overcoming culture is the hardest part of change, and even when the culture is utterly toxic, it opposes changes that would make things better.

I’ve spent a good bit of time recently contemplating the unremitting toxicity of our culture. We have suffered through a monumental presidential election with two abysmal candidates, both despised by a majority of the electorate. The winner is an abomination of a human being clearly unfit for a public office worthy of respect. He is totally unqualified for the position he will hold, and will likely be the most corrupt person to ever hold the job. The loser was thoroughly qualified, potentially corrupt too, and would have had a failed presidency because of the toxic political culture in general. We have reaped this entire legacy by allowing the public and political institutions to wither for decades. It is arguable that this erosion is the willful effort of those charged by the public with governing us. Among the institutions that are under siege and damaged in our current era are the research institutions where I work. These institutions have cultures from a bygone era, completely unfit for the modern world, yet unmoving and unevolved in the face of new challenges.

This sentiment of dysfunction applies not only to the obviously toxic public culture, but to the workplace culture too. In the workplace the toxicity is often cloaked in a tidy professional wrapper, and seems wondrously nice, decent and completely OK. Often this professional wrapper shows itself as horribly passive-aggressive behavior that the organization basically empowers and endorses. The problem is not the behavior of the people in the culture toward each other, but the nature of the attitude toward work. Quite often we have this layered approach that puts a well-behaved, friendly face on the complete disempowerment of employees. Increasingly the people working in the trenches are merely cannon fodder, and everything important to work happens with managers. Where I work the toxicity of the workplace and politics collide to produce a double whammy. We are under siege from a political climate that undermines institutions and a business-management culture that undermines the power of the worker.

Great leaders create great cultures regardless of the dominant culture in the organization.

― Bob Anderson

I’m reminded of the quote “culture eats strategy” (attributed to Peter Drucker) and wonder whether or not anything can be done to cure our problems without first addressing the toxicity of the underlying culture. I’ll hit upon a couple examples of the toxic cultures in the workplace and society in general. Both of these stand in opposition to a life well led. No amount of concrete strategy and clarity of thought can allow progress when the culture opposes it.

I am embedded in a horribly toxic workplace culture, which reflects a deeply toxic broader public culture. Our culture at work is polite and reserved, to be sure, but toxic to all the principles our managers promote. Recently a high-level manager espoused a set of principles to support: diversity & inclusion, excellence, leadership, and partnership & collaboration. None of these principles is actually seen in reality, and everything about how our culture operates opposes them. Truly leading and standing for the values espoused with such eloquence, by identifying and removing the barriers to their actual reality, would be a welcome remedy to the normal cynical response. Instead the reality is completely ignored and the fantasy of living to such values is promoted. It is not clear whether the manager knows the promoted values are fiction, or is so disconnected from reality as to believe the fiction. Either situation is utterly damning. The end result is the same: no actions to remove the toxic culture are ever taken, and the culture’s role in undermining values is not acknowledged.

In a starkly parallel sense we have an immensely toxic culture in our society today. The two toxic cultures certainly have connections, and the societal culture is far more destructive. We have all witnessed the most monumental political event of our lives resulting directly from the toxic culture playing out. The election of a thoroughly toxic human being as President is a great exemplar of the degree of dysfunction today. Our toxic culture is spilling over into societal decisions that may have grave implications for our combined future. One outcome of the toxic societal choice could be a sequence of events that will induce a crisis of monumental proportions. Such crises can be useful in fixing problems and destroying the toxic culture, and allowing its replacement by something better. Unfortunately such crises are painful, destructive and expensive. People are killed. Lives are ruined and pain is inflicted broadly. Perhaps this is the cost we must bear in the wake of allowing a toxic culture to fester and grow in our midst.

Reform is usually possible only once a sense of crisis takes hold…. In fact, crises are such valuable opportunities that a wise leader often prolongs a sense of emergency on purpose.

― Charles Duhigg

Cultures are usually developed, defined and encoded through the resolution of crisis. In these crises old cultures fade, replaced by a new culture that succeeds in assisting the resolution of the crisis. If the resolution of the crisis is viewed as a success, the culture becomes a monument to that success. People wishing to succeed adopt the cultural norms and reinforce the culture’s hold. Over time such cultural touchstones become aged and incapable of dealing with modern reality. We see this problem in spades today, both in the workplace and society-wide. The older culture in place cannot deal effectively with the realities of today. Changes in economics, technology and populations are creating a set of challenges that these older cultures are unfit to manage. Seemingly we are being plunged headlong toward a crisis necessary to resolve the cultural inadequacies. The problem is that the crisis will be an immensely painful and horrible circumstance. We may simply have no choice but to go through it, and hope we have the wisdom and strength to get to the other side of the abyss.

Crisis is Good. Crisis is a Messenger

― Bryant McGill

A crisis is a terrible thing to waste.

― Paul Romer

What can be done about undoing these toxic cultures without crisis? The usual remedy for a toxic culture is a crisis that demands effective action. This is an unpleasant prospect, whether for an organization or a country, but it is the course we find ourselves on. One of the biggest problems with the toxic culture issue is its self-defeating nature. The toxic culture defends itself. Our politicians and managers are creatures whose success has been predicated on the toxic culture. These people are almost completely incapable of making the necessary decisions for avoiding the sorts of disasters that characterize a crisis. The toxic culture and those who succeed in it are unfit to resolve crises successfully. Our leaders are the most successful people in the toxic culture and act to defend it in the face of overwhelming evidence that the culture is toxic. As such they do nothing to avoid the crisis even when it is obvious, and so make the eventual disaster inevitable.

Can we avoid this? I hope so, but I seriously doubt it. I fear that events will eventually unfold that will have us longing for the crisis to rescue us from the slow-motion zombie existence today’s public-workplace cultures inflict on all of us.

The Chinese use two brush strokes to write the word ‘crisis.’ One brush stroke stands for danger; the other for opportunity. In a crisis, be aware of the danger–but recognize the opportunity.

― John F. Kennedy

eries of basic scientific principles combined with overwhelming need in the socio-political worlds. At the end of the 19th century and beginning of the 20th century a massive revolution occurred in physics fundamentally changing our knowledge of the universe. The needs of global conflict pushed us to harness this knowledge to unleash the power of the atom. Ultimately the technology of atomic energy became a transformative political force probably stabilizing the world against massive conflict. More recently, computer technology has seen a similar set of events play out in a transformative way first scientifically, then in engineering and finally in profound societal impact we are just beginning to see unfold.

If we pull our focus into the ability of computational power to transform science, we can easily see the failure to recognize these elements in current ideas. We remain utterly tied to the pursuit of Moore’s law even as it lies in the morgue. Rather than examine the needs of progress, we remain tied to the route taken in the past. The focus of work has become ever more computer (machine) directed, and other more important and beneficial activities have withered from lack of attention. In the past I’ve pointed out the greater importance of modeling, methods, and algorithms in comparison to machines. Today we can look at another angle on this, the time it takes to produce useful computational results, or workflow.

opportunity. There is a common lack of appreciation for actual utility in research that arises from the naïve and simplistic view of how computational science is done. This view arises from the marketing of high performance computing work as basically only requiring a single magical calculation where science almost erupts spontaneously. Of course this never happens and the lack of scientific process in computational science is a pox on the field.

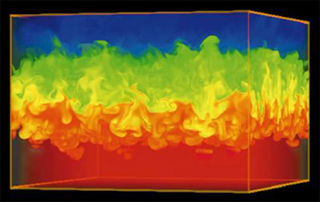

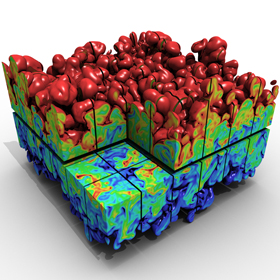

be the simplest and clearest example of the overwhelmingly transparent superficiality of current research. Visualization is useful for marketing science, but produces stunningly little actual science or engineering. We are more interested in funding tools for marketing work than actually doing work. Tools for extracting useful engineering or scientific data from calculation usually languish. They have little “sex appeal” compared to flashy visualization, but carry all the impact on the results that matter. If one is really serious about V&V, all of these issues are compounded dramatically. For doing hard-nosed V&V, visualization has almost no value whatsoever.

In the end all of this is evidence that current high performance computing programs have little interest in actual science or engineering. They are hardware focused because the people leading them like hardware and neither care about nor understand science and engineering. The people running the show are little more than hardware-obsessed “fan boys” who care little about science. They succeed because of a track record of selling hardware-focused programs, not because it is the right thing to do. The role of computation in science should be central to our endeavor instead of a sideshow that receives little attention and less funding. Real leadership would provide a strong focus on completing important work that could impact the bottom line: doing better science with computational tools.

Too often in discourse about numerical methods, one gets the impression that dissipation is something to be avoided at all costs. Calculations are constantly under attack for being too dissipative. Rarely does one ever hear about calculations that are not dissipative enough. A reason for this is that too little dissipation tends to cause outright instability, whereas too much dissipation is associated with low-order methods. In between too little dissipation and instability is a wealth of unphysical solutions, oscillations and terrible computational results. These results may be all too common because of people’s standard disposition toward dissipation. The problem is that too few among the computational cognoscenti recognize that too little dissipation is as poisonous to results as too much (maybe more).
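A minimal sketch of this point, using two textbook schemes that are my choice rather than anything named in the post: first-order upwind (heavily dissipative) and Lax-Wendroff (second order, far less dissipative), both advecting a square pulse on a periodic grid. The grid size, CFL number and step count are arbitrary assumptions. The low-dissipation scheme remains stable yet overshoots and undershoots the pulse, which is the kind of unphysical oscillation described above; the upwind result stays within the original bounds but smears the pulse.

```python
def advect(u0, cfl, steps, scheme):
    """Advance u_t + a*u_x = 0 (a > 0) on a periodic grid; cfl = a*dt/dx."""
    u = list(u0)
    n = len(u)
    for _ in range(steps):
        new = [0.0] * n
        for i in range(n):
            # u[i - 1] wraps around at i = 0, giving periodic boundaries.
            um, uc, up = u[i - 1], u[i], u[(i + 1) % n]
            if scheme == "upwind":
                # First order: strong built-in numerical dissipation.
                new[i] = uc - cfl * (uc - um)
            elif scheme == "lax_wendroff":
                # Second order: much less dissipation, dispersive near jumps.
                new[i] = (uc - 0.5 * cfl * (up - um)
                          + 0.5 * cfl ** 2 * (up - 2.0 * uc + um))
            else:
                raise ValueError(f"unknown scheme: {scheme}")
        u = new
    return u


# Illustrative setup (assumed values): square pulse of height 1 on 100 cells.
n_cells = 100
u0 = [1.0 if 25 <= i < 50 else 0.0 for i in range(n_cells)]

upwind = advect(u0, cfl=0.5, steps=20, scheme="upwind")
lax_wendroff = advect(u0, cfl=0.5, steps=20, scheme="lax_wendroff")

for name, u in (("upwind", upwind), ("lax_wendroff", lax_wendroff)):
    print(f"{name:>12}: min = {min(u):+.4f}, max = {max(u):+.4f}")
```

Both schemes conserve the total of the solution, so a conservation check alone would not flag the oscillatory result; looking at the bounds does.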

a grave error in thinking about the physical laws of direct interest, as the solution of conservation laws does not satisfy this limit when flows are inviscid. Instead the solutions of interest (i.e., weak solutions with discontinuities) in the inviscid limit approach a solution where the entropy production is proportional to variation in the large scale solution cubed,
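The cubic scaling can be checked by hand in the simplest setting. The sketch below assumes the inviscid Burgers equation, u_t + (u^2/2)_x = 0, with entropy u^2/2 and entropy flux u^3/3; that model is my choice for illustration and not necessarily the system the author had in mind. For a shock joining states u_L > u_R, the Rankine-Hugoniot speed and the entropy balance give a dissipation rate of (u_L - u_R)^3 / 12, with no viscosity coefficient appearing anywhere.

```python
def burgers_shock_dissipation(u_left, u_right):
    """Entropy (u^2/2) destroyed per unit time by a single Burgers shock."""
    speed = 0.5 * (u_left + u_right)            # Rankine-Hugoniot shock speed
    entropy_jump = 0.5 * (u_left ** 2 - u_right ** 2)
    flux_jump = (u_left ** 3 - u_right ** 3) / 3.0
    # Net entropy flux into the shock, minus the rate at which entropy
    # accumulates as the shock moves; the remainder is dissipated.
    return flux_jump - speed * entropy_jump


for jump in (0.5, 1.0, 2.0):
    rate = burgers_shock_dissipation(jump, 0.0)
    print(f"jump = {jump:.1f}: dissipation = {rate:.6f}, "
          f"jump^3/12 = {jump ** 3 / 12:.6f}")
```

Doubling the jump multiplies the dissipation by eight, and adding a constant to both states leaves it unchanged, since only the jump enters.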

s in the flow, the characteristic speed is a function of the solution, which induces a set of entropy considerations. The simplest and most elegant condition is due to Lax, which says that the characteristics dictate that information flows into a shock. In a Lagrangian frame of reference for a right running shock this would look like,

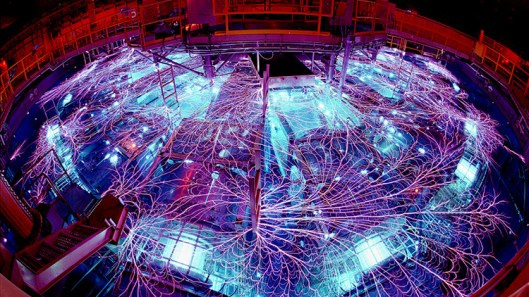

When we hear about supercomputing, the media coverage and press releases are always talking about massive calculations. Bigger is always better, with as many zeros as possible and some sort of exotic name for the rate of computation: mega, tera, peta, exa, zetta,… Up and to the right! The implicit proposition is that the bigger the calculation, the better the science. This is quite simply complete and utter bullshit. These big calculations providing the media footprint for supercomputing and winning prizes are simply stunts, or more generously technology demonstrations, and not actual science. Scientific computation is a much more involved and thoughtful activity involving lots of different calculations, many at a vastly smaller scale. Rarely, if ever, do the massive calculations come as a package including the sorts of evidence science is based upon. Real science has error analysis and uncertainty estimates, and in this sense the massive calculations do a disservice to computational science by skewing the picture of what science using computers should look like.

masquerading as a scientific conference. It is simply another in a phalanx of echo chambers we seem to form with increasing regularity across every sector of society. I’m sure the cheerleaders for supercomputing will be crowing about the transformative power of these computers and the boon for science they represent. There will be celebrations of enormous calculations and pronouncements about their scientific value. There is a certain lack of political correctness to the truth about all this; it is mostly pure bullshit.

provided an enormous gain in the power of computers for 50 years and enabled much of the transformative power of computing technology. The key point is that computers and software are just tools; they are incredibly useful tools, but tools nonetheless. Tools allow a human being to extend their own biological capabilities in a myriad of ways. Computers are marvelous at replicating and automating calculations and thought operations at speeds utterly impossible for humans. Everything useful done with these tools is utterly dependent on human beings to devise. My key critique about this approach to computing is the hollowing out of the investigation into devising better ways to use computers and focusing myopically on enhancing the speed of computation.

devised to unveil difficult-to-see phenomena. We then produce explanations or theories to describe what we see, and allow us to predict what we haven’t seen yet. The degree of agreement between the theory and the observations confirms our degree of understanding. There is always a gap between our theory and our observations, and each is imperfect in its own way. Observations are intrinsically prone to a variety of errors, and theory is always imperfect. The solutions to theoretical models are also imperfect, especially when solved via computation. Understanding these imperfections and the nature of the comparisons between theory and observation is essential to a comprehension of the state of our science.

As I’ve stated before, the scientific method applied to scientific computing is embedded in the practice of verification and validation. Simply stated, a single massive calculation cannot be verified or validated (it could be, but not with current computational techniques and the development of such capability is a worthy research endeavor). The uncertainties in the solution and the model cannot be unveiled in a single calculation, and the comparison with observations cannot be put into a quantitative context. The proponents of our current approach to computing want you to believe that massive calculations have intrinsic scientific value. Why? Because they are so big, they have to be the truth. The problem with this thinking is that any single calculation does not contain steps necessary for determining the quality of the calculation, or putting any model comparison in context.

s. These computers are generally the focus of all the attention and cost the most money. The dirty secret is that they are almost completely useless for science and engineering. They are technology demonstrations and little else. They do almost nothing of value for the myriad of programs purporting to use computations to produce results. All of the utility to actual science and engineering comes from the homely cousins of these supercomputers, the capacity computers. These computers are the workhorses of science and engineering because they are set up to do something useful. The capability computers are just show ponies, and perfect exemplars of the modern bullshit-based science economy. I’m not OK with this; I’m here to do science and engineering. Are our so-called leaders OK with the focus of attention (and bulk of funding) being non-scientific, media-based, press release generators?

There are certainly cases where exascale computing is enabling for model solutions with small enough error to make models useful. This case is rarely made or justified in any massive calculation; rather, it is asserted by authority.

culations. The most common practice is to assess the modeling uncertainty via some sort of sampling approach. This requires many calculations because of the high-dimensional nature of the problem. Sampling converges very slowly, with the error in any estimated mean being proportional to the standard deviation of the solution divided by the square root of the number of samples.
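The inverse-square-root scaling can be seen in a few lines of Python. This is a minimal sketch under loud assumptions, not taken from any real uncertainty-quantification code: the "model output" is just a uniform random number standing in for an expensive simulation, and the function name `mean_error` and its parameters are hypothetical, chosen for illustration.

```python
import random
import statistics


def mean_error(n_samples, n_trials=200, seed=0):
    """RMS error of the sample mean, measured over repeated trials.

    Assumption: each 'calculation' is a draw from uniform(0, 1), a stand-in
    for one run of an expensive model with uncertain inputs.
    """
    rng = random.Random(seed)
    true_mean = 0.5  # exact mean of uniform(0, 1)
    squared_errors = []
    for _ in range(n_trials):
        samples = [rng.random() for _ in range(n_samples)]
        squared_errors.append((statistics.fmean(samples) - true_mean) ** 2)
    return (sum(squared_errors) / n_trials) ** 0.5


if __name__ == "__main__":
    for n in (100, 400, 1600):
        # Theory: error ~ sigma / sqrt(n), with sigma = sqrt(1/12) here.
        predicted = (1.0 / 12.0) ** 0.5 / n ** 0.5
        print(n, mean_error(n), predicted)
```

Cutting the error of the mean by a factor of four takes roughly sixteen times as many samples, which is why sampling-based uncertainty estimates demand many calculations rather than one large one.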

The uncertainty structure can be approached at a high level, but to truly get to the bottom of the issue requires some technical depth. For example, numerical error has many potential sources: discretization error (space, time, energy, … whatever we approximate in), linear algebra error, nonlinear solver error, round-off error, and limited solution regularity and smoothness. Many classes of problems are not well posed and admit multiple physically valid solutions. In this case the whole concept of convergence under mesh refinement needs overhauling. Recently the concept of measure-valued (statistical) solutions has entered the fray. These are taxing on computer resources in the same manner as sampling approaches to uncertainty. Each of these sources requires a specific and focused approach to its estimation, along with the requisite fidelity.
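For the well-posed case, estimating discretization error means running the same problem at several resolutions, which is exactly what a single massive calculation cannot provide. The sketch below is illustrative only: it uses the trapezoid rule on a simple integral as a stand-in for a real solver, and the helper names (`trapezoid`, `observed_order`, `richardson_error_estimate`) are assumptions introduced here. It computes the observed order of convergence from three resolutions and a Richardson-style estimate of the error left in the finest result.

```python
import math


def trapezoid(f, a, b, n):
    """Composite trapezoid rule with n equal intervals (the toy 'solver')."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h


def observed_order(coarse, medium, fine, ratio=2.0):
    """Observed convergence order from three results on grids refined by `ratio`."""
    return math.log(abs(coarse - medium) / abs(medium - fine)) / math.log(ratio)


def richardson_error_estimate(medium, fine, order, ratio=2.0):
    """Estimated error remaining in the fine result, assuming asymptotic convergence."""
    return abs(fine - medium) / (ratio ** order - 1.0)


if __name__ == "__main__":
    # Integral of sin(x) on [0, pi]; the exact value is 2.
    coarse, medium, fine = (trapezoid(math.sin, 0.0, math.pi, n) for n in (16, 32, 64))
    p = observed_order(coarse, medium, fine)
    estimate = richardson_error_estimate(medium, fine, p)
    print("observed order:", p)
    print("estimated error:", estimate, "actual error:", abs(2.0 - fine))
```

The estimate uses only the computed results, never the exact answer, so the same procedure applies when the exact answer is unknown. It does assume a smooth solution in the asymptotic range; for the ill-posed problems mentioned above it does not carry over as written.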

The bottom line is that science and engineering are built on evidence. To do things correctly you need to operate on an evidentiary basis. More often than not, high performance computing avoids this key scientific approach. Instead we see the basic decision-making operating via assumption. The assumption is that a bigger, more expensive calculation is always better and always serves the scientific interest. This view is as common as it is naïve. In many, perhaps most, cases science is best served by many smaller calculations. This hinges upon the overall structure of uncertainty in the simulations and whether it is dominated by approximation error, modeling form or lack of knowledge, and even the observational quality available. These matters are subtle and complex, and we all know that today neither subtle nor complex sells.

is appalling trend, but the same dynamic is acutely felt there too. The elements undermining facts and reality in our public life are infesting my work. Many institutions are failing society and contributing to the slow-motion disaster we have seen unfolding. We need to face this issue head-on and rebuild our important institutions and restore our functioning society, democracy and governance.

broader public sphere, the same thing has happened in the conduct of science. In many ways the undermining of expertise in science is even worse and more corrosive. Increasingly, there is no tolerance or space for the intrusion of expertise into the conduct of scientific or engineering work. The way this intolerance manifests itself is subtle and poisonous. Expertise is tolerated and welcomed as long as it is confirmatory and positive. Expertise is not allowed to offer strong criticism or the slightest rebuke, no matter how shoddy the work. If an expert does offer anything that seems critical or negative, they can expect to be dismissed and never invited back to provide feedback again. Rather than being welcomed for their service and attention, they are derided as troublemakers and malcontents. As a result, we see in every corner of the scientific and technical World a steady intrusion of mediocrity and outright bullshit into our discourse.

to review technical work for a large important project. The expected outcome was a “rubber stamp” that said the work was excellent, and offered no serious objections. Basically the management wanted me to sign off on the work as being awesome. Instead, I found a number of profound weaknesses in the work, and pointed these out along with some suggested corrective actions. These observations were dismissed and never addressed by the team conducting the work. It became perfectly clear that no such critical feedback was welcome and I wouldn’t be invited back. Worse yet, I was punished for my trouble. I was sent a very clear and unequivocal message, “don’t ever be critical of our work.”

increasingly meaningless nature of any review, and the hollowing out of expertise’s seal of approval. In the process experts and expertise become covered in the bullshit they peddle and become diminished in the end.

utterly incompetent bullshit artist president. Donald Trump was completely unfit to hold office, but he is a consummate con man and bullshit artist. In a sense he is the emblem of the age and the perfect exemplar of our addiction to bullshit over substance.

make, progress is sacrificed. We bury immediate conflict for long-term decline and plant the seeds for far deeper, more widespread and more damaging conflict. Such horrible conflict may be unfolding right in front of us in the nature of the political process. By finding our problems and being critical we identify where progress can be made, where work can be done to make the World better. By bullshitting our way through things, the problems persist and fester and progress is sacrificed.

implicitly aid and abet the forces in society undermining progress toward a better future. The result of this acceptance of bullshit can be seen in the reduced production of innovation and breakthrough work, but most acutely in the decay of these institutions.

In the past quarter century the role of software in science has grown hugely in importance. I work in a computer research organization that employs many applied mathematicians. One would think that we have a little maelstrom of mathematical thought. In fact, very little actual mathematics takes place, with most of them writing software as their prime activity. A great deal of emphasis is placed on software as something to be preserved or invested in. This dynamic pushes many other forms of work onto the back burner, such as mathematics (or modeling or algorithmic-methods investigation). The proper question to think about is whether the emphasis on software, along with the collateral decrease in focus on mathematics or physical modeling, is a benefit to the conduct of science.

The simplest answer to the question at hand is that code is a set of instructions that a computer can understand, a recipe provided by humans for conducting some calculations. These instructions could integrate a function or a differential equation, sort some data, filter an image, or do millions of other things. In every case the instructions are devised by humans to do something, and carried out by a computer with greater automation and speed than humans can possibly manage. Without the guidance of humans, the computer is utterly useless, but with human guidance it is a transformative tool. We see modern society completely reshaped by the computer. Too often the focus of humans is on the tool and not the thing that gives it power: skillful human instructions devised by creative intellects. Dangerously, science is falling into this trap, and the misunderstanding of the true dynamic may have disastrous consequences for the state of progress. We must keep in mind the nature of computing and man’s key role in its utility.

Nothing is remotely wrong with creating working software to demonstrate a mathematical concept. Often mathematics is empowered by the tangible demonstration of the utility of the ideas expressed in code. The problem occurs when the code becomes the central activity and mathematics is subdued in priority. Increasingly, the essential aspects of mathematics are absent from the demands of the research, having been replaced by software. This software is viewed as an investment that must be transferred along to new generations of computers. The issue is that the porting of libraries of mathematical code has become the raison d’être for research. This porting has swallowed innovation in mathematical ideas whole, and the balance in research is desperately lacking.

impoverishing our future. In addition we are failing to take advantage of the skills, talents and imagination of the current generation of scientists. We are creating a deficit of possibility that will harm our future in ways we can scarcely imagine. The guilt lies in the failure of our leaders to have sufficient faith in the power of human thought and innovation to continue to march forward into the future in the manner we have in the

which can be a wonderful characteristic, but I know what Los Alamos used to mean, and it causes me a great deal of personal pain to see the magnitude of the decline and damage we have done to it. The changes at Los Alamos have been done in the name of compliance, to bring an unruly institution to heel and conform to imposed mediocrity.

culture that Karen and I so greatly benefited from. Organizational culture is a deep well to draw from. It shapes so much of what we see from different institutions. At Los Alamos it has formed the underlying resistance to the imposition of the modern compliance culture. On the other hand, my current institution is tailor-made for complete compliance, even subservience to the demands of our masters. When those masters have no interest in progress, quality, or productivity, the result is unremitting mediocrity. This is the core of the discussion: our masters’ prime directive is compliance, which bluntly and specifically means “don’t ever fuck up!” In this context Los Alamos is the king of the fuck-ups, and others simply keep their noses clean, thus succeeding in the eyes of the masters.

productivity is never a priority in the modern world. This is especially true once the institutions realized that they could bullshit their way through accomplishment without risking the core value of compliance. Thus doing anything real and difficult is detrimental because you can more easily BS your way to excellence and not run the risk of violating the demands of compliance. In large part compliance assures the most precious commodity in the modern research institution, funding. Lack of compliance is punished by lack of funding. Our chains are created out of money.

A large part of the compliance is lack of resistance to intellectually poor programs. There was once a time when the Labs helped craft the programs that fund them. With each passing year this dynamic breaks down, and the intellectual core of crafting well-defined programs to accomplish important National goals wanes. Why engage in the hard work of providing feedback when it threatens the flow of money? Increasingly the only sign of success is the aggregate dollar figure flowing into a given institution or organization. Any actual quality or accomplishment is merely coincidental. Why focus on excellence or quality when it is so much easier to simply generate a press release that looks good?

bad things ever happening. In the end the only way to do this is to stop all progress and make sure no one ever accomplishes anything substantial.

Over the past few decades there has been a lot of Sturm und Drang around the prospect that computation changed science in some fundamental way. The proposition was that computation formed a new way of conducting scientific work to complement theory and experiment/observation. In essence computation had become the third way for science. I don’t think this proposition stands the test of time, and it should be rejected. A more proper way to view computation is as a new tool that aids scientists. Traditional computational science is primarily a means of investigating theoretical models of the universe in ways that classical mathematics could not. Today this role is expanding to include augmentation of data acquisition, analysis, and exploration well beyond the capabilities of unaided humans. Computers make for better science, but recognizing that computation does not change science at all is important for making good decisions.

The key to my rejection of this premise about computation is a close examination of what science is. Science is a systematic endeavor to understand and organize knowledge of the universe in a testable framework. Standard computation is conducted in a systematic manner to study the solutions of theoretical equations, but the solutions always depend entirely on the theory. Computation also provides more general ways of testing theory and making predictions well beyond the approaches available prior to computation. Computation frees us of limitations in solving the equations comprising the theory, but changes nothing about the fundamental dynamic in play. The key point is that computation serves as an enhanced tool set for conducting science in an otherwise standard way.

Observations still require human ingenuity and innovation to be achieved. This can take the form of the mere inspiration to measure or observe a certain factor in the World. Another form is the development of devices that make new measurements possible. Here is a place where computation is playing a greater and greater role. In many cases computation allows the management of mountains of data that are unthinkably large by former standards. Another way of working with data, either complementary or completely different, is analysis. New methods are available to enhance diagnostics or see effects that were previously hidden or invisible. In essence, this is the ability to drag signal from noise and make the unseeable clear and crisp. All of these uses are profoundly important to science, but it is science that still operates as it did before. We just have better tools to apply to its conduct.

One of the big ways for computation to reflect the proper structure of science is verification and validation (V&V). In a nutshell V&V is the classical scientific method applied to computational modeling and simulation in a structured, disciplined manner. The high performance computing programs being rolled out today ignore verification and validation almost entirely. If it is present, it is an afterthought. Science is supposed to arrive via computation as if by magic. The deeper and more pernicious danger is the belief by many that modeling and simulation can produce data of equal (or even greater) validity than nature itself. This is not a recipe for progress, but rather a recipe for disaster. We are priming ourselves for believing some rather dangerous fictions.

The deepest issue with current programs pushing forward on the computing hardware is their balance. The practice of scientific computing requires the interaction and application of great swathes of scientific disciplines. Computing hardware is a small component in the overall scientific enterprise and among the aspects least responsible for its success. The single greatest element in the success of scientific computing is the nature of the models being solved. Nothing else we can focus on has anywhere close to this impact. To put this differently, if a model is incorrect, no amount of computer speed, mesh resolution or numerical accuracy can rescue the solution. This is the statement of how scientific theory applies to computation. Even if the model is unyieldingly correct, the method and approach to solving the model is the next largest aspect in terms of impact. The damning thing about exascale computing is the utter lack of emphasis on either of these activities. Moreover, without the application of V&V in a structured, rigorous and systematic manner, these shortcomings will remain unexposed.
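The claim that resolution cannot rescue an incorrect model can be made concrete with a toy problem. The following is a sketch under stated assumptions, not a real application: "nature" is taken to be exponential decay with rate `k_true`, the model uses a slightly wrong rate `k_model`, and the solver is forward Euler. The function names and the specific rates are hypothetical choices for illustration.

```python
import math


def euler_decay(k, n_steps, t_end=1.0):
    """Forward Euler solution of y' = -k * y, y(0) = 1, evaluated at t_end."""
    dt = t_end / n_steps
    y = 1.0
    for _ in range(n_steps):
        y += dt * (-k * y)
    return y


def error_budget(n_steps, k_true=1.0, k_model=1.1, t_end=1.0):
    """Return (numerical error, total error) for the mis-specified model.

    Numerical error: computed solution vs. the model's own exact solution.
    Total error: computed solution vs. the assumed 'truth' with rate k_true.
    """
    computed = euler_decay(k_model, n_steps, t_end)
    numerical_error = abs(computed - math.exp(-k_model * t_end))
    total_error = abs(computed - math.exp(-k_true * t_end))
    return numerical_error, total_error


if __name__ == "__main__":
    for n in (10, 100, 1000, 10000):
        numerical, total = error_budget(n)
        print(n, numerical, total)
```

As the step count grows, the numerical error shrinks steadily while the total error levels off near the gap between the two exact solutions. Only a comparison against observation, which is the validation half of V&V, reveals that remaining error.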

In summary, we are left to draw a couple of big conclusions: computation is not a new way to do science, but rather an enabling tool for doing standard science better. Getting the most out of computing requires a deep and balanced portfolio of scientific activities. The current drive for performance with computing hardware ignores the most important aspects of the portfolio, if science is indeed the objective. If we want to get the most science out of computation, a vigorous V&V program is one way to inject the scientific method into the work. V&V is the scientific method, and gaps in V&V reflect gaps in scientific credibility. Simply recognizing how scientific progress occurs and following that recipe can achieve a similar effect. The lack of scientific vitality in current computing programs is utterly damning.

My wife has a very distinct preference in late-night TV shows. First, the show cannot be on late-night TV; she is fast asleep by 9:30 most nights. Second, she is quite loyal. More than twenty years ago she was essentially forced to watch late-night TV while breastfeeding our newborn daughter. Conan O’Brien kept her laughing and smiling through many late-night feedings. He isn’t the best late-night host, but he is almost certainly the silliest. His shtick is simply stupid with a certain sophisticated spin. One of the dumb bits on his current show is “Why China is kicking our ass”. It features Americans doing all sorts of thoughtless and idiotic things on video, with the premise being that our stupidity is the root of any loss of American hegemony. As sad as this might inherently be, the principle is rather broadly applicable and generally right on the money. The loss of national preeminence is due more to sheer hubris, manifest overconfidence and sprawling incompetence on the part of Americans than to anything being done by our competitors.

High performance computing is no different. By our chosen set of metrics, we are losing to the Chinese rather badly, through a series of self-inflicted wounds rather than superior Chinese execution. We are basically handing the crown of international achievement to them because we have become so incredibly incompetent at intellectual endeavors. Today, I’m going to unveil how we have thoughtlessly and idiotically run our high performance computing programs in a manner that undermines our success. My key point is that stopping the self-inflicted damage is the first step toward success. One must take careful note that the measure of superiority is based on a benchmark that has no practical value. Having a metric of success with no practical value is a large part of the underlying problem.

Scientific computing has been a thing for about 70 years, having been born during World War II. Throughout that history there has been a constant push and pull among the capabilities of computers, software, models, mathematics, engineering, methods and physics. Experimental work has been essential to keep computations tethered to reality. An advance in one area would spur advances in another in a flywheel of progress. A faster computer would make problems that previously seemed impossible to solve suddenly tractable. Mathematical rigor might suddenly give people faith in a method that previously seemed ad hoc and unreliable. Physics might ask new questions counter to previous knowledge, or experiments would confirm or invalidate model applicability. The ability to express ideas in software allows algorithms and models to be used that may have been too complex for older software systems. Innovative engineering provides new applications that extend the scope and reach of computing to new areas of societal impact. Every single one of these elements is subdued in the present approach to HPC, which robs the ecosystem of vitality and power. We learned these lessons in the recent past, yet swiftly forgot them when composing this new program.

under siege. The assault on scientific competence is broad-based and pervasive, as expertise is viewed with suspicion rather than respect. Part of this problem is the lack of intellectual stewardship reflected in numerous empty, thoughtless programs. The second piece is the way we are managing science. Two easy things ingrained into the way we work lead to systematic underachievement: inappropriately applied project planning and intrusive micromanagement of the scientific process. The issue isn’t management per se, but its utterly inappropriate application and priorities that are orthogonal to technical achievement.

ve no big long-term goals as a nation beyond simple survival. It’s like we have forgotten to dream big and produce any sort of inspirational societal goals. Instead we create big soulless programs in the place of big goals. Exascale computing is a perfect example. It is a goal without a real connection to anything societally important and is crafted solely for the purpose of getting money. It is absolutely vacuous and anti-intellectual at its core, viewing supercomputing as a hardware-centered enterprise. Then it is being managed like everything else, with relentless short-term focus and failure avoidance. Unfortunately, even if it succeeds, we will continue our tumble into mediocrity.

ne of the cornerstones of the program. SBSS provided a backstop against financial catastrophe at the Labs and provided long-term funding stability. The HPC element in SBSS was the ASCI program (which became the ASC program as it matured). The original ASCI program was relentlessly hardware-focused with lots of computer science, along with activities to port older modeling and simulation codes to the new computers. This should seem very familiar to anyone looking at the new ECP program. The ASCI program is the model for the current exascale program. Within a few years it became clear that ASCI’s emphasis on hardware and computer science was inadequate to provide modeling and simulation support for SBSS with sufficient confidence. Important scientific elements were added to ASCI, including algorithm and method development, verification and validation, and physics model development, as well as stronger ties to experimental programs. These additions were absolutely essential for the success of the program. That being said, these elements are all subcritical in terms of support, but they are much better than nothing.

If one looks at the ECP program, its composition and emphasis look just like the original ASCI program without the changes made shortly into its life. It is clear that the lessons learned by ASCI were ignored or forgotten by the new ECP program. It’s a reasonable conclusion that the main lesson taken from the ASC program was how to get money by focusing on hardware. Two issues dominate the analysis of this connection:

ng. Simplicity and stripping away the complexities of reality were the order of the day. Today we are freed to a very large extent from the confines of analytical study by the capacity to approximate solutions to equations. We are free to study the universe as it actually is, and produce a deep study of reality. The analytical methods and ideas still have utility for gaining confidence in these numerical methods, but their limited grasp of reality should be recognized. Our ability to study reality should be celebrated and be the center of our focus. Our seeming devotion to the ideal simply distracts us and draws attention away from understanding the real world.

solutions. The ideal equations are supposed to represent the perfect, and in a sense the “hand of God” working in the cosmos. As such they represent the antithesis of moderni