There is nothing quite so useless, as doing with great efficiency, something that should not be done at all.

― Peter F. Drucker

This post is going to be a bit more personal than usual; I’m trying to get my head around why work is deeply unsatisfying, and how the current system seems to conspire to destroy all the awesome potential it should have. My job should have all the things we desire: meaning, empowerment and a quest for mastery. At some level we seem to be in an era that belittles all dreams and robs work of the meaning it should have, disempowers most, and undermines mastery of anything. Worse yet, mastery seems to invite outright suspicion, being regarded more as a threat than a resource. On the other hand, I freely admit that I’m lucky and have a good, well-paying, modestly empowering job compared to the average Joe or Jane. So many have it so much worse. The flipside of this point of view is that we need to improve work across the board; if the best jobs are this crappy, one can scarcely imagine how bad things are for normal or genuinely shitty jobs.

In my overall quest for quality and mastery I refuse to settle for this and it only makes my dilemma all the more confounding. As I state in the title, my job has almost everything going for it and it should be damn close to unambiguously awesome. In fact my job used to be awesome, and lots of forces beyond my control have worked hard to completely fuck that awesomeness up. Again getting to the confounding aspects of the situation, the forces that be seem to be absolutely hell bent on continuing to fuck things up, and turn awesome jobs into genuinely shitty ones. I’m sure the shitty jobs are almost unbearable. Nonetheless, I know that grading on a curve my job is still awesome, but I don’t settle for success being defined by being less shitty than other people. That’s just a recipe for things to get shittier! If I have these issues with my work, what the hell is the average person going through?

So before getting to all the things fucking everything up, let’s talk about why the job should be so incredibly fucking awesome. I get to be a scientist! I get to solve problems, and do math and work with incredible phenomena (some of which I’ve tattooed on my body). I get to invent things like new ways of solving problems. I get to learn and grow and develop new skills, hone old skills and work with a bunch of super smart people who love to share their wealth of knowledge. I get to write papers that other people read and build on, I get to read papers written by a bunch of people who are way smarter than me, and if I understand them I learn something. I get to speak at conferences (which can be in nice places to visit) and listen at them too on interesting topics, and get involved in deep debates over the boundaries of knowledge. I get to contribute to solving important problems for mankind, or my nation, or simply for the joy of solving them. I work with incredible technology that is literally at the very bleeding edge of what we know. I get to do all of this and provide a reasonably comfortable living for my loved ones.

If failure is not an option, then neither is success.

― Seth Godin

All of the above is true, and here we get to the crux of the problem. When I look at each day I spend at work almost nothing in that day supports any of this. In a very real sense all the things that are awesome about my job are side projects or activities that only exist in the “white space” of my job. The actual job duties that anyone actually gives a shit about don’t involve anything from the above list of awesomeness. Everything I focus on and drive toward is the opposite of awesome; it is pure mediocre drudgery, a slog that starts on Monday and ends on Friday, only to start all over again. In a very deep and real sense, the work has evolved into a state where all the awesome things about being a scientist are not supported at all, and every fucking thing done by society at large undermines it. As a result we are steadily and completely hollowing out value, meaning, and joy from the work of being a scientist. This hollowing is systematic, but serves no higher purpose that I can see other than to place a sense of safety and control over things.

The Cul-de-Sac ( French for “dead end” ) … is a situation where you work and work and work and nothing much changes

― Seth Godin

So the real question to answer is how did we get to this point? How did we create systems whose sole purpose seems to be robbing life of meaning and value? How are formerly great institutions being converted into giant steaming piles of shit? Why is work becoming such a universal shit show? Work with meaning and purpose should be something society values, both from the standpoint of pure productivity and out of respect for humanity. Instead we are turning away from making work meaningful, and making steady choices that destroy the meaning in work. The forces at play are a combination of fear, greed, and power. Each of these forces has a role to play in a widespread and deep destruction of a potentially better future. These forces provide short-term comfort, but long-term damage that ultimately leaves us poorer both materially and spiritually.

Men go to far greater lengths to avoid what they fear than to obtain what they desire.

― Dan Brown

Of these forces, fear is the most acute and widespread. Fear is harnessed by the rich and powerful to hold onto and grow their power, their stranglehold on society. Across society we see people looking at the world and saying “Oh shit! This is scary, make it stop!” The rich and powerful can harness this chorus of fear to hold onto and enhance their power. The fear comes from the unknown and from change, which drives people into attempting to control things, which also suits the needs of the rich and powerful. This control gives people a false sense of safety and security at the cost of empowerment and meaning. For those at the top of the food chain, control is what they want because it allows them to hold onto their largess. The fear is basically used to enslave the population and cause them to willingly surrender for promises of safety and security against a myriad of fears. In most cases we don’t fear the greatest thing threatening us: the forces that work steadfastly to rob our lives of meaning. At work, fear is the great enemy of all that is good, killing meaning, empowerment and mastery in one fell swoop.

Power does not corrupt. Fear corrupts… perhaps the fear of a loss of power.

― John Steinbeck

How does this manifest itself in my day-to-day work? A key mechanism in undermining meaning in work is the ever more intrusive micromanagement of the money running research. The control comes under the guise of accountability (who can argue with that, right?). The accountability leads to a systematic diminishment in achievement and has much more to do with a lack of societal trust (which embodies part of the fear mechanics). Instead of ensuring better results and money well spent, the whole dynamic creates a virtual straitjacket for everyone in the system that assures they actually create, learn and produce far less. We see research micromanaged and projectized in ways that are utterly incongruent with how science can be conducted. The lack of trust translates to lack of risk, and the lack of risk equates to lack of achievement (with empowerment and mastery sacrificed at the altar of accountability). This is only one aspect of how the control works to undermine work. There are so many more.

Our greatest fear should not be of failure but of succeeding at things in life that don’t really matter.

― Francis Chan

Part of these systematic control mechanisms at play is the growth of the management culture in all these institutions. Instead of valuing the top scientists and engineers who produce discovery, innovation and progress, we now value the management class above all else. The managers manage people, money and projects that have come to define everything. This is as true at the Labs as it is at universities, where the actual mission of both has been sacrificed to money and power. Neither the Labs nor Universities are producing what they were designed to create (weapons, students, knowledge). Instead they have become money-laundering operations whose primary service is the careers of managers. All one has to do is see who the headline grabbers are from any of these places; it’s the managers (who by and large show no leadership). These managers are measured in dollars and people, not any actual achievements. All of this is enabled by control, and control enables people to feel safe and in control. As long as reality doesn’t intrude we will go down this deep death spiral.

We have priorities and emphasis in our work and money that have nothing to do with the reason our Labs, Universities or even companies exist. We have useless training that serves absolutely no purpose other than to check a box off. The excellence or quality of the work done has no priority at all. We have gotten to the point where peer review is a complete sham, and any honest assessment of the quality of the work is met with hostility. We should all wrap our heads collectively around this maxim of the modern workplace: it can be far worse for your career to demand technical quality as part of what you do than to do shoddy work. We are heading headlong into a mode of operation where mediocrity is enshrined as a key organizational value to be defended against potential assaults by competence. All of this can be viewed as the ultimate victory of form over substance. If it looks good, it must be good. The result is that the appearances are managed, and anything of substance is rejected.

The result of the emphasis on everything else except the core mission of our organizations is the systematic devaluation of those missions, along with a requisite creeping incompetence and mediocrity. In the process the meaning and value of the work takes a fatal hit. Actually expressing a value system of quality and excellence is now seen as a threat and becomes a career-limiting perspective. A key aspect of the dynamic to recognize is the relative simplicity of running mediocre operations without any drive for excellence. It’s great work if you can get it! If your standards are complete shit, almost anything goes, and you avoid the need for conflict almost entirely. In fact the only source of conflict becomes the need to drive away any sense of excellence. Any hint of quality or excellence has the potential to overturn this entire operation and the sweet deal of running it. So any quality ideas are attacked and driven out as surely as the immune system attacks a virus. While this might be a tad hyperbolic, it’s not too far off at all, and it is the actual bull’s-eye for an ever-growing swath of our modern world.

The key value is money and its continued flow. The whole system runs on a business model of getting money regardless of what it entails doing or how it is done. Of course having no standards makes this so much easier; if you’ll do any shitty thing as long as they pay you for it, management is easier. With standards of quality this whole operation becomes self-replicating. In a very direct way the worst thing one can do is get hard work, so the system is wired to drive good work away. You’re actually better off doing shitty work held to shitty standards. Doing the right thing, or the thing right, is viewed as a direct threat to the flow of money and generates an attack. The prime directive is money to fund people and measure the success of the managers. Whether or not the money generates excellent meaningful work or focuses on something of value simply does not matter. It becomes a completely vicious cycle where money breeds more money, and more money can be bred by doing simple shoddy work than by asking hard questions and demanding correct answers. In this way we can see how mediocrity becomes the value that is tolerated and excellence is reviled. Excellence is a threat to power; mediocrity simply accepts being lorded over by the incompetent.

At some level it is impossible to disconnect what is happening in science from the broader cultural trends. Everything happening in the political climate today is part of the trends I see at work. The political climate is utterly and completely corrosive, and the work environment is the same thing. In the United States we have had 20 years of government that has been engineered not to function. This is to support the argument that government is bad and doesn’t work (and that it should be smaller). The fact is that it is engineered not to work by the proponents of this philosophy. The result is a literal self-fulfilling prophecy: government doesn’t work if you don’t try to make it work. If we actually put effort into making it work, and valued expertise and excellence, it would work just fine. We get shit because that’s what we ask for. If we demanded excellence and performance, and actually held people accountable for it, we might actually get it, but it would be hard, it would be demanding. The problem is that success would disprove the maxim that government is bad and doesn’t work.

One of my friends recently pointed out that the people managing and running the programs that fund the work at the Labs in Washington actually make less than our Postdocs at the Labs. The result is that we get what we pay for, incompetence, which grows more manifestly obvious with each passing year. If we want things to work we need to hire talented people and hold them to high standards, which means we need to pay them what they are worth.

We see a large body of people in society who are completely governed by fear above all else. The fear is driving people to make horrendous and destructive decisions politically. The fear is driving the workplace into the same set of horrendous and destructive decisions. It’s not clear whether we will turn away from this mass fear before things get even worse. I worry that both work and politics will be governed by these fears until they push us over the precipice to disaster. Put differently, the shit show we see in public through politics mirrors the private shit show in our workplaces. The shit is likely to get much worse before it gets better.

There are two basic motivating forces: fear and love. When we are afraid, we pull back from life. When we are in love, we open to all that life has to offer with passion, excitement, and acceptance. We need to learn to love ourselves first, in all our glory and our imperfections. If we cannot love ourselves, we cannot fully open to our ability to love others or our potential to create. Evolution and all hopes for a better world rest in the fearlessness and open-hearted vision of people who embrace life.

― John Lennon

Stability is essential for computation to succeed. Better stability principles can pave the way for greater computational success. We are in dire need of new, expanded concepts for stability that provide paths forward toward uncharted vistas of simulation.

… instability at Los Alamos during World War 2. This method is still the gold standard for analysis today in spite of rather profound limitations in applicability. In the early 1950’s Lax came up with the equivalence theorem (interestingly both Von Neumann and Lax worked with Robert Richtmyer, … methodology using Mathematica, …

Hyperbolic PDEs have always been at the leading edge of computation because they are important to applications and difficult, and this has attracted a lot of real, unambiguous genius to solve them. I’ve mentioned a cadre of geniuses who blazed the trails 60 to 70 years ago (Von Neumann, Lax, Richtmyer …

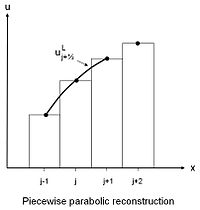

The desired outcome is to use the high-order solution as much as possible, but without inducing the dangerous oscillations. The key is to build upon the foundation of the very stable, but low-accuracy, dissipative method. The theory that can be utilized makes the dissipative structure of the solution a nonlinear relationship. This produces a test of the local structure of the solution, which tells us when it is safe to be high-order, and when the solution is so discontinuous that the low-order solution must be used. The result is a solution that is high-order as much as possible, and inherits the stability of the low-order solution, gaining purchase on its essential properties (asymptotic dissipation and entropy principles). These methods are so stable and powerful that one might utilize a completely unstable method as one of the options with very little negative consequence. This class of methods revolutionized computational fluid dynamics, and allowed relative confidence in the use of these methods to solve practical problems.
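To make the idea concrete, here is a minimal sketch and nothing more. It assumes the simplest possible setting (linear advection, u_t + a u_x = 0 with a > 0, on a periodic grid), takes first-order upwind as the stable low-order method and Lax-Wendroff as the high-order one, and uses the minmod limiter as the nonlinear "test of local structure". All of those choices, and every function name, are my assumptions for illustration; plenty of other limiters and method pairs fit the same description.

```python
def minmod_limiter(r):
    """Nonlinear switch: 0 selects the low-order flux, 1 the high-order flux.

    r is the ratio of the upwind difference to the local difference; r <= 0
    signals an extremum or discontinuity, where we fall back to upwind.
    """
    return max(0.0, min(1.0, r))


def unlimited(r):
    """Always take the high-order (Lax-Wendroff) flux; shown for comparison."""
    return 1.0


def advect_step(u, c, limiter=minmod_limiter):
    """One step of u_t + a u_x = 0 (a > 0), periodic grid, Courant number c = a*dt/dx."""
    n = len(u)
    flux = [0.0] * n  # flux[i]: flux through the face between cells i and i+1, divided by a
    for i in range(n):
        du_up = u[i] - u[i - 1]        # upwind difference (negative index wraps around)
        du_loc = u[(i + 1) % n] - u[i]  # difference across this face
        phi = limiter(du_up / du_loc) if du_loc != 0.0 else 0.0
        # upwind flux plus a limited anti-diffusive (Lax-Wendroff) correction
        flux[i] = u[i] + 0.5 * (1.0 - c) * phi * du_loc
    return [u[i] - c * (flux[i] - flux[i - 1]) for i in range(n)]


def total_variation(u):
    """Sum of jumps between neighbors on the periodic grid."""
    return sum(abs(u[i] - u[i - 1]) for i in range(len(u)))


if __name__ == "__main__":
    square = [1.0 if 10 <= i < 20 else 0.0 for i in range(40)]
    print("unlimited max after one step:", max(advect_step(square, 0.5, unlimited)))
    u = square
    for _ in range(30):
        u = advect_step(u, 0.5)
    print("limited min/max after 30 steps:", min(u), max(u))
```

On a square wave with c = 0.5, a single unlimited Lax-Wendroff step already overshoots to 1.125, while the limited version stays inside the original bounds and its total variation does not grow. That is the whole trick in miniature: the limiter looks at the local structure and decides how much high-order it is safe to use.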

… need is far less obvious than for hyperbolic PDEs. The truth is that we have made rather stunning progress in both areas and the breakthroughs have put forth the illusion that the methods today are good enough. We need to recognize that this is awesome for those who developed the status quo, but a very bad thing if there are other breakthroughs ripe for the taking. In my view we are at such a point and missing the opportunity to make the “good enough” into “great” or even “awesome”. Nonlinear stability is deeply associated with adaptivity and ultimately more optimal and appropriate approximations for the problem at hand.

Further extensions of nonlinear stability would be useful for parabolic PDEs. Generally parabolic equations are fantastically forgiving, so doing anything more complicated is not prized unless it produces better accuracy at the same time. Accuracy is eminently achievable because parabolic equations generate smooth solutions. Nonetheless these accurate solutions can still produce unphysical effects that violate other principles. Positivity is rarely threatened, although this would be a reasonable property to demand. It is more likely that the solutions will violate some sort of entropy inequality in a mild manner. Instead of producing something demonstrably unphysical, the solution would simply not have enough entropy generated to be physical. As such we can see solutions approaching the right solution, but in a sense from the wrong direction, which threatens to produce non-physically admissible solutions. One potential way to think about this might be in an application of heat conduction. One can examine whether or not the flow of heat matches the proper direction of heat flow locally, and if a high-order approximation does not, either choose another high-order approximation that does, or limit to a lower-order method with unambiguous satisfaction of the proper direction. The intrinsically unfatal impact of these flaws means they are not really addressed.
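The heat conduction idea can be sketched in a few lines, with the loud caveat that this is my own illustration and not an established method. It assumes point values of temperature on a uniform 1D grid, a standard fourth-order difference for the gradient at a cell face as the high-order option, and the plain two-point difference as the low-order fallback. The function name `face_heat_flux` and the specific test ("the gradient estimate must not have the opposite sign of the local temperature difference") are hypothetical choices.

```python
def face_heat_flux(T, i, dx, k=1.0):
    """Heat flux q = -k dT/dx through the face between points i and i+1.

    Valid for interior faces only (1 <= i <= len(T) - 3), since the
    high-order stencil reaches one point further on each side.
    """
    d_local = T[i + 1] - T[i]
    grad_low = d_local / dx
    # fourth-order estimate of dT/dx at the face midpoint (point-value data)
    grad_high = (27.0 * d_local - (T[i + 2] - T[i - 1])) / (24.0 * dx)
    # Heat must flow from hot to cold across this face, so the gradient
    # estimate may not oppose the sign of the local difference.
    if grad_high * d_local >= 0.0:
        grad = grad_high
    else:
        grad = grad_low  # unambiguous direction: limit to the low-order method
    return -k * grad
```

For smooth data the high-order estimate passes the check and is used as is. For something like `T = [0.0, 1.0, 2.0, 40.0]` at face `i = 1`, the wide stencil would send heat from the cold side to the hot side, so the sketch falls back to the two-point flux. Because parabolic problems are so forgiving, you would rarely notice the difference, which is exactly why nobody bothers.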

As surely as the sun rises in the East, peer review, that treasured and vital process for the health of science, is dying or dead. In many cases we still try to conduct meaningful peer review, but increasingly it is a mere animated zombie form of peer review. The zombie peer review of today is a shadow of the living soul of science it once was. Its death is merely a manifestation of bigger, broader societal trends such as those unraveling the political processes, or transforming our economies. We have allowed the quality of the work being done to become an assumption that we do not actively interrogate through a critical process (e.g., peer review). Instead, if we examine the emphasis in how money is spent in science and engineering, everything but the quality of the technical work is focused on and subjected to demands. There is an inherent assumption that the quality of the technical work is excellent, while the organizational or institutional focus lies elsewhere. With sufficient time this lack of emphasis is eroding the quality presumptions to the point where they no longer hold sway.

…rk, wellspring of ideas and vital communication mechanism. Whether in the service of publishing cutting-edge research, or providing quality checks for Laboratory research or engineering design, its primal function is the same; quality, defensibility and clarity are derived through its proper application. In each of its fashions, peer review has an irreplaceable core of community wisdom, culture and self-policing. With its demise, each of these is at risk of dying too. Rebuilding everything we are tearing down is going to be expensive, time-consuming and painful.

Doing peer review isn’t given much weight professionally, whether you’re a professor or working in a private or government lab. Peer review won’t give you tenure, or pay raises or other benefits; it is simply a moral act as part of the community. This character as an unrewarded moral act gets to the issue at the heart of things. Moral acts and “doing the right thing” are not valued today, nor are there definable norms of behavior that drive things. It simply takes the form of an unregulated professional tax, pro bono work. The way to fix this is to change the system to value and reward good peer review (and by the same token punish bad in some way). This is a positive side of modern technology, which would be good to see, as the demise of peer review is driven to some extent by negative aspects of modernity, as I will discuss at the end of this essay.

As a result reviewers rarely do a complete or good job of reviewing things, as they understand what the expected result is. Thus the review gets hollowed out from its foundation because the recipient of the review expects to get more than just a passing grade; they expect to get a giant pat on the back. If they don’t get their expected results, the reaction is often swift and punishing to those finding the problems. Often those looking over the shoulder are equally unaccepting of problems being found. Those overseeing work are highly political and worried about appearances or potential scandal. The reviewers know this too, and know that a bad review won’t result in better work; it will just be trouble for those being reviewed. The end result is that the peer review is broken by the review itself being hollow, the reviewers being easy on the work because of the explicit expectations and the implicit punishments, and the lack of follow-through for any problems that might be found.

As a result reviewers rarely do a complete or good job of reviewing things, as they understand what the expected result is. Thus the review gets hollowed out from its foundation because the recipient of the review expects to get more than just a passing grade; they expect to get a giant pat on the back. If they don’t get their expected results, the reaction is often swift and punishing to those finding the problems. Often those looking over the shoulder are equally unaccepting of problems being found. Those overseeing work are highly political and worried about appearances or potential scandal. The reviewers know this too, and know that a bad review won’t result in better work; it will just be trouble for those being reviewed. The end result is that peer review is broken: the review itself is hollow, the reviewers go easy on the work because of the explicit expectations and the implicit punishments, and there is no follow-through on any problems that might be found.

We then get to the level above those being reviewed and closer to the source of the problem, the political system. Our political systems are poisonous to everything and everyone. We do not have a political system perhaps anywhere in the World that is functioning to govern. The result is a collective inability to deal with issues, problems and challenges at a massive scale. We see nothing but stagnation and blockage. We have a complete lack of will to deal with anything that is imperfect. Politics is always present and important because science and engineering are still intrinsically human activities, and humans need politics. The problem is that truth and reality must play some normative role in decisions. The rejection of effective peer review is a rejection of reality as being germane and important in decisions. This rejection is ultimately unstable and unsustainable. The only question is when and how reality will impose itself, but it will happen, and in all likelihood through some sort of calamity.

To get to a better state vis-à-vis peer review, trust and honesty need to become a priority. This is a piece of a broader rubric for progress toward a system that values work that is high in quality. We are not talking about excellence as superficially declared by the current branding exercise peer review has become, but the actual achievement of unambiguous excellence. The combination of honesty, trust and the search for excellence and achievement is needed to begin to fix our system. Much of the basic structure of our modern society is arrayed against this sort of change. We need to recognize the stakes in this struggle and prepare ourselves for difficult times. Producing a system that supports something that looks like peer review will be a monumental struggle. We have become accustomed to a system that feeds on false excellence and achievement and celebrates scandal as an opiate for the masses.

simply lie to ourselves about how good everything is and how excellent all of us are.

of decay ultimately ends the ability to conduct a peer review at all. Moreover, the culture that is arising in science acts as a further inhibition to effective review by removing the attitudes necessary for success from the basic repertoire of behaviors.

It has been a long time since I wrote a list post, and it seemed a good time to do one. They’re always really popular online, and it’s a good way to survey something. Looking forward into the future is always a nice thing when you need to be cheered up. There are lots of important things to do, and lots of massive opportunities. Maybe if we can muster our courage and vision we can solve some important problems and make a better world. I will cover science in general, and hedge the conversation toward computational science, because that’s what I do and know the most about.

Fixing the research environment and encouraging risk taking, innovation and tolerance for failure. I put this first because it impacts everything else so deeply. There are many wonderful things that the future holds for all of us, but the overall research environment is holding us back from the future we could be having. The environment for conducting good, innovative, game-changing research is terrible, and needs serious attention. We live in a time where all risk is shunned and any failure is punished. As a result innovation is crippled before it has a chance to breathe. The truth is that it is a symptom of a host of larger societal issues revolving around our collective governance and capacity for change and progress.

Somehow we have gotten the idea that research can be managed like a construction project, and such management is a mark of quality. Science absolutely needs great management, but the current brand of scheduled breakthroughs, milestones and micromanagement is choking the science away. We have lost the capacity to recognize that current management is only good for leeching money out of the economy for personal enrichment, and terrible for the organizations being managed whether it’s a business, laboratory or university. These current fads are oozing their way into every crevice of research including higher education where so much research happens. The result is a headlong march toward mediocrity and the destruction of the most fertile sources of innovation in the society. We are living off the basic research results of 30-50 years past, and creating an environment that will assure a less prosperous future. This plague is the biggest problem to solve but is truly reflective of a broader cultural milieu and may simply need to run its disastrous course.

Over the weekend I read about the difference between first- and second-level thinking. First-level thinking looks for the obvious and superficial as a way of examining problems, issues and potential solutions. It is dealing with things in an obvious and completely intellectually unengaged manner. Let’s just say that science today is governed by first-level thinking, and it’s a very bad thing. This is contrasted with second-level thinking, which teases problems apart, analyzes them, and looks beyond the obvious and superficial. It is the source of innovation, serendipity and inspiration. Second-level thinking is the realm of expertise and depth of thought, and we all should know that in today’s World the expert is shunned and reviled as being dangerous. We will all suffer the ill-effects of devaluing expert judgment and thought as applied to our very real problems.

y discoveries we make, and CRISPR seems like the epitome of this.

manufacturing quality and process is a huge aspect of the challenges. This is especially true for high-performance parts where the requirements on the quality are very high. The other end of the problem is the opportunity to break free of traditional issues in design and open up the possibility of truly innovative approaches to optimality. Additional problems are associated with the quality and character of the material used in the design, since its use in the creation of the part is substantially different from that of materials in traditionally manufactured parts. Many of these challenges will be partially attacked using modeling & simulation drawing upon cutting-edge computing platforms.

being a bit haughty in my contention that what I’ve laid out in my blog constitutes second-level thinking about high performance computing, but I stand by it, and by the summary that the thinking governing our computing efforts today constitutes hopelessly superficial first-level thought. Really solving problems and winning at scientific computing requires a sea change toward applying the fruits of in-depth thinking about how to succeed at using computing as a means for societal good, including the conduct of science.

of the Orwellian Big Brother we should all fear. Taming big data is the combination of computing, algorithms, statistics and business all rolled into one. It is one of the places where scientific computing is actually alive with holistic energy driving innovation all the way from models of data (reality) to algorithms for taming the data and hardware to handle the load. New sensors and measurement devices are only adding to the wealth as the Internet of Things moves forward. In science, medicine and engineering new instruments and sensors are flooding the World with huge data sets that must be navigated, understood and utilized. The potential for discovery and progress is immense, as is the challenge of grappling with the magnitude of the problem.

reproducibility of their work. Nonetheless, this is literally a devil-is-in-the-details area, and getting right all the details that contribute to a research finding is really hard. The less oft-spoken subtext to this discussion is the general societal lack of faith in science that is driving this issue. A more troubling thought regarding how replicable research actually is comes from considering how uncommon replication actually is. It is uncommon to see actual replication, and difficult to fund or prioritize such work. Seeing how commonly such replication fails under these circumstances only heightens the sense of the magnitude of this problem.

on those computers. Over time improvements in methods and algorithms have outpaced improvements in hardware. Recently this bit of wisdom has been lost to the sort of first-level thinking so common today. In big data we see needs for algorithm development overcoming the small-minded focus people rely upon. In scientific computing the benefits and potential are there for breakthroughs, but the vision and will to put effort into this are lacking. So I’m going to hedge toward the optimistic and hope that we see through the errors in our thinking and put faith in algorithms to unleash their power on our problems in the very near future!
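
One way to make the claim about algorithms outpacing hardware concrete is the textbook case of solving a 3D Poisson problem on an n x n x n grid. The sketch below compares only asymptotic operation counts with the constants dropped: banded Gaussian elimination costs on the order of n^7 operations, while multigrid costs on the order of n^3. The function names are hypothetical and the example is an illustration under those stated scalings, not a measurement of any real code.

```python
# Asymptotic operation counts (constants dropped) for a 3D Poisson problem
# on an n x n x n grid. Illustration only; all names here are hypothetical.


def banded_elimination_ops(n: int) -> int:
    """Banded Gaussian elimination: O(N * b^2) with N = n^3 and b = n^2."""
    unknowns = n ** 3        # one unknown per grid point
    bandwidth = n ** 2       # matrix bandwidth with natural ordering
    return unknowns * bandwidth ** 2   # n^7


def multigrid_ops(n: int) -> int:
    """Multigrid: work proportional to the number of unknowns, O(N) = n^3."""
    return n ** 3


def algorithmic_speedup(n: int) -> int:
    """Ratio of the two operation counts, which works out to n^4."""
    return banded_elimination_ops(n) // multigrid_ops(n)


if __name__ == "__main__":
    for n in (64, 256, 1024):
        print(f"n = {n:5d}   speedup ~ {algorithmic_speedup(n):.3e}")
```

For n = 1024 the ratio is n^4, roughly 10^12, and that gain comes entirely from the choice of algorithm on the same machine.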

ory is making problems like this tractable. It would seem that driverless-robot cars are solving this problem in one huge area of human activity. Multiple huge entities are working this problem and by all accounts making enormous progress. The standard for the robot cars would seem to be very much higher than for humans, and the system is biased against this sort of risk. Nonetheless, it would seem we are very close to seeing driverless cars on a road near you in the not too distant future. If we can see the use of robot cars on our roads with all the attendant complexity, risks and issues associated with driving, it is only a matter of time before robots begin to take their place in many other activities.

otentially victimized by cyber-criminals as more and more commerce and finance take place online, driving a demand for security. The government-police-military-intelligence apparatus also sees the potential for incredible security issues and possible avenues through the virtual records being created. At the same time the ability to have privacy or be anonymous is shrinking away. People have the desire to not have every detail of their lives exposed to the authorities (employers, neighbors, parents, children, spouses,…), meaning that cyber-privacy will become a big issue too. This will lead to immense technical-legal-social problems and conflict over how to balance the needs-demands-desires for security and privacy. How we deal with these issues will shape our society in huge ways over the coming years.

The news of the Chinese success in solidifying their lead in supercomputer performance “shocked” the high-performance computing World a couple of weeks ago. To make things even more troubling to the United States, the Chinese achievement was accomplished with homegrown hardware (a real testament to the USA’s export control law!). It comes as a blow to the American efforts to retake the lead in computing power. It wouldn’t matter if the USA or anyone else for that matter were doing things differently. Of course the subtext of the entire discussion around supercomputer speed is the supposition that raw computer power measures the broader capability in computing, which defines an important body of expertise for National economic and military security. A large part of winning in supercomputing is the degree to which this supposition is patently false. As falsehoods go, this is not ironclad and is a matter of debate over lots of subtle details that I elaborated upon last week. The truth depends on how idiotic the discussion needs to be and one’s tolerance for subtle technical arguments. In today’s world arguments can only be simple, verging on moronic, and technical discussions are suspect as a matter of course.

seful computers. Secondly, we need to invest our resources in the most effective areas for success: these are modeling, methods and algorithms, all of which are far greater sources of innovation and true performance for the accomplishment of modeling & simulation. The last thing is to change the focus of supercomputing to modeling & simulation because it is where the societal value of computing is delivered. If these three things were effectively executed upon, victory would be assured to whoever made the choices. The option of taking more effective action is there for the taking.

We might take a single example to illustrate the issues associated with modeling: gradient diffusion closures for turbulence. The diffusive closure of the fluid equations for the effects of turbulence is ubiquitous, useful and a dead end without evolution. It is truly a marvel of science going back to Prandtl’s mixing length theory. Virtually all the modeling of fluids done with supercomputing is reliant on its fundamental assumptions and intrinsic limitations. The only place where its reach does not extend is direct numerical simulation, where the flows are computed without the aid of modeling, i.e., a priori (which for the purposes here I will take as a given although it actually needs a lot of conversation itself). All of this said, the ability of direct numerical simulation to answer our scientific and technical questions is limited because turbulence is such a vigorous and difficult multiscale problem that even an exascale computer cannot slay it.
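
To put a rough number on why direct numerical simulation cannot simply be bought with hardware, here is a minimal sketch using the classical Kolmogorov scaling estimates, in which the number of grid points grows like Re^(9/4) and the total work (grid points times time steps) grows like Re^3. The function names, the four stored fields per grid point and the 8-byte values are assumptions made purely for illustration, and all constants of proportionality are dropped, so the results are order-of-magnitude guides at best.

```python
# Order-of-magnitude cost of direct numerical simulation (DNS) of turbulence
# from classical Kolmogorov scaling. Constants of proportionality are dropped;
# every name and default value here is a hypothetical choice for illustration.


def dns_grid_points(reynolds: float) -> float:
    """Grid points needed to resolve all scales in 3D: ~ Re^(9/4)."""
    return reynolds ** 2.25


def dns_total_work(reynolds: float) -> float:
    """Grid points times time steps: ~ Re^3."""
    return reynolds ** 3


def dns_memory_bytes(reynolds: float, fields: int = 4,
                     bytes_per_value: int = 8) -> float:
    """Memory for one copy of the solution (assumed: 4 fields, 8-byte values)."""
    return dns_grid_points(reynolds) * fields * bytes_per_value


if __name__ == "__main__":
    for re in (1e4, 1e6, 1e8):
        print(f"Re = {re:.0e}: points ~ {dns_grid_points(re):.1e}, "
              f"work ~ {dns_total_work(re):.1e}, "
              f"memory ~ {dns_memory_bytes(re):.1e} bytes")
```

At a Reynolds number of 10^8 this estimate gives roughly 10^18 grid points, or about 3 x 10^19 bytes just to hold one copy of the solution under these assumptions, which is why improved closures rather than raw speed are where the leverage lies.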

Once a model is conceived of in theory we need to solve it. If the improved model cannot yield solutions, its utility is limited. Methods for computing solutions to models beyond the capability of analytical tools were the transformative aspect of modeling & simulation. Before this many models were only solvable in very limited cases through applying a number of even more limiting assumptions and simplifications. Beyond just solving the model, we need to solve it correctly, accurately and efficiently. This is where methods come in. Some models are nigh on impossible to solve, or entail connections and terms that evade tractability. Thus coming up with a method to solve the model is a necessary element in the success of computing. In the early years of scientific computing many methods came into use that tamed models into ease of use. Today’s work on methods has slowed to a crawl, and in a sense our methods development research is a victim of its own success.

The biggest issue is the death of Moore’s law and our impending failure to produce the results promised. Rather than reform our programs to achieve real benefits for science and national security, we will see a catastrophic failure. This will be viewed through the usual lens of scandal. It is totally foreseeable and predictable. It would be advisable to fix this before disaster, but my guess is we don’t have the intellect, foresight, bravery or leadership to pull this off. The end is in sight and it won’t be pretty. Instead there is a different path that would be as glorious and successful. Does anyone have the ability to turn away from the disastrous path and consciously choose success?

eek was full of the USA’s continued losing streak to Chinese supercomputers. Their degree of supremacy is only growing and now the Chinese have more machines on the list of top high performance computers than the USA. Perhaps as importantly, the Chinese relied on homegrown computer hardware rather than on the USA’s. One could argue that American export law cost them money, but also encouraged them to build their own. So is this a failure or success of the policy, or a bit of both? Rather than panic, maybe it’s time to admit that it doesn’t really matter. Rather than offer a lot of concern we should start a discussion about how meaningful it actually is. If the truth is told it isn’t very important at all.

If we too aggressively pursue regaining the summit of this list we may damage the part of supercomputing that actually does matter. Furthermore what we aren’t discussing is the relative positions of the Chinese to the Americans (or the Europeans for that matter) in the part of computing that does matter: modeling & simulation. Modeling and simulation is the real reason we do computing and the value in it is not measured or defined by computing hardware. Granted, computer hardware plays a significant role in the overall capacity to conduct simulations, but it is not even the dominant player in modeling effectiveness. Worse yet, in the process of throwing all our effort behind getting the fastest hardware, we are systematically undermining the parts of supercomputing that add real value and have far greater importance. Underlying this assessment is the conclusion that our current policy isn’t based on what is important and supports a focus on less important aspects of the field that simply are more explicable to lay people.

If we too aggressively pursue regaining the summit of this list we may damage the part of supercomputing that actually does matter. Furthermore, what we aren’t discussing is the relative position of the Chinese to the Americans (or the Europeans for that matter) in the part of computing that does matter: modeling & simulation. Modeling & simulation is the real reason we do computing, and its value is not measured or defined by computing hardware. Granted, computer hardware plays a significant role in the overall capacity to conduct simulations, but it is not even the dominant player in modeling effectiveness. Worse yet, in the process of throwing all our effort behind getting the fastest hardware, we are systematically undermining the parts of supercomputing that add real value and have far greater importance. Underlying this assessment is the conclusion that our current policy isn’t based on what is important; it supports a focus on less important aspects of the field that are simply more explicable to lay people.

We have set about a serious program to recapture the supercomputing throne. In its wake we will do untold amounts of damage to the future of modeling & simulation. We are in the process of spending huge sums of money chasing a summit that does not matter at all. In the process we will starve the very efforts that could allow us to unleash the full power of the science and engineering capability we should be striving for, a capability that would have massively positive impacts on all of the things supercomputing is supposed to contribute toward. The entire situation is patently absurd, and tragic. It is ironic that those who act to promote high performance computing are killing it. They are killing it because they fail to understand it or how science actually works.

The single most important thing in modeling & simulation is the nature of the model itself. The model sets the limit on how well the rest of the simulation can reproduce reality. Looking at HPC today, any effort to improve models is utterly and completely lacking. Next in importance are the methods that solve those models, and again we see no effort at all in developing better methods. Next we have algorithms, whose character determines the efficiency of solution, and again efforts to improve this character are absent. With algorithms we do start to see some effort, but only in the service of implementing existing ones on the new computers. Next in importance come code and system software, and here we see significant effort. The effort is to move old codes onto new computers, and to produce software systems that expose the power of new computers to some useful end. Last and furthest from importance is the hardware. Here, we see the greatest degree of focus. In the final analysis we see the greatest focus, money and energy on those things that matter least. These are the makings of a complete disaster, and a disaster of our own making.

It is time to focus our collective creative and innovative energy where opportunity exists. For example, the arena of algorithmic innovation has been a more fertile and productive route to improving the performance of modeling & simulation than hardware. The evidence for this source of progress is vast and varied; I’ve written on it on several occasions.

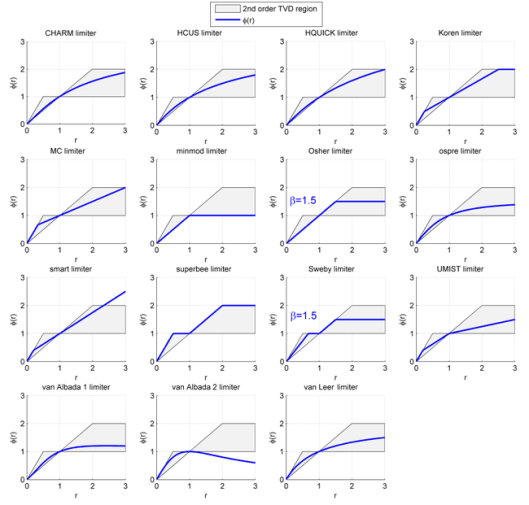

All of this gets at a far more widespread and dangerous societal issue: we are incapable of dealing with any issue that is complex and technical. Increasingly we have no tolerance for anything where expert judgment is necessary. Science and scientists are increasingly being dismissed when their messaging is unpleasant or difficult to take. Examples abound, with last week’s Brexit easily coming to mind and climate change providing an ongoing example of willful ignorance. Talking about how well we or anyone else does modeling & simulation is subtle and technical. As everyone should be well aware, subtle and technical arguments and discussions are not possible in the public sphere. As a result we are left with horrifically superficial measures such as raw supercomputing power as measured by a meaningless but accepted benchmark. The result is a harvest of immense damage to actual capability and investment in a strategy that does very little to improve matters. We are left with a hopelessly ineffective high performance computing program that wastes huge sums of money and entire careers, and will in all likelihood result in a real loss of national supremacy in modeling & simulation.

A key part of the success of the first generation of methods was the systematic justification for the form of limiters via a deep mathematical theory. This was introduced by Ami Harten with his total variation diminishing (TVD) methods. This nice structure for limiters, along with proofs of the non-oscillatory property, really allowed these methods to take off. To amp things up even more, Sweby did some analysis and introduced a handy diagram to visualize the limiter and determine simply whether it fit the bill for being a monotone limiter. The theory builds upon some earlier work of Harten that showed how upwind methods provided a systematic basis for reliable computation through vanishing viscosity.
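To make the Sweby diagram concrete, here is a minimal sketch in Python. It assumes the usual flux-limiter convention, where the limiter φ is a function of r, the ratio of consecutive solution gradients. In that convention the TVD region is 0 ≤ φ(r) ≤ min(2r, 2) with φ = 0 for r ≤ 0, and the second-order part of that region is bounded below by minmod and above by superbee. The function names and the sampling grid are my own choices for illustration, not anything from the original papers.

```python
import numpy as np

# Limiters as functions of r, the ratio of consecutive gradients.
def minmod(r):
    return np.maximum(0.0, np.minimum(r, 1.0))

def superbee(r):
    return np.maximum(0.0, np.maximum(np.minimum(2.0 * r, 1.0),
                                      np.minimum(r, 2.0)))

def van_leer(r):
    return (r + np.abs(r)) / (1.0 + np.abs(r))

def lax_wendroff(r):
    return np.ones_like(r)   # phi = 1: the unlimited second-order scheme

def upwind(r):
    return np.zeros_like(r)  # phi = 0: first-order upwind differencing

def in_tvd_region(phi, r, tol=1e-12):
    """True if 0 <= phi(r) <= min(2r, 2), with phi = 0 for r <= 0."""
    p = phi(r)
    upper = np.maximum(0.0, np.minimum(2.0 * r, 2.0))
    return bool(np.all((p >= -tol) & (p <= upper + tol)))

def in_second_order_tvd_region(phi, r, tol=1e-12):
    """True if phi(r) lies between minmod(r) and superbee(r) everywhere."""
    p = phi(r)
    return bool(np.all((p >= minmod(r) - tol) & (p <= superbee(r) + tol)))

# Sample the r axis of the diagram, including negative r (local extrema).
r = np.linspace(-2.0, 6.0, 801)
for name, phi in [("upwind", upwind), ("minmod", minmod),
                  ("van Leer", van_leer), ("superbee", superbee),
                  ("Lax-Wendroff", lax_wendroff)]:
    print(name, in_tvd_region(phi, r), in_second_order_tvd_region(phi, r))
```

Checking on a sampled grid is only a numerical stand-in for the picture, not a proof, but it sorts the familiar cases the way the diagram does: upwind sits inside the TVD region without being second order, and Lax-Wendroff falls outside it.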

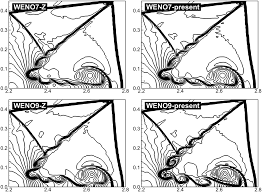

The TVD and monotonicity-preserving methods, while reliable and tunable through these functional relations, have serious shortcomings. These methods have serious limitations in accuracy, especially near detailed features in solutions. These detailed structures are significantly degraded because the methods based on these principles are only first-order accurate in these areas, basically reducing to upwind differencing. In mathematical terms this means that the solutions are first-order in the L-infinity norm, approximately one-and-a-half order in the L2 (energy) norm and second-order in the L1 norm. The L1 norm is natural for shocks so it isn’t a complete disaster. While this class of methods is vastly better than its linear predecessors, effectively providing solutions that were completely impossible before, the methods are imperfect. The desire is to remove the accuracy limitations of these methods, especially to avoid degradation of features in the solution.

A big part of understanding the issues with essentially non-oscillatory (ENO) methods comes down to how the method works. The approximation is made by hierarchically selecting the smoothest approximation (in some sense) for each order and working one’s way up to high order. In this way the selection of the second-order term depends on the first-order term, the third-order term depends on the selected second-order term, the fourth-order term on the third-order one, and so on. This makes the ENO method sensitive to small variations in the data, and prey to pathological data. For example, the data could be chosen so that the linearly unstable stencil is preferentially chosen, leading to nasty solutions. The safety of the relatively smooth and dissipative method makes up for these dangers. Still, these problems resulted in the creation of the weighted ENO (WENO) methods that have basically replaced ENO for all intents and purposes.
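The hierarchical selection is easier to see in code. The sketch below is a simplified illustration, assuming a uniform grid and point-value data, so that comparing undivided differences is equivalent to comparing divided differences. The function name `eno_stencil` and the tie-breaking rule (ties extend to the right) are my own assumptions for the example, not a reference implementation.

```python
import numpy as np

def eno_stencil(u, i, order):
    """Return the leftmost index of an ENO stencil of `order` points
    that contains point i, chosen one point at a time."""
    u = np.asarray(u, dtype=float)
    n = len(u)
    if order > n:
        raise ValueError("stencil is wider than the data")
    # diffs[k][j] is the k-th undivided difference over points j .. j+k.
    diffs = [u]
    for k in range(1, order):
        diffs.append(diffs[-1][1:] - diffs[-1][:-1])
    left = i  # start from the one-point stencil {i}
    for k in range(1, order):
        # The current stencil is left .. left+k-1; add one point on either
        # side and keep whichever extension has the smaller difference.
        can_left = left - 1 >= 0
        can_right = left + k <= n - 1
        if can_left and (not can_right or
                         abs(diffs[k][left - 1]) < abs(diffs[k][left])):
            left -= 1
    return left

# Near a jump, the stencil leans away from the discontinuity.
step = [0, 0, 0, 0, 1, 1, 1, 1]
print(eno_stencil(step, 3, 3), eno_stencil(step, 4, 3))

# On smooth data the choice is decided by tiny differences.
line = np.arange(8.0)
bumped = line.copy()
bumped[5] += 1e-9
print(eno_stencil(line, 3, 3), eno_stencil(bumped, 3, 3))
```

In the step example each stencil stays on its own side of the jump, which is the behavior that makes the method non-oscillatory. In the second example a perturbation of 1e-9 in a single value is enough to shift the chosen stencil, which is the sensitivity to small variations described above. Each decision is locked in before the next order is considered, so an early choice constrains every later one.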