Better to get hurt by the truth than comforted with a lie.

― Khaled Hosseini

Being honest about one’s shortcomings is incredibly difficult. This is true whether one is looking at oneself or at a computer model. It’s even harder to let someone else be honest with you. This difficulty is at the core of many problems with verification and validation (V&V). Done correctly, V&V is a form of radical honesty that many simply cannot tolerate. The reasons are easy to see if we consider our reward systems. Computer modelers want to get great results on the problems they are trying to solve, and they are rated on their ability to get seemingly high-quality answers (https://williamjrider.wordpress.com/2016/12/22/verification-and-validation-with-uncertainty-quantification-is-the-scientific-method/ ). As a result, there is significant friction with honest V&V assessments, which cast uncertainty and doubt on the quality of results. The tension between good results and honesty will always favor the results. Thus V&V is done poorly to preserve the ability of modelers to believe their results are better than they really are. If we want V&V to be done well, an additional level of emphasis needs to be placed on honesty.

If you do not tell the truth about yourself you cannot tell it about other people.

― Virginia Woolf

V&V is about assessing capability. It is not about getting great answers. This distinction is essential to recognize. V&V is about collecting highly credible evidence about the nature of modeling capability. By its very nature, the credibility of the evidence means that the results are whatever the results happen to be. If the results are good, the evidence will show this persuasively. If the results are poor, the evidence will show that too (https://williamjrider.wordpress.com/2017/09/22/testing-the-limits-of-our-knowledge/ ). The utility of V&V is that it provides a path to improved results along with the evidence to support that path. The improved results would then be supported by further V&V assessments. This entire process is predicated on the honesty of those conducting the work, but the management of these efforts is a problem. Management is continually trying to promote great results as the outcome of modeling. Unless the results are actually great, this promotion steers the work toward lower quality V&V. In the process, honesty and evidence are typically sacrificed.

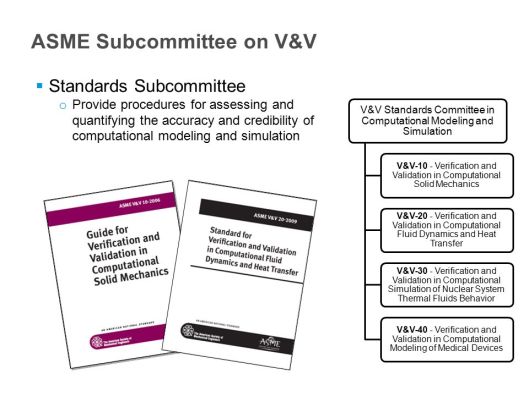

Standards Subcommittee. Provide procedures for assessing and quantifying the accuracy and credibility of computational modeling and simulation. V&V Standards Committee in Computational Modeling and Simulation. V&V-10 – Verification and Validation in Computational Solid Mechanics. V&V-20 – Verification and Validation in Computational Fluid Dynamics and Heat Transfer. V&V-30 – Verification and Validation in Computational Simulation of Nuclear System Thermal Fluids Behavior. V&V-40 – Verification and Validation in Computational Modeling of Medical Devices.

If we want to do V&V properly, something in this value system needs to change. Fundamentally, honesty and a true understanding of the basis of computational modeling must surpass the desire to show great capability. The trends in management of science are firmly arrayed against honestly assessing capability. The prevalence of management by press release and a marketing-based sales pitch for science money both act to promote a basic lack of honesty and undermine disclosure of problems. V&V provides firm evidence of what we know, and what we don’t know. The quantitative and qualitative aspects of V&V can produce exceptionally useful evidence of where modeling needs to improve. These characteristics conflict directly with the narrative that modeling has already brought reality to heel. Program after program is sold on the basis that modeling can produce predictions of what will be seen in reality. Computational modeling is seen as an alternative to expensive and dangerous experiments and testing. It can provide reduced costs and cycle times for engineering. All of this can be a real benefit, but the degree of current mastery is seriously oversold.

Doing V&V properly can unmask this deception (I do mean deception even if the deceivers are largely innocent of outright graft). The deception is more the product of massive amounts of wishful thinking, and harmful groupthink focused on showing good results rather than honest results. Sometimes this means willfully ignoring evidence that does not support the mastery. In other cases, the results are based on heavy-handed calibrations, and the modeling is far from predictive. In the naïve view, the non-predictive modeling will be presented as predictions and hailed as great achievements. Those who manage modeling are largely responsible for this state of affairs. They reward the results that show how good the models are and punish honest assessment. Since V&V is the vehicle for honest assessment, it suffers. Modelers will either avoid V&V entirely, or thwart any effort to apply it properly. Usually the results are given without any firm breakdown of uncertainties, accompanied by a simple assertion that the “agreement is good” or the “agreement is excellent” without any evidentiary basis save plots that display data points and simulation values being “close”.

If you truly have faith in your convictions, then your convictions should be able to stand criticism and testing.

― DaShanne Stokes

This situation can be made better by changing the narrative about what constitutes good results. If we value knowledge and evidence of mastery as objectives instead of predictive power, we tilt the scales toward honesty. One of the clearest invitations to hedge toward dishonesty is the demand for “predictive modeling”. Predictive modeling has become a mantra and sales pitch instead of an objective. Vast sums of money are allotted to purchase computers, and place modeling software on these computers with the promise of prediction. We are told that we can predict how our nuclear weapons work so that we don’t have to test them. The new computer that is a little bit faster is the key to doing this (they always help, but are never the linchpin). We can predict the effects of human activity on climate to be proactive about stemming its effects. We can predict weather and hurricanes with increasing precision. We can predict all sorts of consequences and effect better designs of our products. All of these predictive capabilities are real, and all have been massively oversold. We have lost our ability to look at challenges as good things and muster the will to overcome them. We need to tilt ourselves to be honest about how predictive we are, and understand where our efforts can make modeling better. Just as important, we need to unveil the real limits on our ability to predict.

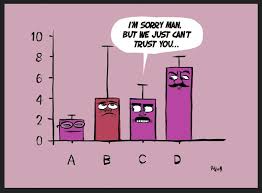

A large part of the conduct of V&V is unmasking the detailed nature of uncertainty. Some of this uncertainty comes from our lack of knowledge of nature, or flaws in our fundamental models. Other uncertainty is simply intrinsic to our reality. These are phenomena that vary even with seemingly identical starting points. Separating these types of uncertainty and defining their magnitude should be greatly in the service of science. For the uncertainties that we can reduce through greater knowledge, we can array efforts to effect this reduction. This must be coupled to the opportunity for experiment and theory to improve matters. On the other hand, if uncertainty is irreducible, it is important to factor it into decisions and accommodate its presence. By ignoring uncertainty with the practice of a default of ZERO uncertainty (https://williamjrider.wordpress.com/2016/04/22/the-default-uncertainty-is-always-zero/ ), we become powerless to assert any authority over it, or to react to it practically.
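
One hedged way to picture the separation described above is a nested (double-loop) sampling study: an outer loop over values of a poorly known parameter (reducible, lack-of-knowledge uncertainty) and an inner loop over intrinsic variability (irreducible). The sketch below is only an illustration under stated assumptions; `split_uncertainty`, the `model(theta, rng)` signature, and the sample sizes are hypothetical names and choices, not anything prescribed in this post.

```python
import random
import statistics

def split_uncertainty(model, epistemic_values, n_inner=200, seed=0):
    """Rough split of output variance into irreducible and reducible parts.

    model(theta, rng) is a hypothetical simulation: theta is a poorly known
    parameter, rng supplies the intrinsic randomness of the phenomenon.
    Requires at least two epistemic values.
    """
    rng = random.Random(seed)
    inner_means, inner_vars = [], []
    for theta in epistemic_values:                       # outer loop: what we do not know
        outputs = [model(theta, rng) for _ in range(n_inner)]  # inner loop: natural variability
        inner_means.append(statistics.fmean(outputs))
        inner_vars.append(statistics.variance(outputs))
    # Average spread at fixed theta: the part more knowledge cannot remove.
    aleatory_var = statistics.fmean(inner_vars)
    # Spread of the means across theta: the part better knowledge could reduce.
    # Note: this estimate also carries a little sampling noise from the inner loop.
    epistemic_var = statistics.variance(inner_means)
    return aleatory_var, epistemic_var
```

For example, `split_uncertainty(lambda theta, rng: theta + rng.gauss(0.0, 1.0), [1.0, 2.0, 3.0])` returns two numbers that can be reported side by side, which is already more than a default of zero uncertainty.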

In the conduct of predictive science, we should look to uncertainty as one of our primary outcomes. When V&V is conducted with high professional standards, uncertainty is unveiled and estimated in magnitude. With our highly over-promised mantra of predictive modeling enabled by high performance computing, uncertainty is almost always viewed negatively. This creates an environment where willful or casual ignorance of uncertainty is tolerated and even encouraged. Incomplete and haphazard V&V practice becomes accepted because it serves the narrative of predictive science. The truth and actual uncertainty are treated as bad news, and greeted with scorn instead of praise. It is simply so much easier to accept the comfort that the modeling has achieved a level of mastery. This comfort is usually offered without evidence.

The trouble with most of us is that we’d rather be ruined by praise than saved by criticism.

― Norman Vincent Peale

Somehow a different narrative and value system needs to be promoted for science to flourish. A starting point would be a recognition of the value of highly professional V&V work and the desire for completeness and disclosure. A second element of the value system would be valuing progress in science. In keeping with the value on progress would be a recognition that detailed knowledge of uncertainty provides direct and useful evidence to steer science productively. We can also use uncertainty to act proactively in making decisions based on actual predictive power. Furthermore, we may choose not to use modeling to inform decisions if the uncertainties are too large. The general support for the march forward of scientific knowledge and capability is greatly aided by V&V. If we have a firm accounting of our current state of knowledge and capability, we can mindfully choose where to put emphasis on progress.

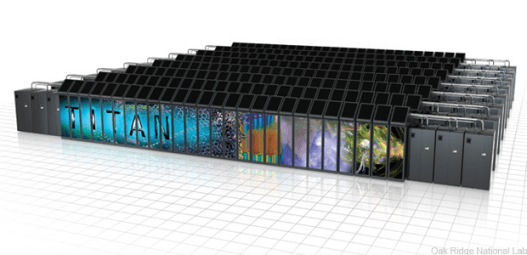

This last point gets at the problems with implementing a more professional V&V practice. If V&V finds that uncertainties are too large, the rational choice may be to not use modeling at all. This runs the risk of being politically incorrect. Programs are sold on predictive modeling, and the money might look like a waste! We might find that the uncertainties from numerical error are much smaller than other uncertainties, and the new super expensive, super-fast computer will not help make things any better. In other cases, we might find out that the model is not converging toward a (correct) solution. Again, the computer is not going to help. Actual V&V is likely to produce results that require changing programs and investments in reaction. Current management often looks to this as a negative and worries that the feedback will reflect poorly on previous investments. There is a deep-seated lack of trust between the source of the money and the work. The lack of trust is driving a lack of honesty in science. Any money spent on fruitless endeavors is viewed as a potential scandal. The money will simply be withdrawn instead of redirected more productively. No one trusts the scientific process to work effectively. The result is an unwillingness to engage in a frank and accurate dialog about how predictive we actually are.

It’s discouraging to think how many people are shocked by honesty and how few by deceit.

― Noël Coward

It wouldn’t be too much of a stretch to say that technical matters are a minor aspect of improving V&V. This does not make light of, nor minimize, the immense technical challenges in conducting V&V. The problem is that the current culture of science is utterly toxic to technical progress. We need a couple of elements to change in the culture of science to make progress. The first one is trust. The lack of trust is pervasive and utterly incapacitating (https://williamjrider.wordpress.com/2013/11/27/trust/, https://williamjrider.wordpress.com/2016/04/01/our-collective-lack-of-trust-and-its-massive-costs/, https://williamjrider.wordpress.com/2014/12/11/trust-and-truth-in-management/ ). Because of the underlying lack of trust, scientists and engineers cannot provide honest results or honest feedback on results. They do not feel safe and secure to do either. This is a core element surrounding the issues with peer review (https://williamjrider.wordpress.com/2016/07/16/the-death-of-peer-review/ ). In an environment where there is compromised trust, peer review cannot flourish because honesty is fatal.

Nothing in this world is harder than speaking the truth, nothing easier than flattery.

― Fyodor Dostoyevsky

The second is a value on honesty. Today’s World is full of examples where honesty is punished rather than rewarded. Speaking truth to power is a great way to get fired. Those of us who want to be honest are left in a precarious position. We can choose safety and security while compromising our core principles, or stay true to our principles and risk everything. Over time, the forces of compromised integrity, marketing and bullshit over substance wear us down. Today the liars and charlatans are winning. Being someone of integrity is painful and overwhelmingly difficult. The system seems to be stacked against honest discourse and disclosure. Of course, honesty and trust are completely coupled. Both need to be supported and rewarded. V&V is simply one area where these trends play out and distort work.

It is both jarring and hopeful that the elements holding science back are evident in the wider world. The current political discourse is full of issues that are tied to trust and honesty. The degree to which we lack trust and honesty in the public sphere is completely disheartening. The entire system seems to be spiraling out of control. It does not seem that the system can continue on this path much longer (https://williamjrider.wordpress.com/2017/10/20/our-silence-is-their-real-power/ ). Perhaps we have hit bottom and things will get better. How much worse can things get? The time for things to start getting better has already passed. This is true in the broader public World as well as science. In both cases trust in each other and a spirit of honesty would go a long way to providing a foundation for progress. The forces of stagnation and opposition to progress have won too much ground.

Integrity is telling myself the truth. And honesty is telling the truth to other people.

― Spencer Johnson

Nothing is so difficult as not deceiving oneself.

― Ludwig Wittgenstein

There is something seriously off about working on scientific computing today. Once upon a time it felt like working in the future, where the technology and the work were amazingly advanced and forward-looking. Over the past decade this feeling has changed dramatically. Working in scientific computing is starting to feel worn-out, old and backwards. It has lost a lot of its sheen and it’s no longer sexy and fresh. If I look back 10 years, everything we then had was top of the line and right at the “bleeding” edge. Now we seem to be living in the past; the current advances driving computing are absent from our work lives. We are slaving away in a totally reactive mode. Scientific computing is staid, immobile and static, where modern computing is dynamic, mobile and adaptive. If I want to step into the modern world, now I have to leave work. Work is a glimpse into the past instead of a window to the future. It is not simply the technology, but the management systems that come along with our approach. We are being left behind, and our leadership seems oblivious to the problem.

Even worse than the irony is the price this approach is exacting on scientific computing. For example, the computing industry used to beat a path to scientific computing’s door, and now we have to basically bribe the industry to pay attention to us. A fair accounting of the role of government in computing is some combination of being a purely niche market, and partially pork barrel spending. Scientific computing used to be a driving force in the industry, and now lies as a cul-de-sac, or even pocket universe, divorced from the day-to-day reality of computing. Scientific computing is now a tiny and unimportant market to an industry that dominates the modern World. In the process, scientific computing has allowed itself to become disconnected from modernity, and hopelessly imbalanced. Rather than leverage the modern World and its technological wonders, many of which are grounded in information science, it resists and fails to make best use of the opportunity. This robs scientific computing of impact in the broader World, and diminishes the draw of new talent to the field.

[…] present imbalances. If one looks at the modern computing industry and its ascension to the top of the economic food chain, two things come to mind: mobile computing – cell phones – and the Internet. Mobile computing made connectivity and access ubiquitous with massive penetration into our lives. Networks and apps began to create new social connections in the real world and lubricated communications between people in a myriad of ways. The Internet became not only a huge information repository and a venue for commerce, but also an engine of social connection. In short order, the adoption and use of the Internet and computing in the broader human World overtook and surpassed the use by scientists and business. Where once scientists used and knew computers better than anyone, now the World is full of people for whom computing is far more important than it is for science. Scientists were once in the lead, and now they are behind. Worse yet, science is not adapting to this new reality.

[…] that Google solved is firmly grounded in scientific computing and applied mathematics. It is easy to see how massive the impact of this solution is. Today we in scientific computing are getting further and further from relevance to society. This niche does scientific computing little good because it is swimming against a tide that is more like a tsunami. The result is a horribly expensive and marginally effective effort that will fail needlessly where it has the potential to provide phenomenal value.

Ideally, it should not be, but proving that ideal is a very high bar that is almost never met. A great deal of compelling evidence is needed to support an assertion that the code is not part of the model. The real difficulty is that the more complex the modeling problem is, the more the code is definitely and irreducibly part of the model. These complex models are the most important uses of modeling and simulation. The complex models of engineered things, or important physical systems, have many submodels, each essential to successful modeling. The code is often designed quite specifically to model a class of problems. The code then becomes a clear part of the definition of the problem. Even in the simplest cases, the code includes the recipe for the numerical solution of a model. This numerical solution leaves its fingerprints all over the solution of the model. The numerical solution is imperfect and contains errors that influence the solution. For a code, there is the mesh and geometric description plus boundary conditions, not to mention the various modeling options employed. Removing the specific details of the implementation of the model in the code from consideration as part of the model becomes increasingly intractable.

[…] central to the conduct of science and engineering that it should be dealt with head on. It isn’t going away. We model our reality when we want to make sure we understand it. We engage in modeling when we have something in the Real World that we want to demonstrate an understanding of. Sometimes this is for the purpose of understanding, but ultimately this gives way to manipulation, the essence of engineering. The Real World is complex and effective models are usually immune to analytical solution.

[…] are implemented in computer code, or “a computer code”. The details and correctness of the implementation become inseparable from the model itself. It becomes quite difficult to extract the model as any sort of pure mathematical construct; the code is intimately part of it.

[…] requiring detailed verification and validation. It is an abstraction and representation of the processes we believe produce observable physical effects. We theorize that the model explains how these effects are produced. Some models are not remotely this high minded; they are nothing but crude empirical engines for reproducing what we observe. Unfortunately, as phenomena become more complex, these crude models become increasingly essential to modeling. They may not play a central role in the modeling, but still provide necessary physical effects for utility. These submodels, necessary to produce realistic simulations, become ever more prone to include these crude empirical engines as problems enter the engineering realm. As the reality of interest becomes more complicated, the modeling becomes elaborate and complex, a deep chain of efforts to grapple with these details.

[…] impact of numerical approximation. The numerical uncertainty needs to be accounted for to isolate the model. This uncertainty defines the level of approximation in the solution to the model, and the deviation from the mathematical idealization the model represents. In the validation work actually presented, we see a stunning lack of this essential step. Another big part of validation is recognizing the subtle differences between calibrated results and predictive simulation. Again, calibration is rarely elaborated in validation to the degree that it should be.
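
As a rough illustration of what accounting for numerical uncertainty can look like, here is a minimal sketch of a Richardson-extrapolation estimate from three mesh resolutions. It assumes a scalar quantity of interest, a constant refinement ratio `r`, and solutions in the asymptotic convergence range; the function names and the safety factor of 1.25 (a conventional choice for three-grid studies) are assumptions for illustration, not something taken from this post.

```python
import math

def observed_order(f_coarse, f_medium, f_fine, r):
    """Observed convergence order from three solutions on meshes refined by ratio r."""
    num = f_coarse - f_medium
    den = f_medium - f_fine
    if den == 0 or num / den <= 0:
        # Oscillating or stalled sequences give no meaningful order.
        raise ValueError("solutions are not converging monotonically")
    return math.log(num / den) / math.log(r)

def numerical_uncertainty(f_coarse, f_medium, f_fine, r, safety=1.25):
    """Return (observed order, extrapolated value, uncertainty on the fine-mesh value)."""
    p = observed_order(f_coarse, f_medium, f_fine, r)
    # Richardson estimate of the error still present in the fine-mesh solution.
    err = (f_fine - f_medium) / (r**p - 1.0)
    extrapolated = f_fine + err
    return p, extrapolated, safety * abs(err)
```

If the observed order is far from the theoretical order of the method, or the function raises because the sequence is not converging, that is itself evidence worth reporting rather than hiding.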

[…] strong function of the discretization and solver used in the code. The question of whether the code matters comes down to asking if another code used skillfully would produce a significantly different result. This is rarely, if ever, the case. To make matters worse, verification evidence tends to be flimsy and half-assed. Even if we could make this call and ignore the code, we rarely have evidence that this is a valid and defensible decision.

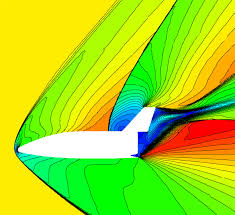

Another common thread to horribleness is the increasing tendency for science and engineering to be marketed. The press release has given way to the tweet, but the sentiment is the same. Science is marketed for the masses who have no taste for the details necessary for high quality work. A deep problem is that this lack of focus and detail is creeping back into science itself. Aspects of scientific and engineering work that used to be utterly essential are becoming increasingly optional. Much of this essential intellectual labor is associated with the hidden aspects of the investigation. These are things related to mathematics, checking for correctness, assessment of error, preceding work, various doubts about results and alternative means of investigation. This sort of deep work has been crowded out by flashy graphics, movies and undisciplined demonstrations of vast computing power.

engineering. These terrible things would be awful with or without a computer being involved. Other things come from a lack of understanding of how to add computing to an investigation in a quality-focused manner. The failure to recognize the multidisciplinary nature of computational science is often at the root of many of the awful things I will now describe.

background work needed to create high quality results. A great deal of modeling is associated with bounding uncertainty or bounding the knowledge we possess. A single calculation is incapable of this sort of rigor and focus. If you see a single massive calculation as the sole evidence of work, you should smell and call “bullshit”.

l error bars are an endangered species. We never see them in practice even though we know how to compute them. They should simply be a routine element of modern computing. They are almost never demanded by anyone, and their lack never precludes publication. It certainly never precludes a calculation being promoted as marketing for computing. If I were cynically minded, I might even say that error bars, when used, are opposed to marketing the calculation. The implicit message in the computing marketing is that the calculations are so accurate that they are basically exact, with no error at all. If you don’t see error bars or some explicit discussion of uncertainty, you should see the calculation as flawed, and potentially simply bullshit.
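One widely used way to attach a numerical error bar to a computed quantity is Richardson extrapolation with a safety factor, in the style of Roache's grid convergence index. The sketch below is only an illustration: the two grid results, the refinement ratio, the assumed convergence order, and the safety factor are all assumptions, and a larger safety factor is usually advised when the order has not been confirmed on additional grids.

```python
def richardson_error_bar(f_fine, f_coarse, ratio, order, safety=1.25):
    """Estimate an error bar for the fine-grid result.

    f_fine, f_coarse: the same quantity computed on two grids
    ratio: grid refinement ratio (coarse spacing / fine spacing)
    order: assumed convergence order of the method
    safety: multiplier on the Richardson error estimate (an assumption)
    """
    delta = f_fine - f_coarse
    denom = ratio**order - 1.0
    extrapolated = f_fine + delta / denom  # Richardson extrapolation
    error_bar = safety * abs(delta) / denom
    return extrapolated, error_bar


# Hypothetical results from two grids with spacing halved between them.
f_coarse, f_fine = 0.9700, 0.9925
estimate, bar = richardson_error_bar(f_fine, f_coarse, ratio=2.0, order=2.0)
print(f"fine-grid result: {f_fine:.4f} +/- {bar:.4f} (extrapolated: {estimate:.4f})")
```

The cost is one additional, coarser calculation, which is small compared with the single massive calculation usually put on display.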

Taking the word of the marketing narrative is injurious to high quality science and engineering. The narrative seeks to defend the idea that buying these super expensive computers is worthwhile, and magically produces great science and engineering. In this telling, the path to advancing the impact of computational science flows dominantly through computing hardware. This is simply a deeply flawed and utterly naïve perspective. Great science and engineering is hard work and never a foregone conclusion. Getting high quality results depends on spanning the full range of disciplines associated with computational science, adaptively, as evidence and results demand. We should always ask hard questions of scientific work, and demand hard evidence of claims. Press releases and tweets are renowned for simply being cynical advertisements and lacking all rigor and substance.

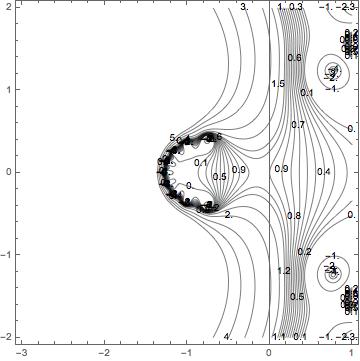

developments for solving hyperbolic PDEs in the 1980s. For a long time this was one of the best methods available to solve the Euler equations. It still outperforms most of the methods in common use today. For astrophysics, it is the method of choice, and it has also made major inroads into the weather and climate modeling communities. In spite of its having over 4000 citations, I can’t help but think that this paper wasn’t as influential as it could have been. This is saying a lot, but I think this is completely true. This is partly due to its style, and relative difficulty as a read. In other words, the paper is not as pedagogically effective as it could have been. The most complex and difficult to understand version of the method is presented in the paper. The paper could have used a different approach to great effect, perhaps providing a simplified version to introduce the reader and then delivering the more complex approach as a specific instance. Nonetheless, the paper was a massive milestone in the field.

Riemann solution. Advances that occurred later greatly simplified and clarified this presentation. This is a specific difficulty of being an early adopter of methods: the clarity of presentation and understanding is dimmed by purely narrative effects. Many of these shortcomings have been addressed in the recent literature discussed below.

elaborate ways of producing dissipation while achieving high quality. For very nonlinear problems this is not enough. The paper describes several ways of adding a little bit more; one of these is shock flattening, and another is an artificial viscosity. Rather than use the classical von Neumann-Richtmyer approach (which really is more like the Riemann solver), they add a small amount of viscosity using a technique developed by Lapidus that is appropriate for conservation form solvers. There are other techniques, such as grid-jiggling, that only really work with PPMLR and may not have any broader utility. Nonetheless, aspects of the thought process may be useful.

Riemann solvers with clarity and refinement hadn’t been developed by 1984. Nevertheless, the monolithic implementation of PPM has been a workhorse method for computational science. Through Paul Woodward’s efforts it is often the first real method to be applied to brand new supercomputers, and generates the first scientific results of note on them.

A year ago, I sat in one of my managers’ offices seething in anger. After Trump’s election victory, my emotions shifted from despair to anger seamlessly. At that particular moment, it was anger that I felt. How could the United States possibly have elected this awful man President? Was the United States so completely broken that Donald Trump was a remotely plausible candidate, much less the victor?

Apparently, the answer is yes, the United States is that broken. I said something to the effect that we too are to blame for this horrible moment in history. I knew that both of us voted for Clinton, but felt that we played our own role in the election of our reigning moron-in-chief. Today, a year into this national nightmare, the nature of our actions leading to this unfolding national and global tragedy is taking shape. We have grown to accept outright incompetence in many things, and now we have a genuinely incompetent manager as President. Lots of incompetence is accepted daily without even blinking; I see it every single day. We have a system that increasingly renders the competent incompetent through brutish compliance with directives born of broad-based societal dysfunction.

science, or really anything other than marketing himself. He is an utterly self-absorbed anti-intellectual completely lacking empathy and the basic knowledge we should expect him to have. The societal destruction wrought by this buffoon-in-chief is profound. Our most important institutions are being savaged. Divisions in society are being magnified and we stand on the brink of disaster. The worst thing is that this disaster is virtually everyone’s fault; whether you stand on the right or the left, you are to blame. The United States was in a weakened state and the Trump virus was poised to infect us. Our immune system was seriously compromised and failed to reject this harmful organism.

argument about the need to diminish it. The result has been a steady march toward dysfunction and poor performance along with deep-seated mistrust, if not outright disdain.

The Democrats are no better, apart from some basic human capacity for empathy. For example, the Clintons were quite competent, but competence is something we as a nation don’t need any more, or even believe in. Americans chose the incompetent candidate for President over the competent one. At the same time the Democrats feed into the greedy and corrupt nature of modern governance with a fervor only exceeded by the Republicans. They are what my dad called “limousine liberals” and really cater to the rich and powerful first and foremost while appealing to some elements of compassion (it is still better than “limousine douchebags” on the right). As a result the Democratic party ends up being only slightly less corrupt than the Republican party while offering none of the cultural red meat that drives the conservative culture warriors to the polls.

While both parties cater to the greedy needs of the rich and powerful, the difference between them shows most clearly in their approach to social issues. The Republicans appeal to traditional values along with enough fear and hate to bring the voters out. They stand in the way of scary progress and the future as the guardians of the past. They are the force that defends American values, which means white people and Christian values. With the Republicans, you can be sure that the Nation will treat those we fear and hate with violence and righteous anger without regard to effectiveness. We will have a criminal justice system that exacts vengeance on the guilty, but does nothing to reform or treat criminals. The same forces provide just enough racially biased policy to make the racists in the Republican ranks happy.

correctness. They are indeed “snowflakes” who are incapable of debate and standing up for their beliefs. When they don’t like what someone has to say, they attack them and completely oppose the right to speak. The lack of tolerance on the left is one of the forces that powered Trump to the White House. It did this through a loss of any moral high ground, and the production of a divided and ineffective liberal movement. The left has science, progress, empathy and basic human decency on its side yet continues to lose. A big part of its losing strategy is the failure to support each other, and engage in an active dialog on the issues they care so much about.

science. Both are wrought by the destructive tendency of the Republican party that makes governing impossible. They are a party of destruction, not creation. When Republicans are put in power they can’t do anything; their entire being is devoted to taking things apart. The Democrats are no better because of their devotion to compliance, regulation and compulsive rule following without thought. This tendency is paired with liberals’ inability to tolerate any discussion or debate over a litany of politically correct talking points.

If you are doing anything of real substance and performing at a high level, fuck ups are inevitable. The real key to the operation is the ability of technical competence to be faked. Our false confidence in the competent execution of our work is a localized harbinger of “fake news”.

on raising our level of excellence across the board in science and engineering. Our technical standards should be higher than ever because of the difficulty and importance of this enterprise. Requiring 100% success might seem to be a way to do this, but it isn’t.

This is the place where we get to the core of the ascent of Trump. When we lower our standards on leadership we get someone like Trump. The lowering of standards has taken place across the breadth of society. This is not simply national leadership, but corporate and social leadership. Greedy, corrupt and incompetent leaders are increasingly tolerated at all levels of society. At the Labs where I work, the leadership has to say yes to the government, no matter how moronic the direction is. If you don’t say yes, you are removed and punished. We now have leadership that is incapable of engaging in active discussion about how to succeed in our enterprise. The result is labs that simply take the money and execute whatever work they are given without regard for the wisdom of the direction. We now have the blind leading the spineless, and the blind are walking us right over the cliff. Our dysfunctional political system has finally shit the bed and put a moron in the White House. Everyone knows it, and yet a large portion of the population is completely fooled (or simply too foolish or naïve to understand how bad the situation is).

administration lessens the United States’ prestige. The world had counted on the United States for decades, but cannot any longer. We have made a decision as a nation that disqualifies us from a position of leadership. The Republican party has the greatest responsibility for this, but the Democrats are not blameless. Our institutional leadership shares the blame too. Places like the Labs where I work are being destroyed one incompetent step at a time. All of us need to fix this.

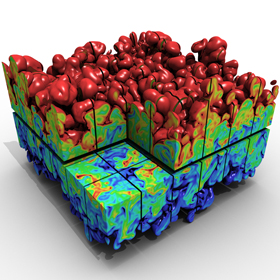

The saddest thing about DNS is the tendency for scientists’ brains to almost audibly click into the off position when it’s invoked. All one has to say is that their calculation is a DNS and almost any question or doubt leaves the room. No need to look deeper, or think about the results, we are solving the fundamental laws of physics with stunning accuracy! It must be right! They will assert that “this is a first principles” calculation, and predictive at that. Simply marvel at the truths waiting to be unveiled in the sea of bits. Add a bit of machine learning or artificial intelligence to navigate the massive dataset produced by DNS (the datasets are so fucking massive, they must have something good! Right?) and you have the recipe for the perfect bullshit sandwich. How dare some infidel cast doubt or uncertainty on the results! Current DNS practice is a religion within the scientific community, and brings an intellectual rot into the core of computational science. DNS reflects some of the worst wishful thinking in the field, where the desire for truth and understanding overwhelms good sense. A more damning assessment would be a tendency to submit to intellectual laziness when pressed by expediency, or difficulty in progress.

Let’s unpack this issue a bit and get to the core of the problems. First, I will submit that DNS is an unambiguously valuable scientific tool. A large body of work valuable to a broad swath of science can benefit from DNS. We can study our understanding of the universe in myriad ways at phenomenal detail. On the other hand, DNS is never a substitute for observations. We do not know the fundamental laws of the universe with such certainty that the solutions provide an absolute truth. The laws we know are models, plain and simple. They will always be models. As models, they are approximate and incomplete by their basic nature. This is how science works: we have a theory that explains the universe, and we test that theory (i.e., model) against what we observe. If the model reproduces the observations with high precision, the model is confirmed. This model confirmation is always tentative and subject to being tested with new or more accurate observations. Solving a model does not replace observations, ever, and some uses of DNS are masking laziness or limitations in observational (experimental) science.

approximate. The approximate solution is never free of numerical error. In DNS, the estimate of the magnitude of approximation error is almost universally lacking from results.

Part of doing science correctly is honesty about challenges. Progress can be made with careful consideration of the limitations of our current knowledge. Some of these limits are utterly intrinsic. We can observe reality, but various challenges limit the fidelity and certainty of what we can sense. We can model reality, but these models are always approximate. The models encode simplifications and assumptions. Progress is made by putting these two forms of understanding into tension. Do our models predict or reproduce the observations to within their certainty? If so, we need to work on improving the observations until they challenge the models. If not, the models need to be improved so that the observations are reproduced. The current use of DNS short-circuits this tension and acts to undermine progress. It wrongly puts modeling in the place of reality, which only works to derail necessary work on improving models, or work to improve observation. As such, poor DNS practices are actually stalling scientific progress.

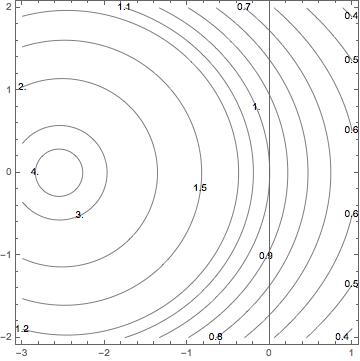

The standards of practice in verification of computer codes and applied calculations are generally appalling. Most of the time when I encounter work, I’m just happy to see anything at all done to verify a code. Put differently, most of the published literature accepts a slipshod practice in terms of verification. In some areas like shock physics, the viewgraph norm still reigns supreme. It actually reigns supreme in a far broader swath of science, but you talk about what you know. The missing element in most of the literature is quantitative analysis of results. Even when the work is better and includes detailed quantitative analysis, it usually lacks a deep connection with numerical analysis results. The typical best practice in verification only includes the comparison of the observed rate of convergence with the theoretical rate of convergence. Worse yet, the result is asymptotic and codes are rarely practically used with asymptotic meshes. Thus, standard practice is largely superficial, and only scratches the surface of the connections with numerical analysis.

The standards of practice in verification of computer codes and applied calculations are generally appalling. Most of the time when I encounter work, I’m just happy to see anything at all done to verify a code. Put differently, most of the published literature accepts slipshod practice in verification. In some areas like shock physics, the viewgraph norm still reigns supreme. It actually reigns over a far broader swath of science, but you talk about what you know. The missing element in most of the literature is quantitative analysis of results. Even when the work is better and includes detailed quantitative analysis, it usually lacks a deep connection with numerical analysis results. The typical best practice in verification only compares the observed rate of convergence with the theoretical rate of convergence. Worse yet, the theoretical result is asymptotic, and codes are rarely used in practice with meshes in the asymptotic range. Thus, standard practice is largely superficial, and only scratches the surface of the connections with numerical analysis.
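To make the comparison of observed and theoretical convergence rates concrete, here is a minimal sketch. It is an illustration only and not taken from any particular code: the test problem (the composite trapezoidal rule applied to the integral of sin(x) on [0, π], with exact value 2 and theoretical order 2) and the helper names `trapezoid` and `observed_order` are my own assumptions. The observed order comes from errors on two successive resolutions, p = log(e_coarse / e_fine) / log(r), where r is the refinement ratio.

```python
import math


def trapezoid(f, a, b, n):
    """Composite trapezoidal rule on [a, b] with n equal subintervals."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return h * total


def observed_order(err_coarse, err_fine, refinement_ratio=2.0):
    """Observed convergence rate from errors on two successive meshes."""
    return math.log(err_coarse / err_fine) / math.log(refinement_ratio)


# Assumed test problem: the integral of sin(x) over [0, pi] is exactly 2.
exact = 2.0
theoretical_order = 2.0  # known rate for the trapezoidal rule on smooth integrands

# Each resolution doubles the previous one, so the refinement ratio is 2.
resolutions = [16, 32, 64, 128]
errors = [abs(trapezoid(math.sin, 0.0, math.pi, n) - exact) for n in resolutions]
orders = [observed_order(errors[i], errors[i + 1]) for i in range(len(errors) - 1)]

for n, err in zip(resolutions, errors):
    print(f"n = {n:4d}   error = {err:.3e}")
for p in orders:
    print(f"observed order = {p:.3f}   (theoretical = {theoretical_order})")
```

A smooth problem like this one sits comfortably in the asymptotic range, which is exactly why it flatters the method. The harder and more honest exercise is to report the errors themselves, and the observed rates, at the resolutions actually used in applied calculations.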

ted in the shadows for years and years as one of Hollywood’s worst kept secrets. Weinstein preyed on women with virtual impunity, with his power and prestige acting to keep his actions in the dark. The promise and threat of his power in that industry gave him virtual license to act. The silence of the myriad insiders who knew about the pattern of abuse allowed the crimes to continue unabated. Only after the abuse came to light broadly, outside the movie industry, did it become unacceptable. While the abuse stayed in the shadows, and knowledge of it was limited to industry insiders, it continued.

Our current President is a serial abuser of power. Whether the target is the legal system, women, business associates or the American people, his entire life is constructed around abuse of power and the privileges of wealth. Many people are his enablers, and nothing enables it more than silence. Like Weinstein, his acts of sexual misconduct are many and well known, yet routinely go unpunished. Others either remain silent or ignore and excuse the abuse as being completely normal.

ower and ability to abuse it. They are an entire collection of champion power abusers. Like all abusers, they maintain their power through the cowering masses below them. When we are silent their power is maintained. They are moving to squash all resistance. My training was pointed at the inside of the institutions and instruments of government where they can use “legal” threats to shut us up. They have waged an all-out assault against the news media. Anything they don’t like is labeled as “fake news” and attacked. The legitimacy of facts has been destroyed, providing the foundation for their power. We are now being threatened in order to cut off the supply of facts on which resistance could be based. This training was the act of people wanting to rule like dictators in an authoritarian manner.

the set-up is perfect. They are the wolves and we, the sheep, are primed for slaughter. Recent years have witnessed an explosion in the amount of information deemed classified or sensitive. Much of this information is controlled because it is embarrassing or uncomfortable for those in power. Increasingly, information is simply hidden based on non-existent standards. This is a situation that is primed for abuse of power. People in positions of power can hide anything they don’t like. For example, something bad or embarrassing can be deemed to be proprietary or business-sensitive, and buried from view. Here the threats come in handy to make sure that everyone keeps their mouths shut. Various abuses of power can now run free within the system without risk of exposure. Add a weakened free press and you’ve created the perfect storm.


Too often we make the case that their misdeeds are acceptable because of the power their position grants to our causes. This is exactly the bargain Trump makes with the right wing, and Weinstein made with the left.
