Science is not about making predictions or performing experiments. Science is about explaining.

― Bill Gaede

We would be far better off removing the word “predictive” as a focus for science. If we replaced the emphasis on prediction with a focus on explanation and understanding, our science would improve overnight. The sense that our science must predict carries connotations that are unrelentingly counter-productive to the conduct of science. The side effects of this emphasis on predictivity undermine the scientific method at every turn. The goal of understanding nature and explaining what happens in the natural world, by contrast, is consistent with the conduct of high-quality science. In many respects, large swaths of the natural world are unpredictable in highly predictable ways. Our weather is a canonical example: as time scales grow longer, it becomes unpredictable, but in a bounded manner. Science focused on understanding and explanation revealed these truths. Under some circumstances, insisting on prediction is both foolhardy and technically impossible. Prediction should therefore be attempted carefully and thoughtfully, under well-chosen circumstances. We also need the freedom to find out that we are wrong and incapable of prediction. Ultimately, we need to find the limits of prediction and work either to push them back or to accept them.
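
Bounded unpredictability of this kind can be illustrated with the Lorenz-63 system, a three-variable toy model of atmospheric convection. The minimal sketch below uses the classic parameters (σ = 10, ρ = 28, β = 8/3) and fourth-order Runge-Kutta with an assumed step of 0.01. It integrates two initial conditions that differ by one part in 10⁸: the trajectories separate until they are effectively unrelated, yet both remain confined to the same bounded region. It is an illustration of the idea, not a weather model.

```python
import numpy as np

def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz-63 system with the classic parameters."""
    x, y, z = state
    return np.array([sigma * (y - x), x * (rho - z) - y, x * y - beta * z])

def rk4_trajectory(state, dt=0.01, steps=3000):
    """Integrate with classical fourth-order Runge-Kutta and keep every state."""
    out = np.empty((steps + 1, 3))
    out[0] = state
    for n in range(steps):
        s = out[n]
        k1 = lorenz(s)
        k2 = lorenz(s + 0.5 * dt * k1)
        k3 = lorenz(s + 0.5 * dt * k2)
        k4 = lorenz(s + dt * k3)
        out[n + 1] = s + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0
    return out

# Two initial conditions differing by 1e-8 in x.
a = rk4_trajectory(np.array([1.0, 1.0, 1.0]))
b = rk4_trajectory(np.array([1.0 + 1e-8, 1.0, 1.0]))

separation = np.linalg.norm(a - b, axis=1)
print(f"initial separation:   {separation[0]:.1e}")
print(f"largest separation:   {separation.max():.1f}")
print(f"largest |state| seen: {np.abs(np.vstack([a, b])).max():.1f}")
```

The tiny initial difference grows until it is as large as the attractor itself, so point predictions fail, while the bounded size of the states stays perfectly predictable.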

“Predictive science” is mostly just a buzzword. We put it in our proposals to improve our chances of getting funded. A slightly less cynical reading treats prediction as a purely aspirational objective for science. In today's world, we strive for predictive science as a means of confirming our mastery over a scientific subject. In this context, the word predictive implies that we understand the science well enough to foresee outcomes. We should also practice some deep humility about what this means. Predictivity is always a limited statement, and its limitations should be kept firmly in mind. First, predictions hold only for some subset of what can be measured and fail for other quantities. The question is whether the predictions are correct for the quantities that matter. Second, our understanding is always waiting to be disproved by a reality that is more complex than we realize. Good science is acutely aware of these limitations and actively probes the boundary of our understanding.

In the modern world we constantly have new tools to help expand our understanding of science. Among the most important of these new tools is modeling and simulation, which is simply an extension of the classical scientific approach. Computers allow us to solve our models more generally than classical analytical means can. This has increased the importance and role of models in science: we can pose more complex models and obtain more general solutions to them computationally. This power comes with substantial responsibility; computational simulations are highly technical and difficult. They come with a host of potential flaws, errors and uncertainties that cloud results and need focused assessment. For computation to play a significant role in the scientific enterprise, getting it right and assessing it requires a broad, multidisciplinary approach with substantial rigor. Verification and validation (V&V) plays a broad, integrating role in predictive science. In a nutshell, V&V is the scientific method as applied to modeling and simulation. Its outcomes are essential for making any claims about how predictive your science is.

Experiment is the sole source of truth. It alone can teach us something new; it alone can give us certainty.

― Henri Poincaré

We can take a moment to articulate the scientific method and then restate it in a modern context using computational simulation. The scientific method involves making hypotheses about the universe and testing those hypotheses against observations of the natural world. One of the key ways to make observations is through experiments, where measurements of reality are controlled and focused to elucidate nature more clearly. These hypotheses or theories usually produce models of reality, which take the form of mathematical statements. These models can be used to predict what an observation will be; an observation that agrees with the prediction supports the hypothesis. If the observations conflict with the model’s predictions, the hypothesis and model need to be discarded or modified. Over time observations become more accurate, often exposing the flaws in models. This usually means a model needs to be refined rather than thrown out. This process is the source of progress in science. In a sense, it is a competition between what we observe and how well we observe it, on one side, and the quality of our models of reality, on the other. Predictions are the crucible where this tension is realized.

The quest for absolute certainty is an immature, if not infantile, trait of thinking.

― Herbert Feigl

One of the best ways to understand how to do predictive science with modeling and simulation starts from a simple realization: V&V is basically a methodology that encodes the scientific method into modeling and simulation. Everything in V&V serves to assure that science is being done with a simulation and that we aren’t fooling ourselves. Verification is all about making sure the implementation of the model and its solution are credible and correct. The second half of verification is estimating the errors in the numerical solution of the model. We need to assess the numerical uncertainty and the degree to which it clouds the model’s solution.
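
The first half of verification is often checked by confirming that a solver converges to a known exact solution at its theoretical rate. The sketch below assumes a hypothetical test problem, −u'' = f on [0, 1] with zero boundary values and f chosen so that u = sin(πx) is the exact answer (the method of manufactured solutions), solved with second-order central differences. An observed order near 2 is evidence that the implementation is correct; a clearly lower order points to a bug.

```python
import numpy as np

def solve_poisson_1d(n):
    """Second-order finite differences for -u'' = f on [0, 1], u(0) = u(1) = 0.
    Returns the interior nodes and the discrete solution there."""
    h = 1.0 / n
    x = np.linspace(h, 1.0 - h, n - 1)           # interior nodes, spacing h
    f = np.pi**2 * np.sin(np.pi * x)             # manufactured so that u = sin(pi x)
    A = (np.diag(2.0 * np.ones(n - 1))
         - np.diag(np.ones(n - 2), 1)
         - np.diag(np.ones(n - 2), -1)) / h**2
    return x, np.linalg.solve(A, f)

errors = []
for n in (16, 32, 64):
    x, u = solve_poisson_1d(n)
    errors.append(np.max(np.abs(u - np.sin(np.pi * x))))

# Observed order from successive halvings of h: p = log2(e_coarse / e_fine).
orders = [np.log2(errors[i] / errors[i + 1]) for i in range(len(errors) - 1)]
print("max errors:", errors)
print("observed orders:", orders)
```

The same check applies to any solver: pick a problem with a known answer, refine, and compare the observed order with the order the method claims.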

Validation is then the structured comparison of the simulated model’s solution with observations. Validation is never finished; it is an assessment of the work done so far. At the end of a validation process, evidence has been accumulated about the state of the model. Is the model consistent with the observations? If so, the uncertainties in the modeling and simulation process, together with the uncertainties in the observations, may support the conclusion that the model is correct enough to be used. In many cases the model is found to be inadequate for the purpose and needs to be modified or changed completely. This process is simply the hypothesis testing so central to the conduct of science.
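
One widely used way to structure this comparison is the approach of the ASME V&V 20 standard: compute the comparison error E = S − D between the simulation S and the data D, and set it against a validation uncertainty that combines numerical, input and experimental uncertainties. The sketch below assumes independent standard uncertainties combined in quadrature and a coverage factor k = 2; the class name, field names and all numbers are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class ValidationPoint:
    simulated: float   # S: simulation result
    measured: float    # D: experimental value
    u_num: float       # standard uncertainty from numerical error
    u_input: float     # standard uncertainty from uncertain model inputs
    u_data: float      # standard uncertainty of the measurement itself

    def comparison_error(self) -> float:
        return self.simulated - self.measured  # E = S - D

    def validation_uncertainty(self) -> float:
        # Assumes independent sources, so they combine in quadrature.
        return math.sqrt(self.u_num**2 + self.u_input**2 + self.u_data**2)

    def verdict(self, k: float = 2.0) -> str:
        # k is an assumed coverage factor, not a universal constant.
        if abs(self.comparison_error()) > k * self.validation_uncertainty():
            return "model error evident: disagreement exceeds the uncertainties"
        return "no evidence of model error at this uncertainty level"

# Hypothetical numbers, purely illustrative.
point = ValidationPoint(simulated=102.0, measured=95.0, u_num=1.5, u_input=2.0, u_data=1.0)
noisy = ValidationPoint(simulated=102.0, measured=95.0, u_num=1.5, u_input=2.0, u_data=4.0)
print(point.verdict())
print(noisy.verdict())
```

The two cases share the same disagreement; only the experimental uncertainty differs, and that alone changes whether the comparison can reveal a flaw in the model.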

Since all models are wrong the scientist cannot obtain a “correct” one by excessive elaboration. On the contrary following William of Occam he should seek an economical description of natural phenomena. Just as the ability to devise simple but evocative models is the signature of the great scientist so overelaboration and overparameterization is often the mark of mediocrity.

― George Box

Now it would be very remarkable if any system existing in the real world could be exactly represented by any simple model. However, cunningly chosen parsimonious models often do provide remarkably useful approximations. For example, the law PV = RT relating pressure P, volume V and temperature T of an “ideal” gas via a constant R is not exactly true for any real gas, but it frequently provides a useful approximation and furthermore its structure is informative since it springs from a physical view of the behavior of gas molecules.

― George Box

The George Box maxim that all models are wrong, but some are useful, is key to the conduct of V&V. It is also central to modeling and simulation’s most important perspective: the constant need for improvement. Every model is a mathematical abstraction with a limited capacity for explaining nature. At the same time, a model may be useful enough to explain everything we can currently measure. This does not mean that the model is right or perfect; it means the model is adequate. The creative tension in science is the narrative arc of refining hypotheses and models of reality, or improving measurements and experiments to test the models more acutely. V&V is a process for achieving this end in computational simulations. Our goal should always be to find inadequacies in models and define the demand for improvement. If we do not have the measurements to demonstrate a model’s incorrectness, the experiments and measurements need to improve. All of this clearly serves progress in science.

The law that entropy always increases holds, I think, the supreme position among the laws of Nature. If someone points out to you that your pet theory of the universe is in disagreement with Maxwell’s equations — then so much the worse for Maxwell’s equations. If it is found to be contradicted by observation — well, these experimentalists do bungle things sometimes. But if your theory is found to be against the second law of thermodynamics I can give you no hope; there is nothing for it but to collapse in deepest humiliation.

― Sir Arthur Stanley Eddington

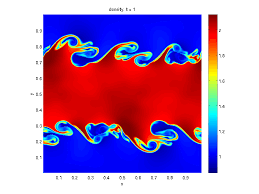

Let’s take a well-regarded and widely accepted model, the incompressible Navier-Stokes equations. This model is thought to contain most of the proper physics of fluid mechanics, most notably turbulence. Perhaps this is true, although our lack of progress on turbulence might indicate that something is amiss. I will state without doubt that the incompressible Navier-Stokes equations are wrong in some clear and unambiguous ways. The deepest problem with the model is incompressibility. Incompressible fluids do not exist, and the form of the mass equation, which demands a divergence-free velocity field, implies several deeply unphysical things. All materials in the universe are compressible and support sound waves, and this constraint contradicts that truth. Incompressible flow is largely divorced from thermodynamics, yet real materials are thermodynamic. The system of equations also violates causality rather severely: sound waves travel at infinite speed. All of this is true, but at the same time this system of equations is undeniably useful. There are large categories of fluid physics that it explains remarkably well. Nonetheless, the equations are also obviously unphysical. Whether or not this unphysical character is consequential is something people should keep in mind.
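
To make the causality point concrete, assume constant density ρ and kinematic viscosity ν. Taking the divergence of the momentum equation and using the divergence-free constraint eliminates both the time derivative and the viscous term, leaving a Poisson equation for the pressure:

```latex
\begin{aligned}
\frac{\partial \mathbf{u}}{\partial t} + (\mathbf{u}\cdot\nabla)\mathbf{u}
  &= -\frac{1}{\rho}\nabla p + \nu\,\nabla^{2}\mathbf{u},
  \qquad \nabla\cdot\mathbf{u} = 0, \\
\nabla^{2} p
  &= -\rho\,\nabla\cdot\big[(\mathbf{u}\cdot\nabla)\mathbf{u}\big]
   = -\rho\,\frac{\partial u_j}{\partial x_i}\,\frac{\partial u_i}{\partial x_j}.
\end{aligned}
```

Because this equation is elliptic, the pressure at every point responds instantly to the velocity field everywhere in the domain. Equivalently, the sound speed c, defined by c² = (∂p/∂ρ)ₛ, becomes infinite when the density is not allowed to change with pressure.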

It is impossible to trap modern physics into predicting anything with perfect determinism because it deals with probabilities from the outset.

― Arthur Stanley Eddington

In conducting predictive science, one of the most important things you can do is make a prediction. While you might start with something where you expect the prediction to be correct (or correct enough), the real learning comes from predictions that turn out to be wrong. It is wrong predictions that teach you something. Sometimes what you learn is that your measurement or experiment needs to be refined. At other times the wrong prediction can be traced back to the model itself. This is your demand and opportunity to improve the model. Is the difference due to something fundamental in the model’s assumptions? Or can it be fixed simply by adjusting the model's closure? Too often we view failed predictions as problems when they are instead opportunities to improve the state of affairs. I might posit that even a successful prediction is a call to improvement: either improve the measurement and experiment, or the model. Experiments should set out to expose flaws in the models, and when they do, the model needs to be improved. Successful predictions are simply not vehicles for improving scientific knowledge; they tell us we need to do better.

When the number of factors coming into play in a phenomenological complex is too large scientific method in most cases fails. One need only think of the weather, in which case the prediction even for a few days ahead is impossible.

― Albert Einstein

In this context, we can view predictions as things that, at some level, we want to fail at. If a prediction is too easy, the experiment is not sufficiently challenging. Success and failure exist on a continuum. For simple enough predictions our models will always work, and for complex enough predictions the models will always fail. The trick is finding the spot where predictions are on the edge of credibility, and where progress is both needed and ripe. Too often we adopt the mindset that predictions need to be successful. An experiment that is easy to predict is not a success; it is a waste. I would rather see predictions focused at the edge between success and failure. If we are interested in making progress, predictions need to fail so that models can improve. By the same token, a successful prediction indicates that the experiment and measurement need to be improved to challenge the models properly. The real art of predictive science is working at the edge of our predictive modeling capability.

A healthy focus on predictive science with a taste for failure is a strong driver for lubricating the scientific method and for integrating modeling and simulation as a valuable tool. Prediction requires two sides of science to work in concert: experimental observation of the natural world, and modeling of the natural world via mathematical abstraction. The better the observations and experiments, the greater the challenge to models. Conversely, the better the model, the greater the challenge to observations. We need to tee up the tension between how we sense and perceive the natural world and how we understand that world through modeling. It is important to examine where the ascendancy in science lies. Are the observations too good for the models? Or can no observation challenge the models? The answer tells us clearly where to set our priorities.

We need to understand where progress is needed to advance science, and to take advantage of technology to move ahead on either front. If observations are already quite refined but new technology exists to improve them, it behooves us to use it. By the same token, modeling can be improved via new technology such as better solution methods, algorithmic improvements and faster computers. What is lacking from the current dialog is a clear focus on where the imperative for progress lies. Part of integrating predictive science well is determining where progress is most needed. We can then bias our efforts toward those areas while keeping other opportunities for improvement in mind.

The important word I haven’t mentioned yet is “uncertainty”. We cannot have predictive science without dealing with uncertainty and its sources. In general, we systematically, perhaps even pathologically, underestimate how uncertain our knowledge is. We like to believe our experiments and models are more certain than they actually are. This is really easy to do in practice. For many categories of experiments, we ignore sources of uncertainty and simply get away with an estimate of zero for them. If we do a single experiment, we never have to confront explicitly that the experiment isn’t completely reproducible. On the modeling side, we treat the particular experiment as something to be modeled precisely, even if the phenomena of interest are highly variable. This is common and a source of willful cognitive dissonance. Rather than confront this rather fundamental uncertainty, we willfully ignore it. We do not run replicate experiments and measure the variation in results. We do not subject the modeling to reasonable variations in the experimental conditions and check the variation in the results. We pretend that the experiment is completely well-posed, and the model is too. In doing this we fail at the scientific method rather profoundly.
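
A minimal sketch of the two neglected checks, using only Python's standard library: the spread of replicate measurements, and simple Monte Carlo propagation of plausible variations in the experimental conditions through a model. The model, the replicate values and the input distributions are all hypothetical placeholders.

```python
import random
import statistics

def toy_model(velocity, temperature):
    """Hypothetical stand-in for a simulation; any smooth response would do."""
    return 0.5 * velocity**2 + 0.1 * temperature

# Hypothetical replicate measurements of the same nominal experiment.
replicates = [80.3, 78.9, 81.7, 79.5, 82.1]
single_shot = replicates[:1]

# With one shot there is no spread to compute; the "uncertainty" is zero by
# omission, not by evidence.
single_spread = statistics.stdev(single_shot) if len(single_shot) > 1 else 0.0
replicate_spread = statistics.stdev(replicates)

# Propagate assumed variation in the experimental conditions through the model.
rng = random.Random(42)
outputs = [toy_model(rng.gauss(10.0, 0.2), rng.gauss(300.0, 5.0)) for _ in range(10_000)]
model_spread = statistics.stdev(outputs)

print(f"single-shot spread: {single_spread:.3f}")
print(f"replicate spread:   {replicate_spread:.3f}")
print(f"model spread from input variation: {model_spread:.3f}")
```

Neither spread is visible from a single experiment and a single simulation; both appear only once the extra work is done.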

Another key source of uncertainty is numerical error. It is still common to present results without any sense of the numerical error. Typically, the mesh used for the calculation is asserted to be fine enough without any evidence. More commonly, the results are simply given without any comment at all. At the same time, the nation is investing huge amounts of money in faster computers on the implicit, a priori assumption that faster computers yield better solutions. This entire dialog often proceeds without any support from evidence; it is 100% assumption. When one examines these issues directly, there is often a large amount of numerical error being ignored. Numerical error is small in simple problems without complications. For real problems with real geometry, real boundary conditions and real constitutive models, the numerical errors are invariably significant. One should expect some evidence of their magnitude to be presented, and you should be suspicious if it’s not there. Too often we simply give simulations a pass on this detail and fail in our due diligence.
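
Evidence about numerical error does not require an exact solution. One standard technique is Richardson extrapolation from three systematically refined calculations: the results yield an observed order of accuracy and an estimate of the error left in the finest one. In the sketch below a central-difference derivative of sin(x) stands in, hypothetically, for a mesh calculation, with step size playing the role of mesh spacing; the refinement ratio of 2 and the coarsest step of 0.1 are assumptions.

```python
import math

def central_difference(h, x=1.0):
    """Stand-in 'simulation': second-order approximation of d/dx sin(x) with step h."""
    return (math.sin(x + h) - math.sin(x - h)) / (2.0 * h)

r = 2.0                               # refinement ratio between successive "meshes"
h_coarse = 0.1
f_coarse = central_difference(h_coarse)
f_medium = central_difference(h_coarse / r)
f_fine = central_difference(h_coarse / r**2)

# Observed order of accuracy from the three solutions alone.
p = math.log((f_coarse - f_medium) / (f_medium - f_fine)) / math.log(r)

# Richardson estimate of the error remaining in the finest solution.
error_estimate = (f_medium - f_fine) / (r**p - 1.0)

# Available here only because the toy problem has a known answer, cos(1).
true_error = f_fine - math.cos(1.0)
print(f"observed order p = {p:.3f}")
print(f"estimated error  = {error_estimate:.3e}")
print(f"true error       = {true_error:.3e}")
```

For real calculations the last step is unavailable, which is exactly why the estimate from the first three results matters; it is only trustworthy when the solutions are in the asymptotic range, which the observed order helps check.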

Truth has nothing to do with the conclusion, and everything to do with the methodology.

― Stefan Molyneux

In this sense, the entirety of V&V is a set of processes for collecting evidence about credibility and uncertainty. In one respect, verification is mostly an exercise in collecting evidence of credibility and of due diligence for quality in computational tools. Are the models, codes and methods implemented in a credible and high-quality manner? Has the code been developed carefully, with developers checking their work and doing a reasonable job of producing code without obvious bugs? Validation, in turn, could be characterized as collecting uncertainties. We find upon examination that many uncertainties are ignored in both computational and experimental work. Without these uncertainties and the evidence surrounding them, the entire practice of validation is untethered from reality. We are left to investigate through assumption and supposition. This sort of validation practice tends to regress to commonly accepted notions. In such an environment, models are usually accepted as valid, and evidence is often skewed toward that preordained conclusion. Without care and evidence, the engine of scientific progress is disconnected.

In this light, V&V is simply a structured way of collecting the evidence the scientific method requires. Collecting this evidence is difficult and requires assumptions to be challenged. Challenging assumptions is courting failure. Making progress requires failure and the invalidation of models. It requires doing experiments that our existing models fail to predict. We need to be sure that the model is the problem and that the failure isn’t due to numerical error. Identifying these predictive failures requires a good understanding of uncertainty in both experiments and computational modeling. The higher the quality of the experimental work, the more rigorously validation tests the model. We can then collect evidence about the correctness of the model and establish clear standards for judging improvements to it. The same goes for the uncertainty in computations, which needs evidence so that progress can be measured.

It doesn’t matter how beautiful your theory is … If it doesn’t agree with experiment, it’s wrong.

― Richard Feynman

Now we get to the rub for modeling and simulation in modern predictive science. To make progress, we need to fail to be predictive. In other words, we need to fail in order to succeed. Success should be measured by progress in becoming more predictive. We should take the perspective that predictivity is a continuum, not a state. One of the fundamental precepts of stockpile stewardship is predictive modeling and simulation. We want confident and credible evidence that we can faithfully predict certain essential aspects of reality. The only way to succeed at this mission is to keep challenging ourselves at the limit of our capability. This means that failure should be an almost constant state of being. The problem is projecting the sense of success that society demands while continually failing. We do not do this well. Instead, we feel compelled to project a sense that we succeed at everything we promise.

In the process, we create conditions where the larger goal of prediction is undermined at every turn. Rather than define success in terms of real progress, we produce artificial measures of success. A key to improving this state of affairs is an honest assessment of all of our uncertainties, both experimental and computational. There are genuine challenges to this honesty. Generally, the more work we do, the more uncertainty we unveil. This is true of experiments and computations alike. Think about examining replicate uncertainty in complex experiments. In most cases the experiment is done exactly once, and the prospect of reproducing it is completely avoided. As soon as replicate experiments are conducted, the uncertainty becomes larger. Before the replicates, this uncertainty was implicitly zero, and no one challenged that assertion. If we go back and revise our past assessments based on current knowledge, we run the very real risk of looking like we are moving backwards. The answer is not to continue this willful ignorance, but to offer a mea culpa and admit our former shortcomings. These mea culpas are also avoided, which backs the forces of progress into an ever-tighter corner.

The core of the issue is relentlessly psychological. People are uncomfortable with uncertainty and want to believe things are certain. They are uncomfortable with random events, and a sense of determinism is comforting. As such, modeling reflects these desires and beliefs. Experiments are similarly biased toward these beliefs. When we allow these beliefs to go unchallenged, the entire basis of scientific progress becomes unhinged. Confronting and challenging these comforting implicit assumptions may be the single most difficult challenge for predictive science. We are governed by assumptions that limit our actual capacity to predict nature. Admitting flaws in these assumptions and measuring how much we don’t know is essential for creating the environment necessary for progress. The fear of saying “I don’t know” is our biggest challenge. In many respects we are managed never to give that response. We need to admit what we don’t know and challenge ourselves to seek those answers.

Only a few centuries ago, a mere second in cosmic time, we knew nothing of where or when we were. Oblivious to the rest of the cosmos, we inhabited a kind of prison, a tiny universe bounded by a nutshell.

How did we escape from the prison? It was the work of generations of searchers who took five simple rules to heart:

- Question authority. No idea is true just because someone says so, including me.

- Think for yourself. Question yourself. Don’t believe anything just because you want to. Believing something doesn’t make it so.

- Test ideas by the evidence gained from observation and experiment. If a favorite idea fails a well-designed test, it’s wrong. Get over it.

- Follow the evidence wherever it leads. If you have no evidence, reserve judgment.

And perhaps the most important rule of all…

- Remember: you could be wrong. Even the best scientists have been wrong about some things. Newton, Einstein, and every other great scientist in history — they all made mistakes. Of course they did. They were human.

Science is a way to keep from fooling ourselves, and each other.

― Neil deGrasse Tyson

In today’s world, from work to private life to public discourse, experts are receding in importance. They used to be respected voices who added deep knowledge to any discussion, but not any more. Time and time again experts are being rejected by the current flow of events. Experts are messy and bring painful reality into focus. With the Internet, Facebook and the manufactured reality they allow, it’s just easier to dispense with the expert. One can replace the expert with a more comforting and simpler narrative. One can provide a politically tuned narrative framed to support an objective. One can simply take a page from the legal world and hire one’s own expert. The expert is a pain to control, and expertise is expensive. Today we can just make shit up and it’s just as credible as the truth, and much less trouble to manage. Our management culture, with its marketing focus, has no time for facts and experts to cloud matters. Why deal with the difficulties that reality offers when you can wish them away? The pitch for money is much cleaner without objective reality to make things hard. Since quality really doesn’t matter anyway, no one knows the difference. We live in the age of bullshit and lies. The expert is obsolete.

All of these horrors have been slowly dawning on me as I watch our broader world begin to go up in flames. The evening news is a cascade of ever more surreal and unbelievable events. The news has become absolutely painful to watch. A big part of this horrible discourse is the chants of “fake news” and the reality of it. The problems with fake news are permeating discourse across society. Science and scientific experts are no exception. A lack of confidence in, and credibility of, our sources of information is a broad problem. Unless the system values integrity, quality and truth, they will fade from view. Increasingly, the system values none of these things, and we are getting their opposites. For each of these things, experts act as gatekeepers. As such, they are to be pushed out of the way as impediments to success. The simple, politically crafted message that comforts those with a certain point of view is welcomed by the masses. The messy objective reality, with its subtle shadings and complexity, is something people would rather not examine.

One of the clearest characteristics of our current research environment is the dominance of money. This merely mirrors the role of money in society at large. Money has become the one-size-fits-all measuring stick for science, including for judging the quality of science. If something gets a lot of money, it must be good. Quality is defined by budget. This shallow mindset is incredibly corrupting, all the way from Labs like the one where I work to Universities and everything in between. Among the corrupting influences is the tendency for the promotion of science to morph into pure marketing. Science is increasingly managed as a marketing problem, and quality is equated with the potential to be flashy. In the wake of this attitude comes a loss of focus on the basics and fundamentals of managing research quality.

Money is a tool, just like a screwdriver, a pencil, or a gun. We have lost sight of this fact. Money has become a thing unto itself and has replaced, as an objective, the value it represents. Along the way the principles that should be attached to the money have also been scuttled. This entire ethos has infected society from top to bottom, with the moneyed interests at the top lording over those without money. Our research institutions are simply a focused reflection of these societal trends. They have a similar social stratification and a general loss of collective purpose and identity. Managers have become the most important thing, superseding science or mission in priority. Our staff are simply necessary details and utterly replaceable, especially when quality is an exercise in messaging. Expertise is a nuisance, and expert knowledge is something that only creates problems. This environment is tailored to a recession of science, knowledge and intellect from public life. This is exactly what we see in every corner of our society. In their place reign managers and the money they control. Quality and excellence are meaningless unless they come with dollars attached. This is our value system: everything is for sale.

The result of the system we have created is research quality in virtual freefall. The technical class has become part of the general underclass whose well-being is not the priority of this social order. Part of the rise of the management elite as the identity of organizations is driven by this focus on money. Managers look down into organizations for glitzy marketing ammo to help the money flow. The actual quality and meaning of the research are without value unless they come with lots of money. Send us your slide decks, and especially those beautiful, colorful graphics and movies. Those things sell the program and get the money in the door. That is what we are all about: selling to the customer. The customer is always right, even when they are wrong, as long as they have the cash. The program’s value is measured in dollars. Truth is measured in dollars, and is available for purchase. We are obsessed with metrics, and organizations far and wide work hard to massage them to look good. Things like peer review are to be managed, and can generally be politicked into something that makes organizations look good. In the process every bit of ethics and integrity can be squeezed out. These managers have rewritten the rules to make this all kosher. They are clueless about how corrosive and damaging all of this is to the research culture.

our highest leadership today. We are not led by people with integrity, ethics or basic competence. The United States has installed a rampant symptom of corruption and incompetence in its highest office. Trump is not the problem; he is a symptom of the problem. He may become a bigger problem if allowed to reign too long, turning into a secondary infection. He exemplifies every single issue we have with ethics, integrity and competence to an almost cartoonish magnitude. Donald Trump is the embodiment of every horrible boss you’ve ever had, amplified to an unimaginable degree. He is completely and utterly unfit for the job of President, whether measured by intellect, demeanor, ethics, integrity or philosophy. He is pathologically incurious. He is a rampant narcissist whose only concern is himself. He is lazy and incompetent. He is likely a career white-collar criminal who has used money and privilege to escape legal consequences. He is a gifted grifter and con man (whose greatest con is getting this office). He has no governing philosophy or moral compass. He is a racist, a bigot and a serial abuser of women.

In a nutshell, Donald Trump is someone you never want to meet and someone who should never wield the power of his current office. You don’t want him as your boss; he will make your life miserable and throw you under the bus if it suits him. He is a threat to our future, both physically and morally. In the context of this discussion he is the exemplar of what ails the United States, including the organizations that conduct research. He stands as the symbol of what the management class represents. He is decay. He is incompetence. He is a pathological liar. He is worthy of no respect or admiration, save for his ability to fool millions. He is the supremacy of marketing over substance. He has no idea how ironic his mantra “make America great again” is, undermined as it is by his every breath. His rise to power is the clearest example of how our greatness as a nation has been lost, and his every action accelerates our decline. People across the World have lost faith in the United States for good reason. Any country that elected this moronic, unethical con man as leader is completely untrustworthy. No one symbolizes our fall from greatness more completely than Donald Trump as President.

The long-term impact could well be catastrophic. We can only fake it for so long before it catches up with us. We can allow our leadership to demonstrate such radical disregard for those they lead for only so long. The lack of integrity, ethics and morality from our leadership, even when approved by society, will create damage that our culture cannot sustain. Even if we measure things through the faulty lens of money, the problems are obvious. Money has been flowing steadily into the pockets of the very rich and the management class and away from societal investment. We have been starving our infrastructure for decades. Our roads are awful, and bridges will collapse. 21st Century infrastructure is a pipe dream. Our investments in research and development have been declining over the same time frame, sacrificed for short-term profit. At the same time the wealth of the rich has grown, and inequality has become profound and historically unprecedented. These trends are closely correlated. This correlation is not incidental; it reflects a change in the priorities of society to favor wealth accumulation. The decline of research is simply another symptom.

After monotonicity-preserving methods came along and revolutionized the numerical solution of hyperbolic conservation laws, people began pursuing follow-on breakthroughs. Thus far nothing has appeared that amounts to a real breakthrough, although progress has been made. There are some very good reasons for this, and understanding them helps us see where and how progress might be made. As I noted several weeks ago in the blog post about Total Variation Diminishing (TVD) methods, the monotonicity-preserving breakthrough came in several stages. The methods were invented by practitioners who were solving difficult practical problems. This process drove the innovation in the methods. Once the methods received significant notice as a breakthrough, the math came along to bring rigor and explanation to the methodology. The math produced a series of wonderful connections to theory that gave the results legitimacy, and the theory also connected the methods to the earlier methods dominating the codes at that time. People were very confident about the methods once mathematical theory was present to provide structural explanations. With essentially non-oscillatory (ENO) methods, the math came first. This is the very heart of the problem.

As I noted, the monotonicity-preserving methods came first, and total variation theory followed to make them feel rigorous and tie them to solid mathematical expectations. Before this, the monotonicity-preserving methods felt sort of magical and unreliable. The math solidified the hold of these methods and allowed people to trust the results they were seeing. With ENO, the math came first, and the methods expressed a specific mathematical intent. The methods were not created to solve hard problems, although they had some advantages for some hard problems. This created a number of issues that these methods could not overcome. First and foremost was fragility, followed by a lack of genuine efficacy. The methods would tend to fail when confronted with real problems and didn’t give better results for the same cost. More deeply, the methods didn’t have the pedigree of doing something amazing that no one had seen before. ENO methods had no pull.
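
To make the monotonicity-preserving idea concrete, here is a minimal sketch; it is one textbook example, not the historical methods discussed above. It is a second-order upwind scheme for linear advection, u_t + a u_x = 0 with a > 0, on a periodic grid, using a minmod-limited slope, alongside the same scheme with the limiter switched off (which reduces to Lax-Wendroff). The function names, the square-wave test and the Courant number of 0.5 are my own assumptions for illustration. Harten’s total variation theory is what guarantees that the limited version never increases the discrete total variation for Courant numbers between 0 and 1.

```python
import numpy as np

def minmod(a, b):
    """Smaller-magnitude argument where a and b share a sign, zero otherwise."""
    return np.where(a * b > 0.0, np.sign(a) * np.minimum(np.abs(a), np.abs(b)), 0.0)

def total_variation(u):
    """Discrete total variation on a periodic grid: sum over i of |u[i+1] - u[i]|."""
    return float(np.sum(np.abs(np.roll(u, -1) - u)))

def advect_step(u, c, limited=True):
    """Advance u_t + a*u_x = 0 (a > 0) by one step on a periodic grid.

    c = a*dt/dx is the Courant number, assumed to satisfy 0 <= c <= 1.
    limited=True uses a minmod-limited slope (a TVD scheme); limited=False
    uses the unlimited difference u[i+1] - u[i], which is Lax-Wendroff.
    """
    du_left = u - np.roll(u, 1)    # u[i] - u[i-1]
    du_right = np.roll(u, -1) - u  # u[i+1] - u[i]
    slope = minmod(du_left, du_right) if limited else du_right
    # Upwind value at face i+1/2, including the half-step time correction.
    u_face = u + 0.5 * (1.0 - c) * slope
    flux = c * u_face
    # Conservative update: difference of the fluxes at faces i+1/2 and i-1/2.
    return u - (flux - np.roll(flux, 1))

# Hypothetical test problem: a square wave, where unlimited schemes ring.
u0 = np.zeros(100)
u0[30:60] = 1.0

u_tvd, u_lw = u0.copy(), u0.copy()
for _ in range(50):
    u_tvd = advect_step(u_tvd, 0.5, limited=True)
    u_lw = advect_step(u_lw, 0.5, limited=False)

print(total_variation(u0), total_variation(u_tvd), total_variation(u_lw))
```

The square wave starts with a total variation of 2, and the limited run should stay at or below that value up to rounding. With the limiter off, a single step already undershoots below 0 and overshoots above 1 next to the jumps (by c(1 - c)/2, which is 0.125 here), so the total variation rises above 2. The limiter works by flattening the slope to zero wherever neighboring differences disagree in sign, i.e., at extrema, which is also why such schemes drop to first order there.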

ENO methods were devised to move beyond TVD methods. ENO took the adaptive discrete representation to new heights. Aside from the “adaptive” aspect, the new method was a radical departure from those that preceded it. The math itself was mostly notional and fuzzy, lacking a firm connection to the preceding work. If you had invested in TVD methods, the basic machinery you used had to be completely overhauled for ENO. The method also came with very few guarantees of success. Finally, it was expensive and suffered from numerous frailties. It was a postulated exploration of interesting ideas, but in the mathematical frame, not the application frame. Its development also happened at a time when applied mathematics began to abandon applications in favor of a more abstract and remote connection via packaged software.
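
The “adaptive discrete representation” at the core of ENO can be sketched in a few lines. This is an illustration under stated assumptions, not any particular production implementation: a uniform grid, cell averages, third order (k = 3), and the standard reconstruction coefficients tabulated in Shu’s lecture notes on ENO and WENO schemes. The function names are hypothetical. The stencil grows one cell at a time toward whichever side has the smaller undivided difference, so it steers away from a discontinuity instead of limiting a slope. There is no boundary handling, so the caller must supply two ghost cells on each side.

```python
import numpy as np

# Third-order (k = 3) ENO reconstruction coefficients on a uniform grid, as
# tabulated in Shu's ENO/WENO lecture notes: the value at face i+1/2 is
# sum_j C[r][j] * ubar[i - r + j], where r is how far the stencil shifts left.
ENO3_COEFFS = {
    0: (1/3, 5/6, -1/6),   # cells i, i+1, i+2
    1: (-1/6, 5/6, 1/3),   # cells i-1, i, i+1
    2: (1/3, -7/6, 11/6),  # cells i-2, i-1, i
}

def eno_left_shift(ubar, i, k=3):
    """Choose the ENO stencil of k cells for cell i and return its left shift r.

    Starting from cell i alone, the stencil grows one cell at a time toward
    the side whose undivided difference of the next order is smaller in
    magnitude, i.e. away from steep gradients and discontinuities.
    """
    left = i  # index of the leftmost cell in the current stencil
    for order in range(1, k):
        diff_left = np.diff(ubar[left - 1:left + order], n=order)[0]
        diff_right = np.diff(ubar[left:left + order + 1], n=order)[0]
        if abs(diff_left) < abs(diff_right):
            left -= 1
    return i - left

def eno3_face_value(ubar, i):
    """Third-order ENO value at face i+1/2 from cell averages ubar.

    No boundary handling: cell i needs two cells on each side (ghost cells).
    """
    r = eno_left_shift(ubar, i, k=3)
    return sum(c * ubar[i - r + j] for j, c in enumerate(ENO3_COEFFS[r]))

# Usage on a hypothetical unit step: five cells of 0, then five cells of 1.
step = np.array([0.0] * 5 + [1.0] * 5)
print([eno3_face_value(step, i) for i in range(2, 8)])
```

On the step, cell 5 (the first cell of ones) picks cells 5, 6 and 7, so its face value is 1 up to rounding. The fixed centered stencil of cells 4, 5 and 6 would instead give 5/6 + 1/3 = 7/6, an overshoot. Nothing here forces a slope to zero at an extremum the way a TVD limiter does, which is how ENO aims to keep high order at smooth extrema. The price is data-dependent branching in every cell.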

ENO and weighted ENO (WENO) methods were advantageous for a narrow class of problems, usually those having a great deal of fine-scale structure. At the same time, they were not a significant (or any) improvement over the second-order accurate methods that dominate production codes for the broadest class of important application problems. It’s reasonable to ask what might have been done differently to produce a more effective outcome. One of the things that hurt the broader adoption of ENO and WENO methods is increasingly impenetrable codes, where large modifications are nearly impossible as we create a new generation of legacy codes (retaining the code base).

When I got my first job out of school it was at Los Alamos, home of one of the greatest scientific institutions in the World. This Lab birthed the Atomic Age and changed the World. I went there to work, but also to learn and grow in a place where science reigned supreme and technical credibility really and truly mattered. Los Alamos did not disappoint at all. The place lived and breathed science, and I was bathed in knowledge and expertise. I can’t think of a better place to be a young scientist. Little did I know that the era of great science and technical superiority was drawing to a close. The place that welcomed me with so much generosity of spirit was dying. Today it is a mere shell of its former self, along with Laboratories strewn across the country whose former greatness has been replaced by rampant mediocrity, pathetic leadership and a management class that presides over this decline. Money has replaced achievement, integrity and quality as the lifeblood of science. Starting with a quote by Feynman is apt because the spirit he represents so well is the very thing we have completely beaten out of the system.

The days of technical competence and scientific accomplishment are over. This foundation for American greatness has been overrun by risk aversion, fear and compliance with a spirit of commonness. I use the word “greatness” with gritted teeth because of the perversion of its meaning by the current President. This perversion is acute in the context of science because he represents everything that is destroying the greatness of the United States. Rather than “making America great again,” he is accelerating every trend that has been eroding the foundation of American achievement. The management he epitomizes is the very blunt tool bludgeoning American greatness into a bloody pulp. Trump’s pervasive incompetence masquerading as management expertise will surely push numerous American institutions further over the edge into mediocrity. His brand of management is all too prevalent today and utterly toxic to quality and integrity.

“butthead cowboys” and keep them from fucking up. Put differently, the management is there to destroy any individuality and make sure no one ever achieves anything great because no one can take a risk sufficient to achieve something miraculous. Anyone expressing individuality is a threat and needs to be chained up. We replaced stunning World class technical achievement with controlled staff, copious reporting, milestone setting, project management and compliance all delivered with mediocrity. This is bad enough by itself, but for an institution responsible for maintaining our nuclear weapons stockpile, the consequences are dire. Los Alamos isn’t remotely alone. Everything in the United States is being assaulted by the arrayed forces of mediocrity. It is reasonable to ask whether the responsibilities the Labs are charged with continue to be competently achieved.

All of this is now blazoned across the political landscape with an inescapable sense that America’s best days are behind us. The deeply perverse outcome of the latest National election is a president who is a cartoonish version of a successful manager. We have put our abuser and a representative of the class that has undermined our Nation’s true greatness in the position of restoring that greatness. What a grand farce! Every day produces evidence that the current efforts toward restoring greatness are using the very things undermining it. The level of irony is so great as to defy credulity. The current administration’s efforts are the end point of a process that started over 20 years ago, obliterating professional government service and hollowing out technical expertise in every corner. The management class that has arisen in their place cannot achieve anything but moving money and people. Their ability to create the new and wonderful foundation of technical achievement is absent.

from the beginning. In a very real way the bullshit science of Star Wars was a trailblazer for today’s rampant scientific charlatans. Rather than give science free rein to seek breakthroughs along with the inevitable failures, society suddenly sought guaranteed achievement at a reduced cost. In reality it got neither achievement nor economized results. With the flow of money equated to quality rather than results, the combination has poisoned science.

the dominant factor in every decision. Since the managers are the gatekeepers for funding, they have uprooted technical achievement and progress as the core of organizational identity. It is no overstatement to say that the dominance of financial concerns is tied to the ascendancy of management and the decline of technical work. At the same time, the desire for assured results produced a legion of charlatans who began to infest the research establishment. This combination has had the corrosive effect of reducing the integrity of the entire system, where money rules and results can be finessed or even outright fabricated. Standards are so low now that it doesn’t really matter.

One of the key trends impacting our government-funded Labs and research is the government’s languid approach to science. Spearheading this systematic decline in support is the long-term Republican approach to starving government that really took the stage in 1994 with the “Contract with America”. Since that time, funding for science has declined in real dollars, along with a decrease in support for professionalism among those in government. Over time, the salaries and the level of professional management have been under siege as part of an overall assault on governing. A compounding effect has been an ever-present squeeze on the rules related to conducting science. On the one hand, we are told that the best business practices will be used to make science more efficient. Simultaneously, best practices in support of science have been denied us. The result is no efficiency, no best practices, and simply a decline in overall professionalism for the Labs. All of this is deeply compounding the overall decline in support for research.

Meetings. Meetings. Meetings. Meetings suck. Meetings are awful. Meetings are soul-sucking time wasters. Meetings are a good way to “work” without actually working. Meetings absolutely deserve the bad rap they get. Most people think that meetings should be abolished. One of the most dreaded workplace events is a day completely full of meetings. These days invariably feel like complete losses, draining all productive energy from what ought to be a day full of promise. I say this as an unabashed extrovert, knowing that an introvert is going to feel overwhelmed by the prospect.

If there is one thing that unifies people at work, it is meetings and how much we despise them. Workplace culture is full of meetings, and most of them are genuinely awful. Poorly run meetings are a veritable plague in the workplace. Yet meetings are also an essential human element of work, and work is a completely human and social endeavor. A large part of the problem is that running a meeting well is genuinely difficult, and it exceeds the talent and will of most people (managers). We have now gotten to the point where all of us almost reflexively expect a meeting to be awful and plan accordingly. For my own part, I take something to read, or my computer to do actual work, or fall back on the old standby of passing time (i.e., fucking off) on my handy dandy iPhone. I’ve even resorted to the newest meeting pastime of texting another attendee about how shitty the meeting is. All of this can be avoided by taking meetings more seriously and crafting time that is well spent. If this can’t be done, the meeting should be cancelled until it can.

Conferences, Talks and symposiums. This is a form of meeting that generally works pretty well. The conference has a huge advantage as a form of meeting: time spent at a conference is almost always time well spent. Even at their worst, conferences should be a banquet of new information and exposure to new ideas. Of course, they can be done very poorly, and the benefits can be undermined by poor execution and lack of attention to detail.