Honest differences are often a healthy sign of progress.

― Mahatma Gandhi

Last week I attended a rather large scientific meeting in Knoxville, Tennessee. It was the kickoff meeting for the Exascale Computing Project (ECP). This is a relatively huge program ($250 million/year), and the talent present at the meeting was truly astounding, a veritable who’s who of computational science in the United States. This project is the crown jewel of the national strategy to retain (or recapture) pre-eminence in high performance computing. Such a meeting has all the makings of a banquet of inspiration, thought-provoking intellectual discussion, and incredible energy. Simply meeting all of these great scientists, many of whom also happen to be wonderful friends, only added to the potential. While friends abounded and acquaintances were made or rekindled, this was the high point of the week. The potential wealth of inspiration and intellectual discourse was quenched by bureaucratic imperatives, leaving the meeting a barren and lifeless launch of a soulless project.

The telltale signs of worry were all present in the lead-up to the meeting: management of the work taking priority over the work itself, many traditional areas of accomplishment simply ignored, political concerns swamping technical ones, and, most damningly, no aspirational vision. The meeting did nothing to dampen or dispel these signs, and we see a program spiraling toward outright crisis. Among the issues hampering the project is the degree of project management formality being applied, which is appropriate for a benign construction project and completely inappropriate for HPC success. The demands of this management formality were delivered to the audience much like the wasteful prep work for standardized testing in our public schools. It will almost certainly have the same mediocrity-inducing impact as that testing regime: the illusion of progress and success where none actually exists. The misapplication of this management formality is likely to provide a merciful deathblow to this wounded mutant of a program. Sometime in the next couple of years, we will see the project euthanized in a mercy killing.

There can be no progress without head-on confrontation.

― Christopher Hitchens

The depth of the vision problem in high performance computing (HPC) is massive. For a quarter of a billion dollars a year, one might expect an expressive and expansive vision of the future to be at the helm of the project. Instead, the vision is a stale and spent version of the same approach taken in HPC for the past quarter of a century. ECP simply has nothing new to offer. The vision of computing for the future is the vision of the past. A quarter of a century ago the stockpile stewardship program came into being in the United States, and the lynchpin of the program was HPC. New massively parallel computers would unleash their power and tame our understanding of reality. All that was needed then was some faster computers, and reality would submit to the power of computation. Today’s vision is exactly the same, except that the computers are 1000 times more powerful than the ones that were supposed to unlock the secrets of the universe a quarter of a century ago. Aside from Exascale replacing Petascale in computing power, the vision of 25 years ago is identical to today’s. The problem then as now is the incompleteness of the vision and fatal flaws in how it is executed. If one adds a management approach that seems to have been devised by Chinese spies to undermine the program’s productivity and morale, the outcome of ECP seems assured: failure. This wouldn’t be the glorious failure of putting your best foot forward seeking great things, but failure born of incompetence and an almost malicious disregard for the talent at the program’s disposal.

The biggest issue with the entire approach to HPC was evident in the room of scientists I sat with last week: the minds of these talented people are not being engaged. Let’s be completely clear: the room was full of immense talent, with many members of the National Academies present, yet there was no intellectual engagement to speak of. How can we succeed at something so massive and difficult while the voices of those paid to work on the project are silenced? At the same time, we are failing to develop an entire generation of scientists in the holistic set of activities needed for successful HPC. The balance of technical activities needed for a healthy, useful HPC capability is simply unsupported and almost actively discouraged. We are effectively hollowing out an entire generation of applied mathematicians, computational engineers and physicists, pushing them to focus more on software engineering than on their primary disciplines. Today someone working in applied mathematics is more likely to focus on object-oriented constructs in C++ than on functional analysis. Moreover, the software is acting as a straitjacket for the mathematics, slowly suffocating actual mathematical investigation. We see important applied mathematical work avoided because it is incompatible with existing software interfaces and assumptions. One of the key aspects of ECP is the drive for everything to be expressed as software products, with software as our raison d’être. We’ve lost the balance in which software is a necessary element for checking the utility of mathematics. We now have software in ascendancy and mathematics as a mere afterthought. Seeing this unfold with the talent arrayed in Knoxville last week felt absolutely and utterly tragic. Key scientific questions on which the vitality of scientific computing absolutely hinges are left hanging without attention, and progress on them is almost actively discouraged.

When people don’t express themselves, they die one piece at a time.

— Laurie Halse Anderson

At the core of this tragedy is a fatally flawed vision of where we are going as a community. It was flawed 25 years ago, and we have failed to learn the plainly obvious lessons. The original vision of computer power über alles is technically and scientifically flawed, but financially viable. This is the core dysfunction: we can get a flawed program funded, and that is all we need to go forward. No leadership asserts itself to steer the program toward technical vitality. The flawed vision brings in money, and money is all we need to do things. This gets to the root of so many problems, as money becomes the sole source of legitimacy, correctness and value. We have lost the ability to lead by principle and make hard choices. Instead, baser instincts hold sway, looking only to support little empires that rule nothing.

First, we should outline the deep flaws in the current HPC push. ECP is about one thing: computer hardware. The issue a quarter of a century ago is the same as it is today; the hardware alone does not solve problems or endow us with capability. It is a single element in our overall ability to solve problems. I’ve argued many times that it is far from being the most important element, and may be one of the lesser capabilities to support. The items of greatest importance are the models of reality we solve, followed by the methods used to solve these models. Much of the enabling efficiency of solution is found in innovative algorithms. The key subtext of this discussion is that these three most important elements in the HPC ecosystem (models, methods and algorithms) are unsupported and minimized in priority by ECP. The focus on hardware arises from two things: the easier path to funding, and the fandom of hardware among the HPC cognoscenti.

We would be so much better off if the current programs took a decisive break with the past and looked to move HPC in a different direction. In a deep and abiding way, the computer industry has been transformed in the last decade by the power of mobile computing. We have seen cellphones become the dominant factor in the industry. Innovative applications and pervasive connectivity have become the source of value and power. A vision of HPC that resonates with the direction of the broader industry would benefit from the flywheel effect instead of running counter to it. Instead of building on this base, the HPC world remains tethered to a mainframe era long gone everywhere else. Moreover, HPC remains in this mode even as the laws of physics conspire against it, and every effort suffers terrible side effects from the difficulty of making progress with the outdated approach being taken. The hardware is acting to further tax every effort in HPC, making the already threadbare support untenably shallow.

Instead of focusing on producing another class of outdated, lumbering dinosaur mainframes, the HPC effort could leap onto clear industry trends and seek a bold, resonant path. Cloud-based resources coupled with connectivity could unleash ubiquitous computing and seamless integration with the forces of mobile computing. The ability to communicate works wonders for combining ideas and pushing innovation ahead; it would do more to advance science than almost any conceivable amount of computing power. Mobile computing is focused on general-purpose use and is hardly optimized for scientific use, which brings different dynamics. A specific effort to energize science through these different computing dynamics could provide boundless progress. Instead of trying something distinct and new, we head back to a mine that has long since borne its greatest ore.

Progress in science is one of the most fertile engines for advancing the state of humanity. The United States, with its wealth and diversity, has been a leading light in progress globally. A combination of our political climate and innate limits in the American mindset seems to be conspiring to undo this engine of progress. Looking at the ECP program as a microcosm of the American experience is instructive. The overt control of all activities is suggestive of the pervasive lack of trust in our society. This lack of trust is paired with a deep fear of scandal and ever more demands for control. Working almost in unison with these twin engines of destruction is a lack of respect for human capital in general, which is only made more tragic when one realizes the magnitude of the talent being wasted. Instead of trust and faith in the arrayed talent of the individuals funded by the program, we are going to undermine all their efforts with doubt, fear and marginalization. The active role of bullshit in defining success allows the disregard for talent to go unnoticed (think of bullshit and alternative facts as brothers).

Progress in science should always be an imperative of the highest order for our research. When progress is obviously constrained and defined by strict boundaries, as we are seeing with HPC, the term malpractice should come to mind. One of the clearest elements of the HPC effort is its focus on management and strict project controls. Yet I see the hallmarks of mismanagement in the failure to engage and harness the talents, capabilities and potential of the people available to it. Proper and able management of the people working on the project would harness and channel their efforts productively. Better yet, it would inspire and enable these talented individuals to innovate and discover new things that might power a brighter future for all of us. Instead we see fear and limitation governing people’s actions. Instead we see an ever-tightening leash placed around people’s necks, suffocating their ability to perform at their best. This is the core of a research tragedy that is doubtless playing out across a myriad of programs far beyond the small-scale tragedy unfolding with HPC.

We can only see a short distance ahead, but we can see plenty there that needs to be done.

― Alan Turing

If one wants to understand fear and how it can destroy competence and achievement, take a look at (American) football. How many times have you seen a team undone during the two-minute drill? A team that has been dominating defensively suddenly becomes porous when it switches to the prevent defense, a strategy born out of fear. The team stops doing what works because it seems risky, and takes a safety-first approach. It happens over and over, prompting Madden’s quip that the only thing the prevent defense prevents is victory. It is a perfect metaphor for how fear plays out in society.

Over 80 years ago we had a leader, FDR, who cautioned us against fear, saying, “the only thing we have to fear is fear itself.” Today we have leaders who embrace fear as a prime motivator in almost every public policy decision. Fear is used cynically to gain power across the globe. Fear is also a powerful way to pry money from governments. Terrorism is a powerful political tool both for those committing the terrorist acts and for the military-police-industrial complexes that seek to retain their control over society. We see the rise of vast police states across the Western world, fueled by irrational fears of terrorism.

Fear also keeps people from taking risks. Many people decide not to travel because of fears associated with terrorism, among other things. Fear plays a more subtle role in work. If failure becomes unacceptable, fear will keep people from taking on difficult work and push them toward easier, low-risk work. This ultimately undermines our ability to achieve great things. If we do not attempt great things, great things simply will not happen. We are all poorer for it. Fear is ultimately the victory of small-minded, limited thinking over the hope and abundance of a better future. Instead of attacking the future with gusto and optimism, fear pushes us to cling to the past and turn our backs on progress.

Good communication is based on trust. Fear is the absence of trust. People are afraid of ideas, and afraid to share their ideas or information with others. As Google amply demonstrates, knowledge is power. Fear keeps people from sharing information and leads to an overall diminishment of power. Information held closely produces control, but control of a smaller pie. Free information makes the pie bigger and creates abundance, but people are afraid of this. For example, a lot of information is viewed as dangerous and held closely, leading to things like classification. This is necessary, but also prone to horrible abuse.

Without leadership that rejects fear, too many people simply give in to it. Today leaders do not reject fear; they embrace it, using it for their own purposes and to amplify their power. It is easy to do because fear engages people’s animal core and is prone to cynical manipulation. This fear paralyzes us and makes us weak. Fear is expensive and slow. Fear is starving the efforts society could be making toward a better future. Progress and the hope of a better future rest squarely on our courage in the face of fear and on rejecting it as the organizing principle of our civilization.

Through a combination of mathematical structure and computer code, the ideas can produce almost magical capabilities for understanding and explaining the world around us, allowing us to tame reality in new, innovative ways. One little correction is immediately in order: models themselves can be useful without computers. Simple models can be solved by analytical means, and these solutions provided classical physics with many breakthroughs in the era before computers. Computers offered the ability to expand the scope of these solutions to far more difficult and general models of reality.

This then takes us to the magic of methods and algorithms, which are similar but differ in character. The method is the means of taking a model and solving it. The method determines whether a model can be solved, the nature of that solution, and its basic efficiency. Ultimately, methods power what is possible to achieve with computers. All our modeling and simulation codes depend upon these methods for their core abilities.

The magic isn’t limited to making solutions possible; the means of making the solution possible also added important physical modeling to the equations. The core methodology used for shock capturing is the addition of subgrid dissipative physics (i.e., artificial viscosity). The foundation of shock capturing led directly to large eddy simulation (LES) and the ability to simulate turbulence. Improved shock capturing developed in the 1970s and 1980s gave rise to implicit large eddy simulation. To many this seemed completely magical; the modeling simply came for free. In reality this magic was predictable. The basic method of shock capturing was the same as the basic subgrid modeling in LES, so finding out that improved shock capturing gives automatic LES modeling is actually quite logical. In essence, the connection arises because the model leaves key physics out of the equations, and nature doesn’t allow this to go unpunished.
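
To see why the two are the same basic method, compare two textbook closures, sketched here in one space dimension. The notation is chosen for this sketch and is not from the text above: $c_q$ and $C_s$ are dimensionless constants, $\Delta$ is the LES filter width and $\lvert\bar S\rvert$ the resolved strain-rate magnitude. On the left is the von Neumann-Richtmyer quadratic artificial viscosity used in shock capturing; on the right, the Smagorinsky eddy viscosity used as an LES subgrid model.

$$
q =
\begin{cases}
c_q^{2}\,\rho\,(\Delta x)^{2}\left(\dfrac{\partial u}{\partial x}\right)^{2}, & \dfrac{\partial u}{\partial x} < 0,\\[6pt]
0, & \text{otherwise},
\end{cases}
\qquad
\nu_t = (C_s\,\Delta)^{2}\,\lvert\bar S\rvert .
$$

Take $\lvert\bar S\rvert \approx \lvert\partial u/\partial x\rvert$ in one dimension, with any constant factor absorbed into $C_s$. The eddy stress then becomes $\rho\,\nu_t\,\partial u/\partial x = \rho\,(C_s\Delta)^{2}\,\lvert\partial u/\partial x\rvert\,\partial u/\partial x$. Under compression this equals $-q$ exactly when $C_s\Delta = c_q\Delta x$. In both cases a grid-scale length squared multiplies the magnitude of the velocity gradient times the gradient itself.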

The trick is that classical second-order results are oscillatory and prone to being unphysical. Modern shock capturing methods solve this issue and make the solutions realizable. It turns out that the leading truncation error of a second-order finite volume method produces the same form of dissipation that many models produce in the limit of vanishing viscosity. In other words, the second-order solutions match the asymptotic structure of the solutions to the inviscid equations in a deep manner. This structural matching is the basis of the seemingly magic ability of second-order methods to produce convincingly turbulent calculations.
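
The oscillation problem and its modern fix can be seen in a minimal sketch. It assumes the simplest possible setting: linear advection of a square wave on a periodic grid, rather than the turbulent flows discussed above. The sketch compares the classical, unlimited Lax-Wendroff scheme with a second-order update whose slopes are limited by minmod, which is only one of many limiters in use. The function names, grid size and CFL number are illustrative choices and are not taken from any particular code.

```python
import numpy as np


def minmod(a, b):
    """Pick the smaller-magnitude argument when a and b share a sign, else 0."""
    return np.where(a * b > 0.0, np.where(np.abs(a) < np.abs(b), a, b), 0.0)


def lax_wendroff_step(u, nu):
    """Classical, unlimited second-order Lax-Wendroff update (periodic grid)."""
    up = np.roll(u, -1)  # u_{j+1}
    um = np.roll(u, 1)   # u_{j-1}
    return u - 0.5 * nu * (up - um) + 0.5 * nu**2 * (up - 2.0 * u + um)


def limited_step(u, nu):
    """Second-order update with minmod-limited slopes, for velocity a > 0."""
    up = np.roll(u, -1)
    um = np.roll(u, 1)
    slope = minmod(u - um, up - u)
    # Upwind value at face j+1/2, time-centered for linear advection
    face = u + 0.5 * (1.0 - nu) * slope
    # Conservative update: subtract the difference of face values across each cell
    return u - nu * (face - np.roll(face, 1))


def advect(step, n_cells=200, nu=0.5, n_steps=100):
    """Advect a 0/1 square wave for n_steps at CFL number nu."""
    x = (np.arange(n_cells) + 0.5) / n_cells
    u = np.where((x > 0.25) & (x < 0.5), 1.0, 0.0)
    for _ in range(n_steps):
        u = step(u, nu)
    return u


lw = advect(lax_wendroff_step)
lim = advect(limited_step)
print(f"Lax-Wendroff range:   [{lw.min():.3f}, {lw.max():.3f}]")
print(f"minmod-limited range: [{lim.min():.3f}, {lim.max():.3f}]")
```

With these settings, the Lax-Wendroff profile should overshoot above 1 and undershoot below 0 next to the jumps. The limited update should stay within the initial bounds of [0, 1]. The limiter achieves this by falling back toward a dissipative first-order update near the discontinuities, a small instance of the built-in dissipation discussed above.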

We tend to have highly controlled and scripted work lacking any innovation and discovery. In other words, control and lack of trust conspire to remove magic as a potential result. Over the years this leads to a lessening of the wonderful things we can accomplish.

Success and competence in high performance computing are an essential enabling capability for many scientific, military and industrial activities. They play an important role in national defense, economics and cyber-everything, and serve as a measure of national competence. So they are important. Being the top nation in high performance computers is an important benchmark of national power. It does not measure overall success or competence, but rather one component of them. Success and competence in high performance computing depend on a number of things, including physics modeling and experimentation, applied mathematics, many types of engineering including software engineering, and computer hardware. In this list, computing hardware is among the least important aspects of competence. It is generally enabling for everything else, but hardly defines competence. In other words, hardware is necessary but far from sufficient.

kicking the habit is hard. In a sense, under Moore’s law computer performance skyrocketed for free, and people are not ready to see that go.

other areas due to its difficulty of use. This goes above and beyond the vast resource sink that the hardware itself represents.

I work on this program and quietly make all these points. They fall on deaf ears because the people committed to hardware dominate the national and international conversations. Hardware is an easier sell to the political class, who are not sophisticated enough to smell the bullshit they are being fed. Hardware has worked to get funding before, so we go back to the well. Hardware advances are easy to understand and sell politically. The more naïve and superficial the argument, the better it fits our increasingly elite-unfriendly body politic. All the other things needed for HPC competence and advances are supported largely by pro bono work. They are simply added effort that comes down to doing the right thing. There is a rub that puts all this good faith effort at risk. Balance, and all the other work, is neither a priority nor an emphasis of the program. Generally this work is not counted in measuring the program’s success, nor is it defined in the tasking from the funding agencies.

We live in an era where we are driven to be unwaveringly compliant with rules and regulations. In other words, you work on what you’re paid to work on, and you’re paid to complete the tasks spelled out in the work orders. As a result, all of the things you do out of good faith and responsibility can be viewed as violating these rules. Success might depend on doing all of these unfunded and unstated things, but the success defined in the work contracts omits them. As a result, the things that need to be done do not get done. More often than not, you receive little credit or personal success from pursuing the right thing. You do not get management or institutional support either. Expecting these unprioritized, unintentional things to happen is simply magical thinking.

We have a situation where the program’s priorities are arrayed toward success in a single area, putting at risk the other areas needed for success. Management then asks people to do good faith pro bono work to make up the difference. This good faith work violates the letter of the law regarding compliance with contracted work. There appears to be no intention of supporting all of the other disciplines needed for success. We rely upon people’s sense of responsibility to close this gap even while we instill a sense of duty that pushes against doing any extra work. In addition, the hardware focus levies an immense tax on all other work because the hardware is so incredibly user-unfriendly. The bottom line is a systematic abdication of responsibility by those charged with leading our efforts. Moreover, we exist within a time and system where grass roots dissent and negative feedback are squashed. Our tepid and incompetent leadership can rest assured that their decisions will not be questioned.

Before getting to my conclusion, one might reasonably ask, “what should we be doing instead?” First, we need an HPC program with balance between impact on reality and the stream of enabling technology. The single most contemptible aspect of current programs is the nature of the hardware focus. The computers we are building are monstrosities, largely unfit for scientific use and vomitously inefficient. They are chasing a meaningless summit of performance measured through an antiquated and empty benchmark. We would be better served by building computers tailored to scientific computation that solve real, important problems efficiently. We should be building computers and software that spur our productivity and are easy to use. Instead, we levy an enormous penalty on any useful application of these machines because of their monstrous nature. A refocus away from the meaningless summit defined by an outdated benchmark could have vast benefits for science.

money. If something is not being paid for, it is not important. If one couples steadfast compliance with only working on what you’re funded to do, any call to do the right thing without funding is simply comical. The right thing becomes complying, so the important thing in this environment is funding the right things. As we work to account for every dime of spending in ever finer increments, sensible and visionary leadership becomes more important. Yet the very nature of this accounting tsunami is to blunt visionary leadership and deny its ability to exist. The end result is spending every dime as intended and wasting the vast majority of it on shitty, useless results. Any other outcome in the modern world is implausible.

Despite a relatively obvious path to fulfillment, the estimation of numerical error in modeling and simulation appears to be worryingly difficult to achieve. A big part of the problem is outright laziness, inattention, and poor standards. A secondary issue is the mismatch between theory and practice. If we maintain reasonable pressure on the modeling and simulation community we can overcome the first problem, but doing so requires refusing to accept substandard work. The second problem requires some focused research, along with a more pragmatic approach to practical problems. Alongside these systemic issues we can deal with a simpler problem: where to put the error bars on simulations, and whether they should show a bias or a symmetric error. I strongly favor a bias.

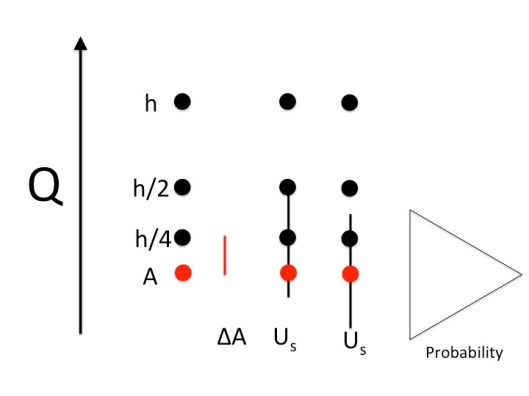

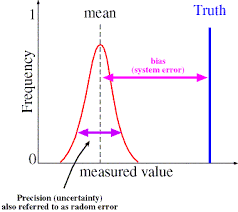

The core issue I’m talking about is the position of the numerical error bar. Current approaches center the error bar on the finite grid solution of interest, usually the one on the finest mesh used. This gives the impression that this solution is the most likely answer and that the true answer could lie in either direction from it. Neither suggestion is supported by the data used to construct the error bar. For this reason the standard practice today is problematic and should be changed to something the evidence supports. The current error bars incorrectly suggest that the most likely error is zero. This is completely and utterly unsupported by evidence.
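
To make the criticized practice concrete, here is one widely used recipe that produces exactly this kind of centered bar: Roache’s grid convergence index (GCI). The post does not name a specific method, so treat this as an illustrative example rather than the author’s target. The inputs are the fine- and medium-grid values $f_1$ and $f_2$, the refinement ratio $r$, the observed order of convergence $p$, and a safety factor $F_s$ (1.25 is commonly used when three or more grids are available). The reported band is then

$$
\mathrm{GCI} = F_s\,\frac{\lvert f_2 - f_1\rvert}{r^{p} - 1},
\qquad
f_{\text{reported}} \in \left[\, f_1 - \mathrm{GCI},\; f_1 + \mathrm{GCI} \,\right].
$$

Because the band is centered on $f_1$, its midpoint implicitly asserts that the fine-grid solution is the most likely answer. That is the assumption questioned above.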

mulation examples in existence are subject to bias in the solutions. This bias comes from numerical solution error, modeling inadequacy, and bad assumptions, to name a few sources. In contrast, uncertainty quantification (UQ) is usually applied in a statistical and explicitly unbiased manner. This is a serious difference in perspective, and the differences are clear. With bias, the difference between simulation and reality is one-sided, and the deviation can be cured by calibrating parts of the model to compensate. Unbiased uncertainty is typical of measurement error, yet it ends up dominating the approach to UQ in simulations. The result is a mismatch between the dominant mode of uncertainty and how it is modeled. A more nuanced model that acknowledges and deals with bias appropriately would be great progress.
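
The distinction between bias and statistical uncertainty can be sketched in a few lines. This assumes paired simulation and measurement values are available. The residuals are split into a mean offset (the one-sided bias) and a sample standard deviation (the symmetric scatter that statistical UQ usually models). The function names, the made-up numbers, and the shift-only calibration are hypothetical illustrations, not a method taken from the text.

```python
import statistics


def split_bias_and_scatter(simulated, measured):
    """Split simulation-minus-measurement residuals into two parts.

    Returns (bias, scatter): the mean residual, a one-sided offset, and the
    sample standard deviation, the symmetric spread that statistical UQ
    usually treats as the whole story.
    """
    if len(simulated) != len(measured) or len(simulated) < 2:
        raise ValueError("need at least two paired simulation/measurement values")
    residuals = [s - m for s, m in zip(simulated, measured)]
    bias = statistics.fmean(residuals)
    scatter = statistics.stdev(residuals)
    return bias, scatter


def shift_calibration(simulated, bias):
    """Hypothetical calibration that simply subtracts the estimated bias.

    It removes the offset but records nothing about where the offset came from.
    """
    return [s - bias for s in simulated]


if __name__ == "__main__":
    # Made-up numbers in which every prediction sits above the measurement.
    sim = [1.2, 1.3, 1.1, 1.25]
    obs = [1.0, 1.1, 0.92, 1.03]
    bias, scatter = split_bias_and_scatter(sim, obs)
    print(f"bias = {bias:.3f}, scatter = {scatter:.3f}")
    print("calibrated:", shift_calibration(sim, bias))
```

For these numbers the bias is more than ten times the scatter. A symmetric ± band around the prediction would therefore misrepresent where reality lies. The shift removes the offset but also erases any record of its source.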

ociated with lack of computational resolution. The computational mesh is always far too coarse for comfort, and the numerical errors are significant. There are also issues with initial conditions, energy balance, and representing physics at and below the scale of the grid. In both cases the models are invariably calibrated heavily. This calibration compensates for the lack of mesh resolution, the lack of knowledge of initial data and physics, and problems representing the energy balance essential to the simulation (especially in climate). A serious modeling deficiency is that all of these uncertainties are merged into the calibration, with an associated loss of information.

The issues with calibration are profound. Without calibration, the models are effectively useless. For these models to contribute to societal knowledge, decision-making, or raw scientific investigation, calibration is an absolute necessity. Calibration depends entirely on existing data, and this carries a burden of applicability. How valid is the calibration when the simulation probes outside the range of the data used to calibrate it? We commonly fold the intrinsic numerical bias into the calibration; most often a turbulence or mixing model is adjusted to account for it. A colleague familiar with ocean models quipped that if the ocean were as viscous as we modeled it, one could drive to London from New York. It is well known that numerical viscosity stabilizes calculations, and we can use numerical methods to model turbulence (implicit large eddy simulation), but this practice should at the very least make people uncomfortable. We are also left with the difficult matter of how to validate models that have been calibrated.

some part of the model. This issue plays out in weather and climate modeling, where the mesh is part of the model rather than an independent aspect of it. It should surprise no one that large eddy simulation (LES) was born from weather and climate modeling (at a time when the distinction didn’t exist). In other words, the chosen mesh and the model are intimately linked. If the mesh is modified, the model must also be modified (recalibrated) to get the balance of the solution correct. This tends to happen in simulations where an intimate balance is essential to the phenomena. In these cases the system is, in one respect or another, in a nearly equilibrium state, and the deviations from this equilibrium are essential. Aspects of the modeling related to the scales of interest, including the grid itself, affect the equilibrium to such a degree that an uncalibrated model is nearly useless.
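
The mesh-model coupling can be shown with a toy case of our own choosing; the post does not use it. The case is first-order upwind advection. Its modified equation carries a numerical diffusivity of (a Δx / 2)(1 − C), where C = a Δt / Δx is the Courant number. Suppose a hypothetical model diffusivity is tuned so that model plus numerical diffusion matches a target value fitted to data. Then the tuned value must change whenever Δx changes. The target value and the function names are assumptions for illustration only.

```python
def upwind_numerical_diffusivity(speed, dx, dt):
    """Leading-order numerical diffusivity of first-order upwind advection.

    For u_t + a u_x = 0 with a > 0, the modified equation of the upwind scheme
    has a diffusion term with coefficient (a * dx / 2) * (1 - C), C = a*dt/dx.
    """
    if speed <= 0.0 or dx <= 0.0 or dt <= 0.0:
        raise ValueError("speed, dx and dt must be positive")
    courant = speed * dt / dx
    if courant > 1.0:
        raise ValueError("Courant number above 1: the upwind scheme is unstable")
    return 0.5 * speed * dx * (1.0 - courant)


def recalibrated_model_diffusivity(target, speed, dx, dt):
    """Hypothetical calibration of an explicit model diffusivity.

    Chooses nu_model so that nu_model + nu_numerical equals a target effective
    diffusivity fitted to data. Returns None when numerical diffusion alone
    already exceeds the target, i.e. no non-negative model value can match it.
    """
    nu_model = target - upwind_numerical_diffusivity(speed, dx, dt)
    return nu_model if nu_model >= 0.0 else None


if __name__ == "__main__":
    target = 0.03  # hypothetical effective diffusivity fitted to data
    for dx in (0.4, 0.1, 0.05, 0.025):
        dt = 0.5 * dx  # unit speed, Courant number held at 0.5
        nu = recalibrated_model_diffusivity(target, 1.0, dx, dt)
        print(f"dx = {dx}: model diffusivity = {nu}")
```

As the mesh is refined, less of the target is supplied by numerical diffusion, so the tuned model coefficient grows. On the coarsest mesh no non-negative coefficient exists at all, because the mesh alone is already too diffusive.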

h to a value (assuming the rest of the model is held fixed) then the error is well behaved. The sequence of solutions on the meshes can then be used to estimate the solution to the mathematical problem, that is, the solution with infinite mesh resolution (absurd as that might be). Along with this estimate of the “perfect” solution, the error can be estimated for any of the meshes. For this well-behaved case the error is one-sided: a bias between the ideal solution and the solution on a given mesh. Any fuzz in the estimate applies to the bias. In other words, any uncertainty in the error estimate is centered on the extrapolated “perfect” solution, not on the finite grid solutions. The problem with the currently accepted methodology is that the error is reported as a standard two-sided error bar, which is appropriate for statistical errors. We use a two-sided accounting for this error even though there is no evidence for it. This is a problem that should be corrected.
I should note that many models (e.g., climate or weather models) invariably recalibrate after every mesh change. This invalidates the entire verification exercise, in which everything in the model except the grid should be held fixed across the mesh sequence.
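
The procedure just described can be sketched minimally under a few assumptions. It takes three solutions on systematically refined meshes with a constant refinement ratio, assumes monotone convergence, and holds everything but the mesh fixed. It uses standard Richardson extrapolation to estimate the observed order and the “perfect” solution. It then contrasts two bands: a conventional symmetric band centered on the fine-mesh value (built here like Roache’s grid convergence index), and a band centered on the extrapolated value. The width of that second band (`fuzz_fraction`) is a hypothetical choice; the post does not prescribe one.

```python
import math
from dataclasses import dataclass


@dataclass
class MeshStudy:
    """Outcome of a three-mesh Richardson extrapolation."""
    observed_order: float  # p, estimated from the three solutions
    extrapolated: float    # estimate of the infinite-resolution ("perfect") solution
    fine_error: float      # signed error of the fine-mesh value: extrapolated - f_fine
    symmetric_bar: tuple   # conventional band centred on the fine-mesh value
    biased_bar: tuple      # band centred on the extrapolated value instead


def richardson_study(f_fine, f_medium, f_coarse, ratio,
                     safety_factor=1.25, fuzz_fraction=0.25):
    """Contrast a symmetric error bar with one centred on the extrapolated solution.

    Assumes a constant refinement ratio > 1, monotone convergence, and a model
    that is identical on all three meshes apart from the mesh itself.
    fuzz_fraction (half-width of the second band as a fraction of the
    estimated error) is a hypothetical choice, not taken from the text.
    """
    if ratio <= 1.0:
        raise ValueError("refinement ratio must exceed 1")
    d21 = f_medium - f_fine
    d32 = f_coarse - f_medium
    if d21 == 0.0 or d32 / d21 <= 0.0:
        raise ValueError("solutions are not converging monotonically")
    p = math.log(d32 / d21) / math.log(ratio)
    if p <= 0.0:
        raise ValueError("differences do not shrink under refinement")
    denom = ratio ** p - 1.0

    extrapolated = f_fine - d21 / denom  # Richardson extrapolation
    fine_error = extrapolated - f_fine   # one-sided: its sign is known

    # Conventional practice: GCI-like half-width placed symmetrically about f_fine.
    half_width = safety_factor * abs(d21) / denom
    symmetric = (f_fine - half_width, f_fine + half_width)

    # Alternative argued for in the text: centre the fuzz on the extrapolated value.
    fuzz = fuzz_fraction * abs(fine_error)
    biased = (extrapolated - fuzz, extrapolated + fuzz)
    return MeshStudy(p, extrapolated, fine_error, symmetric, biased)


if __name__ == "__main__":
    # Synthetic values of f(h) = 1 + 0.5 h^2 at h = 0.1, 0.2, 0.4; the exact answer is 1.0.
    print(richardson_study(1.005, 1.02, 1.08, ratio=2.0))
```

For this synthetic sequence the observed order comes out at 2 and the extrapolated value at 1.0, up to rounding. The symmetric band straddles the fine-mesh value 1.005, even though every solution in the sequence says the true value lies below it.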

quantities that cannot be measured. In this case the uncertainty must be approached carefully. The uncertainty in these values must almost invariably be larger than that in the quantities used for calibration. One needs to look at the modeling connections for these values and take a reasonable approach to treating them with an appropriate “grain of salt”. This includes numerical error, which I discussed above. In the best case, data are available that were not used to calibrate the model. Maybe these are values that are not as highly prized or as important as those used for calibration. The discrepancy between these measured values and the simulation gives a very strong indication of the uncertainty in the simulation.
In other cases, some of the data potentially available for calibration has been held out and can be used to validate the calibrated model. This assumes that the hold-out data is sufficiently independent of the data used for calibration.

certainty is to apply significant variation to the parameters used to calibrate the model. In addition, we should include the numerical error in the uncertainty. For deeply calibrated models, these sources of uncertainty can be quite large and generally paint an overly pessimistic picture. Conversely, calibration alone gives an extremely optimistic picture of uncertainty. The hope, and the best possible outcome, is that these two views bound reality and the true uncertainty lies between these extremes. For decision-making with simulation, this bounding approach to uncertainty quantification should serve us well.
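
The bounding idea can be sketched for a cheap model that can be evaluated many times. The optimistic interval varies the calibrated parameters only within their fit uncertainty. The pessimistic interval applies a much larger variation and widens the result by the estimated numerical error on both sides. The parameter names, the half-widths, the hypothetical linear model, and the corner-sampling shortcut are all assumptions for illustration. Corner sampling finds the true extremes only when the output is monotone in each parameter.

```python
import itertools


def corner_range(model, x, center, half_widths):
    """Min and max of model(x, params) over the corners of a parameter box."""
    names = list(center)
    values = []
    for signs in itertools.product((-1.0, 1.0), repeat=len(names)):
        params = {n: center[n] + s * half_widths[n] for n, s in zip(names, signs)}
        values.append(model(x, params))
    return min(values), max(values)


def bounding_intervals(model, x, calibrated, narrow, wide, numerical_error):
    """Return (optimistic, pessimistic) intervals for model(x, params).

    calibrated: dict of calibrated parameter values.
    narrow, wide: dicts of half-widths per parameter, i.e. the calibration fit
        versus a deliberately large variation.
    numerical_error: estimated magnitude of numerical error, added only to
        the pessimistic interval.
    """
    optimistic = corner_range(model, x, calibrated, narrow)
    lo, hi = corner_range(model, x, calibrated, wide)
    pessimistic = (lo - numerical_error, hi + numerical_error)
    return optimistic, pessimistic


if __name__ == "__main__":
    def linear_model(x, p):
        # Hypothetical calibrated model y = k x + c.
        return p["k"] * x + p["c"]

    opt, pess = bounding_intervals(
        linear_model, 3.0,
        calibrated={"k": 2.0, "c": 1.0},
        narrow={"k": 0.1, "c": 0.05},
        wide={"k": 0.5, "c": 0.3},
        numerical_error=0.2,
    )
    print("optimistic:", opt)
    print("pessimistic:", pess)
```

The optimistic interval sits inside the pessimistic one. In the spirit of the text, a decision-maker would treat these as the two bounds between which the true uncertainty is hoped to lie.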

Harvard Business Review (HBR). I know my managers read many of the same things I do. They also read business books, sometimes in a faddish manner. Among these is Daniel Pink’s excellent “Drive”. When I read HBR, I feel inspired and hopeful (Seth Godin’s books are another source of frustration and inspiration). When I read Drive, I was left yearning for a workplace that operated on the principles expressed there. Yet when I return to the reality of work, these pieces of literature seem fictional, even more like science fiction. The reality of work today is almost completely orthogonal to these aspirational writings. How can my managers read these things, then turn around and operate the way they do? No one seems to actually think through what implementing these ideas would look like in the workplace. With each passing year we fall further from the ideal, moving toward a workplace that crushes dreams and simply drives people into a cardboard-cutout variety of behavior without any real soul.

While work is the focus of my adult world, similar trends are at work on our children. School has become a similarly structured training ground for compliance and squalid mediocrity. Standardized testing is one route to this outcome, where children are trained to take tests and not to solve problems. Standardized testing becomes the perfect template for the soulless workplace that awaits them in the adult world. The rejection of fact and science by society as a whole is another route. We have a large segment of society that is suspicious of intellect. Too many people now view educated intellectuals as dangerous, and their knowledge and facts are rejected whenever they disagree with the politically chosen philosophy. This attitude is a direct threat to the value of an educated populace. Under a system where intellect is devalued, education transforms into a means of training the population to obey authority and fall into line.
The workplace is subject to the same trends: compliance and authority are prized along with predictability of results. The lack of value placed on intellect is also present within the sort of research institutions I work at, because intellect threatens predictability of results. As a result, out-of-the-box thinking is discouraged, and the entire system is geared to keep everyone in the box. We create systems oriented toward control and safety without realizing the price paid for rejecting exploration and risk. We all live less rich and less rewarding lives as a result, and accumulated across society, this amounts to a broad-based diminishment of results.

o massive structures of control and societal safety. It also creates an apparatus for Big Brother to come to fruition in a way that makes Orwell more prescient than ever. The counterweight to such widespread safety and control is the richness of life sacrificed to achieve it. Lives well lived and bold outcomes are diminished in the pursuit of safety.
I’ve gotten to the point where this trade no longer seems worth it. What am I staying safe for? I am risking living a pathetic and empty life in trade for safety and security, so that I can die quietly. This is life in the box, and I want to live out of the box. I want to work out of the box too.

authority. The ruling business class and wealthy elite enjoy power through the subtle subjugation of the vast populace. The populace accepts its subjugation in exchange for promises of safety and security through the control of risk and danger.

of science differently. First and foremost, modeling and simulation enhance our ability to make predictions and test theories. As with any tool, it needs to be used with care and skill. My proposition is that the modeling and simulation practice of verification and validation combined with uncertainty quantification (VVUQ) defines this care and skill. Moreover, VVUQ provides an instantiation of the scientific method for modeling and simulation. An absence of emphasis on VVUQ in modeling and simulation programs should cast doubt on, and invite scrutiny of, the level of scientific discourse involved. To see this, one needs to examine the scientific method in a bit more detail.

ce elaborate and complex mathematical models, which are difficult to solve and limit the effective scope of predictions. Scientific computing relaxes these limitations significantly, but only if sufficient care is taken to assure the credibility of the simulations. The entire process of VVUQ serves to assess the simulations so that they may be used confidently in the scientific process. Nothing about modeling and simulation changes the process of posing questions and accumulating evidence in favor of a hypothesis. It does change how that evidence is obtained, by relaxing the limitations on testing theory. Theories that were not fully testable are now open to far more complete examination, because they can now make broader predictions than classical approaches allowed.

nd simulation where the degree of approximate accuracy is rarely included in the overall assessment. In many cases the level of error is never addressed or studied as part of the uncertainty assessment. Thus verification plays two key roles in scientific studies using modeling and simulation. Verification establishes the credibility of the approximate solution to the theory being tested, and it provides an estimate of the approximation quality. Without an estimate of the numerical approximation error, we risk conflating this error with modeling imperfections and obscuring the assessment of the model’s validity. One should be aware of the pernicious practice of avoiding error estimation by simply declaring a calculation mesh-converged. This declaration should be coupled with direct evidence of mesh convergence and with the explicit capacity to estimate the actual numerical error. Without such evidence, the declaration should be rejected.
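
A claim of mesh convergence can be backed with evidence by computing the same output on three systematically refined grids. The sketch below uses one standard way to do that, Richardson extrapolation with an observed order of accuracy plus Roache’s grid convergence index; it is a swapped-in illustration, not a procedure described in this post. It assumes a constant refinement ratio `r`, smooth monotone convergence, and the conventional safety factor of 1.25, and the function and class names are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ConvergenceEstimate:
    observed_order: float      # p inferred from the three grid solutions
    extrapolated_value: float  # Richardson estimate of the grid-independent value
    fine_grid_error: float     # estimated magnitude of the fine-grid numerical error
    gci_fine: float            # relative grid convergence index, safety factor included


def estimate_discretization_error(f_fine, f_medium, f_coarse, r, safety_factor=1.25):
    """Hypothetical helper: estimate numerical error from three grids.

    f_fine, f_medium and f_coarse are the same output quantity computed on grids
    with spacing h, r*h and r*r*h (assumed constant refinement ratio r > 1).
    Raises ValueError when the solutions do not converge monotonically,
    because then the data do not support a claim of mesh convergence.
    """
    if r <= 1.0:
        raise ValueError("refinement ratio r must be greater than 1")
    d_fine = f_medium - f_fine      # change between medium and fine grids
    d_coarse = f_coarse - f_medium  # change between coarse and medium grids
    if d_fine == 0.0 or d_coarse / d_fine <= 1.0:
        # Oscillating or diverging differences: no evidence of convergence.
        raise ValueError("solutions do not converge monotonically")
    p = math.log(d_coarse / d_fine) / math.log(r)  # observed order of accuracy
    denom = r ** p - 1.0
    f_extrapolated = f_fine - d_fine / denom       # Richardson extrapolation
    error = abs(d_fine) / denom                    # |f_fine - f_extrapolated|
    gci = safety_factor * abs(d_fine / f_fine) / denom if f_fine != 0.0 else math.inf
    return ConvergenceEstimate(p, f_extrapolated, error, gci)
```

For instance, outputs of 1.08, 1.02 and 1.005 on grids refined by a factor of two (manufactured from an exact value of 1.0 with a second-order error) give an observed order of 2, an extrapolated value of 1.0 and an estimated fine-grid error of 0.005, up to rounding. Refusing to return an estimate for oscillating or diverging results is deliberate: such data do not support a declaration of convergence.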

experiments or observation of the natural world. In keeping with the theme, an important element of the data used for validation is its quality, including a proper uncertainty assessment. Again, this assessment is vital because it puts the whole comparison with simulations in context and helps define what a good or bad comparison might be. Data with small uncertainty demands a completely different comparison than data with large uncertainty. The same holds for simulations, where the level of uncertainty strongly affects how results should be viewed. When the uncertainty of either the data or the simulation is unspecified, the comparison is untethered, and scientific conclusions or engineering judgments are threatened.
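
To make the point about uncertainty concrete, here is a minimal sketch of a comparison between a simulation result S and a measurement D, loosely following the ASME V&V 20 convention of a comparison error E = S - D and a validation uncertainty that combines numerical, input and experimental uncertainties in quadrature. The independence of those sources, the coverage factor `k = 2`, and the names used are assumptions for illustration.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class ValidationComparison:
    comparison_error: float        # E = S - D (simulation minus data)
    validation_uncertainty: float  # u_val, combined standard uncertainty
    discrepancy_resolved: bool     # True when |E| exceeds k * u_val


def compare_with_data(sim: float, data: float,
                      u_num: Optional[float], u_input: Optional[float],
                      u_data: Optional[float], k: float = 2.0) -> ValidationComparison:
    """Hypothetical helper: judge a simulation-data comparison against its uncertainty.

    Assumes the numerical (u_num), input (u_input) and experimental (u_data)
    standard uncertainties are independent, so they combine in quadrature.
    k is an assumed coverage factor. An unspecified (None) uncertainty is
    rejected, because without it the comparison has no context.
    """
    uncertainties = (u_num, u_input, u_data)
    if any(u is None for u in uncertainties):
        raise ValueError("every uncertainty must be specified")
    if any(u < 0.0 for u in uncertainties):
        raise ValueError("uncertainties must be non-negative")
    e = sim - data
    u_val = math.sqrt(u_num ** 2 + u_input ** 2 + u_data ** 2)
    return ValidationComparison(e, u_val, abs(e) > k * u_val)
```

With a simulation value of 10.5, a measurement of 10.0, and u_num = u_input = 0.05, a data uncertainty of 0.1 makes the 0.5 discrepancy resolvable (2 u_val is about 0.24), while a data uncertainty of 0.5 does not (2 u_val is about 1.01). The same numbers lead to different conclusions, and a missing uncertainty is refused outright.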

It is no exaggeration to note that this perspective is utterly missing from the high performance computing world today and from the foolish drive to exascale we find ourselves on. Current exascale programs almost completely lack any emphasis on VVUQ. This highlights the lack of science in our current exascale programs. They are nakedly hardware-centric programs that show little or no interest in actual science or applications. The holistic nature of modeling and simulation is ignored, and the activities connecting modeling and simulation with reality are systematically starved of resources, focus and attention. It is not too hyperbolic to declare that our exascale programs are not about science.

perceived as vulnerability. Stating the weaknesses or limitations of anything is not tolerated in today’s political environment, and it risks the project’s existence because it is perceived as failure. Instead of an honest assessment of the state of knowledge and the level of theoretical predictivity, today’s science prefers to make over-inflated claims and publish via press release. Done correctly, VVUQ runs counter to this practice: it provides people using modeling and simulation for scientific or engineering work with a detailed assessment of credibility and fitness for purpose.

declaration of intent by the program to seek results associated with spin and BS instead of a serious scientific or engineering effort. This end state is signaled by far more than a lack of VVUQ; it is also signaled by the lack of serious application and modeling support. This simply compounds the lack of method and algorithm support that also plagues the program. The most cynical part of all of this is the centrality of application impact to the case made for the HPC programs. The pitch to the nation or the world is the utility of modeling and simulation to economic or physical security, yet the programs are structured so that this outcome cannot happen.

of a solid from a molecular dynamics simulation.

om using applications to superficially market the computers, the efforts are proportional to their proximity to the computer hardware. As a result, large parts of the vital middle ground are languishing without effective support. Again, we lose the middle ground that is the source of efficiency and that enables the quality of modeling and simulation overall. The creation of powerful models, solution methods, algorithms, and their instantiation in software all lack sufficient support. Each of these activities has vastly more potential than hardware to unleash capability, yet each remains without effective support. When one makes a careful examination of the program, all the complexity and sophistication is centered on the hardware. The result is a “simpler is better” philosophy for the entire middle ground and for the applications drawn into the marketing ploy.

l and complicated numerical method run with relatively simple models and simple meshes. DNS uses vast amounts of computer power on leading-edge machines, but it uses no model at all beyond the governing equations, and it relies on very simple (albeit high-order) methods. As demands for credible simulations grow, we need to embrace complexity in several directions for progress to be made.

modeling and simulation. For the sophisticated and knowledgeable person, the computer is merely a tool, and the real product is the complete and assessed calculation tied to a full V&V pedigree.