Not trying or putting forth your best effort is.

Only those who dare to fail greatly can ever achieve greatly.

― Robert F. Kennedy

Last week I attended the ASME Verification and Validation Symposium, as I have for the last five years. One of the keynote talks ended with a discussion of the context of results in V&V. It used the following labels for an axis: Success (good) and Failure (bad). I took issue with it, and made a fairly controversial statement: failure is not negative or bad, and we should not keep referring to it as being negative. I might even go so far as to suggest that we actually encourage failure, or at the very least celebrate it, because failure also implies you’re trying. I would be much happier if the scale were related to effort and excellence of work. The greatest sin is not failing; it is not trying.

Keep trying; success is hard won through much failure.

― Ken Poirot

It is actually worse than simply being a problem that the best effort isn’t put forth; lack of acceptance of failure inhibits success. The outright acceptance of failure as a viable outcome of work is necessary for the sort of success one can have pride in. If nothing is risked enough to potentially fail, then nothing can be achieved. Today we have accepted the absence of failure as being the telltale sign of success. It is not. This connection is desperately unhealthy and leads to a diminishing return on effort. Potential failure, while an unpleasant prospect, is absolutely necessary for achievement. As such, failures that occur when best effort is put forth should be encouraged, and celebrated and lauded whenever possible. Instead we have a culture that crucifies those who fail without regard for the effort or excellence of the work going into it.

Right now we are suffering in many endeavors from a deep, unremitting fear of failure. The outright fear of failing and of the consequences of that failure is causing many efforts to reduce their aggressiveness in attacking their goals. We reset our goals downward to avoid any possibility of being regarded as failing. The result is an extensive reduction in achievement. We achieve less because we are so afraid of failing at anything. This is resulting in the destruction of careers and the squandering of vast sums of money. We are committing to mediocre work that is guaranteed “success” rather than attempting excellent work that could fail.

A person who never made a mistake, never tried anything new

― Albert Einstein

We have not recognized the extent to which failure energizes our ability to learn, and bootstrap ourselves to a greater level of achievement. Failure is perhaps the greatest means to teach us powerful lessons. Failure is a means to define our limits of understanding and knowledge. Failure is the fuel for discovery. Where we fail, we have work that needs to be done. We have mystery and challenge. Without failure we lose discovery, mystery, challenge and understanding. Our knowledge becomes stagnant and we cease learning. We should be embracing failure because failure leads to growth and achievement.

Instead today we recoil and run from failure. Failure has become such a massive black mark professionally that people simply will not associate themselves with something that isn’t a sure thing. The problem is that sure things aren’t research; they are developed science and technology. If one is engaged in research, one does not have certain results. The promise of discovery is also tinged with the possibility of failure. Without the possibility of failure, discovery is not possible. Without an outright tolerance for a failed result or idea, the discovery of something new and wonderful cannot be had. At a personal level the ability to learn, develop and master knowledge is driven by failure. The greatest and most compelling lessons in life are all driven by failures. With a failure you learn a lesson that sticks with you.

Creatives fail and the really good ones fail often.

― Steven Kotler

It is the inability to tolerate ambiguity in results that leads to much of the management response. Management is based on assuring results and defining success. Our modern management culture seems to be incapable of tolerating the prospect of failure. Of course the differences between kinds of failure are not readily supported by our systems today. There is a difference between an earnest effort that still fails and an incompetent effort that fails. One should be supported and celebrated, and the other is the definition of unsuccessful. We have lost the capacity to tolerate these subtleties. All failure is viewed as the same, and management is intolerant of it. Managers require results that can be predicted, and failure undermines this central tenet of management.

The end result of all of this failure avoidance is a generically misplaced sense of what constitutes achievement. More deeply we are losing the capacity to fully understand how to structure work so that things of consequence may be achieved. In the process we are wasting money, careers and lives in the pursuit of hollowed out victories. The lack of failure is now celebrated even though the level of success and achievement is a mere shadow of the sorts of success we saw a mere generation ago. We have become so completely under the spell of avoidance of scandal that we shy away from doing anything bold or visionary.

A Transportation Security Administration (TSA) officer pats down Elliott Erwitt as he works his way through security at San Francisco International Airport in San Francisco, Wednesday, Nov. 24, 2010. (AP Photo/Jeff Chiu)

We live in an age where the system cannot tolerate a single bad event (e.g., a failure, whether of an engineered system, a security system, …). In the real world failures are utterly and completely unavoidable. There is a price to be paid for reducing bad events, and one can never have an absolute guarantee. The cost of reducing the probability of bad events escalates rather dramatically as you look to reduce the tail probabilities beyond a certain point. The mass media and demagoguery by politicians take any bad event and stoke fears, using the public’s response as a tool for their own power and purposes. We are shamelessly manipulated to be terrified of things that have always been one-off minor risks to our lives. Our legal system does its dead-level best to amp up all of this fearful behavior in its own selfish interest of siphoning as much money as possible from whoever has the misfortune of tapping into the tails of extreme events.

In the area of security, the lack of tolerance for bad events is immense. More than this, the pervasive security apparatus produces a side effect that greatly empowers things like terrorism. Terror’s greatest weapon is not high explosives but fear, and we go out of our way to do terrorists’ jobs for them. Instead of tamping down fears, our government and politicians go out of their way to scare the shit out of the public. This allows them to gain power and fund more activities to answer the security concerns of the scared shitless public. The best way to get rid of terror is to stop getting scared. The greatest weapon against terror is bravery, not bombs. A fearless public cannot be terrorized.

The end result of all of this risk intolerance is a lack of achievement by individuals, organizations, and society itself. Without the acceptance of failure, we relegate ourselves to a complete lack of achievement. Without the ability to risk greatly we lack the ability to achieve greatly. Risk, danger and failure all improve our lives in every respect. The dial is turned too far away from accepting risk to allow us to be part of progress. All of us will live poorer lives with less knowledge, achievement and experience because of the attitudes that exist today. The deeper issue is that the lack of appetite for obvious risks and failure actually kicks the door open for even greater risks and more massive failures in the long run. These sorts of outcomes may already be upon us in terms of massive opportunity cost. Terrorism is something that has cost our society vast sums of money and undermined the full breadth of society. We should have had astronauts on Mars already, yet the reality of this is decades away, so our societal achievement is actually deeply pathetic. The gap between “what could be” and “what is” has grown into a yawning chasm. Somebody needs to lead with bravery and pragmatically take the leap over the edge to fill it.

We have nothing to fear but fear itself.

– Franklin D. Roosevelt.

In the constellation of numerical analysis theorems the Lax equivalence theorem may have no equal in its importance. It is simple, and its impact on the whole business of numerical approximation is profound. The theorem basically says that if you provide a consistent approximation to the differential equations of interest and it is stable, the solution will converge. The devil of course is in the details. Consistency is defined by having an approximation with ordered errors in the mesh or time discretization, which implies that the approximation is at least first-order accurate, if not better. A key aspect of this that is overlooked is the necessity of a mesh spacing sufficiently small to achieve the defined error; failure to provide it renders the solution erratically convergent at best.
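To make the consistency-plus-stability statement concrete, here is a minimal sketch of a mesh refinement study. The test problem (linear advection of a sine wave on a periodic domain), the first-order upwind scheme, and the names `upwind_error` and `observed_orders` are all assumptions chosen for illustration; none of them come from the discussion above. With a CFL number at or below one the scheme is stable, and the measured convergence rate should approach the first order that its consistency promises.

```python
import numpy as np

def upwind_error(n_cells, cfl=0.5, t_final=1.0, speed=1.0):
    """L1 error of first-order upwind for u_t + speed * u_x = 0, periodic on [0, 1)."""
    dx = 1.0 / n_cells
    x = (np.arange(n_cells) + 0.5) * dx          # cell centers
    # Pick a whole number of steps so the run ends exactly at t_final.
    n_steps = int(np.ceil(speed * t_final / (cfl * dx)))
    dt = t_final / n_steps
    nu = speed * dt / dx                         # actual CFL number (<= cfl)
    u = np.sin(2.0 * np.pi * x)                  # smooth initial data
    for _ in range(n_steps):
        # Upwind difference for speed > 0; np.roll supplies the periodic neighbor.
        u = u - nu * (u - np.roll(u, 1))
    exact = np.sin(2.0 * np.pi * (x - speed * t_final))
    return dx * np.sum(np.abs(u - exact))

def observed_orders(errors):
    """Convergence rates between successive grids refined by a factor of two."""
    errors = np.asarray(errors, dtype=float)
    return np.log2(errors[:-1] / errors[1:])

grids = [50, 100, 200, 400]
errors = [upwind_error(n) for n in grids]
for n, e in zip(grids, errors):
    print(f"N = {n:4d}   L1 error = {e:.3e}")
print("observed orders:", observed_orders(errors))
```

Calling `upwind_error(400, cfl=1.5)` violates the stability limit of this particular scheme, and the error grows without bound instead of shrinking, which is the other half of the theorem: consistency alone does not deliver convergence.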

Stability then becomes the issue: you must assure that the approximations produce bounded results under the appropriate control of the solution. Usually stability is defined as a characteristic of the time stepping approach and requires that the time step be sufficiently small. A lesser-known equivalence theorem is due to Dahlquist and applies to multistep methods for integrating ordinary differential equations. From this work the whole notion of zero stability arises, where you have to assure that the method is stable in the limit of vanishing time step size in the first place. More deeply, Dahlquist’s version of the equivalence theorem applies to nonlinear equations but is limited to multistep methods, whereas Lax’s applies to linear equations.
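Zero stability of a linear multistep method is usually checked through the root condition on its first characteristic polynomial: every root must lie in the closed unit disk, and any root on the unit circle must be simple. The sketch below is one hedged way to test that numerically; the function name `is_zero_stable`, the tolerance, and the example methods are illustrative assumptions, and the floating-point root finding makes this a screening tool rather than a proof.

```python
import numpy as np

def is_zero_stable(alpha, tol=1e-6):
    """Check the root condition for rho(z) = alpha[0]*z^k + ... + alpha[k].

    alpha lists the coefficients multiplying y_{n+k}, ..., y_n, highest
    index first. tol absorbs round-off in the computed roots.
    """
    roots = np.roots(alpha)
    for i, r in enumerate(roots):
        modulus = abs(r)
        if modulus > 1.0 + tol:
            return False                      # root outside the unit disk
        if abs(modulus - 1.0) <= tol:
            others = np.delete(roots, i)
            if np.any(np.abs(others - r) <= tol):
                return False                  # repeated root on the unit circle
    return True

methods = {
    # Adams-Bashforth 2: y_{n+2} - y_{n+1} = h * (...)
    "Adams-Bashforth 2": [1.0, -1.0, 0.0],
    # BDF2: y_{n+2} - (4/3) y_{n+1} + (1/3) y_n = h * (...)
    "BDF2": [1.0, -4.0 / 3.0, 1.0 / 3.0],
    # Consistent explicit two-step method
    # y_{n+2} + 4 y_{n+1} - 5 y_n = h * (4 f_{n+1} + 2 f_n)
    "two-step, roots 1 and -5": [1.0, 4.0, -5.0],
}
for name, alpha in methods.items():
    print(f"{name}: zero-stable = {is_zero_stable(alpha)}")
```

The third method is consistent, yet its parasitic root at -5 means errors are amplified at every step no matter how small the step size, so it cannot converge.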

Although the theorem doesn’t formally apply to the nonlinear case, the guidance is remarkably powerful and appropriate. We have a simple and limited theorem that produces incredible consequences for any approximation methodology that we are applying to partial differential equations. Moreover, the whole thing was derived in the early 1950s and generally thought through even earlier. The theorem came to pass because we knew that approximations to PDEs and their solution on computers do work. Dahlquist’s work is founded on a similar path; the availability of computers shows us what the possibilities are and the issues that must be elucidated. We do see a virtuous cycle where the availability of computing capability spurs on developments in theory. This is an important aspect of healthy science, where different aspects of a given field push and pull each other. Today we are counting on hardware advances to push the field forward. We should be careful that our focus is set where advances are ripe; it’s my opinion that hardware isn’t it.

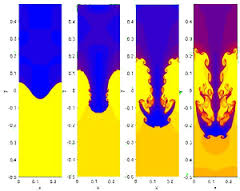

One of the very large topics in V&V that is generally overlooked is models and their range of validity. All models are limited in terms of their range of applicability based on time and length scales. For some phenomena this is relatively obvious, e.g., multiphase flow. For other phenomena the range of applicability is much more subtle. Among the first important topics to examine is the satisfaction of the continuum hypothesis, the capacity of a homogenization or averaging to be representative. The degree to which homogenization is satisfied depends on the scale of the problem and degrades as the phenomenon becomes smaller in scale. For multiphase flow this is obvious, as the example of bubbly flow shows. As the number of bubbles becomes smaller, any averaging becomes highly problematic. This argues that the models should be modified in some fashion to account for the change in scale size.

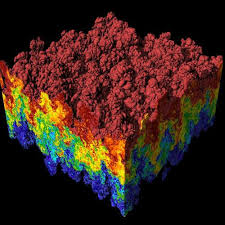

Another, more pernicious and difficult, issue is homogenization assumptions that are not so fundamental. Consider the situation where a solid is being modeled in a continuum fashion. When the mesh is very large, the solid composed of discrete grains can be modeled by averaging over these grains because there are so many of them. Over time we are able to solve problems with smaller and smaller mesh scales. Ultimately we now solve problems where the mesh size approaches the grain size. Clearly under this circumstance the homogenization used for averaging will lose its validity. The structural variations removed by the homogenized equations become substantial and should not be ignored as the mesh size becomes small. In the quest for exascale computing this issue is completely and utterly ignored. Some areas of study for high performance computing consider these issues carefully, most notably climate and weather modeling, where the mesh size issues are rather glaring. I would note that these fields are subjected to continual and rather public validation.
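A toy calculation shows why averaging over grains stops being representative as the mesh approaches the grain size. Everything in this sketch is an assumption made for illustration: the grain property is drawn independently from a uniform distribution on [0.5, 1.5] in arbitrary units, each mesh cell holds a fixed number of grains, and `cell_average_scatter` is a hypothetical helper, not a model of any real material.

```python
import numpy as np

def cell_average_scatter(grains_per_cell, n_cells=2000, seed=0):
    """Relative cell-to-cell scatter of a grain-averaged property.

    Each cell averages `grains_per_cell` independent grain values
    (a purely illustrative stand-in for a homogenized coefficient).
    """
    rng = np.random.default_rng(seed)
    grains = rng.uniform(0.5, 1.5, size=(n_cells, grains_per_cell))
    cell_means = grains.mean(axis=1)          # homogenized value per cell
    return cell_means.std() / cell_means.mean()

for n in (1000, 100, 10, 1):
    print(f"{n:5d} grains per cell -> relative scatter "
          f"{cell_average_scatter(n):.4f}")
```

For independent grains the scatter grows roughly like one over the square root of the number of grains per cell, so a homogenized coefficient that is effectively deterministic on a coarse mesh becomes a strongly fluctuating quantity once a cell contains only a handful of grains.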

The theorem is applied to the convergence of the model’s solution in the limit where the “mesh” spacing goes to zero. Models are always limited in their applicability as a function of length and time scale. Applying the equivalence theorem will take many models outside their true range of applicability. An important thing to wrangle in the grand scheme of things is whether models are being solved, and are convergent, in the actual range of scales where they are applicable. A true tragedy would be a model that is only accurate and convergent in regimes where it is not applicable. This may actually be so in many cases, most notably the aforementioned multiphase flow. This calls into question the nature of the modeling and the numerical methods used to solve the equations.

WTF has become the catchphrase for today’s world. “What the fuck” moments fill our days and nothing is more WTF than Donald Trump. We will be examining the viability of the reality show star and general douchebag celebrity-rich guy as a presidential candidate for decades to come. Some view his success as a candidate apocalyptically, or characterize it as an “extinction level event” politically. In the current light it would seem to be a stunning indictment of our modern society. How could this happen? How could we have come to this moment as a nation, where such a completely insane outcome is a reasonably high probability outcome of our political process? What else does it say about us as a people? WTF? What in the actual fuck!

The phrase “what the fuck” came into the popular lexicon along with Tom Cruise in the movie “Risky Business” back in 1983. There the lead character played by Tom Cruise exclaims, “sometimes you gotta say, what the fuck.” It was a mantra for just going for broke and trying stuff without obvious regard for the consequences. Given our general lack of an appetite for risk and failure, the other side of the coin took the phrase over. Over time the phrase has morphed into a general commentary about things that are generally unbelievable. Accordingly the acronym WTF came into being by 1985. I hope that 2016 is peak-what the fuck, ’cause things can’t get much more what the fuck without everything turning into a complete shitshow. Going to a deeper view of things, the real story behind where we are is the victory of bullshit as a narrative element in society. In a sense WTF has transmogrified from a mantra for risk taking into a mantra for the breadth of impact of not taking risks!

It is the general victory of showmanship and entertainment. The superficial and bombastic rule the day. I think that Trump is committing one of the greatest frauds of all time. He is completely and utterly unfit for office, yet has a reasonable (or perhaps unreasonable) chance to win the election. The fraud is being committed in plain sight, and he speaks falsehoods at a marvelously high rate without any of the normal ill effects. Trump’s victory is testimony to how gullible the public is to complete bullshit. This gullibility reflects the lack of will on the part of the public to address real issues. With the sort of “leadership” that Trump represents, the ability to address real problems will further erode. The big irony is that Trump’s mantra of “Make America Great Again” is the direct opposite of the impact of his message. Trump’s sort of leadership destroys the capacity of the Nation to solve the sort of problems that lead to actual greatness. He is hastening the decline of the United States by choking our will to act in a tidal wave of bullshit.

There is a lot more bullshit in society than just Trump; he is just the most obvious example right now. Those who master bullshit win the day today, and it drives the depth of the WTF moments. Fundamentally there are forces in society today that are driving us toward the sorts of outcomes that cause us to think, “WTF?” For example, we are prioritizing a high degree of micromanagement over achievement due to the risks associated with giving people freedom. Freedom encourages achievement, but also carries the risk of scandal when people abuse their freedom. Without the risks you cannot have the achievements. Today the priority is no scandal, and accomplishment simply isn’t important enough to empower anyone. We are developing systems of management that serve to disempower people so that they don’t do anything unpredictable (like achieve something!).

I wonder deeply about the extent to which things like the Internet play into this dynamic. Does the Internet allow bullshit to be presented with equality to bona fide facts? Do the Internet and computers allow a degree of micromanagement that strangles achievement? Does the Internet produce new patterns in society that we don’t understand, much less have the capacity to manage? What is the value of information if it can’t be managed or understood in any way that is beyond superficial? The real danger is that people will gravitate toward what they want to view as facts instead of confronting issues that are unpleasant. The danger seems to be playing out in the political events in the United States and beyond.

happening faster and it is lubricating changes to take effect at a high pace. On the one hand we have an incredible ability to communicate with people that is beyond the capacity to even imagine a generation ago. The same communication mechanisms produce a deluge of information in which we are drowning, with input to a degree that is choking people’s capacity to process what they are being fed. What good is the information if the people receiving it are unable to comprehend it sufficiently to take action, or if people are unable to distinguish the proper actionable information from the complete garbage?

All of these forces are increasingly driving the elites in society (and increasingly the elites are simply those who have a modicum of education) to look at events and say “WTF?” I mean, what the actual fuck is going on? The movie Idiocracy was set 500 years in the future, and yet we seem to be moving toward that vision of the future at an accelerated pace that makes anyone educated enough to see what is happening tremble with fear. The sort of complete societal shitshow in the movie seems to be unfolding in front of our very eyes today. The mind-numbing effects of reality show television and pervasive low-brow entertainment are spreading like a plague. Donald Trump is the most obvious evidence of how bad things have gotten.

All of these forces are increasingly driving the elites in society (and increasingly the elites are simply those who have a modicum of education) to look at events and say “WTF?” I mean, what the actual fuck is going on? The movie Idiocracy was set 500 years in the future, and yet we seem to be moving toward that vision of the future at an accelerated pace that makes anyone educated enough to see what is happening tremble with fear. The sort of complete societal shitshow in the movie seems to be unfolding in front of our very eyes today. The mind-numbing effect of reality television and pervasive low-brow entertainment is spreading like a plague. Donald Trump is the most obvious evidence of how bad things have gotten.

The sort of terrible outcomes we see so obviously in our broken political discourse are happening across society. The scientific world I work in is no different. The low-brow and superficial are dominating the dialog. Our programs are dominated by strangling micromanagement that operates in the name of accountability, but really speaks volumes about the lack of trust. Furthermore, the low-brow dialog simply reflects the societal desire to eliminate the technical elites from the process. This also connects back to the micromanagement because the elites can’t be trusted either. It’s become better to speak to the uneducated common man who you can “have a beer with” than trust the gibberish coming from an elite. As a result, complete bullshit now passes for technical achievement. When the people in charge can’t distinguish between Nobel Prize-winning work and complete pseudo-science, the low bar wins out. Those of us who know better are left with nothing to do but say “What the fuck?” over and over again.

Effectively we are creating an ecosystem where the apex predators are missing, and this isn’t a good thing. The models we use in science are the key to everything. They are the translation of our understanding into mathematics that we can solve and manipulate to explore our collective reality. Computers allow us to solve much more elaborate models than otherwise possible, but little else. The core of the value in scientific computing is the capacity of the models to explain and examine the physical world we live in. They are the “apex predators” in the scientific computing system, and taking this analogy further, our models are becoming virtual dinosaurs where evolution has ceased to take place. The models in our codes are becoming a set of fossilized skeletons, not at all alive, evolving and growing.

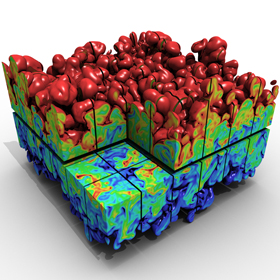

People do not seem to understand that faulty models render the entirety of the computing exercise moot. Yes, the computational results may be rendered into exciting and eye-catching pictures suitable for entertaining and enchanting various non-experts including congressmen, generals, business leaders and the general public. These eye-catching pictures are getting better all the time and now form the basis of a lot of the special effects in the movies. All of this does nothing for how well the models capture reality. The deepest truth is that no amount of computer power, numerical accuracy, mesh refinement, or computational speed can rescue a model that is incorrect. The entire process of validation against observations made in reality must be applied to determine if models are correct. HPC does little to solve this problem. If the validation provides evidence that the model is wrong and a more complex model is needed, then HPC can provide a tool to solve it.

seamlessly to produce incredible things. We have immensely complex machines that produce important outcomes in the real world through a set of interwoven systems that translate electrical signals into instructions understood by the computer and humans, into discrete equations, solved by mathematical procedures that describe the real world and ultimately compared with measured quantities in systems we care about. If we look at our focus today, it falls on the part of the technology that connects very elaborate, complex computers to the instructions understood both by computers and people. This is electrical engineering and computer science. The focus begins to dampen in the part of the system where the mathematics, physics and reality come in. These activities form the bond between the computer and reality. These activities are not a priority, and are conspicuously diminished by today’s HPC.

HPC today is structured in a manner to eviscerate fields that have been essential to the success of scientific computing. A good example is our applied mathematics programs. In many cases applied mathematics has become little more than scientific programming and code development. Far too little actual mathematics is happening today, and far too much focus is placed on productizing mathematics in software. Many people with training in applied mathematics only do software development today and spend little or no effort in doing analysis and development away from their keyboards. It isn’t that software development isn’t important; the issue is the lack of balance in the overall ratio of mathematics to software. The power and beauty of applied mathematics must be harnessed to achieve success in modeling and simulation. Today we are simply bypassing this essential part of the problem to focus on delivering software products.

To avoid the sort of implicit assumption of ZERO uncertainty, one can use (expert) judgment to fill in the information gap. This can be accomplished in a distinctly principled fashion and always works better with a basis in evidence. The key is the recognition that we base our uncertainty on a model (a model that is associated with error too). The models are fairly standard and need a certain minimum amount of information to be solvable, and we are always better off with too much information, making the problem effectively over-determined. Here we look at several forms of models that lead to uncertainty estimation, including discretization error models and statistical models applicable to epistemic or experimental uncertainty.

meshes to solve the error model exactly or more if we solve it in some sort of optimal manner. We recently had a method published that discusses how to include expert judgment in the determination of numerical error and uncertainty using models of this type. This model can be solved along with data using minimization techniques, including the expert judgment as constraints on the solution for the unknowns. For both the over- and under-determined cases, different minimizations can yield multiple solutions to the model, and robust statistical techniques may be used to find the “best” answers. This means that one needs to resort to more than simple curve fitting and least squares procedures; one needs to solve a nonlinear problem associated with minimizing the fitting error (i.e., residuals) with respect to other error representations.

ables can be completely eliminated by simply choosing the solution based on expert judgment. For numerical error an obvious example is assuming that calculations are converging at an expert-defined rate. Of course the rate assumed needs an adequate justification based on a combination of information associated with the nature of the numerical method and the solution to the problem. A key assumption that often does not hold up is the achievement of the method’s theoretical rate of convergence for realistic problems. In many cases a high-order method will perform at a lower rate of convergence because the problem has a structure with less regularity than necessary for the high-order accuracy. Problems with shocks or other forms of discontinuities will not usually support high-order results, and a good operating assumption is a first-order convergence rate.
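To make the idea of an expert-defined convergence rate concrete, here is a minimal sketch. It assumes the common power-law error model S_h = S_0 + C h^p, which is an assumption on my part and not necessarily the exact model of the published method; the function name and the sample numbers are purely illustrative. With the rate p supplied by judgment, two meshes are enough to solve for the remaining unknowns.

```python
def extrapolate_with_assumed_rate(coarse, fine, refinement_ratio, rate):
    """Two-mesh estimate under the assumed model S_h = S_0 + C * h**rate.

    coarse, fine      : computed values on the coarse and fine meshes
    refinement_ratio  : h_coarse / h_fine (must exceed 1)
    rate              : convergence rate chosen by expert judgment
    Returns (extrapolated value S_0, magnitude of the fine-mesh error).
    """
    if refinement_ratio <= 1.0:
        raise ValueError("refinement_ratio must be greater than 1")
    if rate <= 0.0:
        raise ValueError("rate must be positive")
    # Subtracting the model on the two meshes gives
    #   coarse - fine = C * h_fine**rate * (r**rate - 1),
    # and C * h_fine**rate is the fine-mesh error (fine - S_0).
    factor = refinement_ratio ** rate - 1.0
    correction = (fine - coarse) / factor  # equals S_0 - fine
    return fine + correction, abs(correction)


# Illustrative numbers only: the same two results read under two assumed rates.
s0_first, err_first = extrapolate_with_assumed_rate(1.20, 1.10, 2.0, 1.0)
s0_second, err_second = extrapolate_with_assumed_rate(1.20, 1.10, 2.0, 2.0)
```

For the same pair of results, assuming first-order convergence yields a larger error estimate than assuming second-order, which is why first order is the safer default when shocks or other discontinuities are present.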

To make things concrete let’s tackle a couple of examples of how all of this might work. In the paper published recently we looked at solution verification when people use two meshes instead of the three needed to fully determine the error model. This seems kind of extreme, but in this post the example is the case where people only use a single mesh. Seemingly we can do nothing at all to estimate uncertainty, but as I explained last week, this is the time to bear down and include an uncertainty because it is the most uncertain situation, and the most important time to assess it. Instead people throw up their hands and do nothing at all, which is the worst thing to do. So we have a single solution

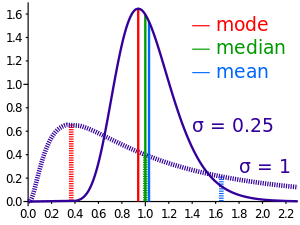

A lot of this information is probably good to include as part of the analysis even when you have enough information. The right way to think about this information is as constraints on the solution. If the constraints are active they have been triggered by the analysis and help determine the solution. If the constraints have no effect on the solution then they are proven to be correct given the data. In this way the solution can be shown to be consistent with the views of the experts. If one is in the circumstance where the expert judgment is completely determining the solution, one should be very wary, as this is a big red flag.
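The notion of active and inactive constraints can be sketched in a few lines. This is only an illustration under stated assumptions: the error model is again taken to be S_h = S_0 + C h^p, the expert judgment enters as hypothetical bounds on the rate p, and the minimization is a plain scan over p with a linear least squares solve for S_0 and C at each trial value. It is not the robust procedure of the published method.

```python
def fit_error_model(mesh_sizes, solutions, rate_bounds, steps=400):
    """Fit S_h = S_0 + C * h**p with p confined to expert-supplied bounds.

    For a fixed p the model is linear in (S_0, C), so those come from
    ordinary least squares; p is picked by scanning the allowed interval.
    Returns (p, S_0, C, constraint_active).
    """
    lo, hi = rate_bounds
    n = len(mesh_sizes)
    y_mean = sum(solutions) / n
    best = None
    for i in range(steps + 1):
        p = lo + (hi - lo) * i / steps
        x = [h ** p for h in mesh_sizes]
        x_mean = sum(x) / n
        sxx = sum((xi - x_mean) ** 2 for xi in x)
        sxy = sum((xi - x_mean) * (yi - y_mean) for xi, yi in zip(x, solutions))
        c = sxy / sxx
        s0 = y_mean - c * x_mean
        residual = sum((s0 + c * xi - yi) ** 2 for xi, yi in zip(x, solutions))
        if best is None or residual < best[0]:
            best = (residual, i, p, s0, c)
    _, index, p, s0, c = best
    # The constraint is active when the best rate sits on a bound.
    return p, s0, c, index in (0, steps)


# Made-up data that converge at first order toward 1.0.
h = [0.4, 0.2, 0.1]
s = [1.2, 1.1, 1.05]
loose = fit_error_model(h, s, rate_bounds=(0.5, 2.0))  # bounds do not bind
tight = fit_error_model(h, s, rate_bounds=(1.5, 2.0))  # lower bound binds
```

With the loose bounds the data pick the rate on their own and the judgment is merely confirmed. With the tight bounds the answer is pinned to the expert's lower limit, which is exactly the situation that deserves a second look.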

standard modeling assumption is the use of the normal or Gaussian distribution as the starting assumption. This is almost always chosen as a default. A reasonable blog post title would be “The default probability distribution is always Gaussian”. A good thing for a distribution is that we can start to assess it beginning with two data points. A bad and common situation is that we only have a single data point. Thus uncertainty estimation is impossible without adding information from somewhere, and an expert judgment is the obvious place to look. With statistical data and its quality we can apply the standard error estimation using the sample size to scale the additional uncertainty driven by poor sampling,
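As a small illustration of scaling uncertainty by sample size, the sketch below uses the textbook standard error of the mean, s / sqrt(n), and falls back on an expert-supplied spread when only one data point exists. The function name, the `expert_sigma` parameter, and the sample values are hypothetical, and the fallback rule is just one simple way to encode the judgment.

```python
import math
import statistics


def mean_with_uncertainty(samples, expert_sigma=None):
    """Return (mean, uncertainty of the mean) for a list of measurements.

    With two or more samples the uncertainty is the standard error
    s / sqrt(n). With a single sample no spread can be computed, so an
    expert-supplied sigma is required instead of silently reporting zero.
    """
    n = len(samples)
    if n == 0:
        raise ValueError("at least one sample is required")
    mean = statistics.fmean(samples)
    if n == 1:
        if expert_sigma is None:
            raise ValueError("a single sample needs an expert-supplied sigma")
        return mean, expert_sigma
    return mean, statistics.stdev(samples) / math.sqrt(n)


# Illustrative values only.
several = mean_with_uncertainty([9.8, 10.1, 10.4, 9.9])
single = mean_with_uncertainty([10.0], expert_sigma=0.5)
```

Refusing to return a value for a lone sample without an explicit spread is a deliberate choice here: it makes the ZERO-uncertainty default impossible to reach by accident.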

For statistical models eventually one might resort to using a Bayesian method to encode the expert judgment in defining a prior distribution. In general terms this might seem to be an absolutely key approach to structure the expert judgment where statistical modeling is called for. The basic form of Bayes’ theorem is

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)}$$

where $P(A)$ is the prior, which is where the expert judgment enters, and $P(A \mid B)$ is the posterior once the data $B$ are taken into account.
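One simple way to see a prior carrying expert judgment is the conjugate Gaussian case, sketched below. The assumptions are loud ones: the expert view is encoded as a normal prior on the unknown mean, the measurement noise is Gaussian with a known standard deviation, and all names and numbers are illustrative. Real problems rarely fit this mold so neatly.

```python
import math


def update_gaussian_prior(prior_mean, prior_sigma, data, noise_sigma):
    """Posterior (mean, sigma) for an unknown mean with a Gaussian prior.

    Assumes data are independent draws with known Gaussian noise_sigma.
    Precisions (inverse variances) add, and the posterior mean is the
    precision-weighted average of the prior mean and the sample mean.
    """
    n = len(data)
    prior_precision = 1.0 / prior_sigma ** 2
    data_precision = n / noise_sigma ** 2
    post_precision = prior_precision + data_precision
    data_mean = sum(data) / n if n else 0.0
    post_mean = (prior_precision * prior_mean
                 + data_precision * data_mean) / post_precision
    return post_mean, math.sqrt(1.0 / post_precision)


# Illustrative numbers: an expert prior of 0 +/- 1 meets one measurement of 2.
posterior = update_gaussian_prior(0.0, 1.0, [2.0], noise_sigma=1.0)
# With no data at all, the expert prior is returned unchanged.
prior_only = update_gaussian_prior(0.0, 1.0, [], noise_sigma=1.0)
```

With no data the result is the prior itself, so the uncertainty never collapses to zero simply because nothing was measured.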

I have noticed that we tend to accept a phenomenally common and undeniably unfortunate practice where a failure to assess uncertainty means that the uncertainty reported (acknowledged, accepted) is identically ZERO. In other words if we do nothing at all, no work, no judgment, the work (modeling, simulation, experiment, test) is allowed to provide an uncertainty that is ZERO. This encourages scientists and engineers to continue to do nothing because this wildly optimistic assessment is a seeming benefit. If somebody does work to estimate the uncertainty, the degree of uncertainty always gets larger as a result. This practice is desperately harmful to the practice and progress of science, and it is incredibly common.

t shortcomings, a gullible or lazy community readily accepts the incomplete work. Some of the better work has uncertainties associated with it, but almost always with varying degrees of incompleteness. Of course one should acknowledge up front that uncertainty estimation is always incomplete, but the degree of incompleteness can be spellbindingly large.

within the expressed taxonomy for uncertainty. I’ll start with numerical uncertainty estimation, which is the uncertainty most commonly left completely unassessed. Far too often a single calculation is simply shown and used without any discussion. In slightly better cases, the calculation will be given with some comments on the sensitivity of the results to the mesh and the statement that numerical errors are negligible at the mesh given. Don’t buy it! This is usually complete bullshit! In every case where no quantitative uncertainty is explicitly provided, you should be suspicious. In other cases, unless the reasoning is stated as being expertise or experience, it should be questioned. If it is stated as being experiential, then the basis for this experience and its documentation should be given explicitly along with evidence that it is directly relevant.

estimation. Far too many calculational efforts provide a single calculation without any idea of the requisite uncertainties. In a nutshell, the philosophy in many cases is that the goal is to complete the best single calculation possible, and creating a calculation that is capable of being assessed is not a priority. In other words the value proposition for computation is a choice between the best single calculation without any idea of the uncertainty and a lower quality simulation with a well-defined assessment of uncertainty. Today the best single calculation is the default approach. This best single calculation then uses the default uncertainty estimate of exactly ZERO because nothing else is done. We need to adopt an attitude that will reject this approach because of the dangers associated with accepting a calculation without any quality assessment.

random uncertainty. This sort of uncertainty is clearly overlooked by our modeling approach in a way that most people fail to appreciate. Our models and governing equations are oriented toward solving for the average or mean solution for a given engineering or science problem. This key question is usually muddled in modeling by adopting an approach that mixes a specific experimental event with a model focused on the average. This results in a model that has an unclear separation of the general and specific. Few experiments or events being simulated are viewed in the context that they are simply a single instantiation of a distribution of possible outcomes. The distribution of possible outcomes is generally completely unknown and not even considered. This leads to an important source of systematic uncertainty that is completely ignored.

value in challenging our theory. Moreover the whole sequence of necessary activities like model development and analysis, method and algorithm development, along with experimental science and engineering, is receiving almost no attention today. These activities are absolutely necessary for modeling and simulation success along with the sort of systematic practices I’ve elaborated on in this post. Without a sea change in the attitude toward how modeling and simulation is practiced and what it depends upon, its promise as a technology will be stillborn and nullified by our collective hubris.

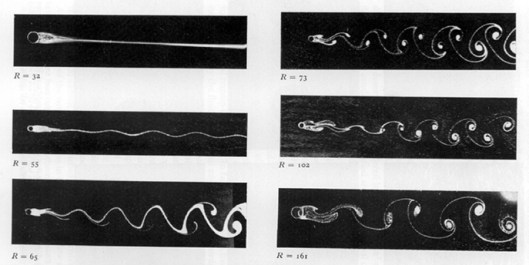

Fluid mechanics at its simplest is something called Stokes flow, basically motion so slow that it is solely governed by viscous forces. This is the asymptotic state where the Reynolds number (the ratio of inertial to viscous forces) is identically zero. It’s a bit oxymoronic as it is never reached; it’s the equations of motion without any motion, or where the motion can be ignored. In this limit flows preserve their basic symmetries to a very high degree.

The fundamental asymmetry in physics is the arrow of time, and its close association with entropy. The connection between asymmetry and entropy is quite clear and strong for shock waves, where the mathematical theory is well-developed and accepted. The simplest case to examine is Burgers’ equation,
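For readers who want the equation in front of them, here is one standard way to write Burgers’ equation. The viscous form and the symbols $u$, $\nu$, $u_L$ and $u_R$ are my choice of notation for this sketch, not necessarily the form originally displayed in this post:

$$\frac{\partial u}{\partial t} + u\,\frac{\partial u}{\partial x} = \nu\,\frac{\partial^2 u}{\partial x^2}$$

In the inviscid limit $\nu \to 0$ the solutions develop shocks, and the physically admissible ones are those with $u_L > u_R$ across the jump. Taking $u^2/2$ as the entropy with flux $u^3/3$, and using the shock speed $(u_L + u_R)/2$, a single shock dissipates that quantity at the rate

$$\frac{d}{dt}\int \frac{u^2}{2}\,dx = -\frac{(u_L - u_R)^3}{12}$$

which is negative exactly when the shock is admissible. The dissipation is fixed by the jump alone and does not depend on the value of the viscosity, and that is the asymmetry in time showing up in the simplest possible setting.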

On the one hand the form seems to be unavoidable dimensionally; on the other it is a profound result that provides the basis of the Clay prize for turbulence. It gets to the core of my belief that to a very large degree the understanding of turbulence will elude us as long as we use the intrinsically unphysical incompressible approximation. This may seem controversial, but incompressibility is an approximation to reality, not a fundamental relation. As such its utility is dependent upon the application. It is undeniably useful, but has limits, which are shamelessly exposed by turbulence. Without viscosity the equations governing incompressible flows are pathological in the extreme. Deep mathematical analysis has been unable to find singular solutions of the nature needed to explain turbulence in incompressible flows.

simulation is build the next generation of computers. The proposition is so shallow on the face of it as to be utterly laughable. Except no one is laughing; the programs are predicated on it. The whole mentality is damaging because it intrinsically limits our thinking about how to balance the various elements needed for progress. We see a lack of the sort of approach that can lead to progress, with experimental work starved of funding and focus and lacking the mathematical modeling effort necessary for utility. Actual applied mathematics has become a veritable endangered species only seen rarely in the wild.

structures and models for average behavior. One of the key things to really straighten out is the nature of the question we are asking the model to answer. If the question isn’t clearly articulated, the model will provide deceptive answers that will send scientists and engineers in the wrong direction. Getting this model-to-question dynamic sorted out is far more important to the success of modeling and simulation than any advance in computing power. It is also completely and utterly off the radar of the modern research agenda. I worry that the present focus will do damage to the forces of progress that may take decades to undo.

If there is any place where singularities are dealt with systematically and properly, it is fluid mechanics. Even in fluid mechanics there is a frighteningly large amount of missing territory, most acutely in turbulence. The place where things really work is shock waves, and we have some very bright people to thank for the order. We can calculate an immense range of physical phenomena where shock waves are important while ignoring a tremendous amount of detail. All that matters is for the calculation to provide the appropriate integral content of dissipation from the shock wave, and the calculation is wonderfully stable and physical. It is almost never necessary, and almost certainly wasteful, to compute the full gory details of a shock wave.
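To make the point about shock waves concrete, here is a minimal sketch, assuming the inviscid Burgers’ equation with flux u²/2 as the model problem and a first-order Godunov scheme as the shock-capturing method. Both are illustrative choices on my part, not necessarily what any production code uses, and the function names and parameters (`godunov_flux`, `simulate_shock`, the grid size, the step initial condition) are hypothetical. The grid is deliberately coarse, so the internal structure of the shock is not resolved at all.

```python
import numpy as np


def godunov_flux(ul, ur):
    """Exact Riemann (Godunov) flux for Burgers' flux f(u) = u**2 / 2."""
    return np.maximum(0.5 * np.maximum(ul, 0.0) ** 2,
                      0.5 * np.minimum(ur, 0.0) ** 2)


def simulate_shock(n_cells=40, length=2.0, x0=0.5, u_left=1.0,
                   cfl=0.5, n_steps=40):
    """Advect a step (u_left behind, 0 ahead) and return
    (estimated shock position, kinetic energy dissipated)."""
    dx = length / n_cells
    x = (np.arange(n_cells) + 0.5) * dx          # cell centers
    u = np.where(x < x0, u_left, 0.0)            # step initial condition
    dt = cfl * dx / u_left                       # CFL-limited time step
    energy0 = 0.5 * np.sum(u ** 2) * dx

    for _ in range(n_steps):
        ug = np.pad(u, 1, mode="edge")           # one ghost cell per side
        flux = godunov_flux(ug[:-1], ug[1:])     # n_cells + 1 interface fluxes
        u = u - dt / dx * (flux[1:] - flux[:-1]) # conservative update

    t_end = n_steps * dt
    # With u = 0 ahead of the shock, the conserved integral of u locates it.
    x_shock = np.sum(u) * dx / u_left
    # Energy budget: initial energy plus inflow of u**3 / 3 minus what is left.
    energy = 0.5 * np.sum(u ** 2) * dx
    dissipated = energy0 + t_end * u_left ** 3 / 3.0 - energy
    return x_shock, dissipated


if __name__ == "__main__":
    x_shock, dissipated = simulate_shock()
    print("shock position:", x_shock)
    print("energy dissipated:", dissipated, "analytic rate * t:", 1.0 / 12.0)
```

With these default values the Rankine-Hugoniot speed is (1 + 0)/2 = 0.5, so the exact shock sits at x = 1.0 after unit time, and the conservative scheme recovers that position even though the shock is smeared over a few cells. For this model the analytic dissipation rate at the shock is (u_L − u_R)³/12; the computed energy loss is at least that amount, with a small excess that comes from the smeared profile.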

y to progress. I believe the issue is the nature of the governing equations and a need to change this model away from incompressibility, which is a useful but unphysical approximation, not a fundamental physical law. In spite of all the problems, the state of affairs in turbulence is remarkably good compared with solid mechanics.

An example of lower mathematical maturity can be seen in the field of solid mechanics. In solids, the mathematical theory is stunted by comparison to fluids. A clear part of the issue is that the fathers of the field did not provide a clear path for combined analytical-numerical analysis, as happened in fluids. The result is a numerical background that is left completely adrift from the analytical structure of the equations. In essence the only option is to fully resolve everything in the governing equations. No structural and systematic explanation exists for the key singularities in materials, which is absolutely vital for computational utility. In a nutshell, the notion of the regularized singularity, so powerful in fluid mechanics, is foreign. This has a dramatically negative impact on the capacity of modeling and simulation to have a maximal impact.

Being thrust into a leadership position at work has been an eye-opening experience, to say the least. It makes crystal clear a whole host of issues that need to be solved. Being a problem-solver at heart, I’m searching for a root cause for all the problems that I see. One can see the symptoms all around: poor understanding, poor coordination, lack of communication, hidden agendas, ineffective vision, and intellectually vacuous goals. I’ve come more and more to the view that all of these things, evident as the day is long, are simply symptomatic of a core problem. The core problem is a lack of trust so broad and deep that it rots everything it touches.

on the whole of society leeches. They claim to be helping society even while they damage our future to feed their hunger. The deeper psychic wound is the feeling that everyone is so motivated, leading to a broad-based lack of trust of your fellow man.

In the overall execution of work, another aspect of the current environment can be characterized as the proprietary attitude. Information hiding and lack of communication seem to be a growing problem even as the capacity for transmitting information grows. Various legal or political concerns seem to outweigh the needs for efficiency, progress and transparency. Today people seem to know much less than they used to instead of more. People are narrower and more tactical in their work rather than broader and more strategic. We are encouraged to simply mind our own business rather than seek a broader view. The thing that really suffers in all of this is the opportunity to make progress for a better future.

The simplest thing to do is value the truth, value excellence, and cease rewarding the sort of trust-busting actions enumerated above. Instead of allowing slipshod work to be reported as excellence, we need to make strong value judgments about the quality of work, reward excellence, and punish incompetence. Truth and fact need to be valued above lies and spin. Bad information needs to be identified as such and eradicated without mercy. Many greedy, self-interested parties are strongly inclined to sow doubt and push lies and spin. The battle is for the nature of society. Do we want to live in a world of distrust and cynicism or one of truth and faith in one another? The balance today is firmly stuck at distrust and cynicism. The issue of excellence is rather pregnant. Today everyone is an expert and no one is an expert, with the general notion of expertise being highly suspect. The impact of such a milieu is absolutely damaging to the structure of society and the prospects for progress. We need to seed, reward and nurture excellence across society instead of doubting and demonizing it.

Ultimately we need to conscientiously drive for trust as a virtue in how we approach each other. A big part of trust is the need for truth in our communication. The sort of lying, spinning and bullshit in communication does nothing but undermine trust and empower low-quality work. We need to empower excellence through our actions rather than simply declare things to be excellent by definition and fiat. Failure is a necessary element in achievement and expertise. It must be encouraged. We should promote progress and quality as a necessary outcome across the broadest spectrum of work. Everything discussed above needs to be based in a definitive reality and have an actual basis in facts, instead of simply bullshitting about it or it only having “truthiness”. Not being able to see the evidence of reality in claims of excellence and quality simply amplifies the problems with trust, and risks devolving into a vicious cycle dragging us down instead of a virtuous cycle that lifts us up.

I chose the name the “Regularized Singularity” because it’s so important to the conduct of computational simulations of significance. For real-world computations, the nonlinearity of the models dictates that the formation of a singularity is almost a foregone conclusion. To remain well behaved and physical, the singularity must be regularized, which means the singular behavior is moderated into something computable. This is almost always accomplished with the application of a dissipative mechanism, and it effectively imposes the second law of thermodynamics on the solution.
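One way to see how a dissipative regularization imposes an entropy condition is a short worked derivation. The sketch below assumes viscous Burgers’ equation as the model, with a constant viscosity coefficient ν > 0 and the kinetic energy u²/2 playing the role of the entropy function; these are illustrative choices, not the only possible ones.

```latex
\begin{align}
  % Regularized (viscous) Burgers' equation
  \frac{\partial u}{\partial t}
    + \frac{\partial}{\partial x}\!\left(\frac{u^2}{2}\right)
    &= \nu \frac{\partial^2 u}{\partial x^2}, \\
  % Multiply by u and collect derivatives
  \frac{\partial}{\partial t}\!\left(\frac{u^2}{2}\right)
    + \frac{\partial}{\partial x}\!\left(\frac{u^3}{3}\right)
    &= \nu \frac{\partial^2}{\partial x^2}\!\left(\frac{u^2}{2}\right)
     - \nu \left(\frac{\partial u}{\partial x}\right)^{2}, \\
  % Limit of vanishing viscosity, in the sense of distributions
  \frac{\partial}{\partial t}\!\left(\frac{u^2}{2}\right)
    + \frac{\partial}{\partial x}\!\left(\frac{u^3}{3}\right)
    &\le 0 \qquad (\nu \to 0^{+}).
\end{align}
```

The last term in the second line is never positive, so the regularization can only remove energy. In the limit of vanishing viscosity the smooth parts of the solution conserve energy, while a shock dissipates it, which is the one-way behavior the second law demands.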